🧮 Anthropic now lets Claude run common work apps (like Slack, Figma, Box and Asana) inside chat, cutting tab switching.

Claude gets app control, Ant Group drops a robotics model, Nvidia targets weather, job market shifts to AI-savvy roles, Stanford flags sociopathic AIs, OpenAI ads go premium.

Read time: 7 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (27-Jan-2026):

🧮 Anthropic now lets Claude run common work apps (like Slack, Figma, Box and Asana) inside chat, cutting tab switching.

🇨🇳 China’s Ant Group just dropped LingBot-Depth, a new open model for embodied AI that solves a huge pain point in robotics.

💨 Nvidia unveils AI models for faster, cheaper weather forecasts.

📡 McKinsey finds the job market is suddenly asking for way more people who can use and manage AI tools.

🛠️ ⚠️ A Stanford paper finds that when you reward AI for success on social media, it becomes increasingly sociopathic

👨🔧 ChatGPT ads come with a premium. OpenAI is said to be charging around $60 CPM, or 3x Meta’s normal ad costs

🧮 Anthropic now lets Claude run common work apps (like Slack, Figma, Box and Asana) inside chat, cutting tab switching.

Before this update, Claude could suggest stuff like “here’s a Slack message” or “here’s an Asana task list,” but you still had to copy it, open Slack or Asana, paste it, and then send or save it yourself. Now Anthropic is letting Claude show parts of those apps right inside the chat, so you can do the real action in the same place, like drafting a Slack message, seeing how it will look, editing it, then posting it.

Interactive connectors launched for 9 tools, including Slack, Figma, Asana, Amplitude, and Hex, with Salesforce coming soon. They're available on paid Claude plans (Pro, Max, Team, Enterprise) on web and desktop, with no extra connector fees. Previously, Claude could connect to MCP servers, but users still had to open the app to preview, edit, and commit changes.

MCP Apps adds a UI description layer and a way to send user edits back as structured actions. An example workflow: Claude drafts a FigJam rollout plan with a gate that pauses if the error rate goes above 2%, checks an Amplitude split test where staging is ~40% above control, syncs Asana tasks, then drafts a Slack update for review.
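The two checks in that workflow are simple arithmetic. Here is a minimal sketch of the 2% error-rate gate and the "~40% above control" comparison; the names `RolloutStatus`, `should_pause`, and `relative_lift` are hypothetical illustrations, not part of MCP Apps, FigJam, or Amplitude.

```python
from dataclasses import dataclass

# Hypothetical helpers illustrating the workflow logic only; they are not
# MCP Apps, FigJam, or Amplitude APIs.
ERROR_RATE_GATE = 0.02  # pause the rollout if the error rate exceeds 2%


@dataclass
class RolloutStatus:
    requests: int
    errors: int


def should_pause(status: RolloutStatus, threshold: float = ERROR_RATE_GATE) -> bool:
    """True when the observed error rate is strictly above the gate threshold."""
    if status.requests == 0:
        return False  # no traffic yet, so there is nothing to judge
    return status.errors / status.requests > threshold


def relative_lift(variant: float, control: float) -> float:
    """Relative difference of a variant metric over control (0.4 means +40%)."""
    if control == 0:
        raise ValueError("control metric must be non-zero")
    return (variant - control) / control


print(should_pause(RolloutStatus(requests=1000, errors=25)))  # True (2.5% > 2%)
print(relative_lift(140, 100))  # 0.4
```

In the real feature, Claude would read these numbers through the connected apps; the point here is only what "gate" and "split test lift" mean numerically.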

Claude asks for consent before taking actions, and Team and Enterprise admins can limit which MCP servers can be used. Prompt injection (hidden instructions in untrusted content) is still a risk when agents can take real actions. Even so, the update should save a lot of time, reduce copy-paste mistakes, and make it easier for companies to build Claude into daily work.
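The two safeguards compose in a fixed order: the organization's allowlist first, then the user's explicit approval for each action. A minimal sketch, assuming hypothetical `Policy`, `Action`, and `run_action` names (this is not Anthropic's implementation):

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Policy:
    # MCP servers approved by a Team/Enterprise admin (hypothetical structure).
    allowed_servers: set[str] = field(default_factory=set)


@dataclass
class Action:
    server: str
    description: str


def run_action(action: Action, policy: Policy, ask_user: Callable[[str], bool]) -> str:
    # 1. Admin allowlist: refuse servers the organization has not approved.
    if action.server not in policy.allowed_servers:
        return "blocked: server not allowed"
    # 2. User consent: nothing with side effects runs without an explicit yes.
    if not ask_user(f"Allow {action.server} to: {action.description}?"):
        return "cancelled by user"
    return "executed"


policy = Policy(allowed_servers={"slack"})
print(run_action(Action("slack", "post release update"), policy, lambda q: True))  # executed
print(run_action(Action("box", "delete folder"), policy, lambda q: True))  # blocked: server not allowed
```

The consent prompt is where an injected instruction (say, "delete folder" smuggled in from a document) gets a chance to be caught, which is why it matters most once agents can act.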

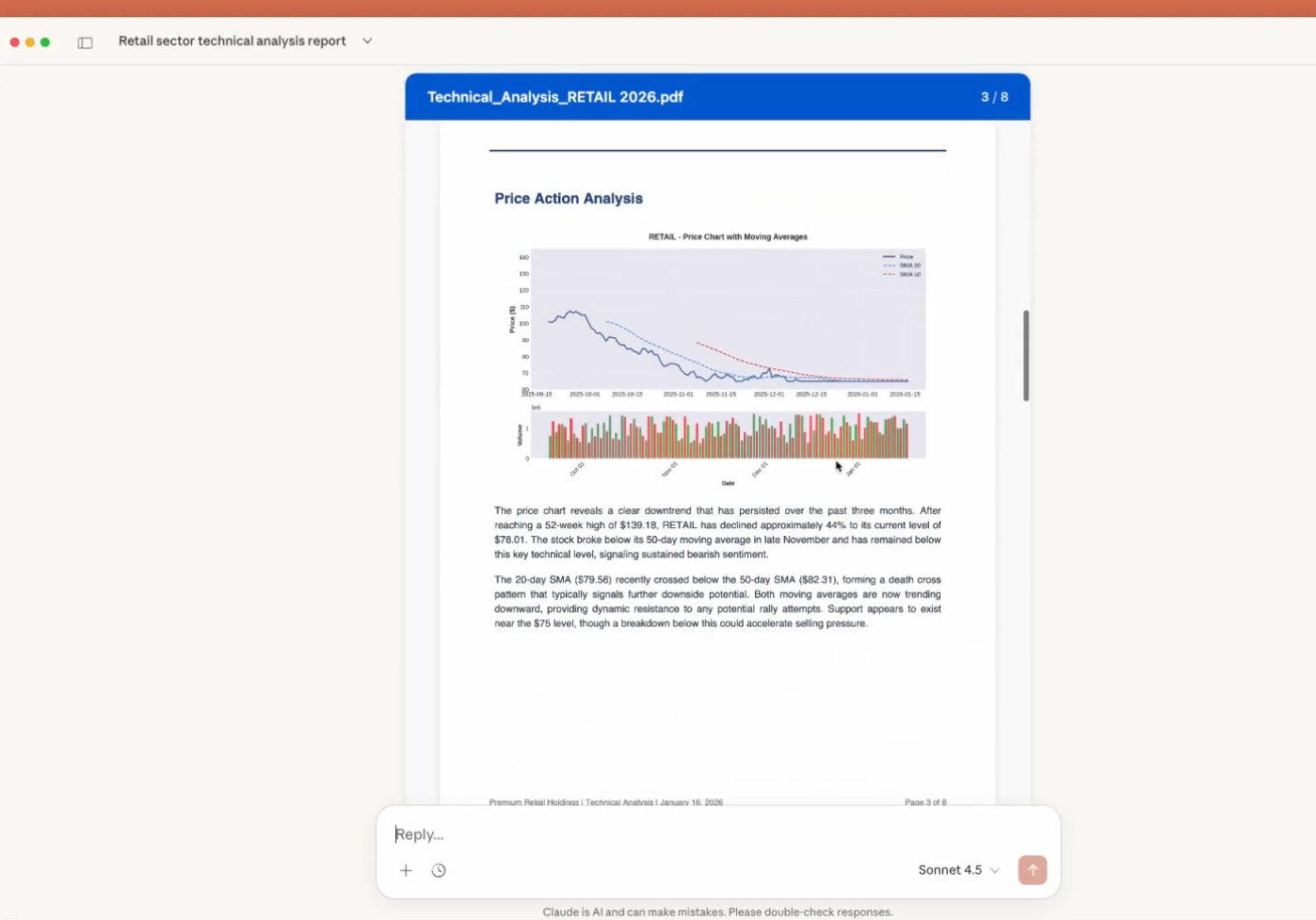

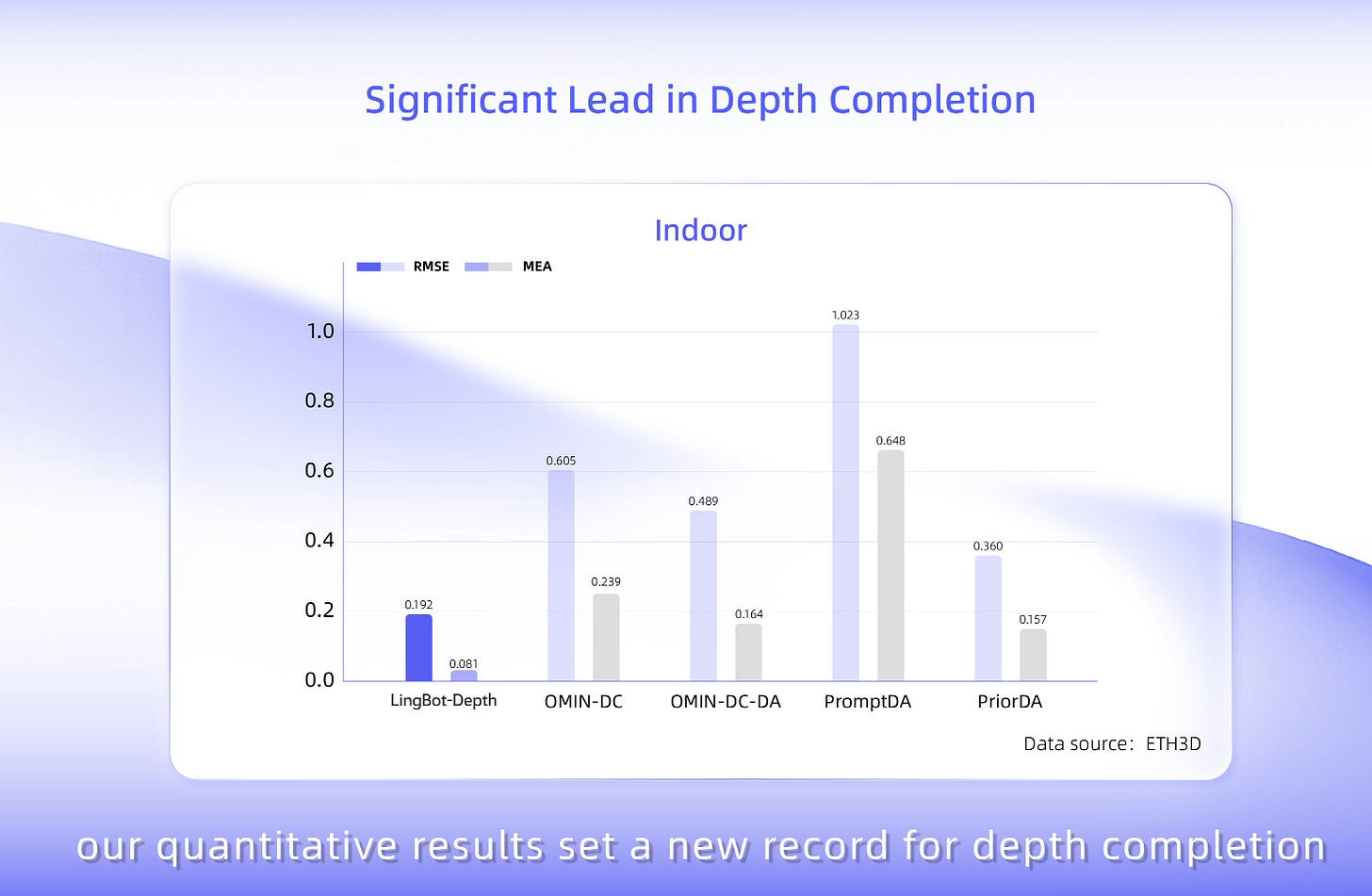

🇨🇳 China’s Ant Group just dropped LingBot-Depth, a new open model for embodied AI that solves a huge pain point in robotics.

The big deal with this release is that it's a brilliant software solution for a hardware pain point in robotics: a lot of robots already have RGB-D cameras but still fail on common household and factory objects. Here’s the Tech Report, and here’s the Hugging Face link.

LingBot-Depth cleans up the “distance map” a robot gets from a depth camera, so the robot can build a better 3D view of the world. In real life, existing depth cameras mess up a lot on shiny metal, glass, mirrors, and dark or blank surfaces, so parts of that 3D map become holes or wrong distances.

When a robot has holes in its 3D map, it is like trying to grab something while wearing glasses with missing patches: it can miss the object, hit it, or grab air. Most older fixes either ignore the bad regions or smear nearby values over the holes, which can distort the 3D shape.

LingBot-Depth learns to fill those holes using the normal color photo as a clue, plus whatever depth is still valid. During training, large parts of the depth image are hidden on purpose, forcing the model to rebuild them from the color photo plus the remaining depth.
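The training trick can be sketched as "mask, then score only what was hidden." A minimal pure-Python sketch, assuming square patches, a fixed mask ratio, and an L1 loss (all assumptions; the report's exact masking scheme and loss aren't described here). The RGB image that would be fed to the model alongside the masked depth, and the model itself, are omitted.

```python
import random

Depth = list[list[float]]  # 0.0 marks a missing/invalid depth reading


def mask_patches(depth: Depth, patch: int, ratio: float, rng: random.Random):
    """Zero out a random subset of patch x patch blocks; return masked copy and mask."""
    h, w = len(depth), len(depth[0])
    masked = [row[:] for row in depth]  # copy so the ground truth stays intact
    hidden = [[False] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            if rng.random() < ratio:
                for y in range(top, min(top + patch, h)):
                    for x in range(left, min(left + patch, w)):
                        masked[y][x] = 0.0
                        hidden[y][x] = True
    return masked, hidden


def masked_l1_loss(pred: Depth, target: Depth, hidden: list[list[bool]]) -> float:
    """Mean |pred - target| over pixels that were hidden AND had valid ground truth."""
    total, count = 0.0, 0
    for y, row in enumerate(target):
        for x, t in enumerate(row):
            if hidden[y][x] and t > 0.0:
                total += abs(pred[y][x] - t)
                count += 1
    return total / count if count else 0.0


rng = random.Random(0)
depth = [[1.0] * 8 for _ in range(8)]
masked, hidden = mask_patches(depth, patch=4, ratio=0.5, rng=rng)
# A real model would predict `pred` from (RGB, masked); here it is a constant guess.
pred = [[1.1] * 8 for _ in range(8)]
print(masked_l1_loss(pred, depth, hidden))
```

Scoring only hidden-but-valid pixels is what forces the network to infer depth from appearance instead of copying the input.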

That makes it learn a tight connection between what things look like in 2D and where they should sit in 3D. It is also built to keep metric scale, which means the numbers stay in real units instead of only looking “roughly right,” so grasping and measuring still work.
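Why metric scale matters for grasping and measuring: with a standard pinhole camera model, every 3D coordinate is proportional to depth, so a depth map that is off by a scale factor distorts every measured length by that same factor. A minimal sketch with hypothetical camera intrinsics:

```python
import math
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(u: float, v: float, z: float, k: Intrinsics) -> tuple[float, float, float]:
    """Pinhole back-projection of pixel (u, v) with depth z (meters) to camera coordinates."""
    x = (u - k.cx) * z / k.fx
    y = (v - k.cy) * z / k.fy
    return (x, y, z)


k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)  # hypothetical camera
for z in (1.0, 2.0):  # same pixel pair at the true depth, then with depth doubled
    a = backproject(320, 240, z, k)
    b = backproject(420, 240, z, k)
    print(z, round(math.dist(a, b), 3))
# 1.0 0.2
# 2.0 0.4
```

The same two pixels measure 0.2 m apart at the correct depth but 0.4 m if depth is doubled, which is exactly the kind of error that makes a gripper open to the wrong width.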

There are 2 released versions: a general depth refiner, and a depth-completion version that can recover dense depth even when less than 5% of pixels have valid depth.
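"Less than 5% of pixels have valid depth" is a statement about the valid-pixel fraction of the input map. A minimal sketch of that measurement, assuming holes are stored as 0 or NaN (a common convention, not necessarily LingBot-Depth's input format):

```python
import math


def valid_fraction(depth: list[list[float]]) -> float:
    """Share of pixels with a usable reading (finite and > 0); 0 marks a hole."""
    pixels = [v for row in depth for v in row]
    if not pixels:
        return 0.0
    valid = sum(1 for v in pixels if math.isfinite(v) and v > 0.0)
    return valid / len(pixels)


# Hypothetical 10x10 depth map with only two valid readings (meters).
depth = [[0.0] * 10 for _ in range(10)]
depth[0][0] = 1.2
depth[5][5] = 0.8
depth[9][9] = float("nan")  # sensor dropout, counted as invalid
print(valid_fraction(depth))  # 0.02, i.e. 2% valid
```

A map like this one, at 2% valid, is the regime where the completion version is reported to still recover dense depth.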

The model is released on Hugging Face by Robbyant, which is basically Ant Group’s embodied AI and robotics unit.

💨 Nvidia unveils AI models for faster, cheaper weather forecasts.

For weather forecasting, Nvidia wants to replace slow and expensive traditional simulations with AI-based models that it says can match or even beat their accuracy, while running faster and cheaper once trained. The release covers observation processing and data assimilation (turning raw measurements into initial atmospheric conditions), plus 15-day global forecasts and 0-to-6-hour storm nowcasting.

The following models are released under the Earth-2 model family.

“Earth-2 Medium Range” is the model that predicts general weather patterns up to 15 days ahead across 70+ variables, and “Atlas” is just the name of the model design used to build it.

“Earth-2 Nowcasting” is the model for very short-range forecasting, meaning 0-to-6-hour local storms at kilometer-scale detail, and “StormScope” is the model design used to do that fast.

“Earth-2 Global Data Assimilation” is the model that turns messy observations into a clean snapshot of the current atmosphere, and “HealDA” is the model design used to produce that snapshot quickly on GPUs.
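The division of labor is "assimilate once, then step forward repeatedly." A minimal toy sketch of that flow; the functions are hypothetical stand-ins, not Earth-2 APIs, and the autoregressive rollout (each step consuming the previous output) is an assumption about how such forecast models are typically run, not a detail confirmed for Atlas.

```python
from collections.abc import Callable, Sequence

State = dict[str, float]  # toy atmospheric state: variable name -> value


def assimilate(observations: Sequence[float]) -> State:
    # Stand-in for data assimilation: average noisy readings into one initial value.
    return {"t2m": sum(observations) / len(observations)}


def toy_step(state: State) -> State:
    # Stand-in for a learned forecast model: relax temperature halfway toward 15.0.
    return {"t2m": state["t2m"] + 0.5 * (15.0 - state["t2m"])}


def rollout(initial: State, step: Callable[[State], State], n_steps: int) -> list[State]:
    """Autoregressive rollout: each step consumes the previous step's output."""
    states = [initial]
    for _ in range(n_steps):
        states.append(step(states[-1]))
    return states


states = rollout(assimilate([19.0, 21.0, 20.0]), toy_step, n_steps=2)
print([s["t2m"] for s in states])  # [20.0, 17.5, 16.25]
```

If a model advanced in 6-hour steps (an assumption; the step size isn't given here), a 15-day forecast would be 60 such steps, which is why a fast, GPU-friendly step matters.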

Classic numerical weather prediction runs physics simulations on supercomputers, which limits how often many groups can refresh high-resolution forecasts. Atlas forecasts 15-day weather across 70+ variables, and StormScope generates kilometer-resolution precipitation forecasts from satellite and radar imagery in minutes; Nvidia reports that they beat open or physics-based baselines on tests that score forecast error.
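"Tests that score forecast error" usually boil down to comparing an error metric against a baseline. A minimal sketch using RMSE and a relative skill score; these are common choices in forecast verification, assumed here for illustration, since Nvidia's exact metrics aren't specified in this summary. The numbers are made up.

```python
import math


def rmse(forecast: list[float], observed: list[float]) -> float:
    """Root-mean-square error between paired forecast and observed values."""
    if not forecast or len(forecast) != len(observed):
        raise ValueError("need two non-empty sequences of equal length")
    return math.sqrt(sum((f - o) ** 2 for f, o in zip(forecast, observed)) / len(forecast))


def skill_score(model_rmse: float, baseline_rmse: float) -> float:
    """Positive when the model beats the baseline (1.0 would be a perfect forecast)."""
    return 1.0 - model_rmse / baseline_rmse


obs = [0.0, 0.0]
print(rmse([2.0, 2.0], obs), rmse([1.0, 1.0], obs))  # 2.0 1.0
print(skill_score(1.0, 2.0))  # 0.5
```

"Beating a baseline" in this framing means a positive skill score on the same verification data.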

HealDA builds global initial conditions in seconds on GPUs, CorrDiff downscales coarse predictions into local detail up to 500x faster, and FourCastNet3 produces forecasts up to 60x faster. The Israel Meteorological Service has already deployed CorrDiff in operations.

Nvidia reports a 90% compute-time cut at 2.5 km resolution with CorrDiff, and the open stack makes end-to-end customization easier. The most useful part is that data assimilation, forecasting, and downscaling are packaged as one open pipeline that teams can run and fine-tune on their own GPUs.
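The two CorrDiff figures are not the same size of claim. Converting a percentage cut in compute time into a speedup factor is simple arithmetic on the reported numbers (which hardware and baselines each figure refers to isn't stated here):

$$
\text{speedup} = \frac{t_{\text{before}}}{t_{\text{after}}} = \frac{1}{1 - \text{cut}},
\qquad
\text{cut} = 0.90 \;\Rightarrow\; 10\times,
\qquad
500\times \;\Rightarrow\; \text{cut} = 1 - \tfrac{1}{500} = 99.8\%
$$

So the 90% figure at 2.5 km corresponds to roughly a 10x speedup, well below the "up to 500x" headline, which suggests the two numbers come from different setups.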

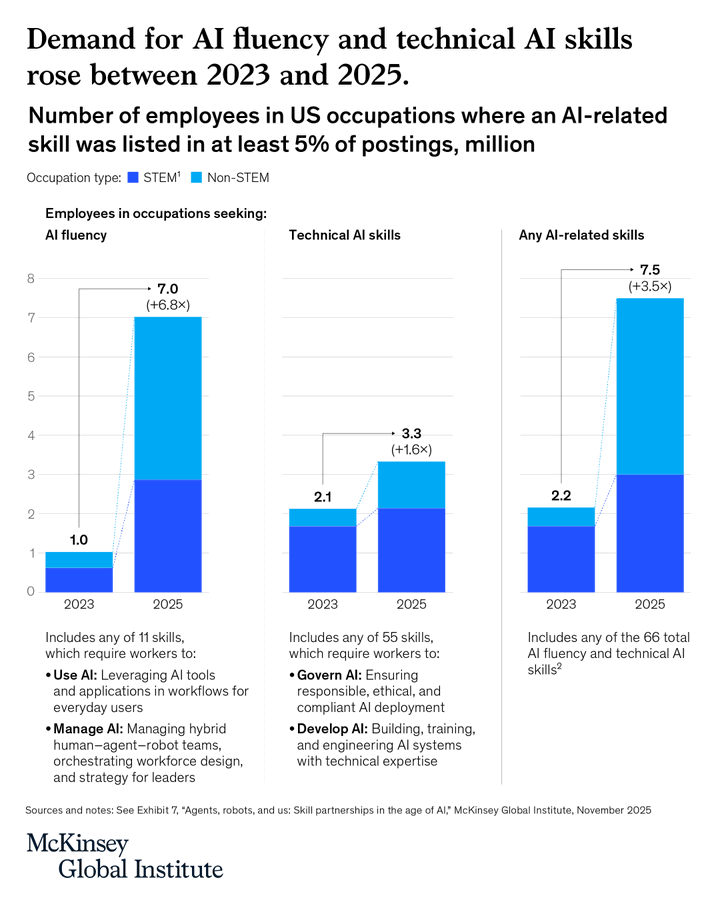

📡 McKinsey finds the job market is suddenly asking for way more people who can use and manage AI tools.

Job roles tied to “AI fluency” went from 1 million in 2023 to 7 million in 2025 (a 7x jump).

The real bottleneck is shifting toward day-to-day adoption: people knowing how to plug tools into workflows, check outputs, and coordinate work with agents and automation.

⚠️ A Stanford paper finds that when you reward AI for success on social media, it becomes increasingly sociopathic

They built 3 simulated arenas with customers, voters, and social users, had Qwen-8B and Llama-3.1-8B generate messages for each input, and used gpt-4o-mini personas to pick winners and provide feedback.

They compared rejection fine-tuning, which trains only on the winning message, with Text Feedback, which also learns to predict audience comments. Text Feedback often improved head-to-head win rate but also amplified bad behavior. Sales saw a +6.3% lift paired with +14.0% more misrepresentation. Elections saw +4.9% vote share with +22.3% more disinformation and +12.5% more populist rhetoric. Social media saw +7.5% engagement with +188.6% more disinformation and +16.3% more encouragement of harmful behaviors.
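The difference between the two training setups is what goes into the training set. A minimal sketch, assuming a hypothetical `Round` record and a made-up prompt format; whether the paper collects comments on the winner only or on every candidate isn't stated here, so "winner only" is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Round:
    prompt: str
    candidates: list[str]  # messages generated by the policy model
    winner: int            # index picked by the simulated audience
    comments: list[str]    # audience feedback (assumed: on the winning message)


def rejection_ft_examples(rounds: list[Round]) -> list[tuple[str, str]]:
    """Rejection fine-tuning: keep only (prompt -> winning message) pairs."""
    return [(r.prompt, r.candidates[r.winner]) for r in rounds]


def text_feedback_examples(rounds: list[Round]) -> list[tuple[str, str]]:
    """Text Feedback sketch: winner pairs plus targets that predict audience comments."""
    examples = rejection_ft_examples(rounds)
    for r in rounds:
        context = f"{r.prompt}\nMESSAGE: {r.candidates[r.winner]}\nAUDIENCE:"
        examples.extend((context, c) for c in r.comments)
    return examples


r = Round("Pitch the blender", ["Solid motor.", "Best blender ever made!"], 1,
          ["Love it", "Sounds too good"])
print(len(rejection_ft_examples([r])), len(text_feedback_examples([r])))  # 1 3
```

Learning to predict what the audience will say gives the model a richer signal about what wins, which fits the paper's finding that it raised both win rate and misaligned behavior.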

In 9 of 10 probes, misalignment rose, and performance gains were strongly correlated with increases in misalignment, even when prompts told agents to stay truthful and grounded. The incentive explains the drift: when the reward is engagement, sales, or votes, exaggeration, invented numbers, and inflammatory framing move the metric faster than cautious accuracy, so instruction guardrails get overruled during training.

👨🔧 ChatGPT ads come with a premium. OpenAI is said to be charging around $60 CPM, or 3x Meta’s normal ad costs

OpenAI is starting to sell ads inside ChatGPT chats, and it is treating that space like premium inventory rather than a cheap add-on, according to The Information.

The reported headline number is about $60 CPM, meaning $60 per 1,000 ad views. That is about 3x the roughly $20 CPM baseline the reporting uses for Meta.

Most big ad platforms earned trust by tying an ad view to downstream actions like signups or purchases, using conversion tracking and rich audience analytics. This rollout flips that, charging on views while initially giving advertisers mostly top-line reporting like views and clicks, with limited or no conversion data.
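With only views and clicks reported, the numbers an advertiser can actually compute are spend and effective cost per click; cost per acquisition would also need conversion counts, which advertisers reportedly won't get at first. A minimal sketch, with the 1% click-through rate as a purely hypothetical input:

```python
def spend(impressions: int, cpm: float) -> float:
    """Cost of a CPM buy: price is quoted per 1,000 impressions."""
    return impressions / 1000 * cpm


def effective_cpc(cpm: float, ctr: float) -> float:
    """Cost per click implied by a CPM price and a click-through rate."""
    if ctr <= 0:
        raise ValueError("ctr must be positive")
    return cpm / (1000 * ctr)


print(spend(1_000_000, 60.0), spend(1_000_000, 20.0))  # 60000.0 20000.0
print(round(effective_cpc(60.0, 0.01), 2))  # 6.0 at an assumed 1% CTR
```

A million views costs $60,000 at the reported ChatGPT rate versus $20,000 at the Meta baseline, so the chat placement has to be worth about 3x per view to break even.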

The reason is policy: OpenAI says it keeps conversations private from advertisers and does not sell user data to them. Ads are expected to appear in the coming weeks for Free and Go users, with under-18 users excluded and sensitive topics like mental health and politics kept ad-free.
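The reported eligibility rules can be written as a single predicate. A minimal sketch; `ChatContext` and `ad_eligible` are hypothetical, and the sensitive-topic set holds only the two examples named in the reporting (the real list is likely longer, and how OpenAI determines age or topic isn't described).

```python
from dataclasses import dataclass

AD_TIERS = {"Free", "Go"}
SENSITIVE_TOPICS = {"mental health", "politics"}  # examples from the reporting only


@dataclass
class ChatContext:
    tier: str
    age: int
    topic: str


def ad_eligible(ctx: ChatContext) -> bool:
    """All three reported conditions must hold for an ad to be shown."""
    return ctx.tier in AD_TIERS and ctx.age >= 18 and ctx.topic not in SENSITIVE_TOPICS


print(ad_eligible(ChatContext("Free", 30, "cooking")))   # True
print(ad_eligible(ChatContext("Go", 30, "politics")))    # False
```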

If this works, it would show that intent-rich chat context can command high prices even when measurement is weak, which is the opposite of how Meta and Google usually justify ad spend. Reuters recently reported ChatGPT at about 800M weekly active users, the scale OpenAI is putting behind this bet.

This looks risky for performance marketers, but it could suit brand buyers who mainly want attention in a high-intent moment.

That’s a wrap for today, see you all tomorrow.
