🧠 OpenAI is moving from “tell us your age once” to continuous, probability-based age gating.

Reasoning LLMs, GPT-5.2 solving Erdős #281, Copilot job impact rankings, Nvidia's jobs vs AI stance, AGI debates, continuous age-gating, and code’s end per Node.js creator.

Read time: 11 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (21-Jan-2026):

🧠 OpenAI is moving from “tell us your age once” to continuous, probability-based age gating.

🏗️ New GoogleAI paper investigates why reasoning models such as OpenAI’s o-series, DeepSeek-R1, and QwQ perform so well.

📡 Microsoft used 200,000 Copilot chats to rank which jobs overlap most with generative AI.

🛠️ ‘AI Will Create Jobs, Not Kill Them’: Nvidia CEO Jensen Huang at Davos

👨🔧 Demis Hassabis, Dario Amodei Debate What Comes After AGI at World Economic Forum

🧠 GPT-5.2 Pro Solves Decades-Old Erdős Math Problem #281

🧑🎓 AI is not exactly creating a “10x boost” yet at the macro level.

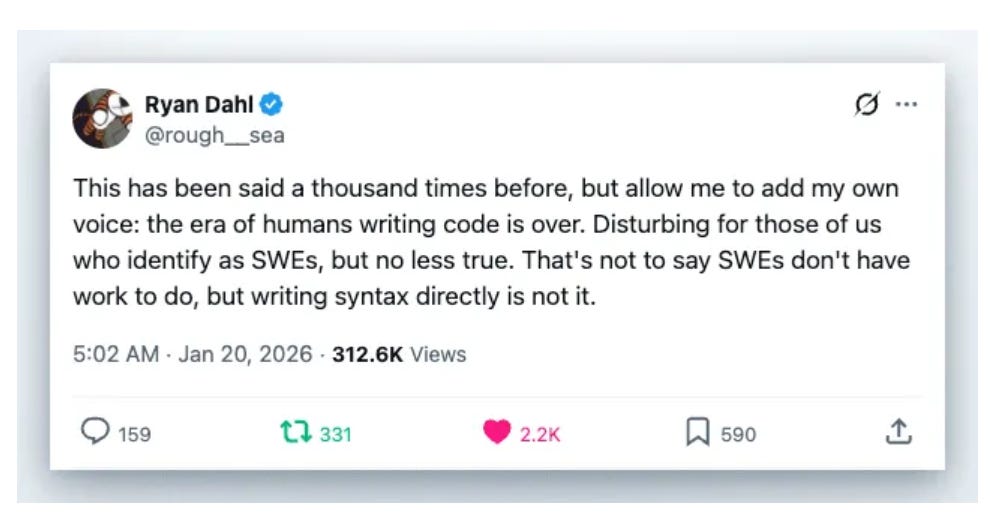

🗞️ The creator of Node.js says the era of writing code is over in a massively viral Tweet.

🏆 OpenAI is moving from “tell us your age once” to continuous, probability-based age gating.

It estimates whether an account likely belongs to someone under 18, then applies extra safeguards if so. Adults who get misclassified can confirm age with a Persona selfie to restore full access, and the EU rollout follows regional requirements.

Earlier teen safeguards mainly relied on a user saying they were under 18 at signup, which can miss teens who do not disclose. The age prediction model uses account and behavior signals like account age, typical active hours, usage patterns over time, and stated age.

During rollout, OpenAI watches how well different signals predict under-18 accounts, mostly by comparing the model’s guesses to ground truth from people who later verify their age. If a signal helps separate teens from adults it gets more weight, and if it causes lots of mistakes it gets down-weighted or removed.

When it predicts under 18, ChatGPT tightens handling for graphic violence, risky viral challenges, sexual or violent role play, self-harm content, and body-shaming or unhealthy dieting content. If age is uncertain or information is incomplete, it defaults to the safer experience, and parents can add controls like quiet hours, memory or model-training controls, and distress notifications.
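The routing described above can be sketched in a few lines. This is a minimal illustration, not OpenAI's implementation: the signal names, weights, bias, and threshold are all hypothetical, and the real model's internals are not public. The point is the shape of the logic: score the signals, and fall back to the safer experience whenever information is missing or the score is not clearly adult.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Hypothetical weights and bias, for illustration only.
WEIGHTS = {
    "stated_minor": 3.0,
    "new_account": 0.8,
    "school_hours_pattern": 1.2,
}
BIAS = -2.0


@dataclass
class Signals:
    # None means the signal is unavailable for this account.
    stated_minor: Optional[bool]
    new_account: Optional[bool]
    school_hours_pattern: Optional[bool]


def under_18_probability(s: Signals) -> Optional[float]:
    """Logistic score over the signals; None if any signal is missing."""
    values = vars(s)
    if any(v is None for v in values.values()):
        return None
    z = BIAS + sum(WEIGHTS[name] * float(v) for name, v in values.items())
    return 1.0 / (1.0 + math.exp(-z))


def route(s: Signals, adult_threshold: float = 0.2) -> str:
    """Grant full access only when the account is clearly adult."""
    p = under_18_probability(s)
    if p is None or p >= adult_threshold:
        return "teen_safeguards"  # safer default on uncertainty
    return "full_access"


print(route(Signals(False, False, False)))
print(route(Signals(None, False, False)))
```

Putting the threshold well below 0.5 is a deliberate choice in this sketch: it trades more misclassified adults (who can appeal) for fewer missed teens.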

There is also an escape hatch: if an adult gets misclassified, they can quickly restore full access by doing selfie-based age confirmation through Persona. So the system is basically “soft” identity verification by default, and “harder” verification only when needed for an appeal.

They also pair this with parent-facing controls, like quiet hours, controls over memory and model training, and notifications if the system detects signs of acute distress. Overall, OpenAI is treating “age” as a safety routing problem, not a profile field. If this works, it may become a template for how consumer AI products can enforce teen protections at scale without universal identity checks, using inference first and verification only on dispute.

🏗️ New GoogleAI paper investigates why reasoning models such as OpenAI’s o-series, DeepSeek-R1, and QwQ perform so well.

They claim “think longer” is not the whole story. Rather, thinking models build internal debates among multiple agents, which the researchers call “societies of thought.” Through interpretability and large-scale experiments, the paper finds that these systems develop human-like discussion habits: questioning their own steps, exploring alternatives, facing internal disagreement, and then reaching common ground.

It’s basically a machine version of human collective reasoning, echoing the same ideas Mercier and Sperber talked about in The Enigma of Reason. Across 8,262 benchmark questions, their reasoning traces look more like back-and-forth dialogue than instruction-tuned baselines, and that difference is not just because the traces are longer.

A mediation analysis suggests more than 20% of the accuracy advantage runs through these “social” moves, either directly or by supporting checking habits like verification and backtracking. The mechanistic interpretability work uses sparse autoencoders (SAEs), which split a model’s internal activity into thousands of features, to find feature 30939 in DeepSeek-R1-Llama-8B.

DeepSeek-R1 is about 35% more likely than DeepSeek-V3 to include question-answering on the same problem, and a mediation model attributes more than 20% of the accuracy advantage to these social behaviors directly or via cognitive habits like verification. The takeaway is that “thinking longer” is a weak proxy for what changes, since the useful change looks like structured disagreement plus selective backtracking.
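To make the “more than 20%” figure concrete, here is a rough sketch of how a proportion-mediated number is computed. It assumes a simple linear product-of-coefficients decomposition, which may not match the paper's exact model, and the coefficients are hypothetical values picked only to show the arithmetic.

```python
def proportion_mediated(a: float, b: float, direct: float) -> float:
    """Share of the total effect that flows through the mediator.

    a: effect of model type on the mediator (e.g. rate of conversational moves)
    b: effect of the mediator on accuracy, holding model type fixed
    direct: effect of model type on accuracy not explained by the mediator
    """
    indirect = a * b
    total = direct + indirect
    if total == 0:
        raise ValueError("total effect is zero; proportion is undefined")
    return indirect / total


# Hypothetical coefficients, not taken from the paper.
share = proportion_mediated(a=0.5, b=0.1, direct=0.15)
print(f"{share:.0%} of the accuracy gap runs through the mediator")
```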

📡 Microsoft used 200,000 Copilot chats to rank which jobs overlap most with generative AI.

At the high end of AI overlap, the researchers report strong GenAI applicability for knowledge-work groups like computer and mathematical and office and administrative support, plus sales work that centers on explaining information. The paper also finds higher AI applicability for occupations that typically require a Bachelor’s degree than for those with lower formal requirements.

The top 10 most affected occupations by generative AI:

Interpreters and Translators

Historians

Passenger Attendants

Sales Representatives of Services

Writers and Authors

Customer Service Representatives

CNC Tool Programmers

Telephone Operators

Ticket Agents and Travel Clerks

Broadcast Announcers and Radio DJs

The top 10 least affected occupations by generative AI:

Dredge Operators

Bridge and Lock Tenders

Water Treatment Plant and System Operators

Foundry Mold and Coremakers

Rail-Track Laying and Maintenance Equipment Operators

Pile Driver Operators

Floor Sanders and Finishers

Orderlies

Motorboat Operators

Logging Equipment Operators

🛠️ ‘AI Will Create Jobs, Not Kill Them’: Nvidia CEO Jensen Huang at Davos

Speaking with BlackRock CEO Larry Fink, Huang framed AI not as a single technology but as “a five-layer cake,” spanning energy, chips and computing infrastructure, cloud data centers, AI models and, ultimately, the application layer.

Below are some takeaways:

He breaks AI into a 5 layer stack. At the bottom is energy, because real time inference at scale eats power.

On top of that come chips and systems, where Nvidia lives, then cloud infrastructure, then the AI models, and finally the application layer in domains like finance, healthcare, and manufacturing where actual money is made.

This stack is triggering the largest infrastructure buildout in human history.

We are already a few hundred billion dollars into data centers, GPUs, networking, and memory, and he talks about trillions still to come.

TSMC planning around 20 new fabs, Foxconn and others building roughly 30 new computer plants, Micron announcing around $200 billion in memory investment, and SK Hynix and Samsung ramping heavily all show that this is not hype, it is capex.

He points to a simple sanity check on the “AI bubble” narrative. Nvidia has millions of GPUs deployed in every major cloud, yet GPU capacity is still so tight that spot rental prices are rising even for hardware that is 2 generations old.

On the model side, he highlights 3 shifts in the last year: language models becoming agentic systems that can plan and execute tasks, the rise of strong open reasoning models like DeepSeek that anyone can fine tune, and physical AI that understands proteins, chemistry, and physics well enough to change drug discovery and industrial design.

He is very aggressive on jobs. He says we are heading toward labor shortages, not mass unemployment, because AI automates tasks, not the purpose of a job.

Radiology is his favorite proof point.

AI now touches almost every scan, but the number of radiologists has gone up because throughput and revenue increased, so hospitals hired more. The same pattern shows up with nurses once AI takes over charting and transcription.

For countries, especially emerging markets and Europe, his message is: treat AI like roads and electricity, build local AI in your own language and law, and combine it with your existing industrial base so your workers can “program” simply by talking to models.

👨🔧 Demis Hassabis, Dario Amodei Debate What Comes After AGI at World Economic Forum

Dario expects AI to beat top humans on almost all cognitive tasks within roughly 1 to 2 years.

He predicts code models will handle nearly all software engineering within 6 to 12 months and then heavily automate AI research.

That is meant to create a self improvement loop where AIs help design, train and evaluate stronger successor models.

Demis agrees coding and math are near full automation but argues experimental science, theory discovery and robotics still need breakthroughs.

He keeps his estimate of around 50% chance for human level cognition across domains by decade end, not earlier.

Both see the real AGI tipping point as AI autonomously running most of the AI development pipeline.

Dario cites Anthropic’s revenue rising from 0 to 100M, 1B and 10B in 3 years as proof that frontier models instantly become giant businesses.

He bluntly forecasts about 50% of entry level white collar roles disappearing within 1 to 5 years once such systems are widely deployed.

Inside Anthropic he already foresees needing fewer junior and mid level engineers because models absorb much of the routine coding and analysis.

On geopolitics and risk, he says rivalry with China blocks slowdown, compares exporting advanced chips to selling nuclear weapons, and joins Demis warning that deception, bioterror help and misaligned autonomous systems remain dangers without shared safety rules.

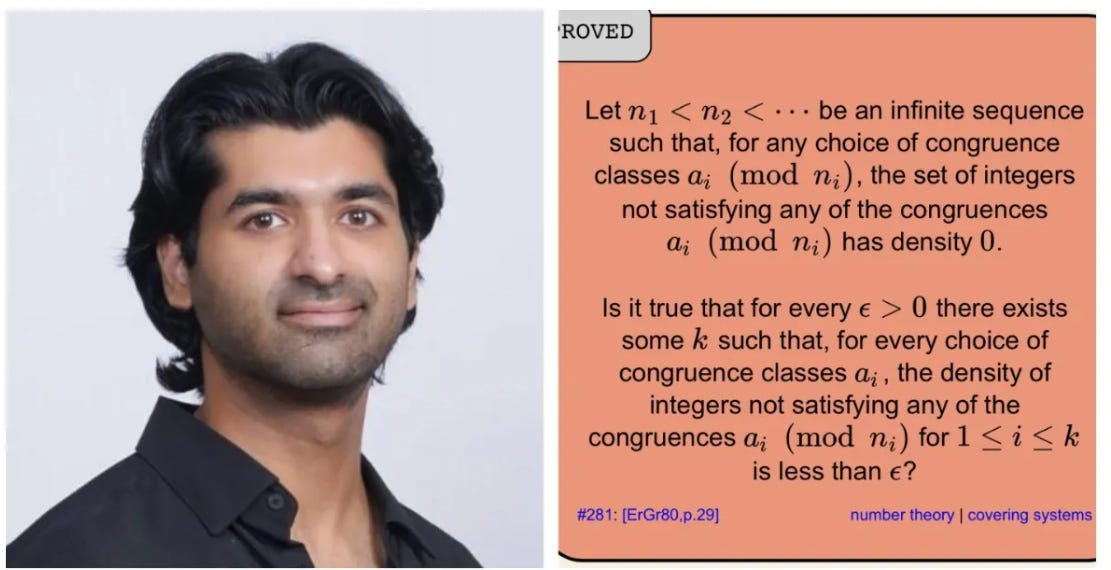

🧠 GPT-5.2 Pro Solves Decades-Old Erdős Math Problem #281

GPT-5.2 Pro was used by Neel Somani to produce a proof of Erdős Problem #281, a 1980 question about when a family of congruence classes can force an integer set to have density 0.

Terence Tao checked the argument and called it “perhaps the most unambiguous instance” of an AI system helping solve an open problem.

AI has really crossed a key threshold in math. This shows a useful pattern: modern LLMs can suggest a correct bridge between combinatorial density questions and ergodic tools, and humans can then formalize and audit the edge cases.

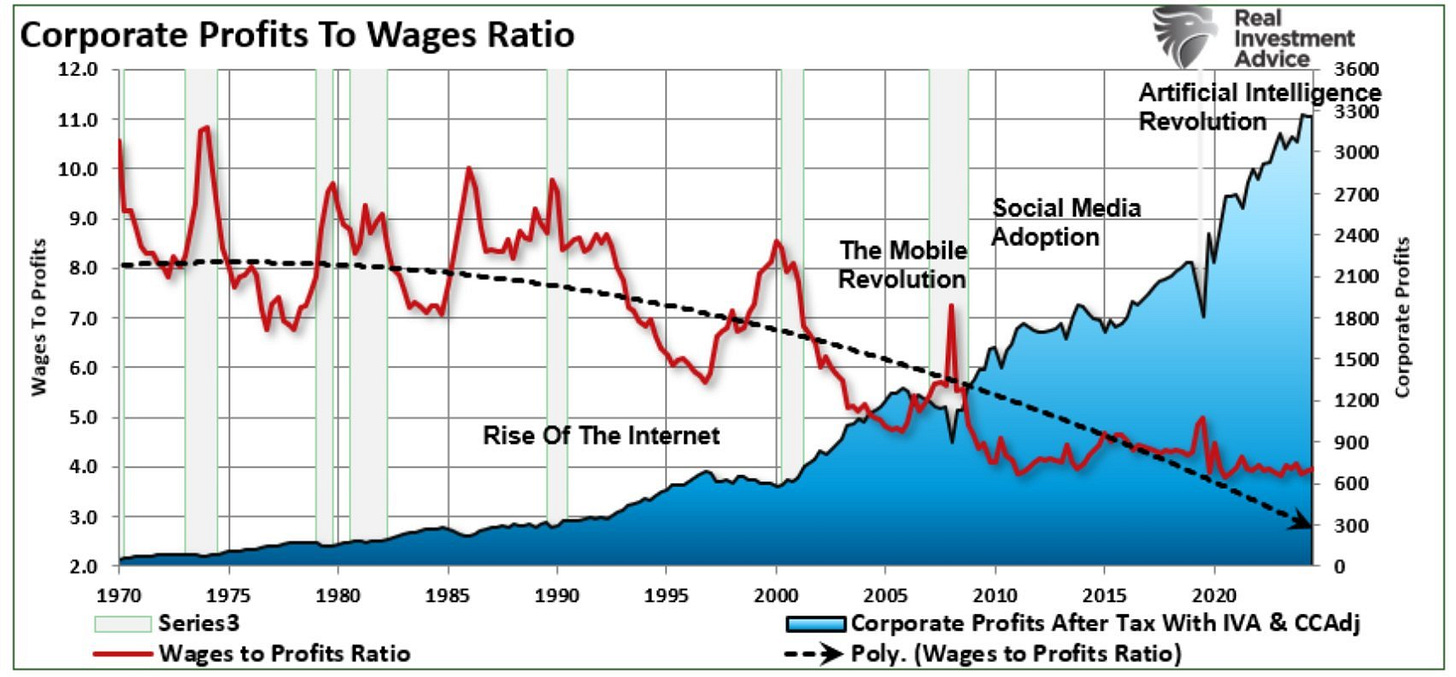

🧑🎓 AI is not exactly creating a “10x boost” yet at the macro level.

At the macro level, AI is not quite producing a “10x” increase so far, according to data from economists’ reports.

At the same time, AI is still changing company economics in a very real way.

JPMorgan has said AI doubled its operations productivity improvement rate from 3% to about 6%, and it expects 40%-50% productivity lifts in some operations specialist roles.

Other large banks are describing the same basic playbook: keep staffing flat, push more volume through the system, and let the difference show up as lower costs and higher margins rather than as hiring.

Once you look at it that way, the story stops being “when will AI show up in the macro data” and turns into “who gets paid when it does.”

The labor share of United States gross domestic product, basically the slice of the economy that shows up as pay to workers, fell to 53.8% in Q3-25, the lowest level since records began in 1947.

And the long-running gap between productivity growth and typical worker pay has been widening since 1979, i.e. the economy can get more output per hour without most workers getting matching pay growth.

AI can raise output per worker while wages barely move, because companies can treat the gain as a cost cut and a margin boost.

That kind of change can show up first as slower hiring, higher profits, and more pressure on the wage share.
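A quick back-of-the-envelope sketch shows why this squeezes the labor share. The starting point is the 53.8% figure quoted above; the output and wage growth rates are hypothetical, chosen only to illustrate the mechanism.

```python
def labor_share_after(share_before: float, output_growth: float, wage_growth: float) -> float:
    """Labor share = total pay / total output, so it scales by the ratio of growth factors."""
    return share_before * (1 + wage_growth) / (1 + output_growth)


# 0.538 is the Q3-25 labor share cited above; both growth rates are assumptions.
after = labor_share_after(0.538, output_growth=0.06, wage_growth=0.01)
print(f"labor share: 53.8% -> {after:.1%}")
```

Whenever total pay grows more slowly than output, the ratio shrinks, even if nobody is laid off and nominal wages still rise.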

🗞️ The creator of Node.js says the era of writing code is over in a massively viral Tweet.

Ryan Dahl is the guy who got a standing ovation at JSConf EU in 2009 for showing JavaScript could run on the server. 9 years later, he came back with a talk called “10 Things I Regret About Node.js,” then went on to build a brand-new runtime, Deno, to fix those issues.

When Ryan Dahl says something is done, it’s smart to listen.

However, note that Dahl isn’t saying software engineers are no longer needed. He’s saying that writing syntax line by line is no longer the job.

Building software still involves real thinking. You still need to understand the problem, design the system, choose architectures, and confirm things actually work. What’s changing is the mechanical task of converting those decisions into code.

He’s held this view for a while. In June 2025, he published “We’re Still Underestimating What AI Really Means,” where he compared the shift to watching childbirth: deeply meaningful, occasionally shocking, but mixed with a lot of waiting.

And he’s hands-on. In another tweet from June 2025, he complained about Claude’s habit of saying “You’re absolutely right!” That’s not theory. That’s lived experience with AI tools.

That’s a wrap for today, see you all tomorrow.