A Comparative Study on Reasoning Patterns of OpenAI's o1 Model

The paper identifies six distinct reasoning patterns that help explain OpenAI o1-preview's superior performance.

Original Problem 🎯:

Test-time Compute methods enhance LLM performance during inference, but their effectiveness across different tasks remains unexplored. Current approaches face limitations in reward model capabilities and search space constraints.

Solution in this Paper 🔧:

• Comparative study of OpenAI's o1 model against existing Test-time Compute methods

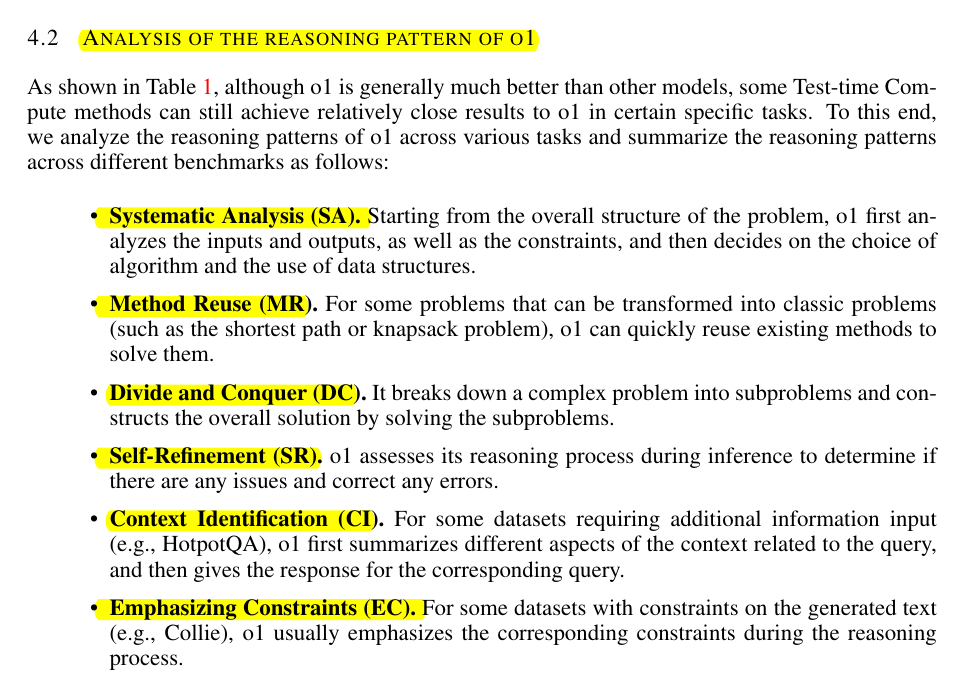

• Identified 6 key reasoning patterns: Systematic Analysis, Method Reuse, Divide and Conquer, Self-Refinement, Context Identification, and Emphasizing Constraints

• Evaluated performance on math, code, and commonsense reasoning benchmarks, using GPT-4o as the backbone for the Test-time Compute methods

• Implemented a data filtering module to ensure benchmark quality

Key Insights from this Paper 💡:

• Reward model capabilities and search space limit the performance of search-based methods

• Domain-specific system prompts are crucial for step-wise methods

• No clear correlation between input prompt length and reasoning token length

• Divide and Conquer and Self-Refinement are o1's most effective reasoning patterns
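One way a reader might check a claim like "no clear correlation between input prompt length and reasoning token length" on their own logs is a Pearson coefficient. The sketch below is an assumption about how such a check could look, not the paper's analysis code; the function name `pearson` and the idea of passing two parallel length lists are illustrative only.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples.

    Hypothetical usage: xs = input prompt lengths, ys = reasoning token
    lengths, one pair per query. Neither list comes from the paper.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)
```

A coefficient near 0 would be consistent with "no clear correlation", while values near 1 or -1 would indicate a strong linear relationship. Pearson only captures linear dependence, so a rank-based measure may be a better fit for skewed length distributions.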

Results 📊:

• o1-mini achieved 35.77% overall accuracy, surpassing o1-preview (34.32%)

• Agent Workflow showed 24.70% accuracy, outperforming other Test-time methods

• Best-of-N (N=8) reached 19.04% accuracy

• Step-wise BoN reached only 9.79% accuracy, limited by long-context inference

• Self-Refine showed minimal improvement at 5.62%

🎯 The paper identified six key reasoning patterns:

Systematic Analysis (SA): Starting with overall problem structure analysis

Method Reuse (MR): Transforming problems into classic problem types

Divide and Conquer (DC): Breaking complex problems into subproblems

Self-Refinement (SR): Assessing and correcting reasoning during inference

Context Identification (CI): Summarizing the aspects of the provided context that are relevant to the query before answering

Emphasizing Constraints (EC): Restating the task's constraints explicitly during reasoning

🎯 What are the limitations of existing Test-time Compute methods?

The performance of search-based methods like Best-of-N (BoN) is limited by:

Reward model capabilities affecting response selection quality

Search space constraints impacting performance improvement potential

Long context inference limiting Step-wise BoN effectiveness

Complex tasks making it difficult for Step-wise methods to maintain coherent reasoning
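The limitations above are easier to see in code. The following is a minimal sketch of Best-of-N and Step-wise BoN, not the paper's implementation: `generate`, `generate_step`, `reward`, and the `<done>` stop marker are hypothetical stand-ins for an LLM sampler and a reward model.

```python
from typing import Callable, List

# Hypothetical interfaces (assumptions, not from the paper):
#   Generate: prompt -> one sampled response (or one reasoning step)
#   Reward:   (prompt, response) -> scalar score from a reward model
Generate = Callable[[str], str]
Reward = Callable[[str, str], float]


def best_of_n(prompt: str, generate: Generate, reward: Reward, n: int = 8) -> str:
    """Sample n full responses and return the one the reward model prefers."""
    candidates = [generate(prompt) for _ in range(n)]
    # The result can be no better than the best sample (search space limit)
    # and no better than the reward model's ability to recognize it.
    return max(candidates, key=lambda c: reward(prompt, c))


def stepwise_best_of_n(
    prompt: str,
    generate_step: Generate,
    reward: Reward,
    n: int = 8,
    max_steps: int = 4,
    stop: str = "<done>",
) -> List[str]:
    """Build a solution step by step, keeping the best of n samples per step."""
    steps: List[str] = []
    for _ in range(max_steps):
        # The context grows with every accepted step, which is where
        # long-context inference starts to matter.
        context = prompt + "\n" + "\n".join(steps)
        options = [generate_step(context) for _ in range(n)]
        best = max(options, key=lambda s: reward(context, s))
        steps.append(best)
        if best == stop:
            break
    return steps
```

In both functions the selected output depends entirely on `reward`: if the reward model ranks a wrong candidate highest, sampling more candidates does not help. In the step-wise variant, an early poor choice is carried forward in `context`, which is one way coherence can degrade on complex tasks.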