"A Lightweight Method to Disrupt Memorized Sequences in LLM"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2502.05159

LLMs can memorize training data, leading to unintentional reproduction of copyrighted content. This paper addresses the challenge of reducing this memorization in LLMs without sacrificing performance or requiring extensive retraining.

This paper proposes TOKENSWAP. It is a lightweight method that reduces memorization by selectively replacing token probabilities during inference.

-----

The user wants me to provide 3 technical perspectives on the TOKENSWAP paper, from the viewpoint of a Deep Learning PhD Engineer.

Each perspective should be max 35 words, technically insightful yet easy to understand, and focus on the current capabilities of TOKENSWAP, not future directions.

I need to extract deep technical insights from the paper and present them concisely.

Each perspective should start with "📌 ".

Plan:

1. Re-read the paper summary I already wrote to refresh my understanding of TOKENSWAP.

2. Identify the core technical strengths and innovative aspects of TOKENSWAP.

3. Formulate 3 distinct perspectives, each highlighting a key technical advantage or characteristic of TOKENSWAP.

4. Ensure each perspective is within the 35-word limit, uses simple language, and maintains technical depth.

5. Start each perspective with "📌 ".

6. Avoid abbreviations unless extremely common (like LLM, which I should expand to LLM at least once in each perspective for clarity if possible).

7. Focus on what TOKENSWAP *currently* achieves as per the paper.

Perspective 1: Focus on the mechanism - probability replacement and auxiliary model.

Perspective 2: Focus on the 'lightweight' nature and accessibility - post-hoc, no retraining.

Perspective 3: Focus on the selective token modification and performance preservation.📌 TOKENSWAP cleverly uses a smaller auxiliary model to guide the generation of a larger LLM, specifically for grammar related tokens. This targeted probability replacement effectively reduces memorization.

📌 The key innovation is TOKENSWAP's post-hoc nature. It offers memorization mitigation without costly retraining or modifying model weights, making it highly accessible and practical for real-world LLM deployments.

📌 By selectively modifying probabilities of only grammar tokens, TOKENSWAP minimizes performance impact on downstream tasks. This targeted disruption balances memorization reduction with maintaining model utility.

----------

Methods Explored in this Paper 🔧:

→ TOKENSWAP is introduced as a post-hoc method to mitigate memorization in LLMs.

→ It operates by using a smaller auxiliary language model, like DistilGPT-2, alongside a larger main LLM.

→ During text generation, for each token, TOKENSWAP checks if the token belongs to a predefined set 'G' of grammar-related tokens.

→ If a token is in 'G', its probability distribution is taken from the smaller auxiliary model. Otherwise, the probability distribution is taken from the main LLM.

→ This selective probability replacement disrupts the high-probability pathways that lead to memorized sequences, as smaller models memorize less.

→ The set 'G' is carefully constructed using high-frequency grammar-based tokens like determiners, prepositions, conjunctions, pronouns, and auxiliary verbs.

→ This selection ensures that fluency is maintained while memorization is reduced.

-----

Key Insights 💡:

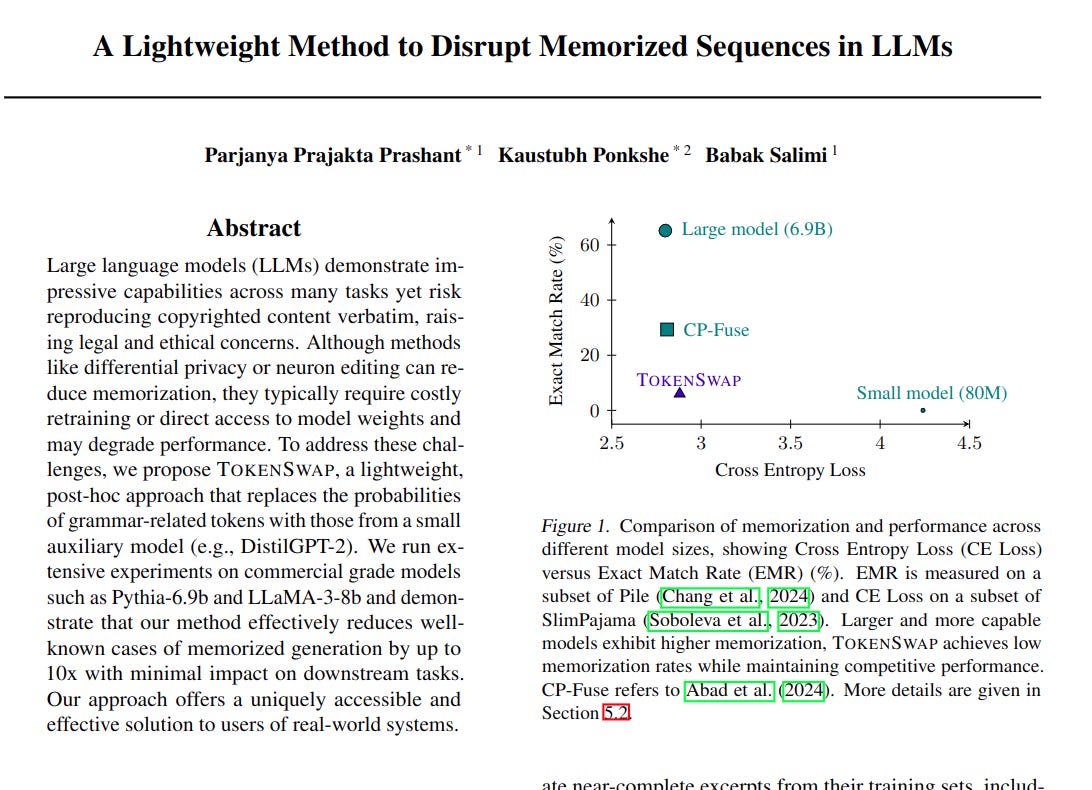

→ Smaller language models exhibit significantly lower memorization compared to larger models, but often have poorer performance.

→ TOKENSWAP leverages the strengths of both large and small models. It achieves low memorization from the smaller model and high performance from the larger model.

→ TOKENSWAP is a lightweight and accessible method as it does not require access to model weights, training data, or model retraining. It only needs the output token probabilities.

→ Theoretical analysis shows that TOKENSWAP exponentially reduces the probability of generating memorized sequences with increasing length.

-----

Results 📊:

→ Achieves up to 800x reduction in Exact Match Rate (EMR) in controlled experiments on WritingPrompts dataset, reducing EMR from 83.35% to 0.10%.

→ Demonstrates a 10x reduction in verbatim generation on real-world models like Pythia-6.9B and Llama-3-8B.

→ Maintains or even improves performance, achieving lower cross-entropy loss in some cases compared to standard generation.