A PRESCRIPTIVE THEORY FOR BRAIN-LIKE INFERENCE

A spiking neural network that thinks like a brain and learns like a VAE

Making AI neurons behave more like biological ones through Poisson magic

🎯 Original Problem:

Current brain-inspired AI models struggle to match biological neural behavior, particularly because they rely on explicit prediction signals and can produce negative firing rates, which real neurons cannot have. The challenge is to build a model that performs Bayesian inference while remaining biologically plausible.
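The negative-firing-rate issue can be seen with a toy comparison (our own illustration, not an experiment from the paper): draw latent values from a Gaussian centred on a small positive rate and from a Poisson distribution with the same rate. The rate of 0.2, the sample size, and the helper `sample_poisson` are arbitrary illustrative choices; Python's standard library has no Poisson sampler, so the sketch uses Knuth's multiplication method.

```python
import math
import random


def sample_poisson(rate: float, rng: random.Random) -> int:
    """Draw one Poisson(rate) count with Knuth's multiplication method (fine for small rates)."""
    threshold = math.exp(-rate)
    count, product = 0, 1.0
    while True:
        product *= rng.random()
        if product <= threshold:
            return count
        count += 1


rng = random.Random(0)
rate, n = 0.2, 10_000

# Gaussian "activity" with the same mean: nothing stops it from going below zero.
gaussian = [rng.gauss(rate, 1.0) for _ in range(n)]
# Poisson spike counts: always non-negative integers, and mostly zero at low rates.
counts = [sample_poisson(rate, rng) for _ in range(n)]

frac_negative_gaussian = sum(g < 0 for g in gaussian) / n
frac_negative_counts = sum(c < 0 for c in counts) / n
frac_zero_counts = sum(c == 0 for c in counts) / n

print(f"Gaussian samples below zero: {frac_negative_gaussian:.2f}")
print(f"Poisson counts below zero:   {frac_negative_counts:.2f}")
print(f"Poisson counts equal to 0:   {frac_zero_counts:.2f}")
```

With a unit-variance Gaussian, roughly 42% of samples are expected to fall below zero, whereas the Poisson counts never do, and about e^(-0.2) ≈ 82% of them are exactly zero.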

🔧 Solution in this Paper:

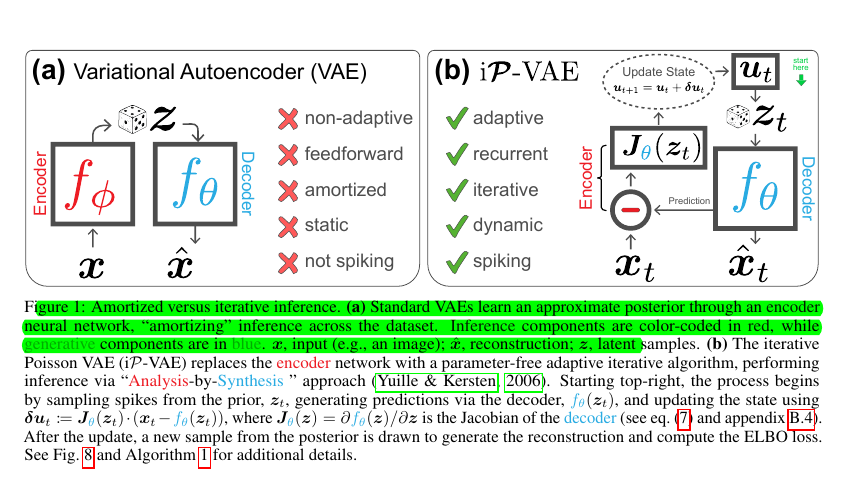

• Introduces iP-VAE (iterative Poisson Variational Autoencoder) that performs Bayesian inference through membrane potential dynamics

• Uses Poisson rather than Gaussian distributional assumptions, so latent activity is expressed as non-negative integer spike counts

• Implements spiking neural communication without explicit prediction signals

• Features a modulatory feedback mechanism consistent with biological neurons

• Reuses weights across iterations for parameter efficiency

• Utilizes sparse integer spike counts for energy-efficient deployment
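The iterative scheme in the list above can be illustrated with a toy inference loop. This is a minimal sketch under our own assumptions, not the authors' update rule: the membrane potential `u` is treated as a log firing rate (r = exp(u)), the objective is a simplified stand-in (squared reconstruction error on expected counts plus a Poisson KL term to a prior rate `r0`), and `u` is refined by plain gradient descent. The decoder matrix `Phi`, the function names, and all hyperparameters are hypothetical. The sketch does use an explicit reconstruction residual, which iP-VAE is described as avoiding; it is meant only to show the state variables, the reuse of one set of weights on every iteration, and the integer spike counts read out at the end.

```python
import math
import random


def matvec(W, v):
    """W @ v for W given as a list of rows (D x K)."""
    return [sum(w * vj for w, vj in zip(row, v)) for row in W]


def matvec_transposed(W, v):
    """W^T @ v for W given as a list of rows (D x K); returns a length-K list."""
    return [sum(W[i][k] * v[i] for i in range(len(W))) for k in range(len(W[0]))]


def poisson_kl(r, r0):
    """KL(Poisson(r) || Poisson(r0)) for positive scalar rates."""
    return r * math.log(r / r0) - r + r0


def sample_poisson(rate, rng):
    """One Poisson(rate) draw via Knuth's multiplication method."""
    threshold, count, product = math.exp(-rate), 0, 1.0
    while True:
        product *= rng.random()
        if product <= threshold:
            return count
        count += 1


def infer_rates(x, Phi, n_iters=300, lr=0.05, beta=0.1, r0=0.1):
    """Refine log-rates u by gradient descent, reusing the same Phi at every step.

    Hypothetical objective: 0.5 * ||x - Phi r||^2 + beta * sum_k KL(Pois(r_k) || Pois(r0)).
    """
    u = [math.log(r0)] * len(Phi[0])  # start every unit at the prior log-rate
    losses = []
    for _ in range(n_iters):
        r = [math.exp(uk) for uk in u]
        residual = [xi - xh for xi, xh in zip(x, matvec(Phi, r))]
        losses.append(0.5 * sum(e * e for e in residual)
                      + beta * sum(poisson_kl(rk, r0) for rk in r))
        drive = matvec_transposed(Phi, residual)  # feedback routed through the shared weights
        # Chain rule with r = exp(u): dLoss/du_k = r_k * (-drive_k + beta * log(r_k / r0))
        u = [uk - lr * rk * (-dk + beta * math.log(rk / r0))
             for uk, rk, dk in zip(u, r, drive)]
    return [math.exp(uk) for uk in u], losses


# Hypothetical 5-pixel "image" generated by 3 latent units with known rates.
Phi = [[1.0, 0.0, 0.0],
       [0.0, 1.0, 0.0],
       [0.0, 0.0, 1.0],
       [0.5, 0.5, 0.0],
       [0.0, 0.5, 0.5]]
x = matvec(Phi, [2.0, 0.0, 1.0])

rates, losses = infer_rates(x, Phi)
rng = random.Random(0)
spikes = [sample_poisson(rk, rng) for rk in rates]  # integer spike counts read out at the end
print("rates:", [round(rk, 2) for rk in rates])
print("loss: first", round(losses[0], 3), "last", round(losses[-1], 3))
print("spike counts:", spikes)
```

Because `u` enters the rate through an exponential, a step on `u` rescales the rate multiplicatively rather than adding to it, which is one simple way to keep rates positive without clipping. The same `Phi` is used on every iteration, so running more iterations adds no parameters.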

💡 Key Insights:

• Poisson assumptions in ELBO lead to more biologically accurate neural networks

• Membrane potential dynamics can effectively handle posterior inference

• Iterative processing enables better generalization than one-shot inference

• Sparse representations emerge naturally from the architecture

• The model bridges theoretical neuroscience and practical machine learning
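The Poisson assumption makes the KL term of the ELBO available in closed form. Below is a short derivation for factorized Poisson posteriors q(z_k | x) = Pois(r_k) and priors p(z_k) = Pois(r_{0,k}); the notation (r_k, r_{0,k}, u) is ours, and the reconstruction term depends on the decoder, so it is left abstract.

```latex
\begin{aligned}
\mathcal{L}(x) &= \mathbb{E}_{q(z \mid x)}\big[\log p(x \mid z)\big]
  - \sum_k \mathrm{KL}\big(\mathrm{Pois}(r_k) \,\|\, \mathrm{Pois}(r_{0,k})\big), \\
\mathrm{KL}\big(\mathrm{Pois}(r) \,\|\, \mathrm{Pois}(r_0)\big)
  &= \mathbb{E}_{z \sim \mathrm{Pois}(r)}\left[\log \frac{r^{z} e^{-r} / z!}{r_0^{z} e^{-r_0} / z!}\right]
   = \mathbb{E}[z] \log\frac{r}{r_0} - r + r_0
   = r \log\frac{r}{r_0} - r + r_0, \\
\frac{\partial\, \mathrm{KL}}{\partial u} &= r \log\frac{r}{r_0}
  \quad \text{with } r = e^{u}.
\end{aligned}
```

The KL term is zero at r = r_0 and increases with r for r > r_0, so with a small prior rate it acts like a cost on firing; this is one way to read the claim that sparse representations emerge naturally.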

📊 Results:

• Achieves superior reconstruction performance compared to state-of-the-art iterative VAEs

• Demonstrates stable performance across input rotations (0-180 degrees)

• Shows better classification accuracy on out-of-distribution datasets

• Uses fewer parameters while maintaining performance

• Learns more compositional features than alternative models

💡 Key Advantages of iP-VAE over Existing Models:

iP-VAE demonstrates several advantages:

• Learns sparser representations

• Shows superior generalization to out-of-distribution samples

• Uses fewer parameters than alternative models

• Converges to sparse posterior representations

• Outperforms state-of-the-art iterative VAEs