Accelerating AI Performance using Anderson Extrapolation on GPUs

Mathematical optimization (Anderson extrapolation) makes AI training 8x faster with less energy

Accelerates training by up to 8.6x while using up to 88% less compute.

🎯 Original Problem:

AI training consumes massive computational resources and is projected to account for 2% of global electricity use by 2030. Current methods are inefficient and environmentally costly, potentially consuming 160 terawatt-hours annually.

🔧 Solution in this Paper:

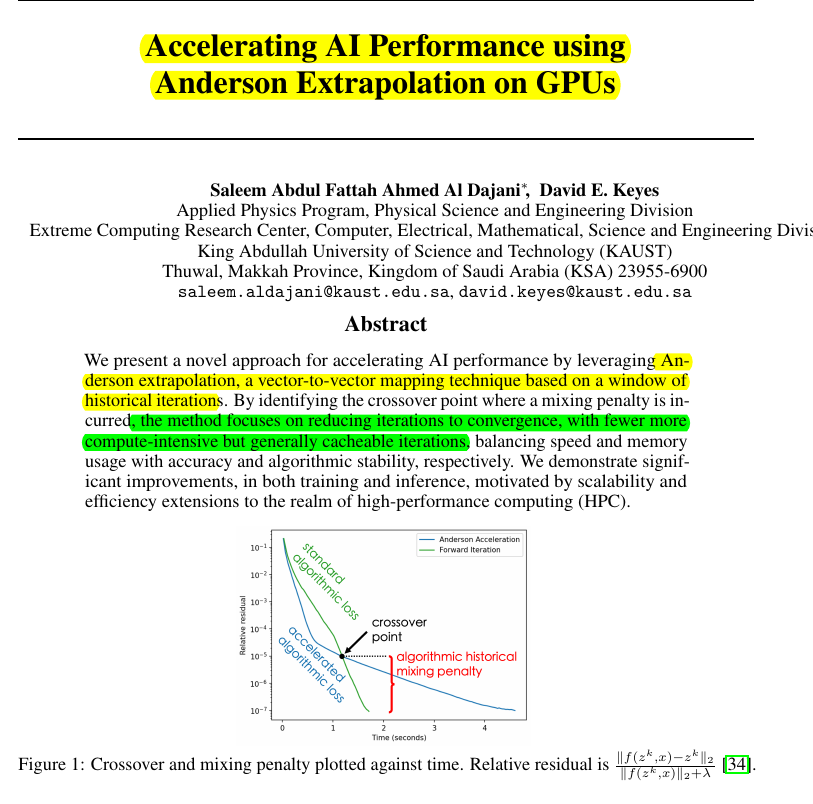

• Uses Anderson extrapolation, a vector-to-vector mapping technique that reuses a history of previous iterates

• Implements a window-based approach to balance memory usage and speed

• Optimizes a linear combination of prior iterates to minimize the residual norm

• Incorporates a mixing parameter β to balance previous and current iterates

• Focuses on Deep Equilibrium Models (DEQs), which represent an infinitely deep network as a single implicit layer
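
The ingredients listed above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's GPU implementation: the function names `forward_iteration` and `anderson`, the toy layer `f(z) = tanh(Wz + x)`, and all default parameter values are hypothetical choices made for this example. The constrained least-squares problem (weights summing to 1) is solved here through an equivalent unconstrained form using `np.linalg.lstsq`.

```python
import numpy as np

def forward_iteration(f, z0, tol=1e-8, max_iter=500):
    """Plain fixed-point iteration z <- f(z); returns (z, iterations used)."""
    z = z0
    for k in range(1, max_iter + 1):
        z_next = f(z)
        if np.linalg.norm(z_next - z) <= tol * (1e-12 + np.linalg.norm(z_next)):
            return z_next, k
        z = z_next
    return z, max_iter

def anderson(f, z0, m=5, beta=1.0, tol=1e-8, max_iter=500):
    """Anderson extrapolation with window m and mixing parameter beta (sketch)."""
    Z, F = [z0], [f(z0)]  # stored iterates z_i and their images f(z_i)
    for k in range(1, max_iter + 1):
        # Residuals g_i = f(z_i) - z_i for the stored window, one per column.
        G = np.stack([fz - z for fz, z in zip(F, Z)], axis=1)
        g_new = G[:, -1]
        if G.shape[1] == 1:
            alpha = np.ones(1)  # only one iterate so far: plain (damped) step
        else:
            # Minimize ||sum_i alpha_i g_i|| subject to sum_i alpha_i = 1 by
            # eliminating the weight of the newest residual.
            dG = G[:, :-1] - g_new[:, None]
            gamma, *_ = np.linalg.lstsq(dG, -g_new, rcond=None)
            alpha = np.append(gamma, 1.0 - gamma.sum())
        # beta mixes the combined images f(z_i) with the combined iterates z_i.
        z_next = beta * (np.stack(F, axis=1) @ alpha) \
            + (1.0 - beta) * (np.stack(Z, axis=1) @ alpha)
        f_next = f(z_next)
        # Keep only the m most recent entries (the window).
        Z, F = (Z + [z_next])[-m:], (F + [f_next])[-m:]
        if np.linalg.norm(f_next - z_next) <= tol * (1e-12 + np.linalg.norm(f_next)):
            return z_next, k
    return Z[-1], max_iter

# Toy DEQ-style layer (an assumption for illustration): z* = tanh(W z* + x).
rng = np.random.default_rng(0)
n = 50
W = rng.standard_normal((n, n))
W *= 0.9 / np.linalg.norm(W, 2)  # spectral norm 0.9, so f is a contraction
x = rng.standard_normal(n)
f = lambda z: np.tanh(W @ z + x)

z_fp, k_fp = forward_iteration(f, np.zeros(n))
z_aa, k_aa = anderson(f, np.zeros(n))
print(f"forward iteration: {k_fp} steps, Anderson: {k_aa} steps")
```

Each Anderson step costs more than a plain step because of the small least-squares solve, and the window `m` caps how many past iterates are held in memory. The iteration counts printed here depend on the toy problem and say nothing about the paper's benchmarks.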

💡 Key Insights:

• Higher cost per iteration is offset by fewer total iterations needed

• Memory-intensive but cacheable iterations prove more efficient

• GPU implementation significantly outperforms CPU processing

• Balances accuracy against computational efficiency

• Reduces environmental impact through optimized computation

📊 Results:

• Achieves 50-88% compute savings compared to standard methods

• Delivers 2-8.6x speedup in processing time

• Improves training accuracy from 64.7% to 96.3%

• Enhances testing accuracy from 64.2% to 79.1%

• Reduces training time from 12,000 to 1,400 seconds
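
As a quick consistency check on these figures, the reported training times can be related to the headline numbers. This assumes (it is not stated here) that the compute savings are measured as the relative reduction in training time:

$$
\text{speedup} = \frac{12{,}000\ \text{s}}{1{,}400\ \text{s}} \approx 8.57,
\qquad
\text{savings} = 1 - \frac{1{,}400\ \text{s}}{12{,}000\ \text{s}} \approx 0.883
$$

Under that assumption, the training-time reduction corresponds to the upper end of both reported ranges: roughly 8.6x faster and about 88% less compute.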

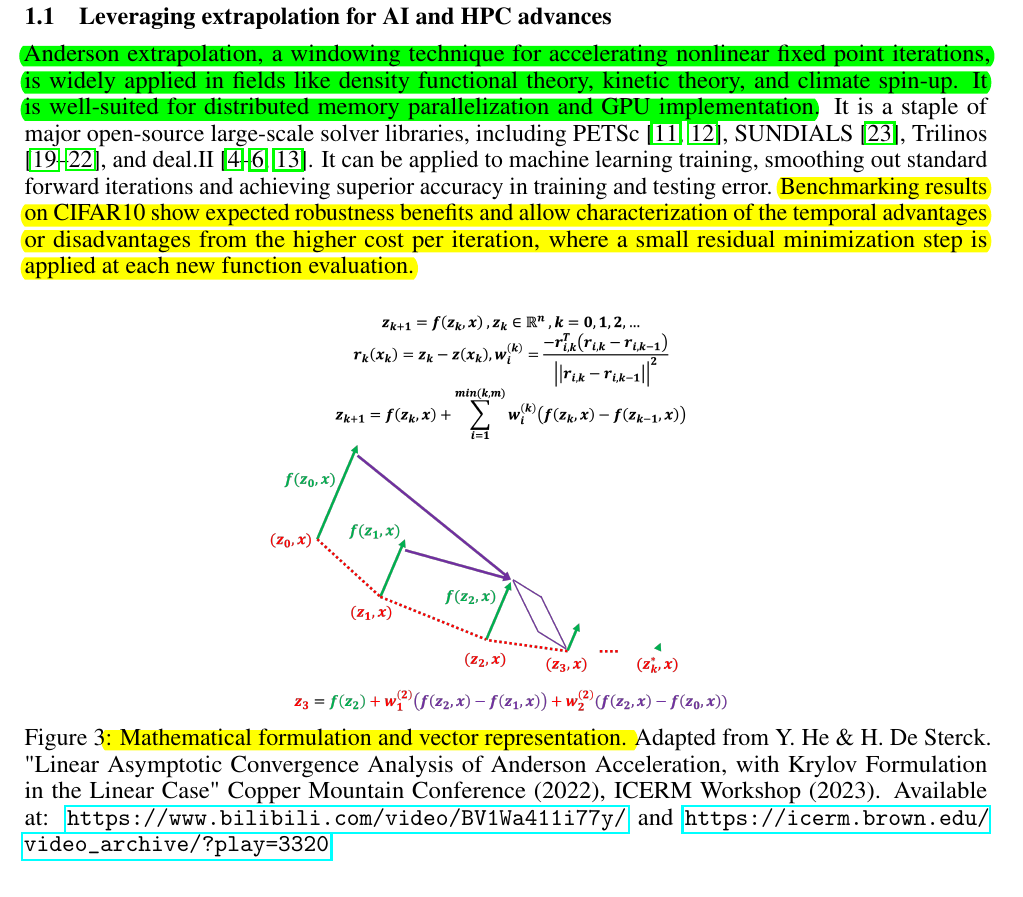

The key components of the Anderson acceleration implementation 👨‍🔧:

• Fixed-point iteration formula for finding the equilibrium state

• Linear combination of prior iterates, optimized to minimize the residual norm

• Window-based approach for historical iterations

• Mixing parameter β to balance previous and current iterations
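
These components fit together in the standard textbook form of Anderson acceleration, written out here as a sketch. The notation is an assumption for illustration and may differ from the paper's: $f$ is the layer map, $z_k$ the $k$-th iterate, $g(z) = f(z) - z$ the residual, $m$ the window size, and $m_k = \min(m, k+1)$ the number of stored iterates at step $k$.

$$
\begin{aligned}
z^\star &= f(z^\star) && \text{(fixed-point condition)} \\
\alpha^{(k)} &= \arg\min_{\alpha \,:\, \sum_i \alpha_i = 1} \Big\| \sum_{i=0}^{m_k - 1} \alpha_i \, g(z_{k-i}) \Big\|_2 && \text{(minimize residual norm over the window)} \\
z_{k+1} &= \beta \sum_{i=0}^{m_k - 1} \alpha^{(k)}_i f(z_{k-i}) + (1 - \beta) \sum_{i=0}^{m_k - 1} \alpha^{(k)}_i z_{k-i} && \text{(mixing with } \beta\text{)}
\end{aligned}
$$

With $m = 1$ and $\beta = 1$ the constraint forces $\alpha_0 = 1$, and the update reduces to plain forward iteration $z_{k+1} = f(z_k)$.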

The podcast below on this paper was generated with Google's Illuminate, a specialized tool that creates podcasts from arXiv papers only.