Agent S: An Open Agentic Framework that Uses Computers Like a Human

A significant development for AI agents: they are learning to use GUIs.

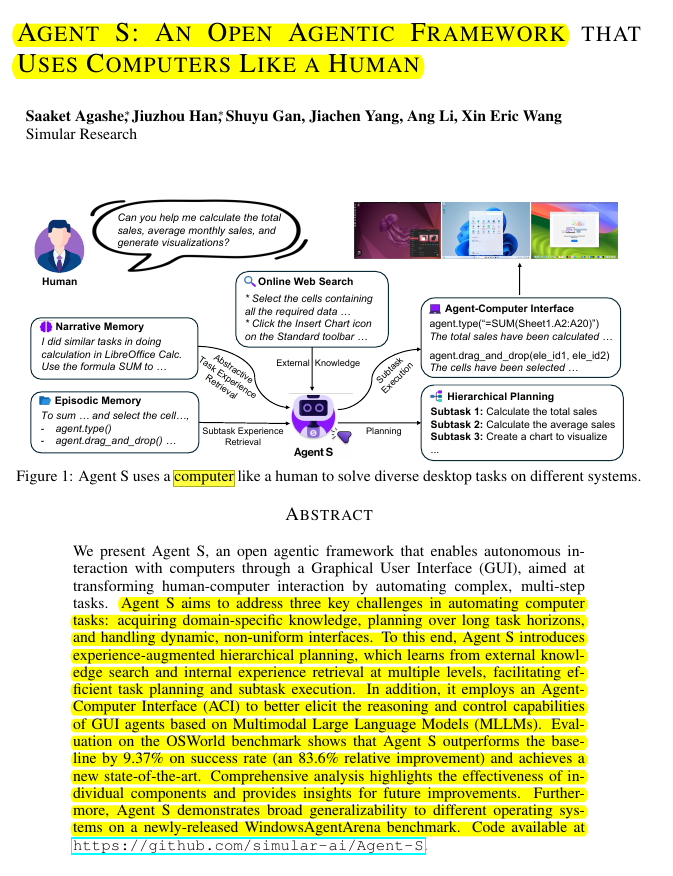

Agent S uses a computer like a human to solve diverse desktop tasks across different operating systems, breaking them into bite-sized actions and remembering what works.

Experience-augmented hierarchical planning enables Agent S to handle diverse GUI tasks with improved performance.

The Original Problem 🎯:

Automating complex computer tasks presents challenges in acquiring domain-specific knowledge, planning over long task horizons, and handling dynamic interfaces.

Solution in this Paper 🛠️:

• Experience-augmented hierarchical planning:

Manager module for task decomposition

Worker modules for subtask execution

Self-evaluator for experience summarization

• Agent-Computer Interface (ACI):

Dual-input strategy for visual understanding and element grounding

Bounded action space of language-based primitives

• Continual memory update mechanism for ongoing learning
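The continual memory update can be pictured as a store that is written after every task and queried before the next one. The following is a minimal Python sketch, not the paper's implementation. `ExperienceMemory`, `update`, `retrieve`, the 0.2 threshold and the word-overlap (Jaccard) similarity are all hypothetical choices for illustration, standing in for whatever retrieval method Agent S actually uses.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


def word_set(text: str) -> Set[str]:
    """Lower-cased set of words, used for a crude similarity measure."""
    return set(text.lower().split())


def jaccard(a: str, b: str) -> float:
    """Jaccard overlap between the word sets of two strings (0.0 to 1.0)."""
    sa, sb = word_set(a), word_set(b)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


@dataclass
class ExperienceMemory:
    """Hypothetical store mapping a task description to a summary of what worked."""

    entries: Dict[str, str] = field(default_factory=dict)

    def update(self, task: str, summary: str) -> None:
        # Continual update: a newer summary for the same task replaces the older one.
        self.entries[task] = summary

    def retrieve(self, query: str, threshold: float = 0.2) -> Optional[str]:
        # Return the summary of the most similar stored task, or None if no
        # stored task is at least `threshold` similar to the query.
        best_task, best_score = None, 0.0
        for task in self.entries:
            score = jaccard(query, task)
            if score > best_score:
                best_task, best_score = task, score
        if best_task is None or best_score < threshold:
            return None
        return self.entries[best_task]


memory = ExperienceMemory()
memory.update("change the font size in a document editor",
              "select the text, then edit the font-size box in the toolbar")
print(memory.retrieve("change the font size in a slide editor"))  # reuses the stored summary
print(memory.retrieve("send an email"))                            # None
```

The point of the sketch is the shape of the loop: experience written after one task becomes available to a similar, not identical, task later.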

Key Insights from this Paper 💡:

• Combining external knowledge and internal experience enhances task planning

• A structured interface improves multimodal large language model (MLLM) reasoning for GUI control

• Hierarchical planning supports long-horizon workflows

• Continual learning enables adaptation to new tasks and environments

Results 📊:

• OSWorld benchmark: 20.58% success rate (83.6% relative improvement over baseline)

• Consistent improvements across five computer task categories

• WindowsAgentArena: 18.2% success rate (36.8% relative improvement over the baseline, without explicit adaptation to Windows)

• Ablation studies confirm effectiveness of individual components
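A relative improvement divides the gain by the baseline, so the reported figures also pin down the baseline success rates they were measured against. The short Python check below recovers those implied baselines from the numbers quoted above. It assumes the WindowsAgentArena percentage is a relative gain, like the OSWorld one; the function names are illustrative.

```python
def relative_improvement(new: float, baseline: float) -> float:
    """Relative gain of `new` over `baseline` as a fraction (0.836 means +83.6%)."""
    return (new - baseline) / baseline


def implied_baseline(new: float, rel_gain: float) -> float:
    """Invert relative_improvement: the baseline that yields `rel_gain`."""
    return new / (1.0 + rel_gain)


# Success rates (percent) and relative gains as reported in the results above.
print(f"OSWorld implied baseline: {implied_baseline(20.58, 0.836):.2f}%")  # 11.21%
print(f"WindowsAgentArena implied baseline: {implied_baseline(18.2, 0.368):.1f}%")  # 13.3%
```

So an 83.6% relative gain on OSWorld corresponds to roughly 9.4 percentage points in absolute terms, which is why the absolute success rate still looks modest.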

🤖 Agent S addresses three main challenges in automating computer tasks:

Acquiring domain-specific knowledge for diverse applications

Planning over long task horizons

Handling dynamic, non-uniform interfaces

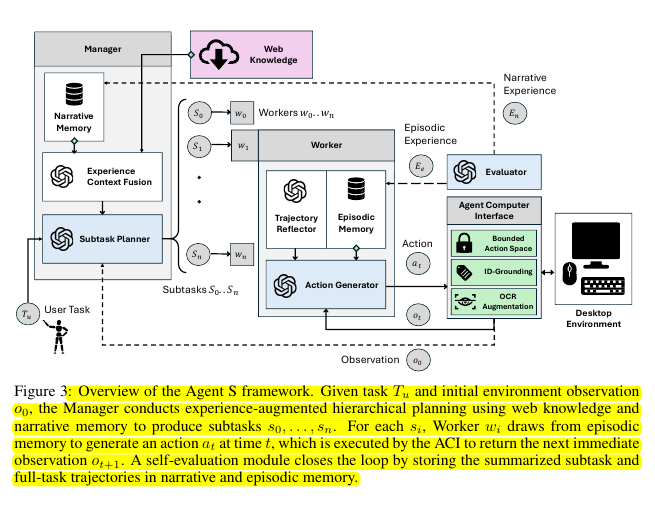

🧠 Agent S implements experience-augmented hierarchical planning through:

A Manager module that decomposes complex tasks into subtasks using web knowledge and narrative memory

Worker modules that execute subtasks using episodic memory and trajectory reflection

A self-evaluator that summarizes experiences as textual rewards, updating narrative and episodic memories
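The Manager/Worker/self-evaluator loop can be sketched in a few lines of Python to show which memory each role reads and writes. Everything here is an assumption for illustration: the class and function names are hypothetical, the MLLM calls are replaced by simple string rules, web search is replaced by a string passed in, and exact-key dictionary lookup stands in for similarity-based memory retrieval.

```python
from typing import Dict, List


class Manager:
    """Splits a task into subtasks. Stubbed: the real module queries an MLLM."""

    def plan(self, task: str, web_knowledge: str,
             narrative_memory: Dict[str, str]) -> List[str]:
        # Prefer a remembered task-level summary; otherwise use web knowledge.
        source = narrative_memory.get(task, web_knowledge)
        return [step.strip() for step in source.split(";") if step.strip()]


class Worker:
    """Executes one subtask. Stubbed: emits one labelled action per subtask."""

    def execute(self, subtask: str, episodic_memory: Dict[str, str]) -> List[str]:
        # In the real system the episodic hint would go into the MLLM prompt;
        # here we only record whether a hint was available.
        origin = "experience" if subtask in episodic_memory else "fresh"
        return [f"{subtask} [{origin}]"]


class SelfEvaluator:
    """Summarizes finished work as text (the 'textual reward')."""

    def summarize_subtask(self, subtask: str, trajectory: List[str]) -> str:
        return f"{subtask}: {len(trajectory)} action(s)"

    def summarize_task(self, subtasks: List[str]) -> str:
        return "; ".join(subtasks)


def run_task(task: str, web_knowledge: str,
             narrative_memory: Dict[str, str],
             episodic_memory: Dict[str, str]) -> List[str]:
    manager, worker, evaluator = Manager(), Worker(), SelfEvaluator()
    actions: List[str] = []
    subtasks = manager.plan(task, web_knowledge, narrative_memory)
    for subtask in subtasks:
        trajectory = worker.execute(subtask, episodic_memory)
        actions.extend(trajectory)
        # Subtask-level experience feeds the Workers' episodic memory.
        episodic_memory[subtask] = evaluator.summarize_subtask(subtask, trajectory)
    # Task-level experience feeds the Manager's narrative memory.
    narrative_memory[task] = evaluator.summarize_task(subtasks)
    return actions


narrative: Dict[str, str] = {}
episodic: Dict[str, str] = {}
web = "open settings; select display; set dark mode"
print(run_task("enable dark mode", web, narrative, episodic))  # every action tagged [fresh]
print(run_task("enable dark mode", "", narrative, episodic))   # every action tagged [experience]
```

On the second run the Manager plans from narrative memory instead of web knowledge, and every Worker finds an episodic entry for its subtask. That reuse of past experience is the effect the planning scheme is meant to produce.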

Agent-Computer Interface (ACI) improves agent performance

The ACI is an abstraction layer that:

Uses a dual-input strategy: visual input for understanding environmental changes and an image-augmented accessibility tree for precise element grounding

Defines a bounded action space of language-based primitives conducive to Multimodal Large Language Model reasoning

Generates environment transitions at the right temporal resolution, so the agent can observe immediate feedback after each action
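Below is a minimal Python sketch of two ACI ideas. The first is a bounded action space, where the model's output is accepted only if it is one of a few whitelisted primitive calls. The second is element grounding, where an element ID from the accessibility tree is turned into screen coordinates. The primitive names, their arguments, the tree format and the low-level command strings are hypothetical simplifications, not Agent S's actual action API. The screenshot input and the image augmentation of the tree are not modeled. Each call translates exactly one primitive, so the agent gets a fresh observation after every step.

```python
import ast
from typing import Any, Dict, List, Tuple

# Hypothetical accessibility tree: element ID -> bounding box (x, y, width, height).
Tree = Dict[int, Tuple[int, int, int, int]]

# Hypothetical, simplified action space: primitive name -> number of arguments.
PRIMITIVES: Dict[str, int] = {"click": 1, "type": 2, "hotkey": 1, "done": 0}


def parse_action(action: str) -> Tuple[str, List[Any]]:
    """Parse a call such as "type(7, 'hi')", rejecting anything outside PRIMITIVES."""
    try:
        node = ast.parse(action, mode="eval").body
    except SyntaxError as exc:
        raise ValueError(f"unparseable action: {action!r}") from exc
    if not isinstance(node, ast.Call) or not isinstance(node.func, ast.Name):
        raise ValueError(f"not a primitive call: {action!r}")
    name = node.func.id
    if name not in PRIMITIVES:
        raise ValueError(f"unknown primitive: {name}")
    if node.keywords or len(node.args) != PRIMITIVES[name]:
        raise ValueError(f"wrong number of arguments for {name}")
    # literal_eval accepts only literals, so the model's output is never executed.
    return name, [ast.literal_eval(arg) for arg in node.args]


def ground(element_id: int, tree: Tree) -> Tuple[int, int]:
    """Map an element ID to the centre of its bounding box."""
    x, y, w, h = tree[element_id]
    return x + w // 2, y + h // 2


def to_low_level(action: str, tree: Tree) -> str:
    """Translate exactly one primitive into one low-level step."""
    name, args = parse_action(action)
    if name == "click":
        x, y = ground(args[0], tree)
        return f"mouse_click({x}, {y})"
    if name == "type":
        x, y = ground(args[0], tree)
        return f"mouse_click({x}, {y}); keyboard_write({args[1]!r})"
    if name == "hotkey":
        return f"key_combo({args[0]!r})"
    return "stop"  # name == "done"


tree: Tree = {3: (100, 200, 80, 30), 7: (10, 50, 300, 40)}
print(to_low_level("click(3)", tree))          # mouse_click(140, 215)
print(to_low_level("type(7, 'hello')", tree))  # mouse_click(160, 70); keyboard_write('hello')
```

Parsing with `ast` and `ast.literal_eval` rather than `eval` keeps the action space bounded: a call outside the whitelist, or a non-literal argument, raises `ValueError` instead of running.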