🤯 AGI Is Closer Than You Think And AI Agents Will Join The Workforce In 2025, Says Sam Altman

OpenAI's AGI claims, NVIDIA's CES dominance with a personal AI supercomputer, and open-source Cosmos models that could reshape AI training for robotics.

Read time: 8 min 10 seconds

⚡In today’s Edition (7-Jan-2025):

🤯 OpenAI CEO Sam Altman: ‘We Know How To Build AGI’

🔥 NVIDIA was on fire: CES 2025, Top Takeaways

📡NVIDIA Unveils A $3,000 Personal AI Supercomputer That Is 1,000x The Power Of Your Laptop And Will Let You Ditch The Data Center

🛠️ NVIDIA Makes Cosmos World Foundation Models Openly Available to Physical AI Developer Community

🧑🎓 Deep Dive Tutorial

📚 How to use the GPT-4o Realtime API for speech and audio

🤯 OpenAI CEO Sam Altman: ‘We Know How To Build AGI’

🎯 The Brief

Sam Altman said OpenAI is now confident it knows how to build AGI, and predicted that AI agents capable of multi-domain tasks may begin to join the workforce in 2025. This marks a shift in focus from research to product, with the aim of creating agents that significantly boost productivity across industries. Altman also pointed to superintelligence as OpenAI's next goal, as a way to accelerate scientific breakthroughs and innovation.

⚙️ The Details

→ In his blog post, Sam Altman wrote: “We believe that, in 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies.”

→ OpenAI’s latest advances in reasoning and decision-making underpin its confidence that it can build AGI, which goes beyond the capabilities of existing LLMs. The company plans to get there through iterative deployment: releasing models into the real world and refining them along the way.

→ These workforce agents are expected to handle tasks like data analysis, iterative problem-solving, and autonomous decision-making, with the goal of transforming productivity across industries.

→ OpenAI envisions superintelligence—far surpassing human capabilities—to accelerate scientific innovation.

→ Interestingly, Dario Amodei, CEO of Anthropic, shares a similar view. In a recent interview with Lex Fridman, Amodei predicted that AGI could emerge by 2026 or 2027, highlighting the rapid acceleration of AI capabilities.

🔥 NVIDIA was on fire: CES 2025, Top Takeaways

🎯 The Brief

Nvidia CEO Jensen Huang unveiled new AI and computing advancements at CES, including the GB10 superchip, the GeForce RTX 50 series GPUs, and the Project DIGITS AI desktop. These releases underscore Nvidia’s strategy to dominate AI across industries, from gaming to robotics and autonomous systems. The RTX 5090 GPU offers 2x the performance of its predecessor, while the Cosmos platform simulates real-world conditions for developing AI-powered robots and vehicles.

⚙️ The Details

→ The GB10 superchip is a downsized version of the GB200, pairing one Blackwell GPU with a Grace CPU. It powers Project DIGITS, an AI desktop with 128GB memory and 4TB storage, targeting researchers.

→ The RTX 5090 GPU, part of the new GeForce RTX 50 series, delivers twice the performance of the RTX 4090. The highlight was the GeForce RTX 5070, which Huang said matches the performance of the previous generation’s RTX 4090 but costs just $549, far less than the 4090’s $1,599 launch price. That comparison depends on AI: it assumes the new DLSS AI frame generation is turned on.

→ The Cosmos platform uses world foundation models (WFMs) for virtual robot and vehicle training. Companies like Toyota, Continental, and Aurora use Nvidia’s automotive suite for autonomous driving tech.

→ Nvidia introduced a video analysis AI blueprint on the Metropolis platform that can summarize video at up to 30x real-time speed. The technology is aimed at industrial processes as well as sports and entertainment analytics.

→ The agentic AI blueprints enable enterprises to build autonomous AI systems for tasks like PDF summarization and real-time insights from videos. These “knowledge robots,” as Nvidia describes them, can analyze large amounts of data, summarize information from videos and PDFs, and take actions based on what they learn. To make this happen, Nvidia partnered with five leading AI companies — CrewAI, Daily, LangChain, LlamaIndex, and Weights & Biases.

→ Jensen Huang (CEO of Nvidia) said at CES 2025: “The IT department of every company is going to be the HR department of AI agents in the future.” He also called AI agents “a multi-trillion-dollar opportunity” and declared that “the age of AI Agentics is here.”

→ Another major leap demonstrated at CES was DLSS 4, Nvidia’s latest AI-powered upscaling, capable of generating multiple frames at once. In a demonstration, DLSS 4 rendered a scene at 247 frames per second (FPS)—over eight times faster than without AI—while keeping latency at just 34 milliseconds.

→ In a magical demo, Jensen showed how NVIDIA’s new cards generate 3 additional frames with AI for every frame that is traditionally rendered, all at 4K. Across those four frames, the traditional ray-tracing pipeline computes only about 2 million of the roughly 33 million pixels; the rest are predicted by AI.

→ In other words, the new cards use neural networks to generate 90+% of the pixels in your games. Traditional ray-tracing renders well under 10% of them, a kind of "rough sketch", and a generative model fills in the rest of the fine detail. In one forward pass. In real time. It's a paradigm shift: AI is the new graphics.
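The "2 million out of 33 million pixels" figure from the demo can be reproduced with simple arithmetic. The sketch below assumes a 4K output (3840×2160), one traditionally rendered frame for every four displayed frames, and an internal render resolution of 1080p (DLSS Super Resolution's Performance mode at 4K). The keynote did not state which upscaling mode was used, so treat the 1080p figure as an assumption.

```python
# Back-of-envelope pixel budget for DLSS 4 multi-frame generation at 4K.
OUT_W, OUT_H = 3840, 2160        # 4K UHD output resolution
RENDER_W, RENDER_H = 1920, 1080  # assumption: 1080p internal render (Performance mode)
FRAMES_PER_GROUP = 4             # 1 rendered frame + 3 AI-generated frames


def pixel_budget():
    """Return (rendered pixels, displayed pixels, share of pixels produced by AI)."""
    displayed = OUT_W * OUT_H * FRAMES_PER_GROUP
    # Only one frame in the group is rendered, and at reduced resolution.
    rendered = RENDER_W * RENDER_H
    return rendered, displayed, 1 - rendered / displayed


rendered, displayed, ai_share = pixel_budget()
print(f"rendered:  {rendered:,} px")   # 2,073,600
print(f"displayed: {displayed:,} px")  # 33,177,600
print(f"AI-generated share: {ai_share:.1%}")
```

Under these assumptions only 1 pixel in 16 is traditionally rendered (about 6%), which is where the "90+%" AI-generated figure comes from.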

Key product announcements from the keynote:

RTX 5070: Offers the performance of the RTX 4090 at $549, dramatically undercutting the previous generation’s high-end price.

RTX 5070 Ti: Delivers 4090-like performance at $749, featuring 1,406 AI TOPS and 16GB of GDDR7 memory.

RTX 5080: Mid-range option with 1,800 AI TOPS and 16GB of GDDR7 memory, priced at $999.

RTX 5090: The top-tier model with 3,404 AI TOPS and 32GB of GDDR7 memory, retailing for $1,999.

RTX Blackwell laptops: Powered by RTX Blackwell GPUs, these offer 40% longer battery life and twice the performance at half the power, priced from $1,299 to $2,899.

📡NVIDIA Unveils A $3,000 Personal AI Supercomputer That Is 1,000x The Power Of Your Laptop And Will Let You Ditch The Data Center

🎯 The Brief

NVIDIA has unveiled Project DIGITS, a $3,000 personal AI supercomputer built around the GB10 Grace Blackwell Superchip. It delivers up to 1 petaflop of AI performance and can run models of up to 200 billion parameters on a desktop. The aim is to make advanced AI accessible to developers, researchers, and students without depending on expensive cloud compute.

⚙️ The Details

→ Project DIGITS is compact enough to sit on a desk, roughly the size of a Mac Mini, and runs from a standard power outlet. It offers 128GB of unified memory and 4TB of NVMe storage.

→ The GB10 Superchip pairs a Blackwell GPU (with CUDA and Tensor Cores) with a 20-core Arm-based Grace CPU, delivering up to 1 petaflop of AI performance at FP4 precision in a power-efficient package.

→ Two systems can be linked to run models of up to 405 billion parameters, the scale of today’s top-tier open LLMs.

→ It runs NVIDIA DGX OS and supports PyTorch, Python, NeMo, and RAPIDS, with access to NVIDIA’s NGC catalog and AI Enterprise software for seamless local-to-cloud deployment.

→ The system aims to democratize supercomputer-level AI for prototyping and experimentation across industries.
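Why 128GB is enough for a 200-billion-parameter model comes down to the FP4 precision behind the 1-petaflop rating. The rough sketch below assumes the weights are stored at 4 bits per parameter. It counts weights only and ignores the KV cache, activations, and OS overhead, so real headroom is smaller.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """GB (10**9 bytes) needed just to hold the weights: params * bits / 8."""
    return params_billion * bits_per_param / 8


DIGITS_MEMORY_GB = 128  # unified memory per Project DIGITS unit

# (model size in billions of parameters, number of linked DIGITS units)
for params, units in [(200, 1), (405, 2)]:
    fp4 = weight_memory_gb(params, 4)    # 4-bit weights, matching the FP4 rating
    fp16 = weight_memory_gb(params, 16)  # 16-bit weights, for comparison
    capacity = DIGITS_MEMORY_GB * units
    print(f"{params}B params: FP4 {fp4:.1f} GB, FP16 {fp16:.1f} GB, "
          f"capacity {capacity} GB across {units} unit(s), FP4 fits: {fp4 < capacity}")
```

At 16-bit precision, the same 200B model would need about 400 GB for its weights alone. This is why low-precision formats are central to running models of this size on a desktop.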

🛠️ NVIDIA Makes Cosmos World Foundation Models Openly Available to Physical AI Developer Community

🎯 The Brief

Cosmos is an open-source, open-weight video world model trained on 20 million hours of video, offering capabilities like text-to-video generation and physics-based synthetic data creation. It includes pre-trained models under permissive licenses for commercial use, advanced tokenizers, and an AI-accelerated data pipeline.

Cosmos comes in two flavors, diffusion (continuous tokens) and autoregressive (discrete tokens), and supports two generation modes: text→video and text+video→video.
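Here is a toy illustration of the continuous-vs-discrete distinction. A continuous token is simply a latent vector of floats, the kind of input a diffusion model works with. A discrete token is an integer ID from a fixed vocabulary, which is what an autoregressive model predicts (like a word ID in an LLM). The quantizer below is a simplified take on finite scalar quantization (FSQ). The per-dimension levels are an illustrative assumption and are not taken from NVIDIA's tokenizer code.

```python
import math

# Hypothetical per-dimension quantization levels; vocabulary = product of levels.
LEVELS = (8, 8, 8, 5, 5, 5)
VOCAB_SIZE = math.prod(LEVELS)  # 64,000 possible token IDs


def to_discrete_token(latent, levels=LEVELS):
    """Quantize a continuous latent vector into a single integer token ID."""
    if len(latent) != len(levels):
        raise ValueError("latent must have one value per quantization dimension")
    token_id, base = 0, 1
    for z, n_levels in zip(latent, levels):
        bounded = (math.tanh(z) + 1) / 2                   # squash into [0, 1]
        code = min(int(bounded * n_levels), n_levels - 1)  # pick one of n_levels bins
        token_id += code * base                            # mixed-radix packing
        base *= n_levels
    return token_id


continuous_token = [0.3, -1.2, 0.0, 2.5, -0.4, 0.9]   # what a diffusion model would consume
discrete_token = to_discrete_token(continuous_token)  # what an autoregressive model would predict
print(continuous_token, "->", discrete_token, f"(vocab size {VOCAB_SIZE:,})")
```

Quantizing discards detail, which is the usual trade-off. Discrete tokens suit next-token prediction, while continuous latents preserve more visual fidelity for diffusion.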

⚙️ The Details

→ Cosmos WFMs are neural networks that can predict and generate physics-aware videos of a virtual environment's future state. They are trained on 9,000 trillion tokens from 20 million hours of real-world data, including human interactions, industrial environments, robotics, and driving scenarios.

→ To put that in perspective, 20 million hours is roughly 2,300 years of footage: like watching YouTube 24/7, non-stop, since the days of ancient Rome.

→ The models come in three categories: Nano, Super, and Ultra, each optimized for different performance needs. NVIDIA also introduced NeMo Curator, an accelerated video processing pipeline that can process 20 million hours of video in 14 days using NVIDIA Blackwell GPUs.

→ The Cosmos Tokenizer is a visual data compression tool that offers 8x more compression than state-of-the-art methods and 12x faster processing speed. Cosmos is available under an open model license on Hugging Face and the NVIDIA NGC catalog. Developers can freely use, customize, and fine-tune the models for their specific needs.

→ Developers can access these tokenizers, available under NVIDIA’s open model license, via Hugging Face and GitHub. Developers adopting Cosmos can use NVIDIA’s DGX Cloud for an easy way to deploy Cosmos models, and can also harness model training and fine-tuning capabilities offered by NVIDIA’s NeMo framework.

→ NVIDIA also introduced Llama Nemotron large language models and Cosmos Nemotron vision language models for enterprise use in sectors like healthcare, finance, and manufacturing.

→ This is significant because physical AI has a big data problem, and Cosmos democratizes access to advanced world models, enabling developers of all sizes to build sophisticated physical AI applications with less reliance on real-world data. The Cosmos platform includes generative world foundation models (WFMs), video tokenizers, guardrails, and an accelerated data processing pipeline.
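The headline numbers in this section can be sanity-checked with a few lines of arithmetic. The tokens-per-second figure treats the 9,000 trillion tokens as a single pass over the 20 million hours, which NVIDIA has not stated. It is only an average, not a description of how the Cosmos tokenizer actually allocates tokens.

```python
# Back-of-envelope checks on the Cosmos figures quoted above.
VIDEO_HOURS = 20_000_000          # training video, per NVIDIA
TRAINING_TOKENS = 9_000 * 10**12  # "9,000 trillion tokens"
CURATION_DAYS = 14                # NeMo Curator processing time on Blackwell GPUs
HOURS_PER_YEAR = 365.25 * 24      # 8,766 hours in an average year

years_of_viewing = VIDEO_HOURS / HOURS_PER_YEAR
# Simple average over the whole corpus (assumes one pass over the video).
tokens_per_video_second = TRAINING_TOKENS / (VIDEO_HOURS * 3600)
# Hours of video processed per wall-clock hour during curation.
curation_speedup = VIDEO_HOURS / (CURATION_DAYS * 24)

print(f"Non-stop viewing time: {years_of_viewing:,.0f} years")
print(f"Average tokens per second of video: {tokens_per_video_second:,.0f}")
print(f"Curation throughput: {curation_speedup:,.0f} video-hours per hour")
```

This works out to about 2,280 years of viewing, an average of 125,000 tokens per second of video, and roughly 59,500 hours of video curated per wall-clock hour.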

🧑🎓 Deep Dive Tutorial

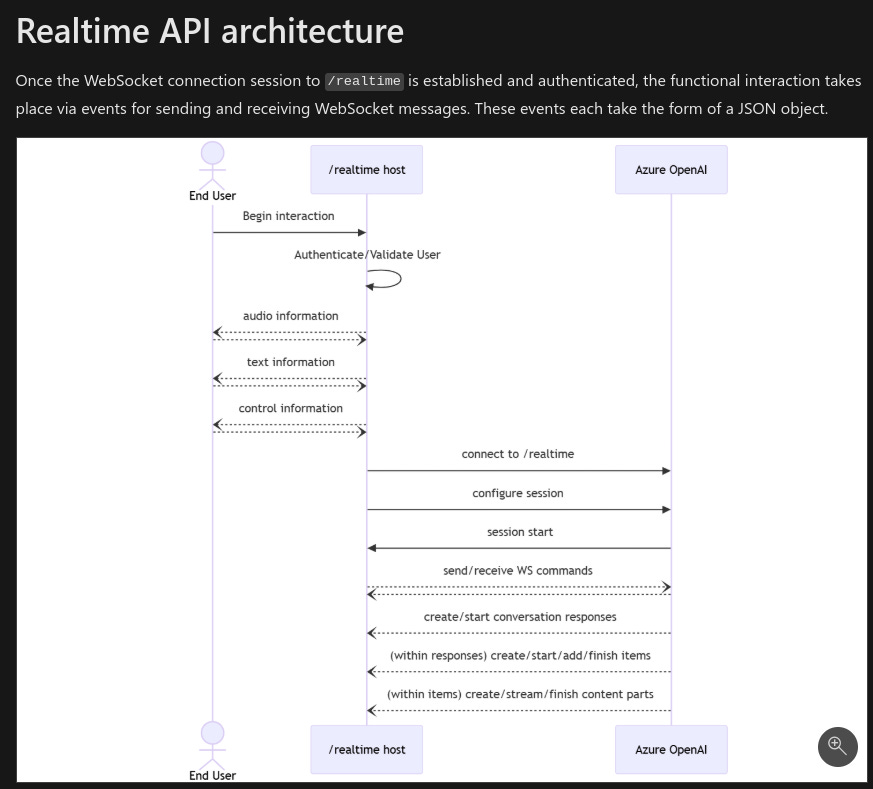

📚 How to use the GPT-4o Realtime API for speech and audio

This blog explains Azure’s GPT-4o Realtime API for real-time speech interactions.

🛠️ Key technical takeaways

WebSocket-based architecture for real-time, bidirectional speech-to-text and text-to-speech communication.

Session configurations allow customization of audio formats, voice activity detection (VAD), and transcription models.

Two modes for turn detection: client-managed mode for precise control and server VAD for automatic handling.

Real-time session events such as `session.update`, `response.create`, and `conversation.item.truncate` to manage input/output states.

Support for server-sent incremental updates, streaming both audio and text simultaneously for low-latency interactions.

Detailed usage metrics that break down text and audio tokens, to help optimize API usage.

Authentication options include Microsoft Entra ID and API keys for secure resource connections.
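To make the event flow concrete, here is a minimal sketch that only builds the JSON client events and opens no network connection. The event and field names follow the public OpenAI Realtime API reference, which the Azure preview mirrors. Exact fields and defaults may differ in your deployment, so check them against the Azure documentation. The VAD numbers and the item ID are placeholder values.

```python
import base64
import json


def session_update(server_vad: bool) -> str:
    """Build a session.update event. turn_detection=None means the client decides when a turn ends."""
    turn_detection = (
        # Placeholder VAD settings; tune for your audio.
        {"type": "server_vad", "threshold": 0.5, "prefix_padding_ms": 300, "silence_duration_ms": 500}
        if server_vad
        else None
    )
    return json.dumps({
        "type": "session.update",
        "session": {
            "modalities": ["text", "audio"],
            "input_audio_format": "pcm16",
            "output_audio_format": "pcm16",
            "input_audio_transcription": {"model": "whisper-1"},
            "turn_detection": turn_detection,
        },
    })


def append_audio(pcm16_bytes: bytes) -> str:
    """Stream a chunk of raw PCM16 audio into the input buffer (base64-encoded)."""
    return json.dumps({
        "type": "input_audio_buffer.append",
        "audio": base64.b64encode(pcm16_bytes).decode("ascii"),
    })


def commit_and_respond() -> list[str]:
    """Client-managed turn detection: commit the buffered audio, then request a response."""
    return [json.dumps({"type": "input_audio_buffer.commit"}), json.dumps({"type": "response.create"})]


def truncate_assistant_audio(item_id: str, audio_end_ms: int) -> str:
    """After a user interruption, trim the assistant's audio item to what was actually played."""
    return json.dumps({
        "type": "conversation.item.truncate",
        "item_id": item_id,
        "content_index": 0,
        "audio_end_ms": audio_end_ms,
    })


for event in [session_update(server_vad=False), append_audio(b"\x00\x00" * 4), *commit_and_respond()]:
    print(event)
```

With server VAD, the service detects when the user stops speaking, so the client mostly streams `input_audio_buffer.append` events. With `turn_detection` set to null, the client sends the commit and `response.create` itself, which gives precise control over turn boundaries.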