🧑🔬 AI now predicts 130 diseases from 1 night of sleep 🛌.

Sleep-based disease prediction by AI, Nvidia's Vera-Rubin GPU, file-system-style context, Cursor’s smarter agents, Atlas goes to market, and xAI raises at $20B.

Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (7-Jan-2026):

🧑🔬 AI now predicts 130 diseases from 1 night of sleep 🛌.

🏆 Boston Dynamics unveiled a product version of its fully electric Atlas humanoid at CES 2026 and said manufacturing starts immediately.

📡“Inside the NVIDIA Rubin Platform: Six New Chips, One AI Supercomputer”

🛠️ Nvidia’s Vera-Rubin GPU makes one thing clear: the company isn’t after one unbeatable chip anymore. Their focus has turned to the full stack strategy.

🧠 New paper says the best way to manage AI context is to treat everything like a file system.

🎉 xAI completed its upsized Series E funding round at $20B

🗞️ Cursor is rolling out a way for coding agents to fetch context only when they need it, instead of stuffing everything into the prompt.

🧑🔬 AI now predicts 130 diseases from 1 night of sleep 🛌.

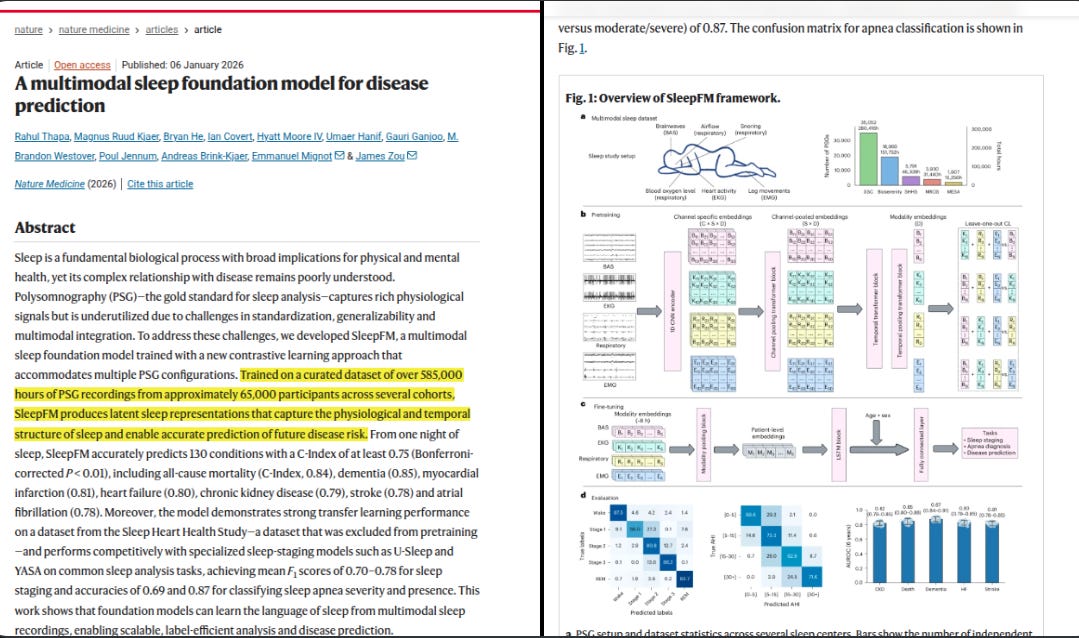

Polysomnography (PSG) records brain, heart, muscle, and breathing signals during sleep, but most AI only stages sleep or flags apnea. SleepFM pretrains on 585,000h of PSG from 65,000 people and turns 1 night into a general sleep embedding.

From 1 night, 130 diseases hit Harrell’s concordance index (C-Index) of at least 0.75, which checks whether higher predicted risk happens sooner, including mortality 0.84 and dementia 0.85. Older supervised models use less data, need expert labels, and break when clinics record different channel sets.

SleepFM resamples to 128Hz, makes 5s tokens, uses 1D convolutions, pools channels with attention, then a transformer summarizes time. Leave-one-out contrastive learning trains each modality to match the average of the others, so missing modalities hurt less.

🏆 Boston Dynamics unveiled a product version of its fully electric Atlas humanoid at CES 2026 and said manufacturing starts immediately.

The main shift is from the older hydraulic Atlas research platform to a production-oriented electric design that is meant to run predictable factory work at scale. Atlas is a general industrial worker for material handling and order fulfillment, with “learn once, replicate across the fleet” behavior built into the product story.

Orbit is positioned as the control layer that connects Atlas into manufacturing execution systems (MES) and warehouse management systems (WMS), so tasks and fleet metrics can live inside existing plant software. Operators can run Atlas autonomously, teleoperate it, or steer it via tablet, which keeps a single hardware platform usable across different maturity levels of autonomy.

The published specs include 56 degrees of freedom, fully rotational joints, 2.3m reach, up to 50kg lift, and operation from -20C to 40C. The safety and workflow stack includes human detection, fenceless guarding, and integration points like barcode scanners and RFID.

Boston Dynamics also says Google DeepMind foundation models will be integrated for broader task learning, while Hyundai Mobis supplies actuators to harden the supply chain. Hyundai separately described a path to 30,000 robots per year and a first U.S. plant rollout from 2028, while cost and pricing remain undisclosed.

This looks strongest where factories need a humanoid that plugs into fleet ops software and repeatable parts logistics, not just a one-off demo. No pricing details so far, but Hyundai is gearing up to integrate tens of thousands of Boston Dynamics robots into its manufacturing operations.

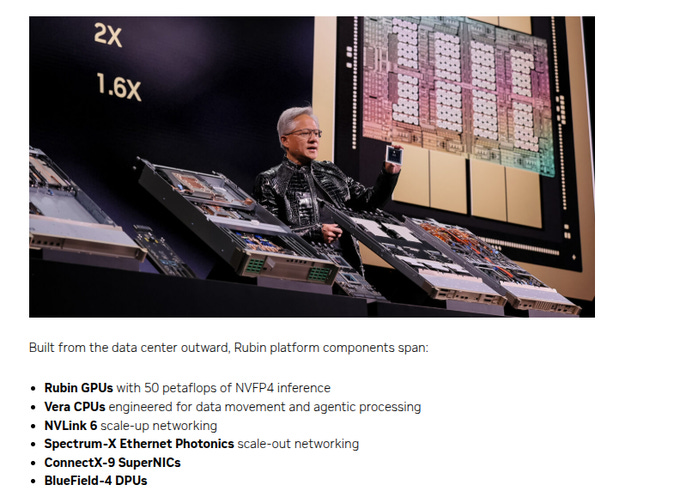

📡“Inside the NVIDIA Rubin Platform: Six New Chips, One AI Supercomputer”

Key takeaways from Nvidia’s official blog

To train a 10T MoE in 1 month, Rubin would use 75% fewer GPUs than Blackwell. For inference, at the same power draw, Rubin can serve about 10x more tokens per second. If a 1 MW cluster delivered 1 million tokens per second before, Rubin would deliver about 10 million.

For cost, the price to serve 1 million tokens could drop by about 10x with Rubin.

The Vera Rubin NVL72 integrates 6 new chips so compute, networking, and control act as 1 system of compute: Rubin GPU, Vera CPU, NVLink 6 switch, ConnectX-9 SuperNIC, BlueField-4 DPU, and Spectrum-6 Ethernet.

Vera CPU is a data movement engine, not just a host. It has 88 Olympus cores, Spatial Multithreading, up to 1.2 TB/s memory bandwidth, up to 1.5 TB LPDDR5X, and 1.8 TB/s coherent NVLink-C2C to GPUs. Compared to Grace, it doubles C2C bandwidth and adds confidential computing.

Rubin GPU raises sustained throughput across compute, memory, and communication. It offers 224 SMs with 6th gen Tensor Cores, NVFP4 inference up to 50 PFLOPS, FP8 training 17.5 PFLOPS, and HBM4 with up to 288 GB and about 22 TB/s bandwidth.

NVLink 6 doubles per GPU scale up bandwidth to 3.6 TB/s and enables uniform all to all across 72 GPUs. NVLink 6 also adds SHARP in network compute, reducing all reduce traffic up to 50% and improving tensor parallel time up to 20% for large jobs.

At the network edge, ConnectX-9 provides 1.6 Tb/s per GPU with programmable congestion control and endpoint isolation.

BlueField-4 offloads infrastructure with a 64 core Grace CPU and 800 Gb/s networking, introduces ASTRA for trusted control, and powers “Inference Context Memory Storage,” enabling shared KV cache reuse that can boost tokens per second up to 5x and power efficiency up to 5x.

Spectrum-6 switches, part of Spectrum-X Ethernet Photonics, double per chip bandwidth to 102.4 Tb/s and use co packaged optics for about 5x better network power efficiency and 64x better signal integrity, tuned for bursty MoE traffic.

Operations add Mission Control, rack scale RAS with zero downtime self test, and full stack confidential computing with NRAS attestation.

Energy features target “tokens per watt.” NVL72 uses warm water DLC at 45°C, rack level power smoothing with about 6x more local energy buffering than Blackwell Ultra, and grid aware DSX controls, enabling up to 30% more GPU capacity in the same power envelope.

Rubin pushes extreme codesign across Rubin GPUs, Vera CPUs, NVLink6, Spectrum-X Ethernet Photonics, and BlueField-4 data processing units (DPUs).

NVIDIA rates Rubin GPUs at 50petaflops of NVFP4 inference, a low-precision mode, and uses that in its 10x lower token-cost claim. For long contexts, the Inference Context Memory Storage Platform adds an AI-native key-value cache (KV-cache), the attention state reused across tokens, and NVIDIA claims 5x higher tokens per second plus 5x better cost and power efficiency.

🛠️ OPINION: Nvidia’s Vera-Rubin GPU makes one thing clear: the company isn’t after one unbeatable chip anymore. Their focus has turned to the full stack strategy.

Rubin clearly shows that Nvidia is no longer chasing one ultimate chip anymore.

It’s all about the full stack. The six Rubin chips are built to sync like parts of a single machine.

The “product” is basically a rack-scale computer built from 6 different chips that were designed together: the Vera Central Processing Unit, Rubin Graphics Processing Unit, NVLink 6 switch, ConnectX-9 SuperNIC, BlueField-4 data processing unit, and Spectrum-6 Ethernet switch.

We are seeing the same kind of strategy from AMD and Huawei. In massive-scale data-center that matters, since the slowest piece always calls the shots.

AMD is doing the same move, just with a different vibe. Helios is AMD packaging a rack as the unit you buy, not a single accelerator card.

The big difference vs Nvidia is how tightly AMD controls the whole stack. Nvidia owns the main compute chip, the main scale-up fabric (NVLink), a lot of the networking and input output path (SuperNICs, data processing units), and it pushes reference systems like DGX hard.

AMD is moving to rack-scale too, but it is leaning more on “open” designs and partners for parts of the rack, like the networking pieces shown with Helios deployments. So you still get the “parts syncing like 1 machine” idea, but it is less of a single-vendor closed bundle than Nvidia’s approach.

Huawei is also clearly in the “full machine” game, and honestly it is even more forced into it than AMD. Under export controls, Huawei has to build a whole domestic stack that covers the chip, the system, and the software toolchain.

That is why you see systems like CloudMatrix 384 and the Atlas SuperPoD line being described as a single logical machine made from many physical machines, with examples like 384 Ascend 910C chips in a SuperPoD and then larger supernodes like Atlas 950 with 8,192 Ascend chips and Atlas 960 with 15,488 Ascend chips. On software, Huawei keeps pushing CANN plus MindSpore as a CUDA-like base layer and full-stack alternative, so developers can train and serve models without Nvidia’s toolchain.

Some key points on NVIDIA Rubin.

Nvidia rolled out 6 new chips under the Rubin platform. One highlight is the Vera Rubin superchip, which pairs 1 Vera CPU with 2 Rubin GPUs on a single processor.

The Vera Rubin timeline is still fuzzy. Nvidia says the chips ship this year, but no exact date. Wired noted that chips this advanced, built with TSMC, usually begin with low-volume runs for testing and validation, then ramp later.

Nvidia says these superchips are faster and more efficient, which should make AI services more efficient too. That is why the biggest companies will line up to buy. Huang even said Rubin could generate tokens 10x more efficiently. We still need the full specs and a real launch date, but this was clearly one of the biggest AI headlines out of CES.

🧠 New paper says the best way to manage AI context is to treat everything like a file system.

Today, a model’s knowledge sits in separate prompts, databases, tools, and logs, so context engineering pulls this into a coherent system.

A persistent context repository separates raw history, long term memory, and short lived scratchpads, so the model’s prompt holds only the slice needed right now.

Every access and transformation is logged with timestamps and provenance, giving a trail for how information, tools, and human feedback shaped an answer.

Because large language models see only limited context each call and forget past ones, the architecture adds a constructor to shrink context, an updater to swap pieces, and an evaluator to check answers and update memory.

All of this is implemented in the AIGNE framework, where agents remember past conversations and call services like GitHub through the same file style interface, turning scattered prompts into a reusable context layer.

🎉 xAI completed its upsized Series E funding round at $20B

The round beat the $15B target. The oversubscribed round drew participation from Valor Equity Partners, Stepstone Group, Fidelity, Qatar Investment Authority, MGX, and Baron Capital Group. Strategic investors NVIDIA and Cisco Investments also joined to support infrastructure expansion.

The money is mainly for infrastructure and faster development and deployment of Grok 5.

It says its Colossus I and II systems ended 2025 with over 1mn H100 GPU equivalents.

It also says it has been running reinforcement learning at “pretraining-scale compute”, meaning the expensive post-training stage is pushed much harder than the usual small add-on pass.

Earlier xAI raised $6.6 billion in a major round back in 2024, and a year later inked a deal to acquire X in an all-stock transaction that values xAI at $80 billion and X at $33 billion ($45 billion, his original take-private price, minus $12 billion in debt).

While the company did not confirm its valuation in the round, The Information previously pegged the value at around $230B for xAI.

🗞️ Cursor is rolling out a way for coding agents to fetch context only when they need it, instead of stuffing everything into the prompt.

The main change is treating many things as files that the agent can read on demand.

This cuts token use and often improves answers by avoiding extra, conflicting context. Instead of pasting huge tool outputs into the model input, long results get written to a file and the agent reads slices like the end first.

That avoids blunt truncation, reduces forced summarization, and keeps full details available when they matter. When a session hits a context limit and gets summarized, the agent can still search a saved chat-history file to recover missing specifics.

Agent Skills are stored as files too, with small static hints so the agent can grep or semantically search and then load only the relevant skill text.

For Model Context Protocol (MCP) servers, tool descriptions can be synced into a folder so only tool names are shown up front, and deeper docs are loaded only if needed.

In an A/B test where an MCP tool was used, this approach reduced total agent tokens by 46.9%, with high variance. Integrated terminal sessions are also synced as files, so the agent can search long logs without copying them into chat.

This file-first setup looks practical for teams trying to keep long agent runs stable and cheap.

It also shifts work toward better retrieval and indexing, which is where many agent failures actually start.

That’s a wrap for today, see you all tomorrow.