💼 AI will boost star workers more than everyone else

AI is amplifying top performers, straining teams, as Gemini 2.5 excels in speech reasoning, CA regulates AI disclosure, and Meta improves agent self-learning without rewards.

Read time: 9 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (14-Oct-2025):

💼 AI will boost star workers more than everyone else, widening performance gaps and straining teams.

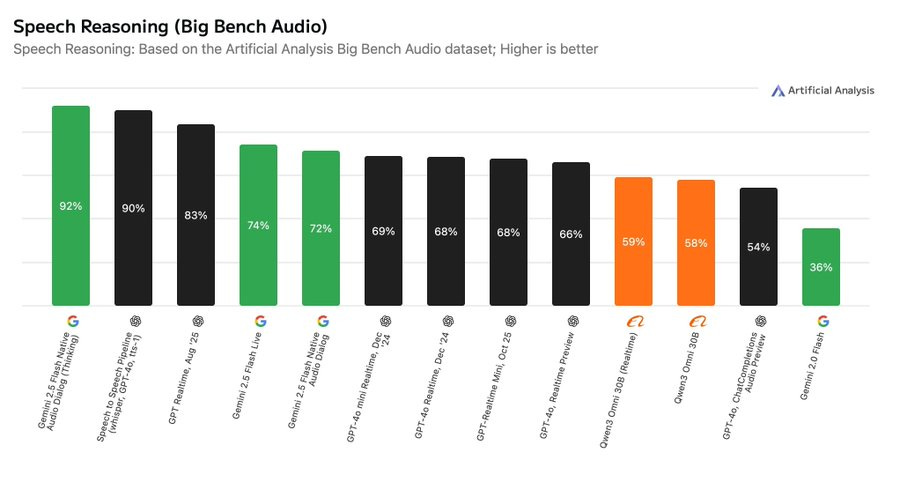

🔊 Google’s Gemini 2.5 Native Audio Thinking hits 92% on Big Bench Audio, the leading score in speech-to-speech reasoning. 👏

📢: California just passed a law that forces AI chatbots to admit they’re not human.

🛠️ New paper from Meta shows a simple way for language agents to learn from their own early actions without rewards

💼 AI will boost star workers more than everyone else, widening performance gaps and straining teams.

A new WSJ article says that domain expertise and organized habits let stars get more from AI.

Stars adopt AI earlier, explore features fast, and build personal workflows while others wait for rules. They judge outputs better, accepting correct advice and rejecting errors.

Their domain expertise helps them ask precise questions, set constraints, and iterate, which raises prompt quality and accuracy. High-status employees also get more recognition and praise for their AI-assisted work, while others doing similar work get less credit.

So, their status and reputation make the gap even bigger, because success gets attributed to their talent rather than to the AI help. Managers also give stars more freedom to experiment and more forgiveness when tests fail.

Other research that independently supports this

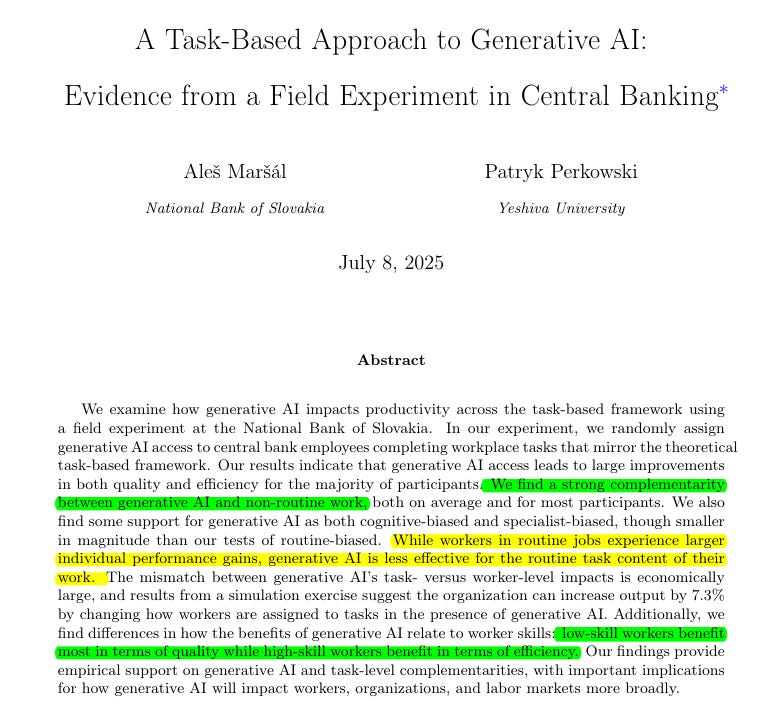

This paper shows that AI has “specialist-biased” effects.

It finds that lower-skill employees see bigger quality gains with AI, while higher-skill employees gain more on efficiency, which is what top performers are often rewarded for.

On generalist tasks, average quality rose 48% and time fell 23% with AI access. On specialist tasks, quality more than doubled, with gains of 108-117%.

Routine work improved 24%, nonroutine work improved 58%, showing strong complementarity with nonroutine content. While workers in routine jobs experience larger individual performance gains, generative AI is less effective for the routine task content of their work.

In this paper, a simple allocation model indicates that reassigning who does which tasks under AI could raise output by 7.3%.

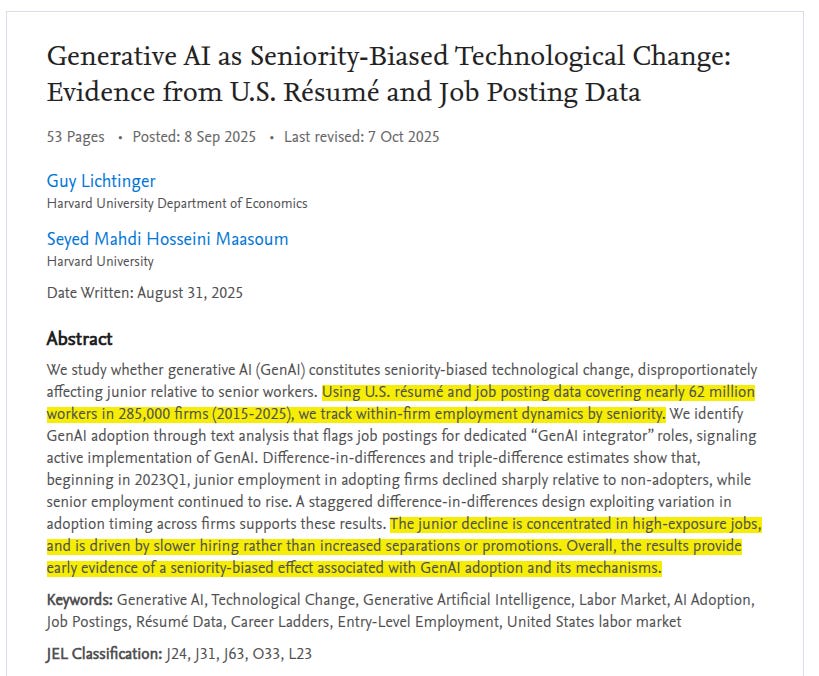

Another study shows AI adoption is “seniority-biased”: it favors more experienced workers over entry-level ones, amplifying within-firm gaps between junior and senior employees.

After adoption, junior employment in AI-adopting firms fell sharply relative to non-adopters, while senior employment continued rising.

In a large empirical study, researchers looked at U.S. résumé and job-posting data covering about 62 million workers across ~285,000 firms. They used a method to detect firms that had adopted generative AI (for example through job ads for “GenAI integrator” roles) to mark them as “adopters.”

🔊 Google’s Gemini 2.5 Native Audio Thinking hits 92% on Big Bench Audio, the leading score in speech-to-speech reasoning. 👏

Big Bench Audio has 1,000 spoken questions from Big Bench Hard that test multi-step reasoning, so 92% shows strong correctness on hard prompts. The model reasons directly on speech without transcription and then returns natural speech or text.

Normally, speech-to-speech systems use several steps:

speech → text transcription → reasoning on the text → generate text → convert text back to speech.

Gemini 2.5 Native Audio Thinking skips that middle part. It directly interprets the meaning and intent of the spoken input, reasons internally, and then produces either a spoken reply or text output.

This makes it more “native” to audio, because it thinks in sound form rather than treating speech as just text that happens to be spoken aloud. It accepts audio, video, and text, outputs text or speech, and supports function calling and search grounding.
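
To make the contrast concrete, here is a toy sketch of the two designs. Every function in it is a hypothetical stub, not a real speech or Gemini API, and the `tone` field is only an illustrative stand-in for information that a transcript can drop; it makes no claim about how Gemini works internally.

```python
from dataclasses import dataclass


@dataclass
class Audio:
    """Stand-in for an audio clip (assumption: real audio would be waveform data)."""
    spoken_text: str
    tone: str = "neutral"  # a cue that plain transcription does not carry over


def transcribe(audio: Audio) -> str:
    # Hypothetical speech-to-text stub: keeps the words, drops the tone.
    return audio.spoken_text


def reason_on_text(text: str) -> str:
    # Hypothetical text-only reasoning stub.
    return f"answer to: {text}"


def synthesize(text: str) -> Audio:
    # Hypothetical text-to-speech stub.
    return Audio(spoken_text=text)


def cascaded_pipeline(audio: Audio) -> Audio:
    """speech -> text -> reasoning on text -> text -> speech."""
    text = transcribe(audio)
    reply = reason_on_text(text)
    return synthesize(reply)


def native_pipeline(audio: Audio) -> Audio:
    """One model consumes the audio directly, so nothing is lost at a hand-off."""
    reply = f"answer to: {audio.spoken_text} (heard tone: {audio.tone})"
    return Audio(spoken_text=reply)


question = Audio(spoken_text="why is my router offline", tone="frustrated")
print(cascaded_pipeline(question).spoken_text)
print(native_pipeline(question).spoken_text)
```

In the cascaded version, any mistake or omission in `transcribe` is baked into everything downstream, which is the kind of pipeline error a native model avoids.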

Context is 128k input tokens, 8k output tokens, and the knowledge cutoff is Jan-25. In comparison, GPT Realtime models begin speaking around 0.81 s to 1.49 s, yet their accuracy on this set sits near 68% to 83%.

Pick thinking mode for complex tutoring or troubleshooting, and pick the fast mode for quick back and forth. The most valuable change is direct speech reasoning with a tunable thinking budget, which cuts pipeline errors while keeping a low latency option.

📢: California just passed a law that forces AI chatbots to admit they’re not human.

SB 243 takes effect in January-26, and it requires operators to remind known minor users every 3 hours that they are chatting with AI and to take a break. If a typical person could think they are talking to a human, the service must show a clear notice that the chatbot is artificial.
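
For operators wondering what the reminder rule might look like in practice, here is a minimal sketch of the 3-hour check as summarized above. The function name, its parameters, and the assumption that the clock runs from the previous reminder are all hypothetical; the statute's own wording decides the real behavior.

```python
from datetime import datetime, timedelta

# Interval taken from the summary above; everything else here is an assumption.
REMINDER_INTERVAL = timedelta(hours=3)


def reminder_due(last_reminder: datetime, now: datetime, is_known_minor: bool) -> bool:
    """Return True when a known minor should be reminded they are chatting with AI."""
    if not is_known_minor:
        return False  # the periodic reminder described above targets known minors
    return now - last_reminder >= REMINDER_INTERVAL


start = datetime(2026, 1, 5, 15, 0)
print(reminder_due(start, start + timedelta(hours=2), is_known_minor=True))
print(reminder_due(start, start + timedelta(hours=3), is_known_minor=True))
```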

Operators must maintain a self-harm protocol that blocks suicidal or self-harm content and pushes hotline or text-line referrals, and they must publish the protocol on their site. Platforms must state that interactions are artificially generated, may not claim to be healthcare professionals, must provide break reminders for minors, and must block sexually explicit images for minors.

Starting July-27, operators must send yearly reports to the Office of Suicide Prevention on how they detect and respond to suicidal ideation, and users can sue for at least $1,000 per violation. Age verification rules, social-media warning labels, and civil relief up to $250,000 for deepfake porn were signed the same day as companion bills, not as part of SB 243.

California also enacted SB 53 in September-25, which forces large AI developers to publish safety protocols and protects whistleblowers at major labs. Lawmakers moved after reports and lawsuits alleging harmful teen interactions with chatbots, including suits tied to Character[.]AI and a wrongful-death case blaming ChatGPT.

🛠️ New paper from Meta shows a simple way for language agents to learn from their own early actions without rewards

The big deal is using the agent’s own rollouts (i.e. own actions) as free supervision when rewards are missing, instead of waiting for human feedback or numeric rewards. It cuts human labeling needs and makes agents more reliable in messy, real environments.

On a shopping benchmark, success jumps by 18.4 points over basic imitation training. Many real tasks give no clear reward and expert demos cover too few situations.

So they add an early experience stage between imitation learning and reinforcement learning. The agent proposes alternative actions, tries them, and treats the resulting next screens as supervision.

State means what the agent sees right now, and action means what it does next. Implicit world modeling teaches the model to predict the next state from the current state and the chosen action, which builds a sense of how the environment changes.
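
As a rough illustration of implicit world modeling, the sketch below turns one state and a few tried actions into training examples whose target is the observed next state. The class, the function names, the prompt format, and the toy environment are assumptions made for this example, not the paper's code.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class WorldModelExample:
    prompt: str  # current state plus the action that was tried
    target: str  # the next state the model should learn to predict


def build_world_model_examples(
    state: str,
    actions: List[str],
    step: Callable[[str, str], str],
) -> List[WorldModelExample]:
    """Try each action in the environment and record what came next."""
    examples = []
    for action in actions:
        next_state = step(state, action)  # observed outcome, no reward needed
        prompt = f"State: {state}\nAction: {action}\nNext state:"
        examples.append(WorldModelExample(prompt=prompt, target=next_state))
    return examples


def toy_step(state: str, action: str) -> str:
    # Toy environment (assumption): the next screen just records the action.
    return f"{state} | after {action}"


examples = build_world_model_examples(
    "search results", ["click item A", "click item B"], toy_step
)
for ex in examples:
    print(ex.prompt, "->", ex.target)
```

The supervision signal here is simply the next screen, which is why no reward function or human label is required.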

Self-reflection teaches the model to compare expert and alternative results, explain mistakes, and learn rules like budgets and correct tool use. Across 8 test environments, both methods beat plain imitation and handle new settings better.

When real rewards are available later, starting reinforcement learning from these checkpoints reaches higher final scores. This makes training more scalable, less dependent on human labels, and better at recovering from errors.

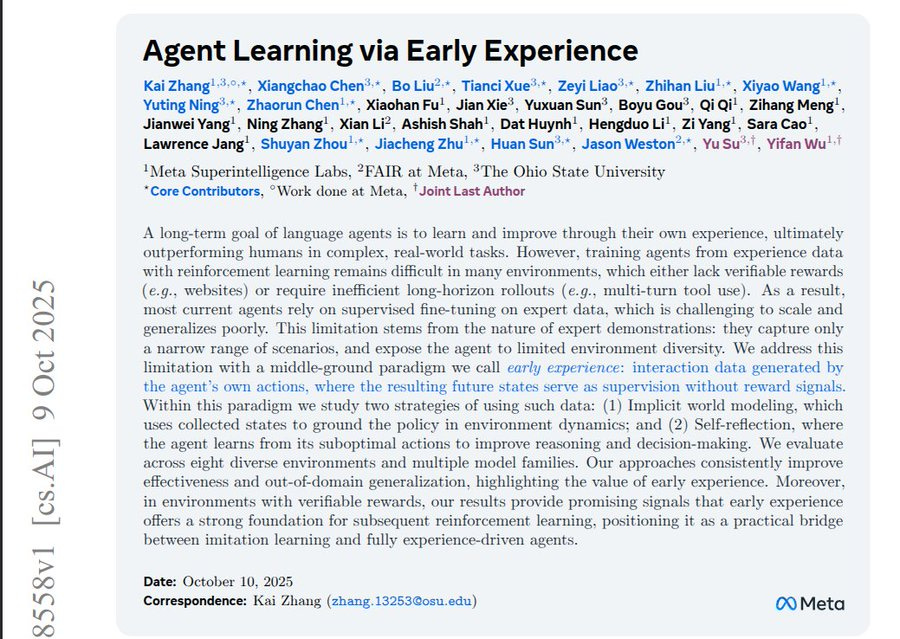

This image shows how training methods for AI agents have evolved.

On the left, the “Era of Human Data” means imitation learning, where the agent just copies human examples. It doesn’t need rewards, but it can’t scale because humans have to create all the data.

In the middle, the “Early Experience” approach from this paper lets the agent try its own actions and learn from what happens next. It is both scalable and reward-free because the agent makes its own training data instead of waiting for rewards.

On the right, the “Era of Experience” means reinforcement learning, where the agent learns from rewards after many steps. It can scale but depends on getting clear reward signals, which many environments don’t have.

So this figure shows that “Early Experience” combines the best of both worlds: it scales like reinforcement learning but doesn’t need rewards like imitation learning.

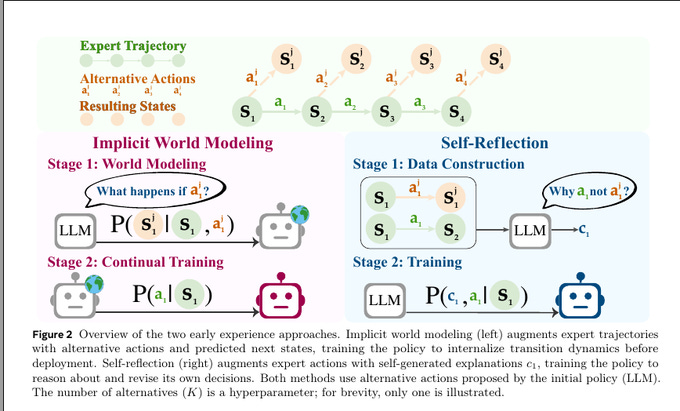

This image shows the two methods that make up the “Early Experience” training idea.

On the left side, “Implicit World Modeling” means the agent learns how its actions change the world. It looks at what happens when it tries an action and predicts the next state. This helps it build an internal sense of cause and effect before actual deployment.

On the right side, “Self-Reflection” means the agent learns by questioning its own choices. It compares the expert’s action and its own alternative, then explains to itself why one action was better. That explanation becomes new training data.
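
A minimal sketch of how a self-reflection training example could be assembled is shown below. The names and prompt layout are hypothetical, and `toy_explain` stands in for what would presumably be a language model call that writes the explanation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ReflectionExample:
    prompt: str  # the state the agent faced
    target: str  # explanation followed by the expert's action


def build_reflection_example(
    state: str,
    expert_action: str,
    alt_action: str,
    step: Callable[[str, str], str],
    explain: Callable[[str, str, str, str, str], str],
) -> ReflectionExample:
    """Compare the expert's outcome with the agent's alternative and keep the rationale."""
    expert_next = step(state, expert_action)
    alt_next = step(state, alt_action)
    rationale = explain(state, expert_action, expert_next, alt_action, alt_next)
    prompt = f"State: {state}\nWhat should the agent do, and why?"
    target = f"{rationale}\nAction: {expert_action}"
    return ReflectionExample(prompt=prompt, target=target)


def toy_step(state: str, action: str) -> str:
    # Toy environment (assumption): the next screen just records the action.
    return f"{state} | after {action}"


def toy_explain(state, expert_action, expert_next, alt_action, alt_next) -> str:
    # Stand-in for a model-written explanation (assumption).
    return f"'{alt_action}' led to '{alt_next}', but '{expert_action}' fits the task better."


ex = build_reflection_example(
    "cart total $120, budget $100", "remove item", "checkout", toy_step, toy_explain
)
print(ex.target)
```

The explanation plus the expert action becomes the training target, so the agent learns the reasoning and not only the move.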

Both methods start with expert examples but add extra self-generated data. This makes the agent smarter about how the environment works and better at improving its own decisions without extra human feedback.

That’s a wrap for today, see you all tomorrow.
