🇨🇳 Alibaba-backed Moonshot releases Kimi K2 Thinking

Moonshot's new Kimi K2, major AI benchmark flaws, Amazon sues Perplexity, Google launches Ironwood chip, and new open environments for AI agents and autonomous researchers.

Read time: 11 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (7-Nov-2025):

🇨🇳 Alibaba-backed Moonshot releases Kimi K2 Thinking

🏆 A new study from Stanford, Oxford, and other top universities highlights flaws in AI benchmarking.

⚖️ Amazon has sued Perplexity, alleging that Perplexity’s Comet browser runs an AI shopping agent that logs into Amazon with a user’s credentials, places orders, and browses while pretending to be a human, which Amazon says is prohibited, covert automated access.

📈 Google is finally rolling out Ironwood, its most powerful AI chip, in the coming weeks, taking aim at Nvidia. The chip was first introduced in April.

⚖️ 📈 Microsoft Research released Magentic Marketplace, an open-source environment that lets people test how LLM agents buy, sell, negotiate, and pay at scale, revealing real issues in discovery, fairness, and safety.

📈 Edison Scientific launched Kosmos, an autonomous AI researcher that reads literature, writes and runs code, and tests ideas.

🇨🇳 Alibaba-backed Moonshot releases Kimi K2 Thinking

It goes head-to-head with GPT-5, Sonnet 4.5, Gemini 2.5 Pro, and Grok 4 while being 6x cheaper.

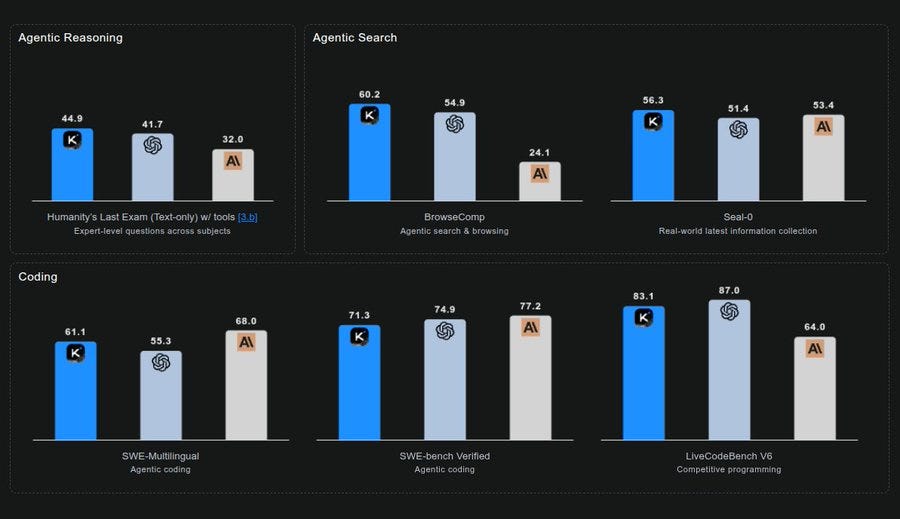

The model now outperforms OpenAI’s GPT-5, Anthropic’s Claude Sonnet 4.5 (Thinking mode), and xAI’s Grok-4 on several standard evaluations, an inflection point for the competitiveness of open AI systems. Kimi K2 Thinking is an open reasoning model that brings frontier-level agent behavior to everyone, with 44.9% on HLE (Humanity’s Last Exam), 60.2% on BrowseComp, a 256K context window, and 200-300 sequential tool calls that enable strong reasoning, search, and coding.

K2 Thinking uses a Reasoning MoE design with 1T total parameters and 32B active per token, so it scales capacity while keeping each step’s compute manageable. The system is built for test-time scaling: it spends more thinking tokens and more tool-call turns on hard problems, which lets it plan, verify, and revise over long chains without human help.
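To make the "1T total, 32B active" idea concrete, here is a minimal top-k routing sketch. It is a toy illustration, not Moonshot's implementation: the 8 scalar experts, the gate scores, and `k=2` are hypothetical values chosen for readability. Only the 1T and 32B figures come from the article.

```python
import math

def top_k_route(gate_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)
    exps = {i: math.exp(gate_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_layer(x, experts, gate_logits, k):
    """Run only the selected experts and mix their outputs by routing weight."""
    weights = top_k_route(gate_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Toy setup (hypothetical): 8 scalar "experts", 2 active per token.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
gate_logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]
routing = top_k_route(gate_logits, k=2)
output = moe_layer(3.0, experts, gate_logits, k=2)

# Figures from the article: 1T total parameters, 32B active per token.
active_fraction = 32e9 / 1e12
print(sorted(routing), f"{active_fraction:.1%} of parameters active per token")
```

The six unselected experts never run, which is why per-token compute tracks the active parameter count rather than the total.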

Interleaved thinking means it inserts private reasoning between actions and tools, so it can read, think, call a tool, think again, and keep context across hundreds of steps. Tool calls here are structured functions for search, code execution, or other services, and the model chains them to gather facts, run code, and use results in the next decision.
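The read, think, call, think-again loop can be sketched roughly as follows. Everything here is a stand-in: `scripted_model`, the `search` and `multiply` tools, and the message format are hypothetical and only show the control flow, not Kimi's actual API or tool schema.

```python
from typing import Callable, Dict, List

# Hypothetical tools; a real deployment would call a search API or a code sandbox.
def search(query: str) -> str:
    return f"results for {query!r}"

def multiply(expression: str) -> str:
    left, right = expression.split("*")
    return str(int(left) * int(right))

TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "multiply": multiply}

def scripted_model(history: List[dict]) -> dict:
    """Stand-in for the model: returns a thought plus a tool call or a final answer."""
    tool_turns = sum(1 for m in history if m["role"] == "tool")
    if tool_turns == 0:
        return {"thought": "Gather facts first.", "tool": "search", "arg": "example query"}
    if tool_turns == 1:
        return {"thought": "Now compute.", "tool": "multiply", "arg": "256 * 1024"}
    return {"thought": "I have enough.", "answer": history[-1]["content"]}

def agent_loop(task: str, model, max_steps: int = 300) -> str:
    """Alternate reasoning and tool calls, keeping every step in one shared history."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        step = model(history)
        history.append({"role": "thinking", "content": step["thought"]})
        if "answer" in step:
            return step["answer"]
        result = TOOLS[step["tool"]](step["arg"])
        history.append({"role": "tool", "content": result})
    return "step budget exhausted"

answer = agent_loop("What is 256 * 1024?", scripted_model)
print(answer)
```

The `max_steps` cap mirrors the 200-300 sequential tool calls mentioned above; the growing `history` list is what the long context window has to hold.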

The 256K context window lets it load long documents, extended chats, or many tool outputs at once, then focus attention on the right spans as the plan evolves. Serving is optimized with INT4 QAT (quantization-aware training) on the MoE parts, which yields roughly 2x faster generation while preserving accuracy, and the reported scores are under native INT4 inference. With QAT, the model learns during post-training to live with 4-bit weights, which reduces the usual accuracy loss seen with post-training quantization.
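For intuition on what "living with 4-bit weights" means, below is a minimal sketch of symmetric INT4 "fake quantization", the rounding step QAT typically applies in the forward pass so the model trains against the same error it will see at inference. This is a generic illustration: the per-tensor scale and the sample weights are assumptions, and the article does not describe Moonshot's actual scheme (group size, scaling, or which layers).

```python
def fake_quantize_int4(weights):
    """Snap each weight to one of 16 signed levels, then map it back to float."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0   # signed 4-bit integers span [-8, 7]
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    dequantized = [c * scale for c in codes]
    return codes, dequantized, scale

weights = [0.42, -0.13, 0.07, -0.70, 0.31]         # hypothetical sample weights
codes, dequantized, scale = fake_quantize_int4(weights)
max_error = max(abs(w - d) for w, d in zip(weights, dequantized))
print(codes, f"max rounding error {max_error:.3f}")
```

Post-training quantization applies this rounding once to a finished model; QAT exposes the model to it during training so the weights can adapt.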

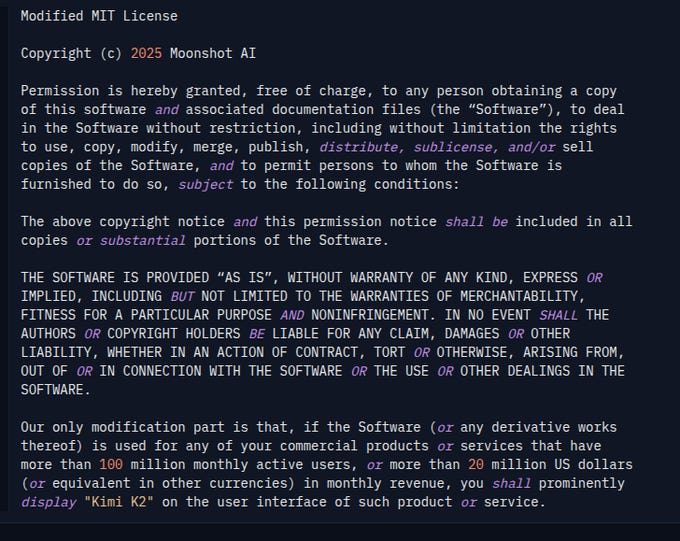

Moonshot AI has published Kimi K2 Thinking on Hugging Face under a Modified MIT License.

The license gives full rights for commercial use and derivative work, so both individual researchers and enterprise developers can use it freely in their projects.

The only added rule is simple: if any deployment of the model serves more than 100 million monthly users or earns over $20 million USD per month, the product must clearly show the name “Kimi K2” in its interface.

For almost all research and business use cases, this acts as a light attribution note while keeping the openness of the MIT License. That makes K2 Thinking one of the most open high-end models out there.
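As a quick illustration of that threshold rule, here is a small check. It encodes only the summary given in this newsletter, not the license text itself, and the function and constant names are hypothetical.

```python
USER_THRESHOLD = 100_000_000        # monthly users, as summarized above
REVENUE_THRESHOLD_USD = 20_000_000  # monthly revenue in USD, as summarized above

def must_display_kimi_k2(monthly_users: int, monthly_revenue_usd: float) -> bool:
    """True when either threshold is exceeded, so the UI must show "Kimi K2"."""
    return monthly_users > USER_THRESHOLD or monthly_revenue_usd > REVENUE_THRESHOLD_USD

print(must_display_kimi_k2(2_000_000, 150_000))       # typical startup
print(must_display_kimi_k2(120_000_000, 5_000_000))   # very large consumer app
```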

K2 Thinking also surpasses MiniMax-M2.

Its BrowseComp result of 60.2% exceeds M2’s 44.0%, and its SWE-Bench Verified score of 71.3% edges out M2’s 69.4%. Even on financial-reasoning tasks such as FinSearchComp-T3 (47.4%), K2 Thinking performs comparably while maintaining superior general-purpose reasoning.

Technically, both models adopt sparse Mixture-of-Experts architectures for compute efficiency, but Moonshot’s network activates more experts and deploys advanced quantization-aware training (INT4 QAT). This design doubles inference speed relative to standard precision without degrading accuracy, which is critical for long “thinking-token” sessions that reach the 256K context window.

Despite its trillion-parameter scale, K2 Thinking’s runtime cost remains modest

🏆 A new study from Stanford, Oxford, and other top universities highlights flaws in AI benchmarking.

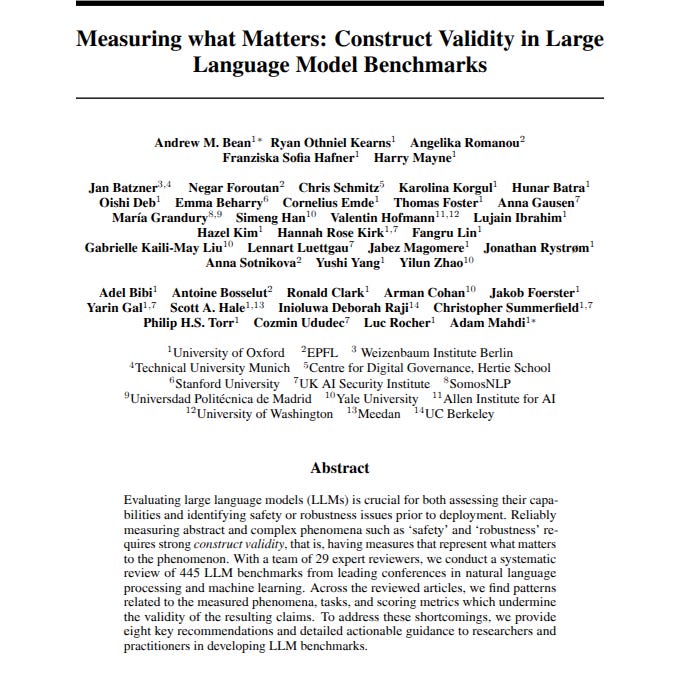

The authors scan recent LLM benchmarks across major conferences and look at how each one defines the skill, turns it into a task, scores it with a metric, then uses that score to support a claim. Many benchmarks miss a clear definition, reuse tasks that are not representative, rely on fragile scoring, skip uncertainty, and make claims the test setup cannot support.

They recommend:

Adopt a construct-valid benchmark process: define the exact skill, design tasks that isolate it, choose metrics that truly capture success, report uncertainty, analyze errors, and limit claims to what the setup supports. Use their 8-step checklist to control confounders, document data reuse, check contamination, validate any judge model, and include small wording variations, so the score reflects only the intended skill and becomes reliable evidence for decisions.
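One of those recommendations, reporting uncertainty, is easy to sketch. The snippet below is a minimal percentile-bootstrap confidence interval for benchmark accuracy; it is an illustrative choice, not the paper's prescribed method, and the 71-of-100 result is hypothetical.

```python
import random

def bootstrap_accuracy_ci(outcomes, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap: resample items with replacement, collect accuracies."""
    rng = random.Random(seed)
    n = len(outcomes)
    means = sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples))
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return sum(outcomes) / n, lower, upper

outcomes = [1] * 71 + [0] * 29   # hypothetical: 71 of 100 benchmark items correct
accuracy, lower, upper = bootstrap_accuracy_ci(outcomes)
print(f"accuracy {accuracy:.1%}, 95% CI [{lower:.1%}, {upper:.1%}]")
```

On a 100-item benchmark the interval spans many points, so a gap of one or two points between models is usually not evidence of a real difference.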

⚖️ Amazon has sued Perplexity, alleging that Perplexity’s Comet browser runs an AI shopping agent that logs into Amazon with a user’s credentials, places orders, and browses while pretending to be a human, which Amazon says is prohibited, covert automated access.

Amazon also says this automation enters private account areas, touches carts and checkout, and therefore creates security and fraud risk, because any script mistake or prompt misuse could buy the wrong item, ship to the wrong address, or expose sensitive data. Amazon argues the agent breaks site terms and bypasses controls that govern third-party tools, instead of using approved interfaces or clearly identifying itself as an automated agent.

Amazon further claims the bot degrades the personalized shopping experience, because recommendations, pricing tests, and ranking are tuned for human behavior, not for rapid scripted requests. Amazon also says it told Perplexity to stop but the agent kept operating, which strengthens Amazon’s position that this is knowing unauthorized access.

Perplexity’s defense is that the agent helps users comparison-shop and check out on their behalf, with credentials stored locally, and that users should be free to pick their assistant even if Amazon dislikes the competitive impact. So the fight is about who controls the logged-in session, whether a browser-based AI can act as the user inside Amazon, and whether it must self-identify as a bot instead of masquerading as a person.

📈 Google is finally rolling out Ironwood, its most powerful AI chip, in the coming weeks, taking aim at Nvidia. The chip was first introduced in April.

It’s 4x faster than its predecessor, and more than 9K TPUs can be connected in a single pod.

The big deal, which many are not realizing, is that if it can do an all-reduce across 9K TPUs, it can run much larger models than the Nvidia NVL72. It would make really big 10T-parameter models like GPT-4.5 feasible to run. It would even make 100T-parameter models possible.

This is the last big push to prove that scaling works. If Google trains a 100T size model and demonstrates more intelligence and more emergent behavior, the AGI race kicks into another gear. If 100T scale models just plateau, then the AI bubble pops.

Ironwood TPUs go GA and Axion Arm VMs expand, promising up to 10X gains and cheaper inference while adding flexible CPU options for the boring but vital parts of AI apps. Ironwood targets both training and serving, with 10X the peak performance of v5p and 4X better per-chip performance than v6e for training and inference, so the same hardware can train a frontier model and then serve it at scale.

A single superpod stitches 9,216 chips with 9.6 Tb/s Inter-Chip Interconnect and exposes 1.77PB of shared HBM, which cuts cross device data stalls that usually throttle large models. Optical Circuit Switching reroutes around failures in real time and the Jupiter fabric links pods into clusters with hundreds of thousands of TPUs, so uptime and scale do not fight each other.
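A back-of-envelope calculation shows what those pod numbers imply. It uses only the 9,216-chip and 1.77PB figures above; decimal units, 2 bytes per parameter, and counting weights only (no KV cache, activations, or optimizer state) are simplifying assumptions.

```python
CHIPS_PER_POD = 9_216
POD_HBM_BYTES = 1.77e15   # 1.77 PB, decimal units assumed

hbm_per_chip_gb = POD_HBM_BYTES / CHIPS_PER_POD / 1e9

def weight_bytes(params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone."""
    return params * bytes_per_param

def fits_in_pod(params: float, bytes_per_param: float) -> bool:
    return weight_bytes(params, bytes_per_param) <= POD_HBM_BYTES

print(f"HBM per chip: about {hbm_per_chip_gb:.0f} GB")
for params in (1e12, 10e12, 100e12):
    share = weight_bytes(params, 2) / POD_HBM_BYTES
    print(f"{params / 1e12:.0f}T params at 2 bytes each: {share:.1%} of pod HBM")
```

Under these assumptions even a 100T-parameter model's weights occupy a fraction of one pod's shared HBM, which is the basis for the scaling argument above. Real training needs several times more memory than the weights alone.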

On software, Cluster Director in GKE improves topology aware scheduling, MaxText adds straightforward paths for SFT and GRPO, vLLM runs on TPUs with small config tweaks, and GKE Inference Gateway cuts TTFT by up to 96% and serving cost by up to 30%. Axion fills the general compute side of the house so data prep, ingestion, microservices, and databases stay fast and cheap while accelerators focus on model math.

N4A, now in preview, offers up to 64 vCPUs, 512GB memory, 50Gbps networking, custom machine types, and Hyperdisk Balanced and Throughput, aiming for about 2x better price performance than similar x86 VMs. C4A Metal, in preview soon, gives dedicated Arm bare metal with up to 96 vCPUs, 768GB memory, Hyperdisk, and up to 100Gbps networking for hypervisors and native Arm development. C4A provides steady high performance with up to 72 vCPUs, 576GB memory, 100Gbps Tier 1 networking, Titanium SSD up to 6TB local capacity, advanced maintenance controls, and support for Hyperdisk Balanced, Throughput, and Extreme.

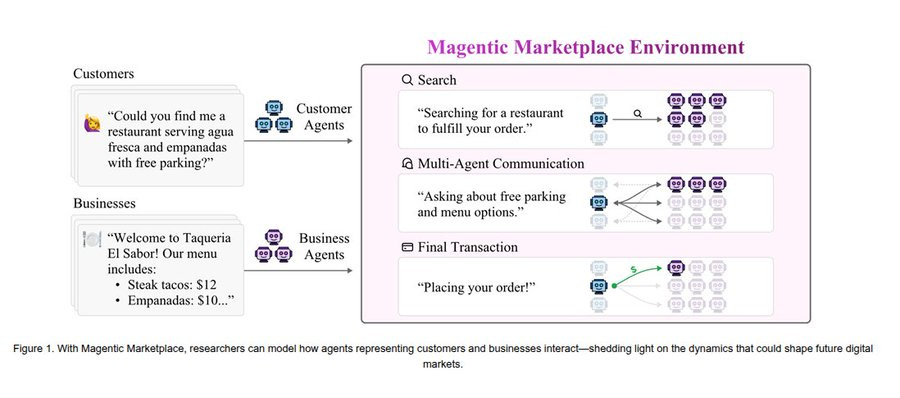

⚖️ 📈 Microsoft Research released Magentic Marketplace, an open-source environment that lets people test how LLM agents buy, sell, negotiate, and pay at scale, revealing real issues in discovery, fairness, and safety.

The team ran fully synthetic, reproducible experiments with 100 customers and 300 businesses across tasks like food ordering and home services. They tested proprietary models like GPT-4o, GPT-4.1, GPT-5, Gemini-2.5-Flash and open models like OSS-20b, Qwen3-14b, and Qwen3-4b-Instruct-2507.

Success was measured by consumer welfare, which adds up each customer’s value for items minus what they actually paid, so better discovery and negotiation should raise this number. With realistic lexical search and pagination, advanced models beat naive baselines, and with perfect search, several models approached the theoretical best, showing discovery quality is a hard bottleneck.
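The welfare metric described above is simple to compute. This sketch assumes that customers who never complete a transaction contribute zero; the `Outcome` type and the sample numbers are hypothetical, not taken from the Magentic Marketplace code.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Outcome:
    customer_value: float         # what the purchased items are worth to the customer
    price_paid: Optional[float]   # None when no transaction completed

def consumer_welfare(outcomes: List[Outcome]) -> float:
    """Sum of (value - price) over completed transactions."""
    return sum(o.customer_value - o.price_paid for o in outcomes if o.price_paid is not None)

outcomes = [
    Outcome(customer_value=30.0, price_paid=22.0),   # good deal: +8.0
    Outcome(customer_value=18.0, price_paid=18.5),   # overpaid: -0.5
    Outcome(customer_value=25.0, price_paid=None),   # never found a seller: 0
]
total_welfare = consumer_welfare(outcomes)
print(total_welfare)
```

Better discovery raises the number by turning failed searches into purchases, and better negotiation raises it by lowering the price paid.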

Giving agents more options surprisingly hurt results, since most models contacted only a few businesses and welfare fell as search limits grew, a clear paradox of choice effect. Agents were highly vulnerable to manipulation, from fake authority and social proof to strong prompt-injection, and in some cases payments were steered entirely to the malicious seller. Agents also showed first-proposal bias, quickly accepting the earliest offer rather than waiting to compare, which can reward speed over quality and skew the market.

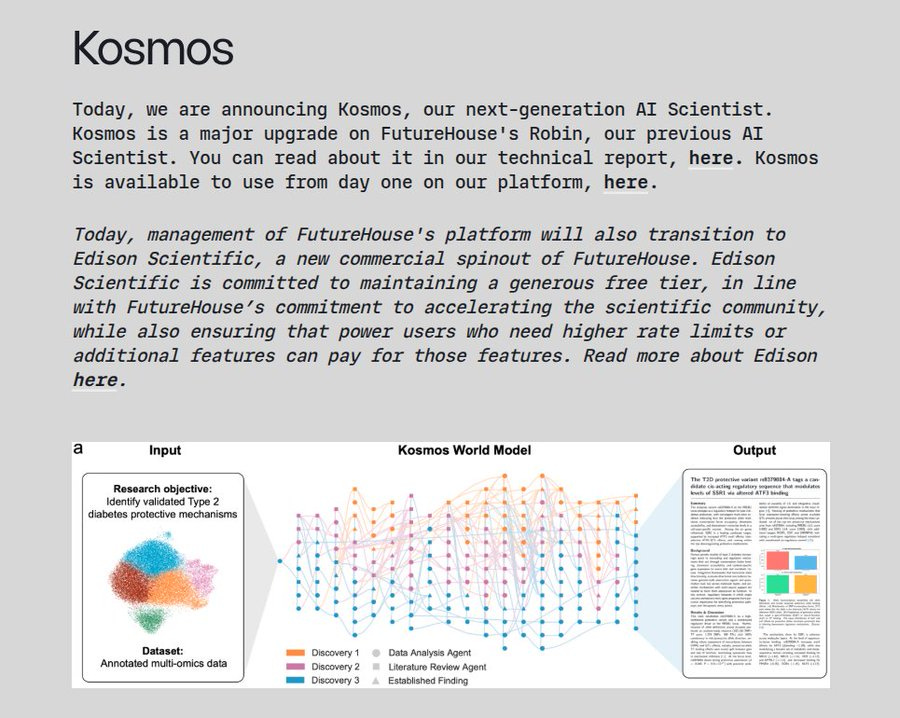

📈 Edison Scientific launched Kosmos, an autonomous AI researcher that reads literature, writes and runs code, and tests ideas.

It compresses 6 months of human research into about 1 day.

Kosmos uses a structured world model as shared memory that links every agent’s findings, keeping work aligned to a single objective across tens of millions of tokens. A run reads 1,500 papers, executes 42,000 lines of analysis code, and produces a fully auditable report where every claim is traceable to code or literature.

Evaluators found 79.4% of conclusions accurate. Kosmos reproduced 3 prior human findings, including absolute humidity as the key factor for perovskite solar cell efficiency and cross-species neuronal connectivity rules, and it proposed 4 new leads, including evidence that SOD2 may lower cardiac fibrosis in humans. Access is through Edison’s platform at $200/run with limited free use for academics.

There are caveats: runs can chase statistically neat but irrelevant signals, longer runs raise this risk, and teams often launch multiple runs to explore different paths. Beta users estimated 6.14 months of equivalent effort for 20-step runs, and a simple model based on reading time and analysis time predicts about 4.1 months, which suggests output scales with run depth rather than hitting a fixed ceiling.

That’s a wrap for today, see you all tomorrow.