Alibaba Cloud says its updated pooling setup slashed Nvidia AI GPU usage by 82%, achieving up to a 9x jump in performance

Alibaba’s GPU pooling for faster LLM serving, Unitree’s new humanoid, GPT-5’s Erdős hype breakdown, and live Google Maps in Gemini apps.

Read time: 10 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (20-Oct-2025):

🤯 Alibaba introduced Aegaeon, a new GPU pooling system that makes AI model serving much more efficient.

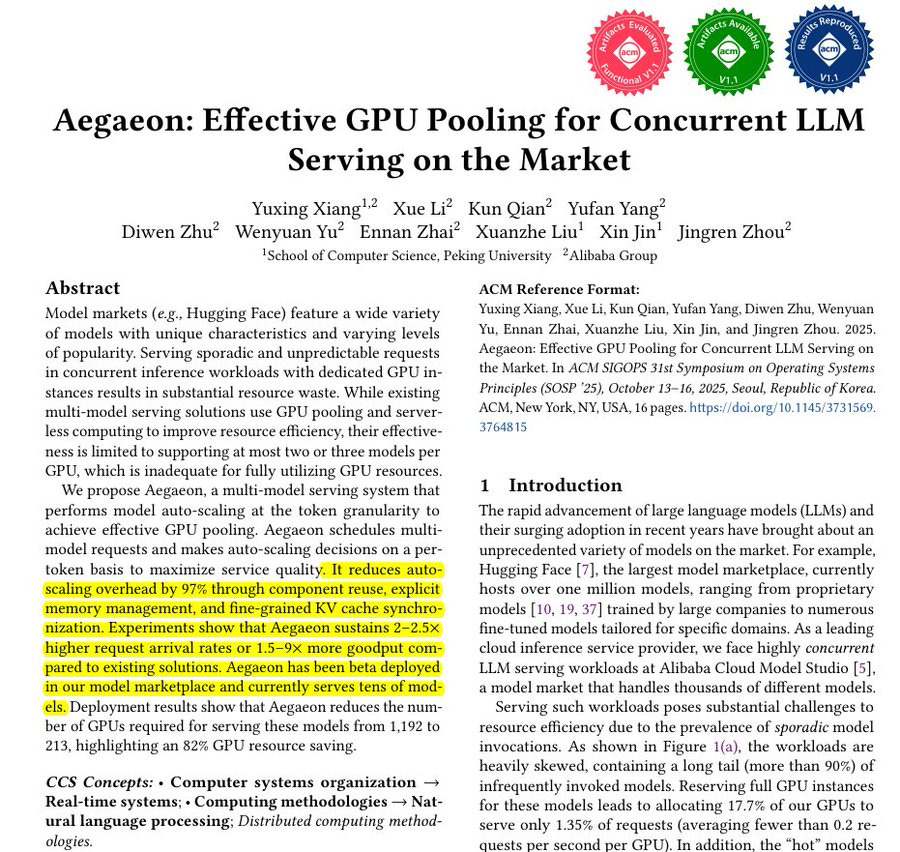

The Alibaba paper behind Aegaeon: Effective GPU Pooling for Concurrent LLM Serving on the Market

🦾 Unitree unveiled the H2 humanoid, a 1.8-meter, 70 kg flagship aimed at fluid, agile motion, while keeping R1 as the low-cost developer workhorse.

🛠️ GPT-5 vs. Erdős: how a viral “breakthrough” turned into a literature search

👨‍🔧 Developers can now add live Google Maps data to Gemini-powered AI app outputs

🤯 Alibaba introduced Aegaeon, a new GPU pooling system that makes AI model serving much more efficient.

Alibaba claims an 82% cut in Nvidia GPU use for serving LLMs, achieved by pooling compute across models.

In a beta lasting more than three months on Alibaba Cloud’s model marketplace, the number of H20 GPUs needed dropped from 1,192 to 213 while serving dozens of models of up to 72B parameters. Cloud model marketplaces skew toward a few hot models, so many GPUs sit idle serving cold ones: Alibaba measured 17.7% of GPUs handling only 1.35% of requests.
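A quick sanity check on the beta numbers: the sketch below recomputes the reduction from the two GPU counts and expresses the skew as the load the cold slice carries relative to the fleet average. It uses only the figures quoted above; the "relative load" framing is our own.

```python
# Back-of-envelope check of the beta figures quoted in the article.
GPUS_BEFORE = 1_192  # H20 GPUs before pooling
GPUS_AFTER = 213     # H20 GPUs after pooling

gpus_freed = GPUS_BEFORE - GPUS_AFTER
reduction = gpus_freed / GPUS_BEFORE

# Skew: 17.7% of the fleet handled 1.35% of requests.
COLD_GPU_SHARE = 0.177
COLD_REQUEST_SHARE = 0.0135
# Requests per GPU in that cold slice, relative to the fleet-wide average.
cold_relative_load = COLD_REQUEST_SHARE / COLD_GPU_SHARE

print(f"GPUs freed: {gpus_freed}")
print(f"Reduction: {reduction:.1%}")
print(f"Cold slice load vs. fleet average: {cold_relative_load:.3f}x")
```

It prints 979 GPUs freed and an 82.1% reduction, consistent with the headline 82%, and shows the cold slice running at roughly 7.6% of the average per-GPU load.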

Aegaeon addresses this with token-level auto-scaling, which lets a GPU switch between models during generation instead of waiting for a full response to finish. By slicing work at token boundaries and scheduling small bursts quickly, the system keeps memory warm and compute busy with little waste.
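To see why token-level switching matters, here is a toy single-GPU scheduler. It is a sketch under simplifying assumptions, not Aegaeon's actual algorithm: every token costs one time unit, all requests arrive together, and a fixed switch_cost is charged whenever the GPU changes models. Setting quantum=None mimics request-level switching; quantum=1 preempts at every token boundary. The function and model names are hypothetical.

```python
from collections import deque


def first_token_times(requests, quantum=None, switch_cost=0.0):
    """Toy single-GPU scheduler; all requests arrive at t=0.

    requests: list of (request_id, model, tokens_to_generate).
    quantum: max tokens a request generates before it is preempted and
             re-queued; None = run to completion (request-level switching),
             1 = preempt at every token boundary (token-level switching).
    switch_cost: time charged whenever the GPU moves to a different model.
    Each generated token takes 1 time unit (an illustrative assumption).
    Returns {request_id: time its first token completes}.
    """
    queue = deque(requests)
    t = 0.0
    current_model = None
    first_token = {}
    while queue:
        rid, model, left = queue.popleft()
        if model != current_model:
            if current_model is not None:
                t += switch_cost  # pay to swap the active model
            current_model = model
        run = left if quantum is None else min(quantum, left)
        first_token.setdefault(rid, t + 1)  # first token lands after one step
        t += run
        if left > run:
            queue.append((rid, model, left - run))  # preempted: back of the line
    return first_token


reqs = [("A", "hot-model", 200), ("B", "cold-model", 20)]
print(first_token_times(reqs, quantum=None, switch_cost=0.5))  # request-level
print(first_token_times(reqs, quantum=1, switch_cost=0.5))     # token-level
```

With a 200-token hot request ahead of it, the cold request's first token lands at t=201.5 under request-level switching versus t=2.5 under token-level switching. The catch is that the token-level run pays switch_cost on almost every step, which is why cheap model switching is the enabling piece.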

With Aegaeon, a single GPU supports up to 7 models versus 2 to 3 in other pooling systems, and switching latency drops by 97%. Cold models load weights just in time when a request lands, then borrow a brief slice of compute without locking an entire GPU.
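Just-in-time loading can be pictured as a per-GPU weight cache: a request for a non-resident model triggers a load, evicting older models if memory runs short. The LRU eviction policy, the WeightCache class, and the sizes below are assumptions for illustration; the article does not say how Aegaeon chooses what to evict.

```python
from collections import OrderedDict


class WeightCache:
    """Hypothetical just-in-time weight cache for one GPU, with LRU eviction."""

    def __init__(self, capacity_gb: float):
        self.capacity_gb = capacity_gb
        self.resident = OrderedDict()  # model -> size in GB, least recent first
        self.cold_loads = 0

    def used_gb(self) -> float:
        return sum(self.resident.values())

    def ensure_loaded(self, model: str, size_gb: float) -> bool:
        """Make `model` resident; return True if its weights had to be loaded."""
        if model in self.resident:
            self.resident.move_to_end(model)  # hit: mark most recently used
            return False
        if size_gb > self.capacity_gb:
            raise ValueError(f"{model} ({size_gb} GB) cannot fit on this GPU")
        while self.used_gb() + size_gb > self.capacity_gb:
            self.resident.popitem(last=False)  # evict least recently used
        self.resident[model] = size_gb  # stand-in for the actual weight copy
        self.cold_loads += 1
        return True


cache = WeightCache(capacity_gb=40)
for name in ["a", "b", "a", "c"]:
    cache.ensure_loaded(name, size_gb=15)
print(list(cache.resident), cache.cold_loads)
```

With a 40 GB budget and 15 GB models, the sequence a, b, a, c ends with a and c resident after three cold loads: b is evicted because a was touched more recently.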

Hot models keep priority, so heavy traffic stays smooth while sporadic models borrow capacity in short bursts. The wins apply to inference, not training, because generation happens token by token and fits fine-grained scheduling.

The timing suits China’s chip constraints: the H20 is aimed at inference workloads and domestic GPUs are still ramping up, so getting more traffic out of fewer chips matters. If Aegaeon generalizes, operators can lower cost per token, raise fleet utilization, and delay new GPU purchases without hurting latency for popular models. Tradeoffs remain, such as uneven memory needs across models, long sequences that reduce preemption points, and scheduler overhead during traffic spikes.

🏆 Aegaeon: Effective GPU Pooling for Concurrent LLM Serving on the Market

The paper claims an 82% cut in Nvidia GPU use for serving LLMs by pooling compute across models. The big deal is that it turns slow, request-level switching into fast, token-level switching.

Most model requests are sporadic, so dedicated GPUs sit idle while hot models spike. Earlier systems either pack just 2 or 3 models onto a GPU or auto-scale only at the end of a request, which still causes long waits.

Aegaeon fixes this by preempting running models between tokens and admitting new ones quickly. It separates work into 2 pools: one for the first token (prefill) and one for later tokens (decode).

The first-token pool groups same-model jobs to cut waste and speed up the first token. The later-token pool time-slices decoding using weighted round-robin to keep output steady.
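The two pool policies can be sketched as follows, assuming each request is tagged with a model name. group_prefill batches first-token jobs per model so one switch serves several requests. For the decode pool we swapped in smooth weighted round-robin (the variant nginx uses), which interleaves picks instead of bunching them; the paper's exact weighting and slice lengths may differ, and both function names are hypothetical.

```python
def group_prefill(jobs):
    """Group first-token jobs by model so one model switch serves a whole batch.

    jobs: list of (request_id, model) in arrival order.
    Returns [(model, [request_ids])] ordered by each model's first arrival.
    """
    batches = {}
    for rid, model in jobs:
        batches.setdefault(model, []).append(rid)
    return list(batches.items())  # dicts keep insertion order (Python 3.7+)


def smooth_wrr(weights, slices):
    """Smooth weighted round-robin over decode time slices.

    weights: {model: integer weight}. Higher weight means more slices, but
    picks are interleaved rather than bunched, keeping each stream steady.
    """
    current = {m: 0 for m in weights}
    total = sum(weights.values())
    order = []
    for _ in range(slices):
        for m, w in weights.items():
            current[m] += w
        pick = max(current, key=current.get)  # ties go to the earlier model
        current[pick] -= total
        order.append(pick)
    return order


print(group_prefill([(1, "m1"), (2, "m2"), (3, "m1")]))
print(smooth_wrr({"hot": 3, "cold": 1}, slices=8))
```

With weights 3:1, the hot model gets three of every four decode slices (hot, hot, cold, hot, repeating), and the cold model's slices are spread out rather than clustered.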

Switching is cheap because Aegaeon reuses the inference engine, manages GPU memory directly, and prefetches weights. It also streams the stored token history (the KV cache) with fine-grained GPU events to avoid stalls. In tests and production, this reaches up to 7 models per GPU and about 82% GPU savings.
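Streaming the token history with fine-grained events is a pipelining trick: work on the first layers of the restored cache can start while later layers are still in flight. The sketch below models it with Python threads, where threading.Event stands in for per-layer GPU events; pipelined_restore, the layer count, and the timings are hypothetical, and real GPU copies and kernels behave differently.

```python
import threading
import time


def pipelined_restore(num_layers, copy_s, compute_s):
    """Overlap restoring a KV cache layer by layer with computation on it.

    A copier thread "transfers" one layer at a time and sets that layer's
    Event; the compute loop waits only for the layer it needs next, instead
    of waiting for the whole cache. threading.Event is a CPU-side analogy
    for per-layer GPU events, not the real mechanism.
    """
    ready = [threading.Event() for _ in range(num_layers)]
    order = []

    def copier():
        for i in range(num_layers):
            time.sleep(copy_s)  # pretend to copy layer i's KV cache
            ready[i].set()      # signal: layer i is usable

    start = time.perf_counter()
    worker = threading.Thread(target=copier)
    worker.start()
    for i in range(num_layers):
        ready[i].wait()         # block only on this layer's event
        time.sleep(compute_s)   # pretend to run layer i
        order.append(i)
    worker.join()
    return order, time.perf_counter() - start


order, elapsed = pipelined_restore(num_layers=6, copy_s=0.02, compute_s=0.02)
print(order, f"{elapsed:.3f}s vs. {6 * (0.02 + 0.02):.3f}s if done serially")
```

Because each layer waits only on its own event, total time is close to one copy plus all the compute (about 0.14 s here) rather than the 0.24 s that copying everything first and computing afterwards would take.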

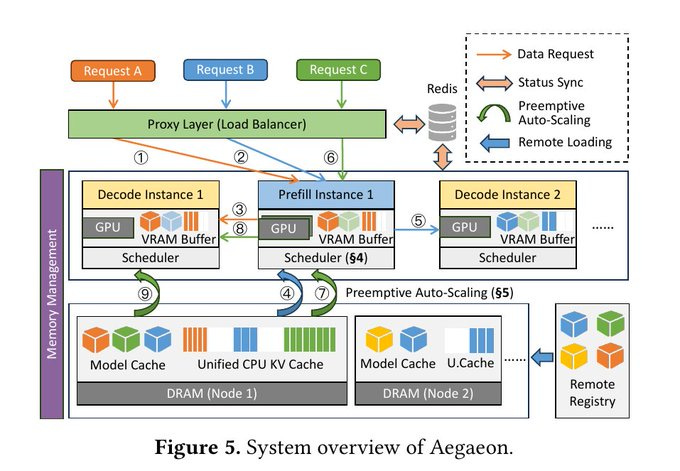

This is how the Aegaeon system handles requests from users and manages multiple LLMs efficiently.

When new requests (A, B, and C) arrive, they first go through a load balancer called the Proxy Layer. This layer decides which GPU instance will process each request.
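A proxy layer like this can be sketched as a routing function. One plausible policy, which is our assumption rather than the paper's stated rule, is to prefer an instance that already holds the requested model and break ties by queue length. Instance and route are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    name: str
    queued: int = 0
    resident_models: set = field(default_factory=set)


def route(model, instances):
    """Prefer an instance that already holds the model, then the shortest queue."""
    best = min(
        instances,
        # False sorts before True, so "already resident" wins first
        key=lambda inst: (model not in inst.resident_models, inst.queued),
    )
    best.queued += 1
    return best.name


fleet = [Instance("gpu0", queued=3, resident_models={"a"}), Instance("gpu1")]
print(route("a", fleet), route("b", fleet))
```

Here the request for model a goes to gpu0 despite its longer queue because a is already resident there, while the request for b goes to the idle gpu1.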

Aegaeon splits its work into two main stages. The “Prefill Instance” handles the first part of the response, where the model reads the whole prompt and builds its KV cache (the stored token history it will reuse). The “Decode Instances” handle the next part, where the model actually generates text token by token.
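The prefill/decode split can be illustrated with a toy model. prefill reads the whole prompt once and returns the first token plus a cache; decode then extends that cache one token at a time. The cache passed between the two functions is the data a real system would move from the prefill instance to a decode instance. toy_next_token is a placeholder, not a language model, and unlike a real model it re-reads the full context at each step.

```python
def toy_next_token(context):
    """Placeholder 'model': any deterministic function of the context works."""
    return sum(context) % 100


def prefill(prompt_tokens):
    """Prefill stage: read the whole prompt once, build the cache, emit token 1."""
    kv_cache = list(prompt_tokens)  # stand-in for per-token keys/values
    first = toy_next_token(kv_cache)
    kv_cache.append(first)
    return first, kv_cache


def decode(kv_cache, max_new_tokens):
    """Decode stage: generate one token per step, extending the cache each time."""
    out = []
    for _ in range(max_new_tokens):
        tok = toy_next_token(kv_cache)
        kv_cache.append(tok)
        out.append(tok)
    return out


first, kv = prefill([1, 2, 3])   # would run on a prefill instance
rest = decode(kv, 2)             # cache handed to a decode instance
print(first, rest)
```

This prints 6 [12, 24].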

The system uses schedulers inside each GPU instance to manage timing and resource allocation. These schedulers coordinate with the Proxy Layer and a Redis database to track system status and share updates.
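The shared status table can be sketched with an in-memory store. A real deployment would use Redis, as the article says, but StatusStore and its schema are hypothetical. Each scheduler publishes a timestamped status, and readers skip rows older than a time-to-live, so a crashed instance drops out of routing without anyone deleting it. The clock is injectable so the example is deterministic.

```python
import time


class StatusStore:
    """In-memory stand-in for the shared Redis status table (hypothetical schema).

    Each scheduler publishes its status with a timestamp; readers ignore rows
    older than ttl_s, so a crashed instance drops out of routing on its own.
    """

    def __init__(self, ttl_s=2.0, clock=time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._rows = {}

    def publish(self, instance, **status):
        self._rows[instance] = (self.clock(), status)

    def live(self):
        now = self.clock()
        return {name: status for name, (ts, status) in self._rows.items()
                if now - ts <= self.ttl_s}


fake_now = [0.0]
store = StatusStore(ttl_s=2.0, clock=lambda: fake_now[0])
store.publish("prefill-0", queued=4)
fake_now[0] = 1.5
store.publish("decode-0", queued=1, models=["a", "b"])
fake_now[0] = 2.5
print(store.live())
```

At t=2.5 only decode-0 is still live. With real Redis, refreshing a per-instance hash and setting a key expiry (HSET plus EXPIRE) achieves the same effect.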

The key feature shown is “Preemptive Auto-Scaling.” This means Aegaeon can pause one running model and start another almost instantly, without waiting for the first one to finish.

It manages this through shared caches spanning GPU and CPU memory. These caches hold model weights and token history (the KV cache) so they can be reloaded quickly without full reinitialization.
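Why a CPU-side cache helps comes down to bandwidth arithmetic: load time is model size divided by transfer bandwidth. The bandwidth numbers below are hypothetical placeholders, not measurements of Alibaba's hardware, and load_seconds is an illustrative helper.

```python
def load_seconds(params_billion, bytes_per_param, bandwidth_gb_s):
    """Time to move a model's weights at a given bandwidth (decimal GB)."""
    size_gb = params_billion * bytes_per_param  # 1e9 params x bytes / 1e9
    return size_gb / bandwidth_gb_s


# Hypothetical bandwidths, for illustration only.
HOST_TO_GPU_GB_S = 25.0   # weights already cached in CPU memory
STORAGE_GB_S = 2.0        # weights read from local storage

for source, bw in [("CPU cache", HOST_TO_GPU_GB_S), ("storage", STORAGE_GB_S)]:
    print(f"7B bf16 from {source}: {load_seconds(7, 2, bw):.2f}s")
```

A 7B model in bf16 (2 bytes per parameter, about 14 GB) takes 0.56 s at 25 GB/s versus 7.00 s at 2 GB/s, which is the gap a host-memory cache is meant to close.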

🦾 Unitree unveiled the H2 humanoid, a 1.8-meter, 70 kg flagship aimed at fluid, agile motion, while keeping R1 as the low-cost developer workhorse.

Unitree Robotics showed off the H2, its most humanlike model to date, in a short video on its social media channels.

Early reports point to 31 joint degrees of freedom for H2, up from 27 on H1, which should help with reach, balance, and finer, more human-like motion. At 180 cm, the full-size body keeps tools, doorways, and work surfaces at human scale, so the robot can use existing spaces without special rigs.

Unitree is clearly running a two-platform play, with H2 at the high end and R1, at about $5,900, for labs and developers to seed software and skills. Last month, R1 also landed on TIME’s Best Inventions list.

Unitree has not disclosed full technical details for the H2. Adoption will hinge on practical details like joint torque, battery endurance, reliable hands, safety, and an SDK that teams can ship with.

The company’s previous model, the H1, appeared at China’s 2024 Spring Festival Gala and could run at speeds up to 3.3 meters per second, with a potential top speed exceeding 5 meters per second. The H1 is equipped with 3D LiDAR and depth cameras for high-precision spatial awareness.

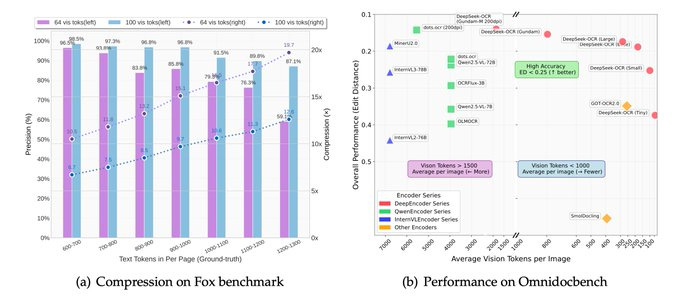

🔥 DeepSeek releases DeepSeek OCR

Pitched as a new standard for open-source OCR, it is a 3B-parameter vision-language model designed for high-performance optical character recognition and structured document conversion.

Chart Parsing: Can parse charts and re-render them in HTML

Optical Context Compression: Keeps OCR precision around 97% while text tokens stay within 10x the vision tokens, and still reaches roughly 60% at 20x compression

Architecture: Combines CLIP and SAM features for stronger grounding, gets strong accuracy from relatively few vision tokens, and supports 100 languages.

Superior Performance: Outperforms GOT-OCR2.0 and MinerU2.0 on the OmniDocBench benchmark

Enterprise-Ready: Processes over 200,000 pages per day, with inference speeds of approximately 2,500 tokens/s, on a single A100-40G GPU

Open Source: Released under the MIT license with seamless vLLM integration
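The headline numbers can be sanity-checked with a little arithmetic. We read "compression ratio" as text tokens per vision token, and the per-page figure assumes the quoted 2,500 tokens/s is sustained around the clock on the same setup as the 200,000 pages/day claim; both are our assumptions, and the helper names are hypothetical.

```python
import math

# Throughput figures quoted in the list above.
TOKENS_PER_SECOND = 2_500
PAGES_PER_DAY = 200_000
SECONDS_PER_DAY = 24 * 60 * 60


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Text tokens recovered per vision token given to the decoder."""
    return text_tokens / vision_tokens


def vision_budget(text_tokens: int, max_ratio: float) -> int:
    """Fewest vision tokens that keep the compression ratio at or below max_ratio."""
    return math.ceil(text_tokens / max_ratio)


# A hypothetical page holding 1,200 text tokens.
print(compression_ratio(1200, 100))                       # 12.0
print(vision_budget(1200, 10), vision_budget(1200, 20))   # 120 60

# Implied tokens per page, assuming the quoted speed is sustained all day.
tokens_per_day = TOKENS_PER_SECOND * SECONDS_PER_DAY
print(f"{tokens_per_day:,} tokens/day, ~{tokens_per_day / PAGES_PER_DAY:,.0f} tokens/page")
```

Under those assumptions the two throughput claims imply 216,000,000 tokens per day, or roughly 1,080 output tokens per page.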

A significant advancement in document understanding AI, enabling efficient, high-accuracy OCR on resource-constrained hardware while remaining fully open-source and production-ready.

🛠️ GPT-5 vs. Erdős: how a viral “breakthrough” turned into a literature search

GPT-5 and the Erdős claims, what actually happened 🧩

An OpenAI leader posted that GPT-5 had “found solutions to 10 previously unsolved Erdős problems” and made progress on 11 more, which sounded like brand-new proofs to hard number theory questions. That wording came from a now-deleted post.

But GPT-5 did not generate new Erdős proofs; it located existing solutions in the literature. That is still genuinely useful: GPT-5 is good at cross-referencing messy math literature, which is exactly where many researchers can use help.

The firestorm started within hours. Google DeepMind’s Demis Hassabis replied “this is embarrassing”, which set the tone for the wider pushback from researchers and engineers who read the tweet as “GPT-5 proved new theorems” rather than “GPT-5 helped find old papers”. Meta’s Yann LeCun added a sharper jab that traveled just as fast, which only amplified the perception that the claim had jumped the gun.

Why the claim collapsed so quickly 🔎

The heart of the confusion was the word “open.” The Erdős problems site is a community tracker. On that site, “open” means the maintainer has not yet recorded a solution; it does not mean that the math community has no solution. Thomas Bloom, who runs the site, said exactly this in his public clarification, stressing that the model had surfaced existing solutions that he had not yet linked on the tracker. The site itself asks readers not to assume that an “unsolved” label is definitive and to search the literature first, which is the key detail that got missed in the viral framing.

Once that nuance was out in the open, OpenAI’s Sebastien Bubeck acknowledged the reality, “only solutions in the literature were found,” then added that even this is hard work for messy math topics, which is fair if you have ever chased old combinatorics or number theory citations across journals and preprints.

So what did GPT-5 actually do well here? 📚

The model acted like an aggressive literature review assistant. It mapped problem statements from the tracker to papers that already solved them, sometimes under different names or with notation that hides the connection. Terence Tao has been arguing that this is the near-term productive use of AI for math, not cracking the grand conjectures, but speeding up the grind, especially when topics have scattered terminology. In a short series of posts last week he pointed out that this kind of tool can clean up status pages and, in live cases, move entries from “open” to “solved” once the right references are attached.

GPT-5 did not produce new proofs. It connected tracker entries to existing results, which is a real contribution to curation and onboarding, just not a research breakthrough.

How the wording went wrong, in plain language 🧠

The phrase “found solutions to 10 previously unsolved Erdős problems” reads like proof discovery. What actually happened is closer to “found the right papers for 10 listed problems.” The difference is small in English, huge in math. On the Erdős site, “open” is a working label, not a ground truth, and the FAQ explicitly warns readers to do a literature search before assuming the status is accurate. This is why the community reacted so strongly, because the wording collapsed a status-cleanup into a breakthrough.

Why this assistant role still matters for real math work 🧰

Math literature is messy. Names drift across subfields, equivalent statements get published under different formulations, and proofs live in places that standard keyword search will miss. A strong model can scan definitions, match synonyms, and suggest candidate equivalences that a human can then verify line by line. That is not glamorous, but it can save days, sometimes weeks. Tao’s posts outline exactly this benefit, and he also notes another upside, better reporting of negative results, since models can surface attempts and partials that rarely get indexed cleanly.

A simple workflow you can implement for this 🛠️

Start by stating the problem in 1-2 clean sentences using the exact objects and constraints. Ask the model to propose alternate names and equivalent formulations. For each candidate, request citations with author, title, venue, year, then pull the PDFs and check that the statement in the paper matches your target problem, including edge cases. If it matches, update your tracker entry with the specific theorem or corollary that closes it. If it does not match, keep the citation on a “related” list and move on. This is essentially what the GPT-5 run did at scale, and it is why “only solutions in the literature were found” still cleaned up real entries.
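The workflow above maps onto a small triage routine. The dataclasses, field names, and status strings are hypothetical, and the citations are placeholders, not real references; the rule it encodes is that only a human-verified match with a specific theorem moves an entry to solved.

```python
from dataclasses import dataclass, field


@dataclass
class Citation:
    authors: str
    title: str
    venue: str
    year: int
    statement_matches: bool = False  # set by a human after reading the PDF
    theorem: str = ""                # e.g. "Theorem 3" if it closes the problem


@dataclass
class TrackerEntry:
    problem_id: str
    status: str = "open"
    closed_by: list = field(default_factory=list)
    related: list = field(default_factory=list)


def triage(entry, candidates):
    """Sort model-suggested citations into 'closes it' vs 'related'."""
    for c in candidates:
        # Only a verified match pointing at a specific result counts.
        if c.statement_matches and c.theorem:
            entry.closed_by.append(c)
        else:
            entry.related.append(c)
    if entry.closed_by:
        entry.status = "solved (in literature)"
    return entry


# Placeholder citations, not real references.
suggested = [
    Citation("Author A", "Placeholder title 1", "Journal X", 1990,
             statement_matches=True, theorem="Theorem 2"),
    Citation("Author B", "Placeholder title 2", "Preprint", 2005),
]
entry = triage(TrackerEntry("problem-123"), suggested)
print(entry.status, len(entry.closed_by), len(entry.related))
```

It prints "solved (in literature) 1 1": one citation closes the entry, and the unverified one stays on the related list.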

👨‍🔧 Developers can now add live Google Maps data to Gemini-powered AI app outputs

🧭 Google is rolling out a new feature for developers using its Gemini AI models: grounding with Google Maps, so apps can answer location questions using live Maps data.

Competing platforms like ChatGPT, Claude, or various Chinese open-source models probably won’t have this anytime soon.

This update lets developers link Gemini’s reasoning skills with real-time Google Maps data, so apps can respond with accurate, location-based info like business hours, reviews, or what a place feels like.

This ties Gemini’s reasoning to 250M places, routes, hours, ratings, photos, and reviews for location aware responses.

Grounding means the model calls a trusted tool, pulls factual records, and uses those facts while generating the answer.

Developers enable the Google Maps tool in a request and can pass lat_lng to anchor results to a specific spot.
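Concretely, enabling the tool means adding it to the request body. The sketch below builds a JSON body for the REST generateContent endpoint (POST to https://generativelanguage.googleapis.com/v1beta/models/MODEL:generateContent with an x-goog-api-key header). The googleMaps, toolConfig.retrievalConfig.latLng, and enableWidget field names follow our reading of Google's docs and should be checked against the current API reference; maps_grounded_request is a hypothetical helper, and it makes no network call.

```python
import json


def maps_grounded_request(prompt, lat=None, lng=None, enable_widget=False):
    """Build a generateContent JSON body with the Google Maps tool enabled."""
    maps_tool = {"enableWidget": True} if enable_widget else {}
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "tools": [{"googleMaps": maps_tool}],
    }
    if lat is not None and lng is not None:
        # Anchor results to a specific spot (the lat_lng option).
        body["toolConfig"] = {
            "retrievalConfig": {"latLng": {"latitude": lat, "longitude": lng}}
        }
    return body


print(json.dumps(maps_grounded_request(
    "Quiet cafes within a 10-minute walk?", lat=34.05, lng=-118.25), indent=2))
```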

The response can include grounding metadata plus a context token that lets the app render an interactive Maps widget in the app interface.
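On the response side, an app needs the place sources and the widget token. This sketch assumes the REST response nests them under candidates[0].groundingMetadata as groundingChunks[].maps and googleMapsWidgetContextToken; those names reflect our reading of the docs, extract_maps_grounding is a hypothetical helper, and the sample values are placeholders.

```python
def extract_maps_grounding(response):
    """Pull place sources and the widget context token out of a response dict."""
    candidates = response.get("candidates") or [{}]
    meta = candidates[0].get("groundingMetadata", {})
    places = [
        {"title": chunk["maps"].get("title"), "uri": chunk["maps"].get("uri")}
        for chunk in meta.get("groundingChunks", [])
        if "maps" in chunk  # skip non-Maps chunks (assumed "web" for Search)
    ]
    token = meta.get("googleMapsWidgetContextToken")  # None if absent
    return places, token


# Hand-written sample shaped like a response; values are placeholders.
sample = {
    "candidates": [{
        "groundingMetadata": {
            "groundingChunks": [
                {"maps": {"title": "Example Cafe", "uri": "https://maps.google.com/?cid=0"}},
                {"web": {"title": "Example page", "uri": "https://example.com"}},
            ],
            "googleMapsWidgetContextToken": "placeholder-token",
        }
    }]
}
print(extract_maps_grounding(sample))
```

The returned token is what the app would hand to the Maps widget; the place list gives users links to verify each answer.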

The widget shows place details like photos, open hours, ratings, and directions so users can quickly verify and act.

Typical asks include full-day itineraries with distances and travel times, hyperlocal picks for housing or retail, and precise answers about specific places.

Combining Maps + Search grounding lets the model use Maps for structured facts and Search for timely schedules or event info, which boosts quality.

The feature is generally available on the latest Gemini models with tool pricing and can be mixed with other tools as needed.

This closes a common gap: language models used to guess local facts, and now they can base replies on a maintained location database.

That’s a wrap for today, see you all tomorrow.