🥇 Alibaba’s Qwen dropped the open-source Qwen3Guard-Gen-8B model on Hugging Face

Alibaba drops Qwen3Guard-Gen-8B, DeepSeek hits v3.1, Google upgrades Gemini voice, Stargate scales to 10GW, and RL emerges as key for LLM reasoning.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (24-Sept-2025):

🥇 Alibaba’s Qwen dropped the open-source Qwen3Guard-Gen-8B model on Hugging Face

🏆 DeepSeek-V3.1-Terminus was released yesterday.

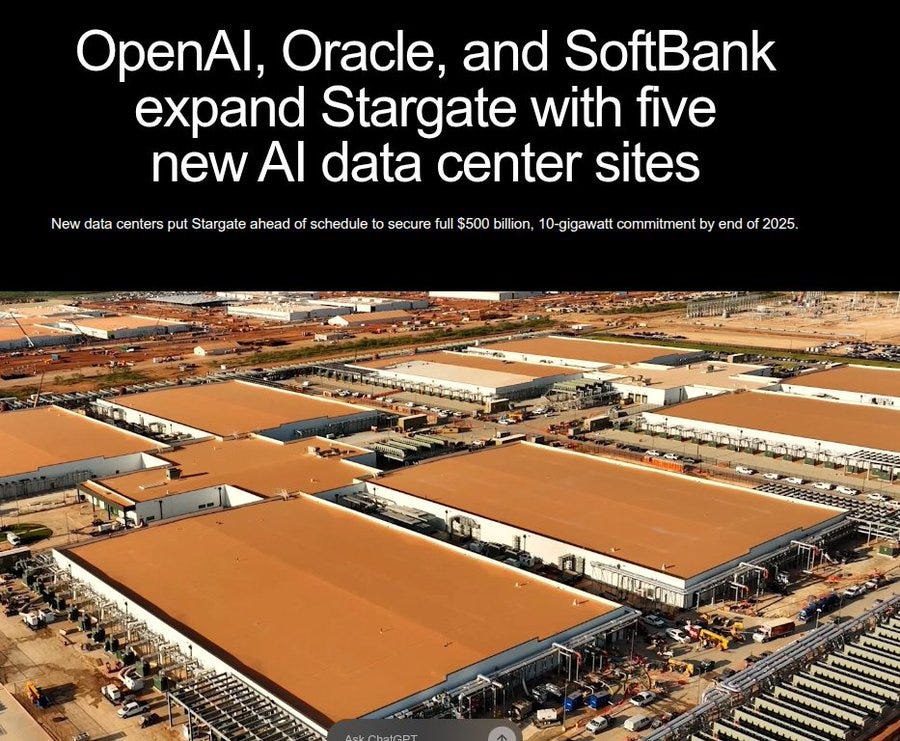

🏭 OpenAI, Oracle, and SoftBank are adding 5 new U.S. AI data center sites, pushing Stargate to ~7GW planned capacity and >$400B committed, with a clear path to $500B and 10GW by end of 2025.

🗣️ Google announced an update to the Gemini Live API with a new native audio model that makes voice agents more reliable and natural

👨🔧 A solid “Survey of Reinforcement Learning for Large Reasoning Models”

🥇 Alibaba’s Qwen rolled out Qwen3Guard-Gen-8B on Hugging Face

It ships 2 variants (Qwen3Guard-Gen full-context, Qwen3Guard-Stream per-token), 0.6B/4B/8B sizes, supports 119 languages, and is released under Apache-2.0. The training set has 1.19M prompt and response samples with human and model outputs, safe versus unsafe pairs per category, translation, and ensemble auto-labeling with voting.

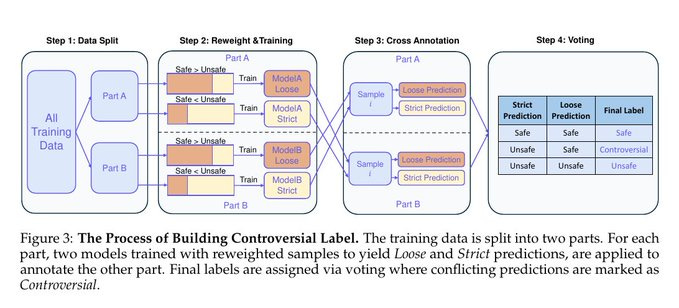

A strict versus loose training scheme flags disagreements as controversial, and label distillation then cleans noise to sharpen boundaries. The core idea is that it takes the messy problem of AI safety moderation and builds a clear, structured architecture around it.

At the foundation, most moderation models either look at a whole response at the end or just apply a filter to the input text. Qwen3Guard splits this into two specialized tracks.

Qwen3Guard-Gen takes the entire conversation in full context and then judges the safety of the whole thing, while Qwen3Guard-Stream works token by token, in real time, almost like watching words as they come out of the model’s mouth and checking instantly if they are going off track.

This two-part setup is the big deal because it balances accuracy and speed. Another key architectural twist is the 3-tier classification system: Safe, Controversial, Unsafe. Instead of forcing a hard yes/no decision, it leaves room for gray areas.

This works because they train the model in two modes, “strict” and “loose”, and whenever those disagree, the example gets labeled as Controversial. That gives more flexibility and better aligns with how human moderators actually think.
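To make the 3-tier idea concrete, here is a minimal sketch of how an application might act on the three verdicts. The `Verdict` enum, the `should_block` helper, and the `strict_policy` flag are hypothetical names for illustration, not part of Qwen3Guard itself.

```python
from enum import Enum


class Verdict(Enum):
    SAFE = "Safe"
    CONTROVERSIAL = "Controversial"
    UNSAFE = "Unsafe"


def should_block(verdict: Verdict, strict_policy: bool) -> bool:
    """Hypothetical app-side policy built on a 3-tier verdict.

    Unsafe is always blocked, Safe is never blocked, and the gray-area
    Controversial tier is blocked only when the app opts into a strict policy.
    """
    if verdict is Verdict.UNSAFE:
        return True
    if verdict is Verdict.CONTROVERSIAL:
        return strict_policy
    return False


# A kids' app might run strict, a research tool might run loose.
print(should_block(Verdict.CONTROVERSIAL, strict_policy=True))   # True
print(should_block(Verdict.CONTROVERSIAL, strict_policy=False))  # False
```

The point of the middle tier is that the same guard model can serve both deployments, with the product deciding where the line sits.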

Then there’s the label distillation step, which is essentially a clean-up pass that removes noisy or inconsistent data and sharpens the decision boundaries. This is what lets even the small 0.6B model perform competitively with bigger moderation models.

✅ 3 sizes available: 0.6B, 4B, 8B

⚡ Low-latency, real-time streaming detection with Qwen3Guard-Stream

How Qwen3Guard-Stream works as a real-time safety filter.

The user types a dangerous request, and the model begins generating a reply word by word. For each token the assistant outputs, the stream guard checks it immediately. Safe tokens like “Well, here are the methods to…” pass through without issue.

As soon as harmful content starts appearing, such as “make a bomb”, the stream guard instantly marks those tokens as unsafe and violent. Both prompt moderation (yellow boxes) and response moderation (blue boxes) run in parallel, so the system can catch harmful intent both in what the user asks and what the model says.

The big point here is that this doesn’t wait until the end of a sentence. It acts live, token by token, which makes it possible to stop dangerous output before it ever fully forms.
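The token-by-token flow described above can be sketched as a generator that wraps the assistant's output stream. This is only an illustration: `guarded_stream` and `toy_classifier` are made-up names, and the keyword check stands in for the real Qwen3Guard-Stream classifier, which is a learned model.

```python
from typing import Callable, Iterable, Iterator, Sequence


def guarded_stream(
    tokens: Iterable[str],
    is_unsafe: Callable[[Sequence[str]], bool],
    refusal: str = "[stopped by safety filter]",
) -> Iterator[str]:
    """Yield tokens one by one, checking the running prefix after each token.

    Generation is cut off the moment the classifier flags the prefix,
    so the flagged token itself is never emitted.
    """
    seen = []
    for tok in tokens:
        seen.append(tok)
        if is_unsafe(seen):
            yield refusal
            return
        yield tok


def toy_classifier(prefix: Sequence[str]) -> bool:
    # Stand-in for a per-token guard model: a plain keyword match on the prefix.
    return "make a bomb" in " ".join(prefix).lower()


reply = "Well, here are the methods to make a bomb at home".split()
print(" ".join(guarded_stream(reply, toy_classifier)))
# Well, here are the methods to make a [stopped by safety filter]
```

Because the check runs inside the streaming loop, the caller never has to wait for the full response before deciding to stop.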

This image explains how Qwen3Guard builds the Controversial label during training. The training data is first split into 2 parts so the system can cross-check predictions.

Each part is trained with 2 different weightings: a loose model that is more tolerant and a strict model that is harsher on unsafe content.

These models then annotate the opposite data split, giving both loose and strict predictions for each sample. When both predictions agree, the label is clear: safe if both say safe, unsafe if both say unsafe.

When they disagree, for example loose says safe but strict says unsafe, the system marks that example as Controversial. This process creates a middle category that reflects real-world gray areas, instead of forcing a binary safe/unsafe decision.
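The labeling rule above fits in a few lines. In this sketch, `merge_labels` and `annotate_split` are hypothetical helper names, and the two toy classifiers merely stand in for the loose and strict models trained on the opposite data split.

```python
from typing import Callable, List, Sequence

Classifier = Callable[[str], str]  # returns "safe" or "unsafe"


def merge_labels(loose: str, strict: str) -> str:
    """Agreement keeps the shared label; disagreement becomes 'controversial'."""
    return loose if loose == strict else "controversial"


def annotate_split(
    samples: Sequence[str], loose_model: Classifier, strict_model: Classifier
) -> List[str]:
    """Label one data split with the loose/strict pair trained on the OTHER split."""
    return [merge_labels(loose_model(s), strict_model(s)) for s in samples]


# Toy stand-ins: the strict model flags more things than the loose one.
def loose(text: str) -> str:
    return "unsafe" if "bomb" in text else "safe"


def strict(text: str) -> str:
    return "unsafe" if ("bomb" in text or "lock" in text) else "safe"


samples = ["bake a cake", "pick a lock", "build a bomb"]
print(annotate_split(samples, loose, strict))
# ['safe', 'controversial', 'unsafe']
```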

🏆 DeepSeek-V3.1-Terminus was released yesterday

DeepSeek-V3.1-Terminus is an updated iteration of the company’s hybrid reasoning model, DeepSeek-V3.1. The prior version was a big step forward, but Terminus seeks to deliver a more stable, reliable, and consistent experience.

It improves on two pain points:

It no longer mixes Chinese and English text randomly.

It has much stronger performance when using “agents” like the built-in Code Agent (for coding) and Search Agent (for web tasks).

On tool-use benchmarks it beats V3.1: SimpleQA 96.8 vs 93.4, BrowseComp 38.5 vs 30.0, and SWE Verified 68.4 vs 66.0.

There are 2 modes: deepseek-chat for function calling and JSON output, and deepseek-reasoner for longer thinking without tools. Tool requests sent to the reasoner are auto-routed to chat.

Context length is 128,000 tokens, and outputs cap at 8,000 for chat and 64,000 for reasoner, with defaults 4,000 and 32,000.
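As a rough sketch, the mode and output limits above could be mirrored client-side like this. The `plan_request` helper and its arguments are hypothetical; only the model names and token numbers come from the figures quoted here.

```python
from typing import Optional

# Output-token defaults and caps as quoted in this edition.
LIMITS = {
    "deepseek-chat": {"default": 4_000, "cap": 8_000},
    "deepseek-reasoner": {"default": 32_000, "cap": 64_000},
}


def plan_request(
    wants_reasoning: bool, uses_tools: bool, max_tokens: Optional[int] = None
) -> dict:
    """Pick a mode and clamp max_tokens to that mode's cap.

    Tool use forces the chat mode, mirroring the routing described above.
    """
    model = "deepseek-reasoner" if wants_reasoning and not uses_tools else "deepseek-chat"
    limits = LIMITS[model]
    requested = limits["default"] if max_tokens is None else max_tokens
    return {"model": model, "max_tokens": min(requested, limits["cap"])}


print(plan_request(wants_reasoning=True, uses_tools=False))
# {'model': 'deepseek-reasoner', 'max_tokens': 32000}
print(plan_request(wants_reasoning=True, uses_tools=True, max_tokens=20_000))
# {'model': 'deepseek-chat', 'max_tokens': 8000}
```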

API pricing is $0.07 per 1M input (cache hit), $0.56 per 1M input (miss), and $1.68 per 1M output.
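For a quick back-of-envelope check, the three prices above combine like this. `estimate_cost` is a hypothetical helper, and the token counts in the example are invented.

```python
# USD per 1M tokens, as quoted in this edition.
PRICE_PER_M = {"input_hit": 0.07, "input_miss": 0.56, "output": 1.68}


def estimate_cost(hit_tokens: int, miss_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a workload, split by cache-hit input, cache-miss input, and output."""
    total = (
        hit_tokens * PRICE_PER_M["input_hit"]
        + miss_tokens * PRICE_PER_M["input_miss"]
        + output_tokens * PRICE_PER_M["output"]
    )
    return total / 1_000_000


# Example: 200k cached input tokens, 50k uncached input tokens, 10k output tokens.
print(round(estimate_cost(200_000, 50_000, 10_000), 4))  # 0.0588
```

Note that a cache hit is 8x cheaper than a miss on input, so prompt layouts that keep a stable prefix pay off quickly.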

Language mixing and odd characters are reduced, and the built-in Code Agent and Search Agent are strengthened for cleaner runs.

One known issue remains where self_attn.o_proj is not yet in UE8M0 FP8 scale format, so some FP8 paths may be underused until a new checkpoint.

The model was trained on an additional 840 billion tokens, using a new tokenizer and updated prompt templates.

🏭 OpenAI, Oracle, and SoftBank are adding 5 new U.S. AI data center sites, pushing Stargate to ~7GW planned capacity and >$400B committed, with a clear path to $500B and 10GW by end of 2025.

SoftBank and OpenAI are adding Lordstown in Ohio and Milam County in Texas that can scale to 1.5GW in ~18 months, using fast build designs and powered infrastructure to shorten time to capacity. The Abilene flagship is already live on Oracle Cloud Infrastructure, with NVIDIA GB200 racks arriving since Jun-25, and it is already running early training and inference jobs.

The July-25 OpenAI and Oracle agreement covers up to 4.5GW and >$300B over 5 years, which anchors the bulk of the near term buildout. Across all 5 sites, the group projects >25,000 onsite jobs plus additional indirect jobs, which signals very large scale construction, power, and network rollouts.

Getting to 10GW means thousands of GB200 class servers with very high power density, which in practice drives big needs for substation capacity, high voltage interconnects, liquid cooling, and water or dry cooler planning. The site mix across Texas, New Mexico, Ohio, and the Midwest spreads grid risk, shortens fiber routes to major regions, and lets them sequence power procurements across utilities.

Oracle’s pitch is cost per FLOP and fast delivery, so racking GB200 early in Abilene and overlapping construction across multiple sites reduces supply chain bottlenecks. SoftBank’s role focuses on standardized shells and energy expertise, which helps turn greenfield land into compute-ready pads that can accept accelerators as soon as they ship.
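A quick bit of arithmetic on the figures quoted in this section shows the implied spend per gigawatt. The dollar amounts are the stated lower bounds (">$400B", ">$300B") and the ~7GW is approximate, so treat the results as rough floors rather than precise costs. `billions_per_gw` is just an illustrative helper.

```python
def billions_per_gw(dollars_billion: float, gigawatts: float) -> float:
    """Implied spend in $B per GW of planned capacity."""
    return dollars_billion / gigawatts


planned = billions_per_gw(400, 7)        # ~7GW planned, >$400B committed
target = billions_per_gw(500, 10)        # $500B and 10GW target
oracle_deal = billions_per_gw(300, 4.5)  # Oracle agreement: up to 4.5GW, >$300B

print(round(planned, 1), round(target, 1), round(oracle_deal, 1))  # 57.1 50.0 66.7

# Gap between today's plan and the stated target.
print(10 - 7, "GW and", 500 - 400, "$B still to go")  # 3 GW and 100 $B still to go
```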

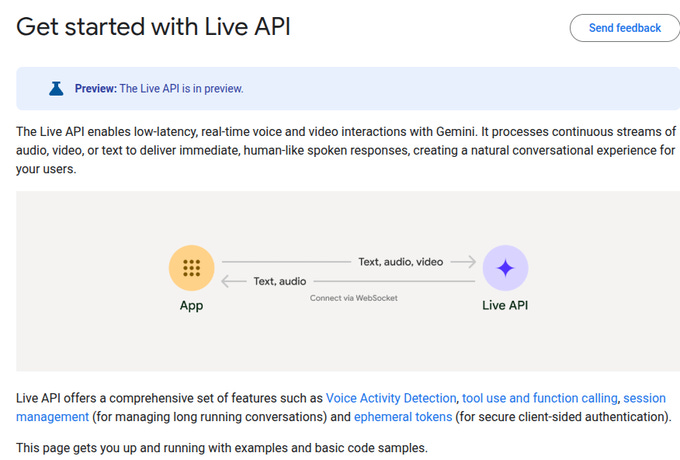

🗣️ Google announced an update to the Gemini Live API with a new native audio model that makes voice agents more reliable and natural

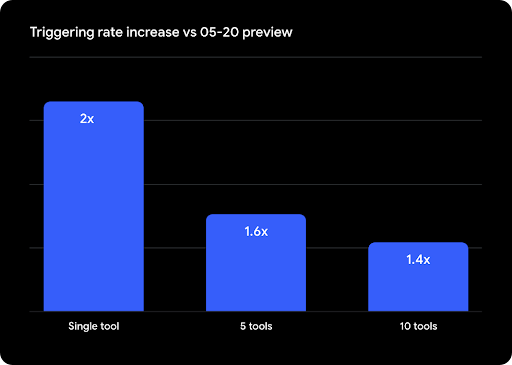

The update centers on two things: more robust function calling, so agents pick the right tool and avoid calling the wrong one, and more natural conversations, so the model waits, resumes, and ignores background talk like a real assistant.

It also boosts proactive audio so the agent handles interruptions, pauses, and side chatter gracefully. There are two ways the system makes speech.

The native audio path uses a single model that takes your voice as input and gives back voice output directly, which makes the timing and tone sound more human-like. The half-cascade path first takes in audio with a native model, then converts the model’s text output back into speech using text-to-speech, which is a bit less natural but more reliable for production apps.

The supported models include gemini-2.5-flash native audio variants for native audio, and gemini-live-2.5-flash-preview and gemini-2.0-flash-live-001 for half-cascade. Google says thinking support is rolling out soon so developers can set a thinking budget for harder questions, matching the reasoning features in Gemini 2.5 families.

Apps can connect client-to-server over WebSockets for the best streaming latency, or server-to-server if routing through a backend helps with control or compliance. For client connections, the recommended auth is ephemeral tokens, which limits exposure of long-lived keys in browsers or mobile apps. Voice Activity Detection lets the model auto-detect when to listen and when to speak, which reduces barge-in bugs and awkward silences.
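To give a feel for what Voice Activity Detection does, here is a toy energy-based detector. This is not how Gemini Live implements VAD (that runs inside Google's service and is far more sophisticated). The function names, the `threshold`, and the `hangover` parameter are all assumptions made for illustration.

```python
import math
from typing import List, Sequence


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame (samples in [-1, 1])."""
    if not frame:
        return 0.0
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def detect_speech(
    frames: Sequence[Sequence[float]], threshold: float = 0.1, hangover: int = 2
) -> List[bool]:
    """Per-frame flag: True while speech is considered active.

    Speech stays active until `hangover` consecutive quiet frames are seen,
    so a brief pause does not count as the end of a turn.
    """
    active = []
    quiet_run = hangover  # start in the silent state
    for frame in frames:
        if frame_rms(frame) >= threshold:
            quiet_run = 0
        else:
            quiet_run += 1
        active.append(quiet_run < hangover)
    return active


silence, speech = [0.0] * 4, [0.5] * 4
print(detect_speech([silence, speech, silence, silence, speech]))
# [False, True, True, False, True]
```

The hangover window is the piece that addresses awkward silences: too short and the agent barges in on every pause, too long and it feels slow to respond.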

---

What exactly is the Gemini Live API?

Gemini Live API is basically Google’s real-time interface for voice and video conversations with its Gemini models. Instead of just typing text and waiting for a reply, the Live API lets apps stream continuous audio or video in and get immediate spoken responses out. It’s built for making voice agents feel natural, with features like detecting when someone starts or stops talking, handling background noise, managing sessions, and even letting the model call external tools in real time.

https://ai.google.dev/gemini-api/docs/live

👨🔧 A solid “Survey of Reinforcement Learning for Large Reasoning Models”

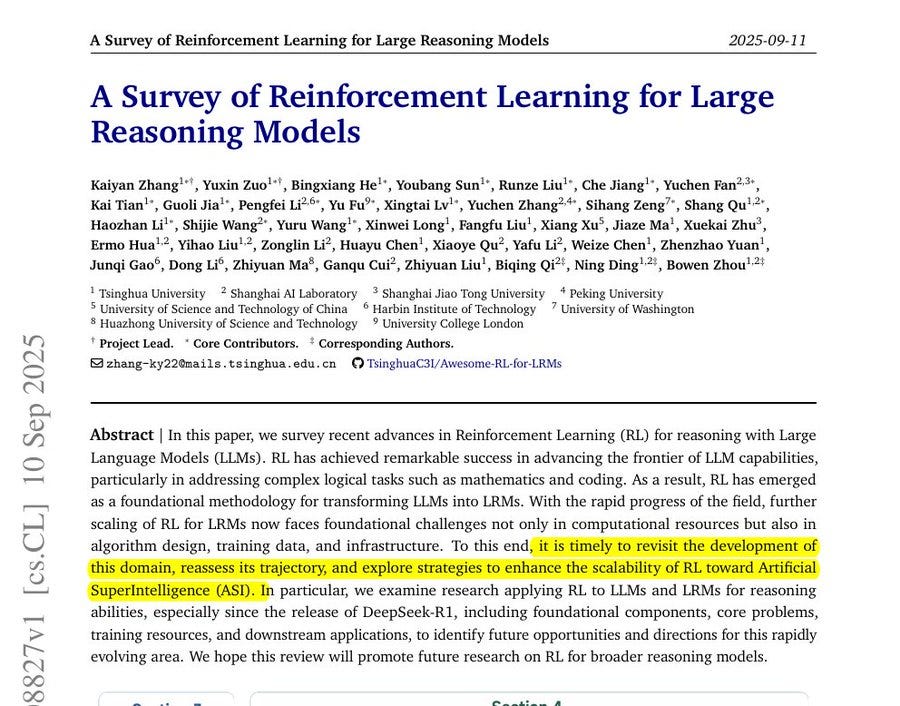

The survey runs 114 pages. It covers foundational concepts, traces the development of the domain, reassesses its trajectory, and explores strategies to scale RL toward Artificial SuperIntelligence (ASI). Progress rests on 3 pillars: reward design, learning algorithms, and data plus infrastructure.

Reward design anchors training by turning correctness checks, program outputs, unit tests, or human preferences into scores that steer updates. For open tasks like writing or explaining steps, it covers preference models, pairwise ranking, debate critics, and process rewards that grade intermediate steps to curb reward hacking.
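Here is a minimal sketch of two of those reward styles: a unit-test reward for code and a process reward over intermediate steps. The function names are hypothetical, and taking the minimum step score is just one common aggregation choice, not something the survey prescribes.

```python
from typing import Callable, Sequence, Tuple


def unit_test_reward(
    candidate: Callable[[int], int], cases: Sequence[Tuple[int, int]]
) -> float:
    """Fraction of (input, expected) cases the candidate passes; crashes count as failures."""
    if not cases:
        return 0.0
    passed = 0
    for arg, expected in cases:
        try:
            if candidate(arg) == expected:
                passed += 1
        except Exception:
            pass  # a crashing solution simply earns no credit for this case
    return passed / len(cases)


def process_reward(step_scores: Sequence[float]) -> float:
    """Aggregate per-step scores with min, so one bad step caps the whole trace."""
    return min(step_scores) if step_scores else 0.0


cases = [(2, 4), (3, 9), (-1, 1)]
print(unit_test_reward(lambda x: x * x, cases))  # 1.0
print(process_reward([0.9, 0.2, 0.95]))          # 0.2
```

Grading the steps, not just the final answer, is what makes it harder for a policy to stumble into a right answer through broken reasoning and still get paid.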

On algorithms, policy gradient methods such as Proximal Policy Optimization update token policies from whole trajectory rewards, often with self play and curriculum to lengthen chains of thought. Scaling needs fast simulators, synthetic task generators, and guardrails that block shortcuts like answer copying.
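For readers who want to see the core of Proximal Policy Optimization, here is the standard clipped surrogate objective in plain Python. It is a sketch over per-token log-probabilities and precomputed advantages. A real trainer would use a tensor library and add value and KL terms, which are omitted here.

```python
import math
from typing import Sequence


def ppo_clip_objective(
    new_logps: Sequence[float],
    old_logps: Sequence[float],
    advantages: Sequence[float],
    eps: float = 0.2,
) -> float:
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A), with r = exp(new_logp - old_logp).

    This is the quantity PPO maximizes; clipping removes the incentive to move
    the policy far from the one that generated the samples.
    """
    terms = []
    for new_lp, old_lp, adv in zip(new_logps, old_logps, advantages):
        ratio = math.exp(new_lp - old_lp)
        clipped = max(1.0 - eps, min(1.0 + eps, ratio))
        terms.append(min(ratio * adv, clipped * adv))
    return sum(terms) / len(terms)


# Ratio of 2 with a positive advantage: the gain is capped at (1 + eps) * A.
print(round(ppo_clip_objective([math.log(2.0)], [0.0], [1.0]), 6))  # 1.2
```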

Evaluation goes beyond accuracy: the survey stresses judging reasoning traces, robustness to small prompt edits, and generalization. Overall, reinforcement learning turns chatty models into agents that plan, call tools, verify work, and learn from outcomes, but gains stay fragile without solid rewards and validation gates.

The figure lays out a roadmap of how reinforcement learning connects to large reasoning models, organized around 3 core building blocks.

Reward design sets up the signals that guide learning, like checking answers or scoring intermediate steps. Policy optimization adjusts how the model updates itself based on those signals.

Sampling strategies decide which examples or situations to focus on during training. It also points out open problems. For example, whether a model should rely more on its prior knowledge or on reinforcement learning, how to balance learning recipes, and how to avoid models only memorizing instead of generalizing.

The figure highlights the role of training resources. Some come from static datasets, like math or code problems, while others come from dynamic environments, such as games or rule-based tasks. Infrastructure and frameworks help make this scalable.

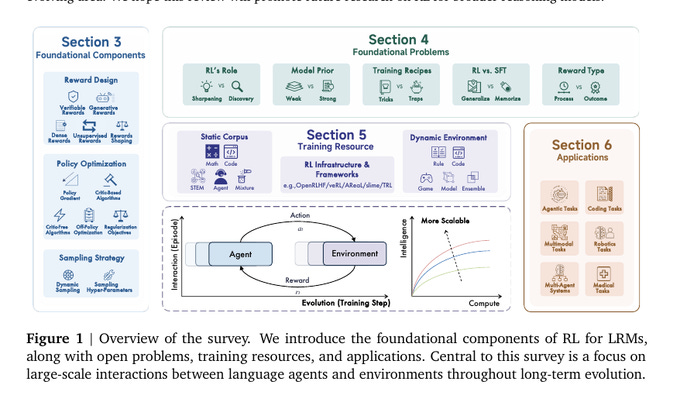

This chart shows how reinforcement learning methods for language models have evolved over time and how they affect task-solving ability. In 2022, reinforcement learning from human feedback was the main method. This trained models like GPT-3.5 and GPT-4 by rewarding them based on human judgments, but it was heavily dependent on human effort.

In 2023, direct preference optimization came in. Instead of building reward models, it directly optimized models like Llama 3 and Qwen 2.5 from pairwise comparisons of outputs, making it more efficient and less reliant on reward engineering.
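The direct preference optimization objective for a single preference pair fits in a few lines. This sketch uses the standard DPO loss on summed sequence log-probabilities. The argument names are my own, and `beta=0.1` is only an illustrative default.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """-log(sigmoid(beta * margin)) for one (chosen, rejected) pair.

    The margin measures how much more the policy prefers the chosen response
    over the rejected one, relative to a frozen reference model.
    """
    margin = (policy_chosen_logp - ref_chosen_logp) - (
        policy_rejected_logp - ref_rejected_logp
    )
    # -log(sigmoid(x)) == log(1 + exp(-x))
    return math.log1p(math.exp(-beta * margin))


# Policy identical to the reference: margin is 0, loss is log(2).
print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931
# Policy now favors the chosen answer more than the reference does: loss drops.
print(dpo_loss(-8.0, -14.0, -10.0, -12.0) < math.log(2))  # True
```

No separate reward model appears anywhere in the loss, which is the efficiency gain described above.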

By 2025, reinforcement learning with verifiable rewards is seen as the next step. Models like o1 and DeepSeek-R1 use rule-based checks, such as correctness in math or code, to give more reliable signals than subjective human ratings.

The chart also points toward open-ended reinforcement learning as the future direction. That means models could learn from broader, less predefined environments, potentially giving them much higher problem-solving capacity but also making training far more difficult to control.

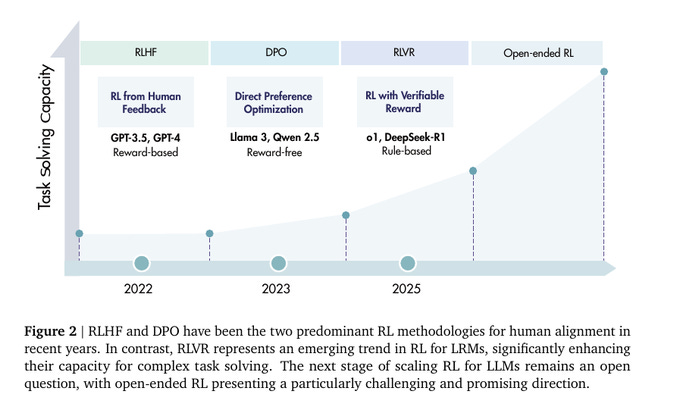

This diagram shows how reinforcement learning fits with language models by comparing the classic agent-environment setup to how a model works with tokens. On the left, the standard loop is shown. An agent takes an action, the environment reacts by giving a new state and a reward, and this cycle repeats over time. This is the usual way reinforcement learning problems are framed.

On the right, the same idea is applied to language models. Here, the model itself acts as the agent. The state is the sequence of input tokens it has already seen. The action is the next token it generates. After producing a response, a reward function evaluates it, often at the full sequence level, and sends that back to guide learning. So the diagram is showing that training a language model with reinforcement learning is like treating text generation as a sequence of decisions, where each token is an action and the overall response gets judged with a reward.
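That mapping can be written as a tiny rollout loop. Everything here is a toy: `rollout`, the rule-based `toy_policy`, and `toy_reward` are hypothetical stand-ins for a real language model and reward function.

```python
from typing import Callable, List, Sequence, Tuple

Policy = Callable[[Sequence[str]], str]                    # state -> next token (action)
RewardFn = Callable[[Sequence[str], Sequence[str]], float]  # (prompt, response) -> reward


def rollout(
    prompt: Sequence[str],
    policy: Policy,
    reward_fn: RewardFn,
    max_new_tokens: int = 8,
    eos: str = "<eos>",
) -> Tuple[List[str], float]:
    """One episode: state = tokens so far, action = next token, reward at the end."""
    state = list(prompt)
    actions: List[str] = []
    for _ in range(max_new_tokens):
        action = policy(state)  # the model picks the next token
        actions.append(action)
        state.append(action)    # the new state is the old state plus the action
        if action == eos:
            break
    return actions, reward_fn(prompt, actions)  # sequence-level reward


def toy_policy(state: Sequence[str]) -> str:
    return "4" if state[-1] == "=" else "<eos>"


def toy_reward(prompt: Sequence[str], response: Sequence[str]) -> float:
    return 1.0 if "4" in response else 0.0


print(rollout(["2", "+", "2", "="], toy_policy, toy_reward))
# (['4', '<eos>'], 1.0)
```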

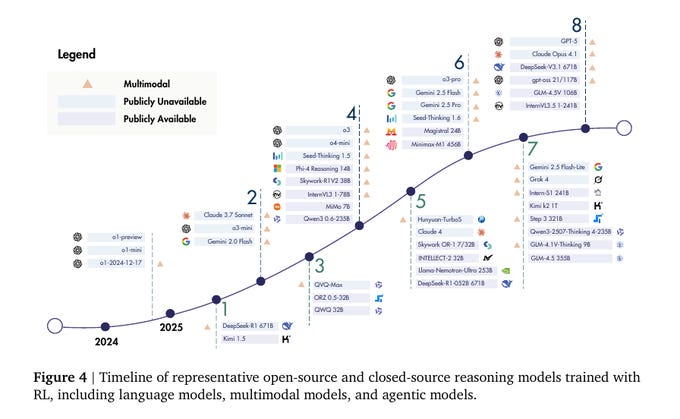

This is a timeline of reasoning models trained with reinforcement learning, marking when they appeared and whether they are open or closed source. It shows a steady rise in capability from 2024 to 2025. At each step, new models are added, ranging from language-only models to multimodal ones that handle text and images, and even agentic ones that can act in environments.

Datasets for reinforcement learning in reasoning are improving and broadening. Instead of just relying on huge piles of raw text, researchers are now creating higher-quality datasets.

They do this by using techniques like distillation, filtering, and automated evaluation, so the training signals are more reliable and effective. The goal is to make sure the process itself is consistent and trustworthy, not just the final answer.

The range of data is also expanding. It’s no longer limited to math, code, or other STEM fields. It now includes data for search, tool use, and agent-style tasks where the model has to plan, act, and then verify its work step by step.

That’s a wrap for today, see you all tomorrow.