"AlignVLM: Bridging Vision and Language Latent Spaces for Multimodal Understanding"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2502.01341

The challenge for Vision-Language Models (VLMs) is effectively aligning visual and textual information because current methods produce noisy visual features, leading to misalignment. This paper introduces AlignVLM to address this issue.

This paper proposes AlignVLM, a novel method that guides visual features into the LLM's language space by mapping them to a weighted average of LLM text embeddings.

-----

📌 AlignVLM innovatively uses LLM's own vocabulary embedding space as a semantic anchor for visual features. This forces vision and language modalities into a shared, interpretable space, enhancing multimodal understanding without extensive parameter increase.

📌 By mapping visual features to a probability distribution over text embeddings, AlignVLM implicitly performs a soft tokenization of visual input. This method bypasses the limitations of discrete visual tokens and better preserves continuous visual information.

📌 The AlignModule leverages pre-trained linguistic priors by initializing its second linear layer with the LLM's language model head. This smart initialization efficiently transfers semantic knowledge from text to vision, improving alignment.

----------

Methods Explored in this Paper 🔧:

→ The paper uses a Vision Encoder to process document images into visual features. This encoder tiles high-resolution images and extracts patch-level features using SigLip-400M.

→ The core innovation is the AlignModule. This module takes visual features and projects them into the LLM's vocabulary embedding space. It uses two linear layers and a softmax function to create a probability distribution over the LLM's tokens.

→ The AlignModule then computes a weighted average of the LLM's text embeddings based on this probability distribution. This generates aligned visual features that are inherently within the LLM's semantic understanding.

→ Finally, these aligned visual features are concatenated with text embeddings and fed into a LLM (Llama 3 family) for document understanding tasks.

-----

Key Insights 💡:

→ Mapping visual features to a probability distribution over LLM text embeddings ensures that visual inputs reside within the LLM's interpretable semantic space. This method leverages the LLM's pre-existing linguistic knowledge to guide visual feature alignment.

→ This approach, embodied in AlignModule, avoids direct, unconstrained projection of visual features, which often leads to out-of-distribution or noisy inputs.

→ By constraining visual features to the convex hull of text embeddings, AlignVLM enhances vision-language alignment and improves robustness, especially in document understanding tasks.

-----

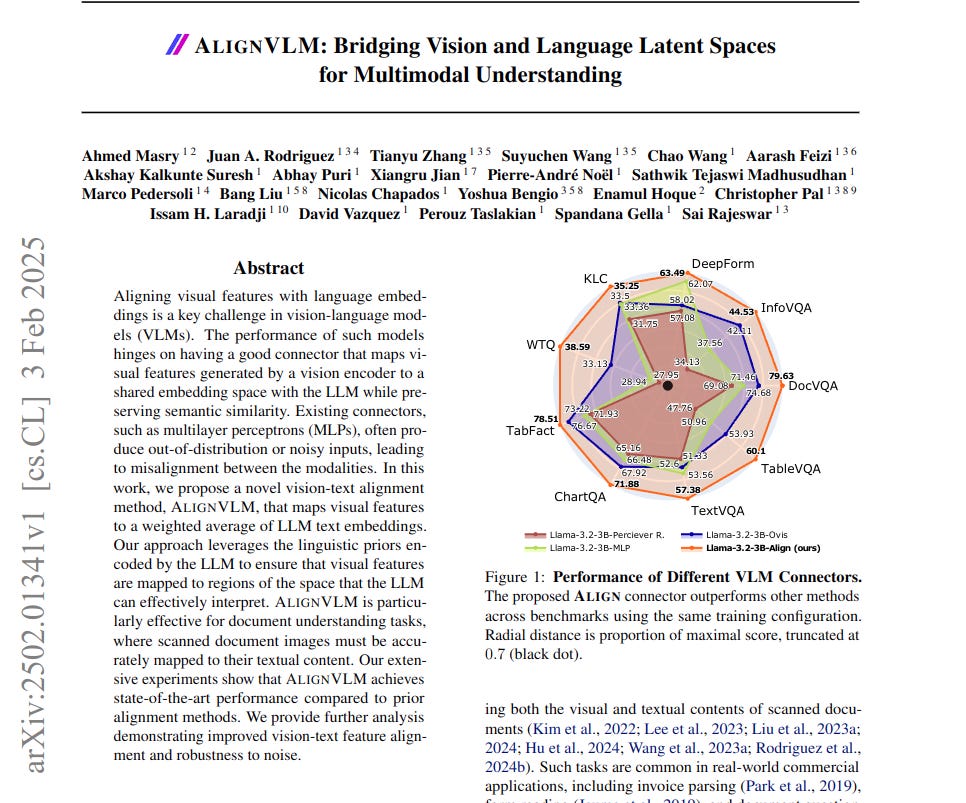

Results 📊:

→ AlignVLM outperforms Base VLMs by up to 9.22% on document understanding benchmarks.

→ AlignVLM achieves 58.81% average score, surpassing MLP (53.06%), Perceiver Resampler (50.68%), and Ovis (54.72%) connectors.

→ Robustness to noise is significantly improved. AlignVLM experiences only a 1.67% performance drop with added noise, compared to a 25.54% drop for MLP.