Amazon takes on OpenAI and Meta with Nova model family

Latest AI innovations: Nova vs GPT-4o, Genie 2 world model, photo-to-3D spaces, and an evolutionary training breakthrough by Sakana AI.

In today’s Edition (4-Dec-2024):

🔮 Amazon takes on OpenAI and Meta with Nova model family

🌎 Google just introduced Genie 2, a breakthrough foundation world model

🚁 ‘Godmother of AI’ Fei-Fei Li’s startup World Labs just revealed an AI that transforms static images into walkable 3D spaces, letting you step inside any photo and walk around

🔬 Sakana AI introduces CycleQD, a framework that lets 8B models match the performance of much larger ones using an evolutionary training method that outperforms traditional fine-tuning by 5.4 percentage points

Byte-Size Brief:

OpenAI plans 12 days of livestreams, expected to include Sora and new reasoning capabilities.

AWS's Project Rainier cluster runs on custom Trainium2 chips with 2.8 TB/s of memory bandwidth each.

LLMs solve math through patterns, not computational reasoning.

ElevenLabs releases multilingual voice AI with minimal latency.

New browser Dia integrates cursor-based AI for automated web navigation.

Hailuo converts static images to fluid animations automatically.

Google's GenCast outperforms a leading traditional forecasting system on 97% of tested targets, running on a single TPU.

🔮 Amazon takes on OpenAI and Meta with Nova model family

The Brief

Amazon launched the Nova model family. On the Artificial Analysis Quality Index, Nova Pro outperforms GPT-4o at roughly one-third of the cost: only $0.80 per 1M input tokens and $3.20 per 1M output tokens.
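To make the pricing concrete, here is a small cost calculator for an example workload. The Nova Pro prices are the ones quoted above; the GPT-4o prices ($2.50/$10.00 per 1M tokens, its list price around the time of this launch) and the workload size are assumptions for illustration, so check current price sheets before relying on the numbers.

```python
# Nova Pro list prices quoted above, in USD per 1M tokens.
NOVA_PRO = {"input": 0.80, "output": 3.20}
# Assumption: GPT-4o list prices at the time of the Nova launch (USD per 1M tokens).
GPT_4O = {"input": 2.50, "output": 10.00}


def request_cost(prices, input_tokens, output_tokens):
    """Cost in USD for a workload, given per-1M-token input/output prices."""
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


# Hypothetical workload: 10M input tokens and 2M output tokens.
nova = request_cost(NOVA_PRO, 10_000_000, 2_000_000)
gpt = request_cost(GPT_4O, 10_000_000, 2_000_000)
print(f"Nova Pro: ${nova:.2f}, GPT-4o: ${gpt:.2f}, ratio: {nova / gpt:.2f}")
# prints "Nova Pro: $14.40, GPT-4o: $45.00, ratio: 0.32"
```

With this 5:1 input-to-output mix, Nova Pro costs about 0.32 of the GPT-4o bill, in line with the roughly one-third figure.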

The Details

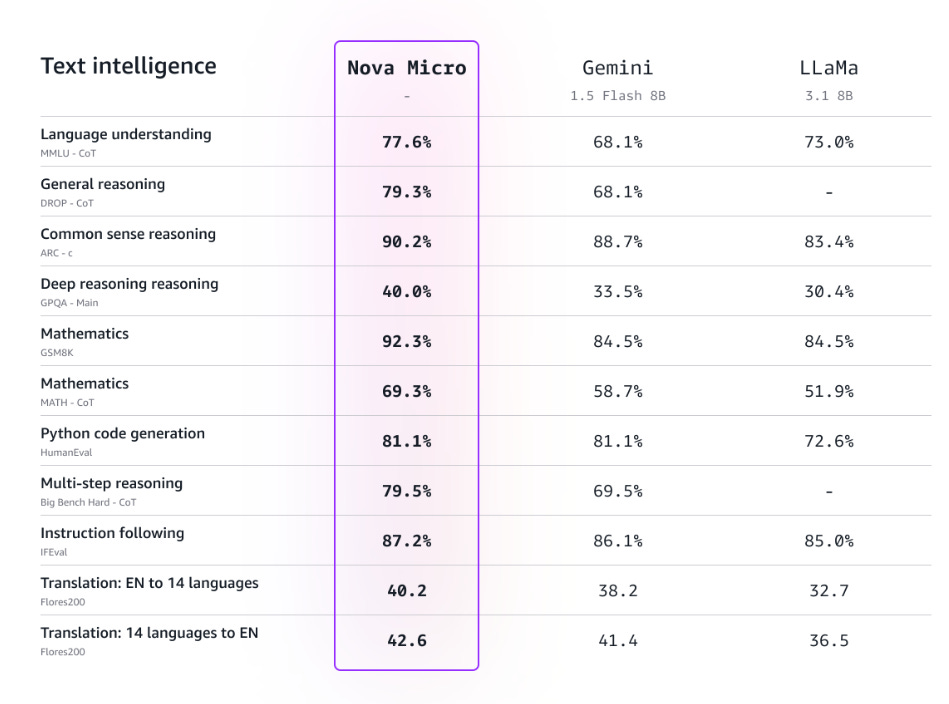

Amazon Nova Micro: A low-cost, text-only model optimized for minimal latency, with a 128K-token context window. Handles core NLP tasks such as summarization, translation, and basic mathematical reasoning.

Amazon Nova Lite: A low-cost multimodal model with a 300K-token context window that accepts image, video, and text input. It can process multiple images or up to 30 minutes of video in a single request, and maintains high accuracy for real-time interactions while keeping costs low through an optimized architecture.

Amazon Nova Pro: Also multimodal, with better accuracy and speed at a higher cost. Scores 75 on the Quality Index and has a 300K-token context window.

Amazon Nova Premier: The highest-tier multimodal model, suited to complex tasks and to creating custom models. It is expected to be available in Q1 2025.

Amazon Nova Canvas: Image generation model.

Amazon Nova Reel: Video generation model.

→ The three understanding models are Micro, Lite, and Pro. Micro reaches 210 tokens/second, and Pro costs $0.80/1M input and $3.20/1M output tokens.

→ The models are available through Amazon Bedrock and can be called directly with the Bedrock Runtime client.

→ The models also support custom fine-tuning, which allows customers to point the models to examples in their own proprietary data that have been labeled to boost accuracy.
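A minimal sketch of calling Nova Pro through the Bedrock Runtime Converse API with boto3. It assumes AWS credentials are configured, Nova model access is enabled in your account, and that `amazon.nova-pro-v1:0` is the right model ID for your region (some regions require a cross-region inference profile ID such as `us.amazon.nova-pro-v1:0` instead). The helper names `build_converse_request` and `ask_nova` are hypothetical, not part of any AWS SDK.

```python
# Assumption: verify the exact model ID or inference profile for your region.
MODEL_ID = "amazon.nova-pro-v1:0"


def build_converse_request(prompt, max_tokens=512, temperature=0.5):
    """Build keyword arguments for bedrock-runtime's converse() call."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask_nova(prompt, region="us-east-1"):
    """Send a single-turn prompt to Nova Pro and return the text reply."""
    import boto3  # imported lazily so the request builder works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(prompt))
    return response["output"]["message"]["content"][0]["text"]


# Usage (requires AWS credentials and Bedrock access):
# print(ask_nova("Summarize the Amazon Nova launch in one sentence."))
```

Using the Converse API rather than a model-specific request body keeps the same code usable if you later switch between Micro, Lite, and Pro by changing only `MODEL_ID`.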

The Impact

The new Nova models position AWS to compete for market leadership in both model capabilities and training infrastructure.

🌎 Google just introduced Genie 2, a breakthrough foundation world model

The Brief

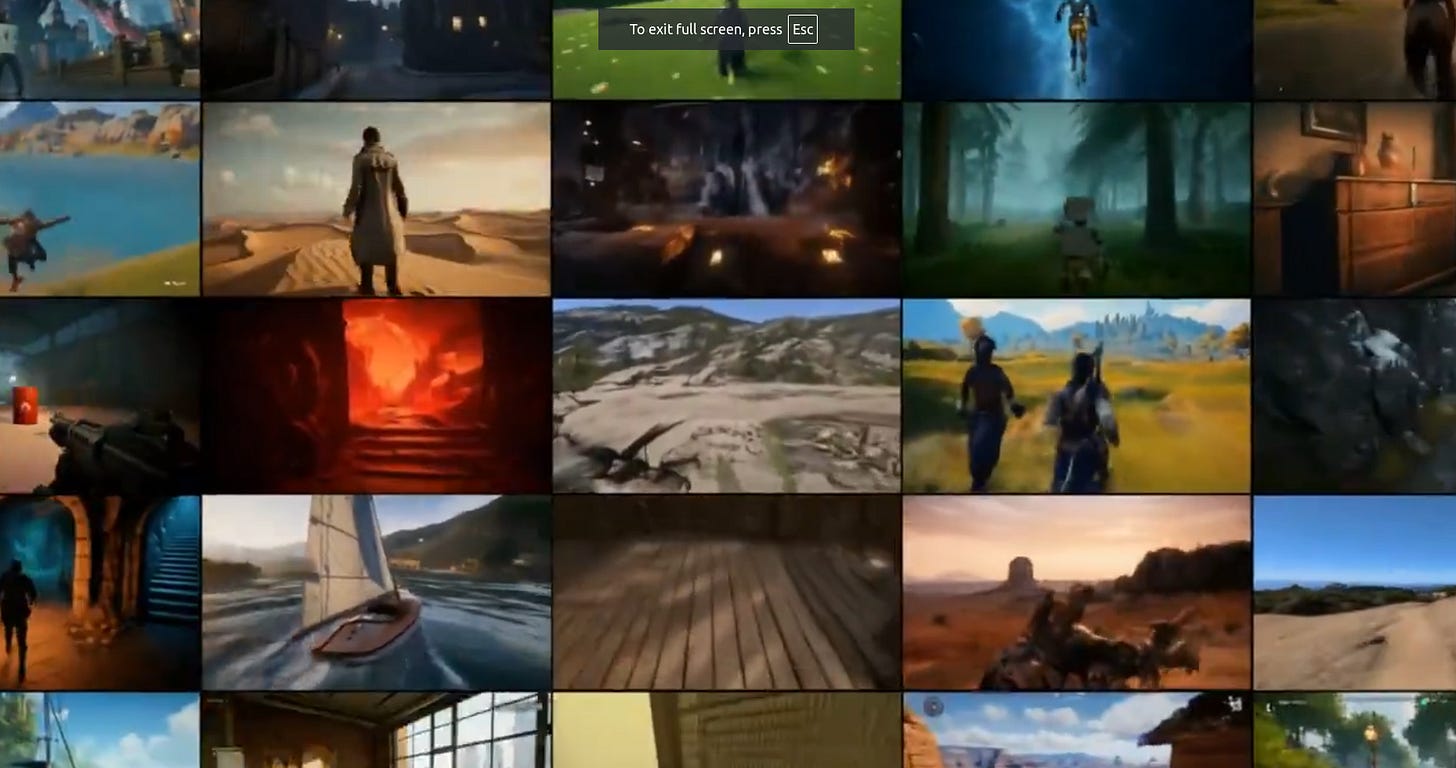

DeepMind unveils Genie 2, a foundation world model that generates an endless variety of playable 3D environments from a single image prompt. The model can create consistent worlds lasting up to 60 seconds, and both humans and AI agents can interact with them through keyboard and mouse controls.

The Details

→ For every example, the model is prompted with a single image generated by Imagen 3, Google’s state-of-the-art text-to-image model. This means anyone can describe a world they want in text, select their favorite rendering of that idea, and then step into and interact with that newly created world (or have an AI agent be trained or evaluated in it). At each step, a person or agent provides a keyboard and mouse action, and Genie 2 simulates the next observation.

→ The system is an autoregressive latent diffusion model. Video frames are first compressed into latent frames by an autoencoder, and a transformer-based dynamics model predicts the next latent frame from past frames and the current action. Classifier-free guidance is used to improve action controllability.

→ Genie 2 demonstrates emergent capabilities including complex physics modeling, character animations, NPC behaviors, and realistic lighting effects. The model maintains object permanence and generates consistent world states even for off-screen elements.

→ Integration with the SIMA agent enables natural-language-driven interaction in generated environments. The system also supports rapid prototyping of diverse scenarios from concept art and real-world images.

→ Unlike previous 2D-constrained world models, Genie 2 handles full 3D environments with multiple perspectives including first-person, isometric, and third-person views.
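Genie 2's code is not public, but the frame-by-frame loop with classifier-free guidance described above can be illustrated with a toy. In this hypothetical sketch, latent frames are short lists of floats, and two stub functions stand in for the transformer dynamics model: one prediction conditioned on the player's action and one without it. Classifier-free guidance combines them as `uncond + w * (cond - uncond)`, so a guidance weight `w > 1` exaggerates the effect of the action. In a real latent diffusion model, guidance is applied to the denoiser's output at every denoising step; here it is applied once per frame for simplicity.

```python
def cfg(uncond, cond, w):
    """Classifier-free guidance: move the prediction toward the action-conditioned one."""
    return [u + w * (c - u) for u, c in zip(uncond, cond)]


def dynamics_cond(history, action):
    """Hypothetical stub: the action shifts the most recent latent frame."""
    return [x + a for x, a in zip(history[-1], action)]


def dynamics_uncond(history):
    """Hypothetical stub: without an action, the scene stays the same."""
    return list(history[-1])


def rollout(first_latent, actions, w=2.0):
    """Autoregressive generation: each new latent frame is predicted from
    the frames generated so far plus the current keyboard/mouse action."""
    history = [first_latent]  # in Genie 2 this would come from the prompt image
    for action in actions:
        nxt = cfg(dynamics_uncond(history), dynamics_cond(history, action), w)
        history.append(nxt)
    return history


frames = rollout([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], w=2.0)
print(frames)  # [[0.0, 0.0], [2.0, 0.0], [2.0, 2.0]]
```

With `w = 1.0` the loop simply follows the conditioned predictions; larger weights make each action's effect more pronounced, which is the controllability benefit that guidance is meant to provide.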

The Impact

Creates an effectively unlimited supply of training environments for embodied AI systems, addressing a major bottleneck in developing more general AI capabilities.

🚁 ‘Godmother of AI’ Fei-Fei Li’s startup World Labs just revealed an AI that transforms static images into walkable 3D spaces, letting you step inside any photo and walk around

The Brief

World Labs launched an AI system that turns a single image into an explorable 3D world, rendered in real time in the browser, with the aim of transforming digital content creation.

The Details

→ The system generates complete 3D environments beyond the visible input image, maintaining geometric consistency and scene persistence during exploration. Users navigate freely using standard keyboard/mouse controls within browser environments.

→ Technical capabilities include real-time camera manipulation, depth mapping, and interactive effects like sonar, spotlight, and ripple. The platform features dolly zoom and depth-of-field controls for artistic expression.

→ Integration with text-to-image models enables creators to generate custom 3D worlds from text prompts. The system works seamlessly with existing creative tools including Midjourney, Runway, Suno, ElevenLabs, Blender, and CapCut.
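World Labs has not described how its camera effects are implemented, but the dolly zoom itself follows from basic pinhole-camera geometry: to keep a subject of width `W` filling the frame as the camera moves to distance `d`, the horizontal field of view must be `2 * atan(W / (2 * d))`. A minimal sketch, where the function name and the example values are illustrative:

```python
import math


def dolly_zoom_fov(subject_width, distance):
    """Horizontal field of view (degrees) that makes a subject of the given
    width exactly fill the frame at the given camera distance."""
    return math.degrees(2 * math.atan(subject_width / (2 * distance)))


# Dolly the camera back from 2 m to 8 m while keeping a 4 m-wide subject framed.
for d in (2.0, 4.0, 8.0):
    print(f"distance {d:.0f} m -> FOV {dolly_zoom_fov(4.0, d):.1f} deg")
# distance 2 m -> FOV 90.0 deg
# distance 4 m -> FOV 53.1 deg
# distance 8 m -> FOV 28.1 deg
```

Narrowing the field of view while dollying out (or widening it while dollying in) keeps the subject the same size while the background appears to compress or stretch, which is the classic "Vertigo" effect.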

The Impact

Moving from 2D image generation to 3D world generation enables persistent, geometrically accurate worlds for games, films, and simulations.

🔬 Sakana AI introduces CycleQD, a framework that lets 8B models match the performance of much larger ones using an evolutionary training method that outperforms traditional fine-tuning by 5.4 percentage points

The Brief

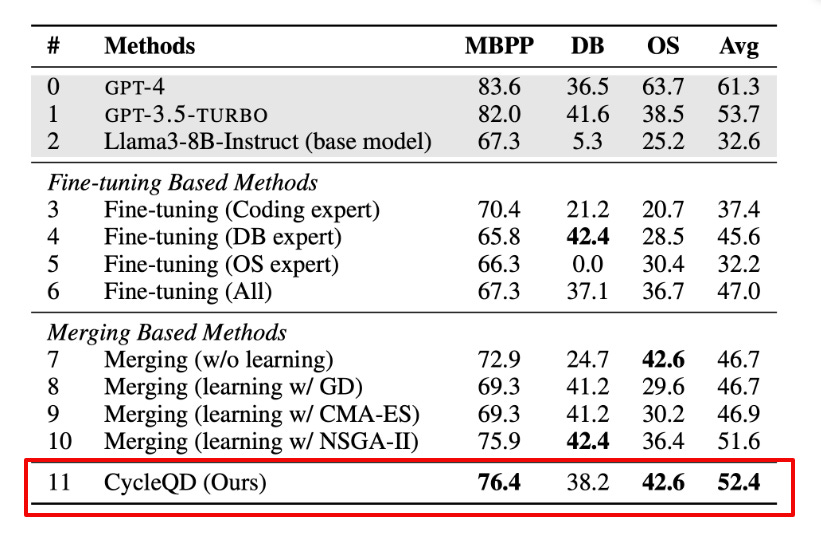

CycleQD is a framework that uses Quality Diversity (QD) methods to evolve swarms of specialized LLM agents. It produces a population of relatively small agents, each with only 8B parameters, that are highly capable on challenging agentic benchmarks, particularly computer science tasks.

The Details

→ The framework employs model merging for crossover and SVD-based mutation, creating diverse populations of specialized agents. Each agent occupies a unique capability niche, similar to ecological species specialization.

→ Performance testing on computer science tasks shows impressive results: 76.4% on MBPP (coding), 38.2% on DB (database), and 42.6% on OS (operating systems). The overall 52.4% average performance surpasses traditional fine-tuning (47.0%) and model merging (51.6%).

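→ CycleQD's key innovation is cycling the quality metric: each task takes a turn as the optimization target while the other tasks serve as behavior characteristics. This prevents any single skill from dominating and keeps multi-skill development balanced across the agent population.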
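The evolutionary loop described above can be sketched with toy weight matrices. This is a hypothetical illustration, not Sakana AI's implementation: each "model" is a single 4x4 matrix, experts are represented by task vectors (expert weights minus base weights), crossover randomly mixes two parents' task vectors, mutation rescales the singular values of the child's task vector, and the quality metric rotates through the three tasks each generation. The mixing and noise distributions are assumptions, and the MAP-Elites-style archive that keeps one elite per niche is omitted.

```python
import numpy as np

TASKS = ["coding", "database", "os"]  # the three skills benchmarked above


def crossover(base, parent_a, parent_b, rng):
    """Merge two parents by randomly weighting their task vectors (expert - base)."""
    alpha = rng.uniform(0.0, 1.0)
    return base + alpha * (parent_a - base) + (1.0 - alpha) * (parent_b - base)


def svd_mutation(base, child, rng, scale=0.1):
    """Perturb the child's task vector along its singular directions."""
    u, s, vh = np.linalg.svd(child - base, full_matrices=False)
    s_mutated = s * (1.0 + scale * rng.standard_normal(s.shape))
    return base + (u * s_mutated) @ vh  # u * s scales each column of u


def quality_and_bcs(generation):
    """Cycle the quality metric; the remaining tasks act as behavior characteristics."""
    k = generation % len(TASKS)
    return TASKS[k], [t for i, t in enumerate(TASKS) if i != k]


rng = np.random.default_rng(0)
base = rng.standard_normal((4, 4))
experts = [base + 0.1 * rng.standard_normal((4, 4)) for _ in TASKS]

for generation in range(4):
    quality, bcs = quality_and_bcs(generation)
    a, b = rng.choice(len(experts), size=2, replace=False)
    child = svd_mutation(base, crossover(base, experts[a], experts[b], rng), rng)
    print(f"gen {generation}: optimize {quality}, niches defined by {bcs}")
```

In a full QD loop, each child would then be scored on all three tasks and kept only if it beats the current elite in the niche defined by its behavior characteristics.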

The Impact

Creates smaller, specialized AI models that match larger models' capabilities while reducing computational demands.

Byte-Size Brief

OpenAI just announced '12 Days of OpenAI', starting tomorrow, December 5, 2024. The event consists of twelve consecutive days of livestreams in which new technologies, including the Sora video model and a new reasoning model, will be introduced or demonstrated. The initiative showcases OpenAI's continuous innovation amid a competitive AI landscape.

AWS unveiled Project Rainier, a massive AI compute cluster powered by hundreds of thousands of custom Trainium2 chips. Each Trainium2 chip packs 8 NeuronCores with specialized AI engines and 96GB of high-bandwidth memory (HBM), delivering 2.8 TB/s of bandwidth between memory and the processing cores to minimize latency.

A new paper shows that LLMs solve math by pattern matching rather than computing step by step, much like humans memorizing answers without understanding them. Experiments with Qwen2.5-72B show that intermediate steps are missing from the model's hidden states, and performance crashes when problems are slightly modified, indicating that LLMs don't truly reason step by step.

ElevenLabs unveiled Conversational AI, which lets your AI assistant speak 31 languages as fluently and quickly as a human. Developers can build talking AI agents in minutes, with low latency, full configurability, and seamless scalability. The system provides instant voice responses and natural turn-taking, and works with any LLM.

The Browser Company, the makers of Arc, just announced Dia, a new Chromium-based browser packed with AI features. Type a request and Dia's AI handles the web task for you. It eliminates tab-switching by embedding AI directly in the cursor and search bar, and automates emails, shopping, and document searches through natural language commands.

Hailuo AI introduced I2V-01-Live, a new AI video model that turns your artwork into smooth animations, no animation skills needed. The model specializes in natural movement generation, expression control, and style preservation.

Google just released GenCast, an AI weather forecasting system based on diffusion models. GenCast generates a forecast in 8 minutes on a single TPU, while traditional supercomputer-based systems take hours. It outperformed ECMWF's ENS, a leading operational forecasting system, on 97% of 1,320 evaluated combinations of weather variables and lead times.