Andrej Karpathy Releases NanoChat: The Minimal ChatGPT Clone - The Best $100 ChatGPT Alternative

Karpathy’s nanochat drops, ChatGPT to allow adult content, OpenAI builds its own chips, and a deep dive into GPU ops behind MoEs and GB200 NVL72.

Read time: 9 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (15-Oct-2025):

👨‍🔧 Andrej Karpathy Releases nanochat, a Minimal ChatGPT Clone

🎭 Sam Altman announced ChatGPT will relax some mental-health guardrails and allow erotica for verified adults by Dec-25.

📡 Nvidia and AMD aren’t enough, OpenAI is designing its own chips now.

🛠️ Tutorial: What ops do GPUs execute when training MoEs, and how does that relate to GB200 NVL72? - SemiAnalysis explains

👨‍🔧 Andrej Karpathy Releases nanochat, a Minimal ChatGPT Clone

nanochat covers tokenizer training, pretraining, midtraining, supervised finetuning, optional reinforcement learning, evaluation, and an inference server, so the flow runs end to end. The tokenizer is a new Rust byte-pair-encoding (BPE) implementation that the scripts train on the corpus shards, which keeps the pipeline fast and consistent.

Pretraining runs on FineWeb to learn general text patterns, while a composite CORE score tracks capability across simple proxy checks during the run. Midtraining adds user-assistant chats from SmolTalk, multiple-choice questions, and tool traces, which steers the model toward dialog and tool use.

Supervised finetuning tightens instruction following, and the sample report shows MMLU ~31%, ARC Easy ~35% to 39%, HumanEval ~7% to 9%, and GSM8K ~3% to 5% at the $100 tier. Optional reinforcement learning uses GRPO (group relative policy optimization), which compares several sampled answers per problem and nudges weights toward the strongest, pushing GSM8K to ~7% in the demo table.
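To make the "compare several sampled answers" idea concrete, here is a minimal sketch of the group-relative scoring step. It assumes one common formulation (subtract the group mean, divide by the group standard deviation); nanochat's own RL script may use a simpler variant, and the reward values and function name below are hypothetical.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-6):
    """Score each sampled answer relative to the other answers for the same problem."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps avoids division by zero when every sample got the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]

# Hypothetical rewards for 4 sampled answers to one math problem:
# 1.0 = correct final answer, 0.0 = incorrect.
rewards = [0.0, 1.0, 0.0, 0.0]
advantages = group_relative_advantages(rewards)

# The correct sample gets a positive advantage, the rest get negative ones,
# so a policy-gradient update pushes probability toward the correct answer.
for r, a in zip(rewards, advantages):
    print(f"reward={r:.1f} advantage={a:+.3f}")
```

When every sample in a group earns the same reward, all advantages are zero and that problem contributes no update.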

Inference uses a key-value (KV) cache for fast prefill and decode, supports simple batching, and exposes lightweight tool use with a sandboxed Python interpreter. Each run writes a single report.md with metrics, benchmark snapshots, code stats, and a small ChatCORE sanity check so forks can compare apples to apples.
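For readers new to KV caching, the sketch below shows the idea for a single attention head in plain Python. It is illustrative only and is not nanochat's engine code; the class and function names are made up for this example. Prefill stores keys and values for the prompt once, and each decode step appends one new pair instead of recomputing the whole history.

```python
import math

class KVCache:
    """Stores the keys and values of all tokens seen so far (one head)."""
    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, k, v):
        self.keys.append(k)
        self.values.append(v)

def attend(q, keys, values):
    """Scaled dot-product attention of one query over the cached keys/values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    m = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]

def decode_step(cache, q, k, v):
    """Add the new token's key/value to the cache, then attend over everything."""
    cache.append(k, v)
    return attend(q, cache.keys, cache.values)

cache = KVCache()
# Prefill: cache keys/values for a 2-token prompt.
cache.append([1.0, 0.0], [1.0, 2.0])
cache.append([0.0, 1.0], [3.0, 4.0])
# Decode: only the new token's q, k, v are computed at this step.
out = decode_step(cache, q=[1.0, 1.0], k=[1.0, 1.0], v=[5.0, 6.0])
print(len(cache.keys), out)
```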

Scaling is mostly a depth knob. For example, depth 26 trains in ~12 hours for roughly $300 and edges past a GPT-2 grade CORE score, while ~41.6 hours lands around $1000 for noticeably cleaner behavior. Memory is managed by lowering device_batch_size until the model fits and letting gradient accumulation preserve the effective batch size; a single-GPU setup works with the same code at ~8x the wall time. A longer ~24-hour depth 30 run reaches about 40% MMLU, 70% ARC Easy, and 20% GSM8K, at roughly GPT-3 Small 125M compute and about 0.1% of GPT-3 training FLOPs.
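The memory trade-off is simple arithmetic, sketched below. Only device_batch_size comes from the text above; the other parameter names and all the numbers are assumptions chosen for illustration, not values read from the nanochat config.

```python
def grad_accum_steps(total_batch_size, device_batch_size, seq_len, num_gpus):
    """Micro-steps to accumulate so the tokens per optimizer step stay fixed."""
    tokens_per_micro_step = device_batch_size * seq_len * num_gpus
    if total_batch_size % tokens_per_micro_step != 0:
        raise ValueError("total_batch_size must be a multiple of tokens per micro-step")
    return total_batch_size // tokens_per_micro_step

# Assumed target: 524,288 tokens per optimizer step at sequence length 2048.
TOTAL_BATCH_SIZE = 524_288
SEQ_LEN = 2048

for num_gpus, device_batch_size in [(8, 32), (8, 16), (1, 16)]:
    steps = grad_accum_steps(TOTAL_BATCH_SIZE, device_batch_size, SEQ_LEN, num_gpus)
    print(f"gpus={num_gpus} device_batch_size={device_batch_size} accum_steps={steps}")
```

Halving device_batch_size doubles the accumulation steps, and going from 8 GPUs to 1 at the same device_batch_size multiplies them by 8, which matches the ~8x wall time quoted above.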

🎭 Sam Altman just announced ChatGPT will relax some mental-health guardrails and allow erotica for verified adults by Dec-25.

Sam Altman says ChatGPT will soon allow erotica for adult users.

It will also offer a toggle for more human-like personalities that can act like a friend and use lots of emoji. Altman seems to be celebrating early success, saying OpenAI has “been able to lessen the major mental health problems” related to ChatGPT.

The announcement is a notable pivot from OpenAI’s months-long effort to address the concerning relationships that some mentally unstable users have developed with ChatGPT.

OpenAI says earlier policies were “pretty restrictive” around mental-health topics, and claims it has reduced serious failure risks, so it will ease refusals while keeping crisis protections. The new update connects to an age-gating system that separates minors from adults under a “treat adults like adults” rule, while blocking illegal or exploitative content.

Letting chatbots take part in romantic or sexual role play has worked well as an engagement tactic for other AI chatbot companies, and OpenAI is feeling the heat to expand its audience. Even with 800 million weekly users, the company is in a tight race with Google and Meta to create AI products that reach the masses.

The change follows pressure over misuse on other AI platforms, including incidents of non-consensual intimate image generation, which has pushed the industry toward stricter identity verification and moderation. Past ChatGPT versions showed sycophantic or uncritical behavior, especially around mental-health topics, so OpenAI kept evaluation and red-teaming tight to prevent harm. Now it is going for a controlled expansion of adult freedom.

📡 Nvidia and AMD aren’t enough, OpenAI is designing its own chips now.

The plan is for OpenAI and Broadcom to co-design a programmable accelerator and the surrounding racks so that the silicon, the memory, the compiler, and the network all match how modern transformer models actually run. OpenAI designs the ASIC and racks; Broadcom develops and deploys them at scale, using its Ethernet gear for scale-out.

The first racks are targeted to start shipping in 2H-2026, with build-out through 2029 totaling about 10GW (here, 10GW means aggregate data center power). OpenAI has also locked in 6GW with AMD and received a warrant for up to 160M AMD shares at $0.01 per share, tied to deployment milestones.

In Sep-25, OpenAI and Nvidia signed a letter of intent for at least 10GW of Nvidia systems, with a planned $100B Nvidia investment as rollouts happen. When OpenAI talks about “embedding what we’ve learned directly into the hardware”, it means the micro-architecture, data formats, memory hierarchy, and compiler are shaped by transformer workloads, not that model weights are fixed in transistors.

💻 Which direction they may be heading

Transformers spend most of their time in a few kernels, especially matrix multiplies and attention. “Embedding the algorithm in silicon” means adding compute units, instructions, and fused datapaths that execute those kernels with fewer memory trips and at lower precision where accuracy holds.

An OpenAI‑designed accelerator can pick the precisions and fusions that match its models and compiler. The chip stays fully programmable, new models load as weights into memory, the speedups come from the hardware’s built‑in assumptions about the workload.

Foundry, packaging, and memory choices are undisclosed, and those will drive cost, yield, and timing more than any headline. If OpenAI can build really good software tools to manage how its new custom chips (the ASICs) run programs, those chips could do more work per unit of power, that is, deliver better “perf per watt”.

Right now, Nvidia’s big advantage isn’t just its hardware. It’s the CUDA software ecosystem, a full platform that developers already know how to use for training AI models. If OpenAI wants to move away from Nvidia chips, it must create its own version of that ecosystem: a strong compiler (which turns AI code into instructions the chip can run) and a runtime (which manages the chip’s operations during training or inference).

Broadcom already makes chips for Google’s TPU program, so it knows custom AI silicon at scale. OpenAI is basically hiring Broadcom to build exactly what it needs instead of buying whatever Nvidia sells.

🛠️ Tutorial: What ops do GPUs execute when training MoEs, and how does that relate to GB200 NVL72? - SemiAnalysis explains

MoE training has 3 heavy steps: sending tokens to their chosen experts, pulling the results back, and syncing gradients. GB200 NVL72 speeds this up by keeping 72 GPUs on one high-bandwidth fabric.

GB200 NVL72 is a rack-scale system from NVIDIA that links 72 Blackwell GPUs and 36 Grace CPUs via a massive NVLink fabric to act like one unified GPU.

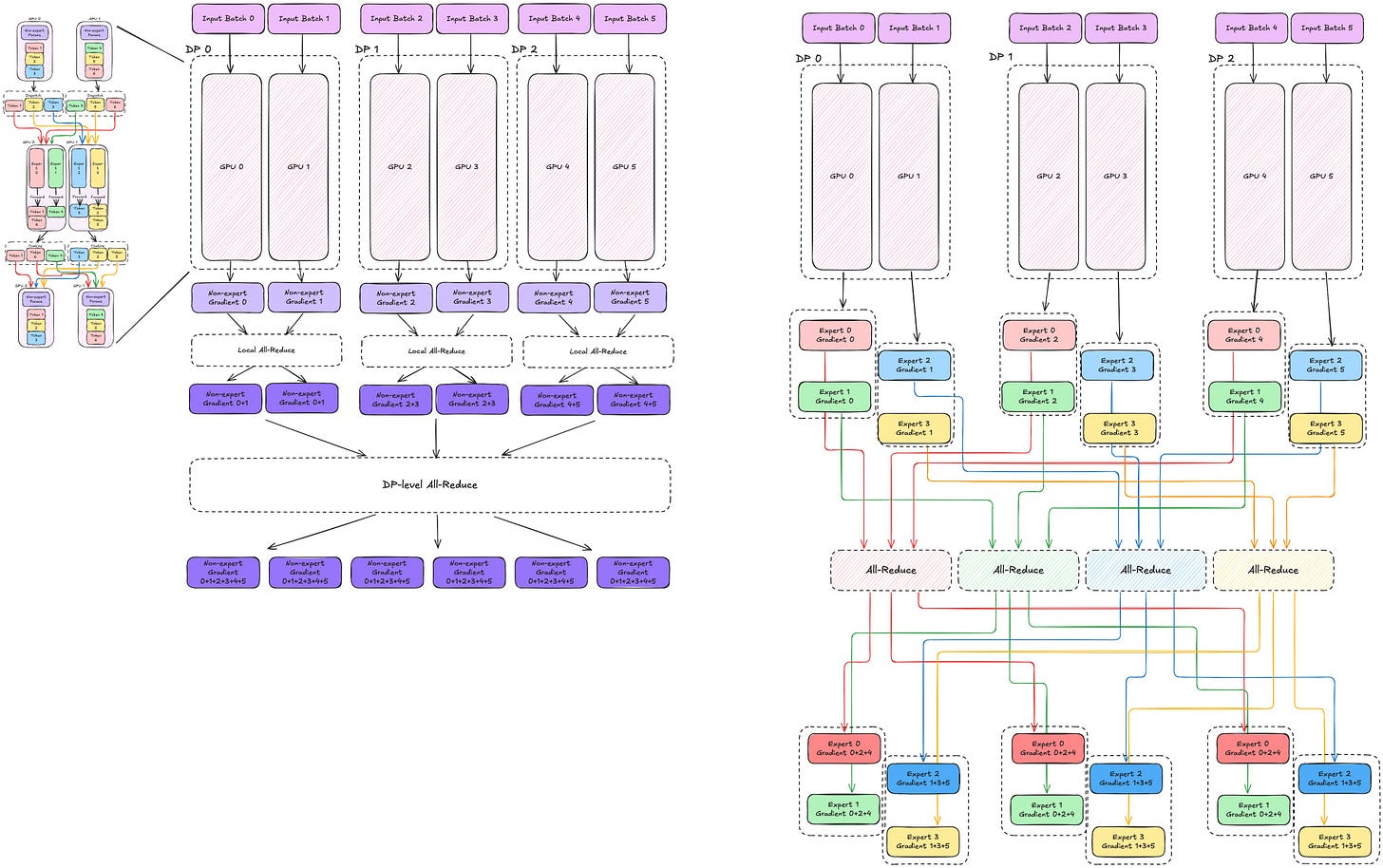

Gradient Synchronization with Data Parallelism (DP) and Expert Parallelism

Think of Data Parallelism (DP) and Expert Parallelism (EP) as two different ways to share work across GPUs.

In DP, every GPU keeps a full copy of the model. Each GPU trains on different input batches, and then they share and average their gradients so all copies stay synced.

In EP, the large “expert” layers are split across GPUs. Each GPU stores only a few experts, and tokens are routed to whichever GPU holds their chosen experts. In practice, teams combine the two and run DP x EP together.

A gate picks top k experts for each token.

Tokens move from their home GPU to the GPUs that store those experts, which is an All-to-All exchange where every GPU may send to every other GPU.

After compute on the experts, tokens return to their home GPU in a reverse All-to-All.

The same routes get used in backprop when gradients flow.
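The routing steps above can be sketched as a toy simulation. This is a hedged illustration, not the SemiAnalysis code or any framework's API: the function names, the contiguous expert-to-GPU mapping, and the gate scores are all assumptions. It builds the All-to-All send matrix for dispatch and shows that the combine step is the same matrix transposed.

```python
def top_k_experts(gate_scores, k):
    """Indices of the k highest-scoring experts for one token."""
    ranked = sorted(range(len(gate_scores)), key=lambda e: gate_scores[e], reverse=True)
    return ranked[:k]

def dispatch_counts(token_home_gpu, token_gate_scores, k, experts_per_gpu, num_gpus):
    """send[src][dst] = number of token copies GPU src sends to GPU dst."""
    send = [[0] * num_gpus for _ in range(num_gpus)]
    for home, scores in zip(token_home_gpu, token_gate_scores):
        for expert in top_k_experts(scores, k):
            dst = expert // experts_per_gpu  # assumed: experts laid out contiguously
            send[home][dst] += 1
    return send

# Toy setup: 2 GPUs, 4 experts (experts 0-1 on GPU 0, experts 2-3 on GPU 1), top-2.
home = [0, 0, 1]
scores = [
    [0.70, 0.10, 0.15, 0.05],  # token 0 picks experts 0 and 2
    [0.10, 0.60, 0.20, 0.10],  # token 1 picks experts 1 and 2
    [0.40, 0.10, 0.30, 0.20],  # token 2 picks experts 0 and 2
]
send = dispatch_counts(home, scores, k=2, experts_per_gpu=2, num_gpus=2)

# The reverse All-to-All (combine) returns results along the same routes,
# so its send matrix is the transpose of the dispatch matrix.
combine = [list(col) for col in zip(*send)]
print("dispatch:", send)
print("combine: ", combine)
```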

For expert parts, only the data parallel replicas that host the same expert need to match, so the sync is scoped to that expert group.

For non expert parts like attention and embeddings, parameters are shared everywhere, so the All-Reduce spans all GPUs and this is usually the heaviest sync.

Hierarchical All-Reduce cuts time by reducing inside each node first, then across nodes, then broadcasting the result back inside each node.
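A small simulation of those three phases, using plain lists in place of GPU buffers, may help. It is a sketch of the data flow only (a sum; data-parallel training would typically also divide by the replica count) and does not model real collective-communication libraries. The function name and data layout are assumptions.

```python
def hierarchical_all_reduce(grads_by_node):
    """grads_by_node[n][g] is the gradient vector held by GPU g of node n."""
    # Phase 1: reduce inside each node over the fast local links.
    node_sums = [[sum(vals) for vals in zip(*node)] for node in grads_by_node]
    # Phase 2: reduce across nodes; only one vector per node crosses the slow network.
    total = [sum(vals) for vals in zip(*node_sums)]
    # Phase 3: broadcast the final result to every GPU inside each node.
    return [[list(total) for _ in node] for node in grads_by_node]

# 2 nodes x 2 GPUs, each GPU holding a 2-element gradient.
grads = [
    [[1.0, 2.0], [3.0, 4.0]],  # node 0
    [[5.0, 6.0], [7.0, 8.0]],  # node 1
]
result = hierarchical_all_reduce(grads)
print(result[0][0])
```

The point of the hierarchy is visible in phase 2: the cross-node step moves one vector per node rather than one per GPU.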

The All-to-All traffic grows with tokens, hidden size, and top k, so slow inter node links hurt scale.
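A back-of-the-envelope estimate shows why. The helper below is a rough upper bound that assumes every routed token copy is sent as a full hidden-size activation vector; the function name and the example sizes are assumptions picked for illustration, not figures from the SemiAnalysis piece.

```python
def all_to_all_bytes(tokens, hidden_size, top_k, bytes_per_element=2):
    """Upper-bound payload for one dispatch (or one combine) in a single MoE layer."""
    return tokens * top_k * hidden_size * bytes_per_element

# Assumed example: 8192 tokens, hidden size 4096, top-2 routing, 2-byte activations.
payload = all_to_all_bytes(tokens=8192, hidden_size=4096, top_k=2)
print(payload / 2**20, "MiB per dispatch")

# Doubling top_k doubles the payload; so does doubling tokens or hidden size.
print(all_to_all_bytes(8192, 4096, top_k=4) / payload)
```

This cost is paid for dispatch and again for combine, in every MoE layer, in both the forward and backward pass.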

Node limited routing tries to keep tokens inside a node, which avoids slow links but can waste some expert capacity and needs extra load balancing.

A large scale up domain like GB200 NVL72 gives uniform fast links across 72 GPUs, so dispatch and combine stay on local NVLink instead of crossing a slower network.

A good layout is to keep Expert Parallelism inside one NVL72 and place Data Parallelism across boxes if needed, so noisy All-to-All stays local while global All-Reduce is the only cross box step.

This layout lines up well with hierarchical All-Reduce since early steps ride NVLink and only the final step uses the cluster network.
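One way to picture that layout is as a rank mapping. The sketch below assumes global ranks are numbered rack by rack, so that each group of 72 consecutive ranks is one NVL72; the function name and numbering scheme are hypothetical.

```python
GPUS_PER_NVL72 = 72  # GPUs in one NVL72 scale-up domain

def placement(global_rank, ep_size=GPUS_PER_NVL72):
    """Map a global rank to (dp_index, ep_index).

    ep_index is the GPU's position inside its rack, where the All-to-All runs;
    dp_index identifies the rack, and gradient All-Reduce runs across racks.
    """
    dp_index, ep_index = divmod(global_rank, ep_size)
    return dp_index, ep_index

def same_ep_group(rank_a, rank_b):
    """True if two ranks exchange tokens over NVLink inside the same rack."""
    return placement(rank_a)[0] == placement(rank_b)[0]

print(placement(0), placement(71), placement(72))
print(same_ep_group(0, 71), same_ep_group(71, 72))
```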

So MoE training is lots of All-to-All for tokens and All-Reduce for gradients, and NVL72 keeps more of that on fast links.

That yields simpler parallel plans, higher hardware use, and fewer stalls in dispatch, combine, and non expert All-Reduce.

That’s a wrap for today, see you all tomorrow.
