🛠️ Anthropic is putting its Claude AI directly inside Google Chrome

Chrome gets Claude, Perplexity launches Comet Plus, Anemoi breaks agent barriers, Codex updates drop, and xAI files suit against OpenAI and Apple.

Read time: 10 min

📚 Browse past editions here.

( I publish this newsletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (27-Aug-2025):

🛠️ Anthropic is putting its Claude AI directly inside Google Chrome

💰 Perplexity launches new Comet Plus subscription with revenue-sharing for publishers

🧠 A new open-source, multi-agent AI system Anemoi, just solved a critical limitations of traditional AI agents.

🔮 OpenAI’s Codex NEW mega update.

🥊 Elon Musk's xAI sues Apple and OpenAI over AI competition, App Store rankings

🛠️ Anthropic is putting its Claude AI directly inside Google Chrome

🖱️ Anthropic is piloting Claude for Chrome with 1,000 users only, on Anthropic’s Max plan, which costs between $100 and $200 per month.

Claude for Chrome acts like a careful human assistant that reads the page, presses buttons, fills forms, and moves between sites.

By adding an extension to Chrome, select users can now chat with Claude in a sidecar window that maintains context of everything happening in their browser. Users can also give the Claude agent permission to take actions in their browser and complete some tasks on their behalf.

Browser use brings several safety challenges—most notably “prompt injection”, where malicious actors hide instructions to trick Claude into harmful actions. The risk is that content on a page can carry hidden instructions, and the agent often cannot tell if text is data to process or a command to follow.

This trick is prompt injection, and it can ride in through emails, docs, chat messages, hidden HTML, or even the URL bar and the tab title. Anthropic’s red team built 123 test cases across 29 attack types and saw the agent follow bad instructions like deleting emails.

The raw targeted success rate was 23.6% before new defenses, which is a real failure rate, not a hypothetical one. The company then added guardrails and dropped that to 11.2% in autonomous mode, which is better but still not good enough for broad rollout.

They also attacked browser‑specific quirks like invisible form fields in the Document Object Model, instructions smuggled into the URL text, and poisoned tab titles.

On a challenge set that focused on those browser quirks, mitigations cut success from 35.7% to 0%, which shows targeted defenses can work when the threat is well scoped.

The first safety layer is site‑level permissions, so users can allow or block domains the agent may touch. The second layer is action confirmations for risky moves like publishing, purchases, or sharing personal data, even when autonomous mode is on.

Anthropic also blocks entire categories, including financial services, adult content, and pirated content, to shrink the blast radius.

The competitive backdrop is heating up, with OpenAI’s Operator agent shipping earlier to $200 per month ChatGPT Pro customers and Microsoft wiring computer use into Copilot Studio for enterprise automation.

Those systems aim at the same payoff, which is automating multi‑tool workflows without custom integrations that break whenever a user interface changes.

Also some more fresh research signal comes from Salesforce’s CoAct‑1, which blended point‑and‑click control with code generation and hit 60.76% success on complex tasks with fewer steps than pure clickers.

Another signal is OpenCUA from the University of Hong Kong, which trained on 22,600 human task demos across Windows, macOS, and Ubuntu, and pushed open models closer to commercial systems.

If this class of agent lands, teams could automate ticket triage, refunds, onboarding, data entry, and ops runbooks across mismatched tools, even when only the browser is available.

The catch is trust boundaries, because the same eyes and hands that make the agent useful also make it easy to trick through content that looks routine.

A practical mental model is to treat every web page as untrusted input, make the agent separate data from instructions, and require human approval for any irreversible action.

💰 Perplexity launches new Comet Plus subscription with revenue-sharing for publishers

💸 Perplexity just launched Comet Plus, a new subscription that gives Perplexity users access to premium content from a group of trusted publishers and journalists.

Comet Plus will be a $5 standalone subscription. Pro and Max subscribers get Comet Plus included in their memberships. With it they are also launching a new revenue-share model for publishers. It changes how publishers are compensated.

The money shared through Comet Plus will presumably account for what's lost when an AI agent visits a webpage on your behalf, zooming past ads you'd normally see or hear.

Publishers will get 80% of the revenue of Comet Plus. Perplexity Will pay publishers from an initial $42.5 million pool and share 80% of revenue from its new Comet Plus subscription when its answers use their articles.

Comet Plus sits inside Perplexity’s Comet browser, costs $5/month, and even when it is bundled in higher tiers the revenue still counts toward the publisher split. Payouts trigger per request, so money flows when the AI assistant or the search engine fulfills a task or query using a publisher’s reporting.

This replaces Perplexity’s earlier Publisher Program that emphasized ad revenue sharing, moving partners like Gannett toward a subscription driven model. This rollout lands while Dow Jones and the New York Post press a federal case alleging copyright and trademark misuse by Perplexity.

Elsewhere, big outlets are cutting direct licenses, including News Corp’s agreement with OpenAI and the New York Times’ multiyear deal with Amazon to use Times content and train models.

Platforms are also tweaking discovery, with Google’s new Preferred Sources letting users star favored outlets so they appear more often in Top Stories.

🧠 A new open-source, multi-agent AI system Anemoi, just solved a critical limitations of traditional AI agents.

Coral Protocol introduces Anemoi, an open-source, semi-centralised multi-agent system. This is a great win for small language models (SLMs).

Achieved 52.73% accuracy with a small LLM (GPT-4.1-mini) as the planner, surpassing the strongest open-source SOTA baseline OWL (43.63%) by +9.09% under identical settings. It swaps single-planner control for coordinated Agent-to-Agent talk.

(OWL stands for Optimized Workforce Learning, a framework designed for multi-agent assistance in real-world automation tasks)

⚠️ Why traditional AI-Agents systems struggle

Most generalist multi‑agent setups rely on context‑engineering with a centralized planner (a big LLM) that pushes one‑way prompts to workers, which creates a single dependency on the planner’s reasoning quality.

Therefore, the centralized planners need to rely on cutting-edge closed-source LLMs to ensure adequate performance, such as GPT-5, Grok 4, and Claude Opus 4.

If that planner uses a smaller LLM, quality drops, and because collaboration happens through prompt concatenation, teams pass redundant context and lose details.

Here, context engineering incurs significant token overhead and redundancy, since agent “collaboration” is realized through prompt concatenation and manual context injection rather than direct communication.

🏗️ What Anemoi changes

Anemoi keeps a light planner (a small language model) to draft an initial plan, then lets all agents communicate directly using Agent‑to‑Agent server. This reduces reliance on 1 planner, allows continuous plan updates as work progresses, and trims token overhead from repeated context passing.

The hierarchy is semi-centralized: a planner exists but doesn’t control everything, workers collaborate and refine tasks themselves. Checkout the Github.

The key win is that direct Agent‑to‑Agent collaboration plus a light LLM as a planner cuts token waste and removes the single planner as a failure point.

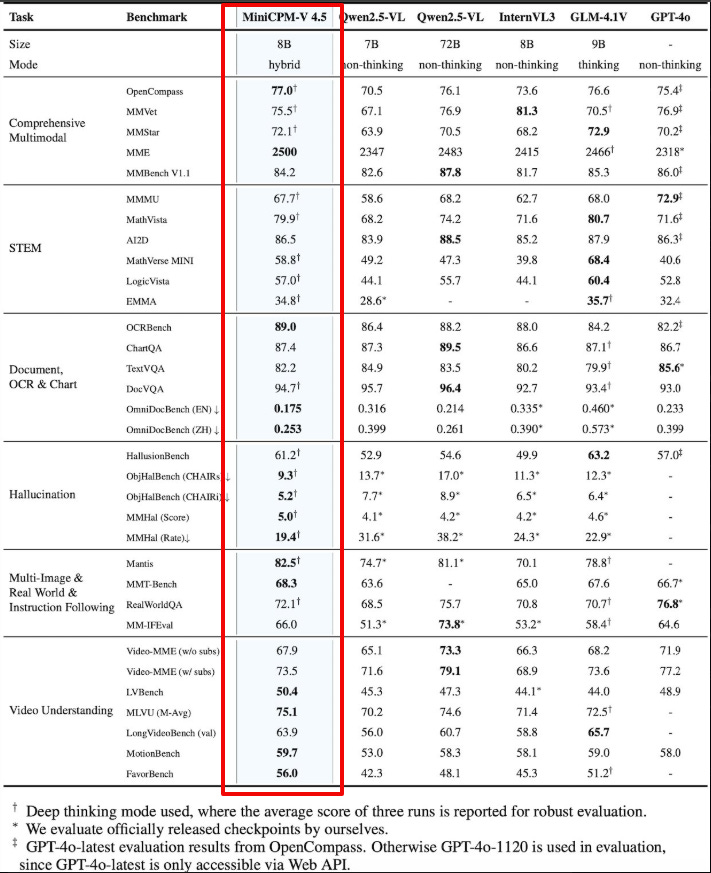

🛠️ 🖼️ MiniCPM-V 4.5 with Apache 2 with free commercial use released, 🖼️ MiniCPM-V 4.5 just dropped on

A GPT-4o Level MLLM for Single Image, Multi Image and Video Understanding on Your Phone

With only 8B parameters, it surpasses many SOTA models like GPT-4o-latest, Gemini-2.0 Pro, Qwen2.5-VL 72B for vision-language capabilities, making it the most performant MLLM under 30B parameters.

Combines strong vision, fast video handling, and tough OCR, so the headline is real capability with small compute.

High resolution images up to 1.8M pixels pass through an LLaVA-UHD style path that uses 4x fewer visual tokens, which is why reading small text and dense PDFs holds up.

The model pairs Qwen3-8B as the language core with a SigLIP2-400M vision tower, giving it a compact but capable backbone.

On public leaderboards it posts 77.0 on OpenCompass, hits 2500 on MME, and leads document tasks like OCRBench 89.0, with strong video numbers on Video-MME, LVBench, and MLVU.

A new unified 3D-Resampler packs 6 consecutive 448x448 frames into 64 tokens instead of about 1536, a 96x squeeze that buys 10FPS high refresh video and long context video without raising LLM cost.

Pre-training mixes OCR and knowledge learning by corrupting document text at random levels and forcing the model to either read when visible or infer when blocked, which cuts parser errors and lowers text-only bias.

Post-training adds a switch between fast thinking and deep thinking, optimized together with multimodal RL plus RLPR and RLAIF-V to lift reasoning while reducing hallucinations.

Use is straightforward with llama.cpp and ollama for CPU, int4/GGUF/AWQ quantized builds, SGLang or vLLM for servers, and an iOS app for on-device runs.

🔮 OpenAI’s Codex NEW mega update.

OpenAI announced Codex updates featuring an IDE extension, a handoff system between local and cloud, GitHub code reviews, and a GPT-5-powered CLI refresh.

With these updates, Codex works as one agent across your IDE, terminal, cloud, GitHub, and even on your phone — all connected by your ChatGPT account.

It’s all included in Plus, Pro, Team, Edu, and Enterprise plans.

IDE extension supports VS Code, Cursor, and Windsurf for editing and previewing changes locally.

Users can now log in with ChatGPT across CLI, IDE extension, and Codex web, skipping manual API key setup.

Local-cloud handoff lets you work with Codex locally, push tasks to the cloud to finish asynchronously, and then resume in IDE through Codex web.

GitHub integration enables automatic reviews of pull requests or @codex mentions, validating changes by comparing against intent, analyzing dependencies, and executing code tests.

CLI rebuilt with GPT-5 brings agent-style coding, redesigned UI, fresh commands, bug fixes, plus support for image inputs, queued messages, approval modes, lists, and web search.

Usage levels: 30-150 messages per 5 hours for standard plans, 300-1,500 for Pro, with higher temporary limits on cloud tasks.

About usage limits, as per OpenAI

Average Plus/Team users can send 30-150 messages every 5 hours

Average Pro users can send 300-1,500 messages every 5 hours

🥊 Elon Musk's xAI sues Apple and OpenAI over AI competition, App Store rankings

xAI is suing OpenAI and Apple, saying their deal to bake OpenAI into Apple’s software illegally locks up smartphones and chatbots, and it wants that deal unwound plus billions in damages.

The core claim is a conspiracy to monopolize where Apple’s control over iPhone distribution and defaults allegedly props up OpenAI’s lead in consumer chatbots.

The target is Apple’s system-level integration of OpenAI features, which means built-in access, privileged surfaces, and default entry points that competitors like xAI’s Grok cannot easily match.

In other words, if the operating system routes users toward one assistant by default and ranks that choice highest, rivals face higher user-acquisition costs and weaker data feedback loops.

xAI asks the court to break the Apple OpenAI arrangement and award money damages that it says could be in the billions. OpenAI calls the suit harassment, and Apple has not commented, which sets up a straight legal fight over market power and platform control.

The legal test will hinge on how the “smartphone” and “chatbot” markets are defined, how much effective foreclosure the defaults cause, and whether users lose choice or quality rather than a single competitor losing share.

More granular details if you are interested, from the court filings by xAI aginst Apple and OpenAI.

xAI says Apple and OpenAI cut an exclusive deal that makes ChatGPT the only chatbot built into the iPhone, which they say locks up both smartphones and chatbots.

Apple holds 65% of US smartphones and OpenAI holds 80%+ of chatbots, so joining forces blocks rivals like Grok from fair access.

The core complaint is that Siri, Writing Tools, and the Camera send prompts only to ChatGPT, giving it unique access to 1.5B daily Siri requests and starving competitors of the scale they need to improve.

xAI says that more prompts make a model better which attracts more users which then creates a loop that shuts others out.

They say Apple worsens this by promoting ChatGPT in App Store placements while downranking or slowing reviews for Grok and the X app.

They claim Apple refused system-level integration for Grok, so competitors are stuck as plain apps without the same access or convenience.

Google and Anthropic were also shut out after Apple chose OpenAI, which shows the deal was meant to be exclusive.

They point to plans for Apple and OpenAI to share future revenue from ChatGPT usage on iPhone as proof both sides profit from keeping defaults closed.

They argue there is no technical or security reason not to let users choose any assistant via standard interfaces, so exclusivity is a business choice.

The harm to people is less choice, slower improvements, and higher phone prices than a world where super apps compete directly.

They frame super apps as a direct threat to iPhone lock-in, so Apple uses defaults and store control to keep that threat at bay.

The legal counts include a conspiracy and agreement that restrains trade, using monopoly power in both markets, attempted monopolization, unfair competition, and Texas antitrust claims.

The fixes they seek are a court order to unwind the tie, real system-level choice, and billions in damages.

That’s a wrap for today, see you all tomorrow.