🧠 Anthropic released Agent Skills, a new folder-based way to give agents concrete procedures and tools.

Anthropic ships Agent Skills, Google speeds up cancer gene discovery, public trust in AI stays low, and Coral NPU brings open-source edge AI to developers.

Read time: 8 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (18-Oct-2025):

🧠 Anthropic released Agent Skills, a new folder-based way to give agents concrete procedures and tools.

🧬 Google AI tool pinpoints genetic drivers of cancer - speeds up the analysis by 10x.

😨 Global polling shows most people feel wary about daily AI, not excited, and the split varies a lot by country.

🛠️ Google just unveiled Coral NPU, an open-source full-stack edge AI platform.

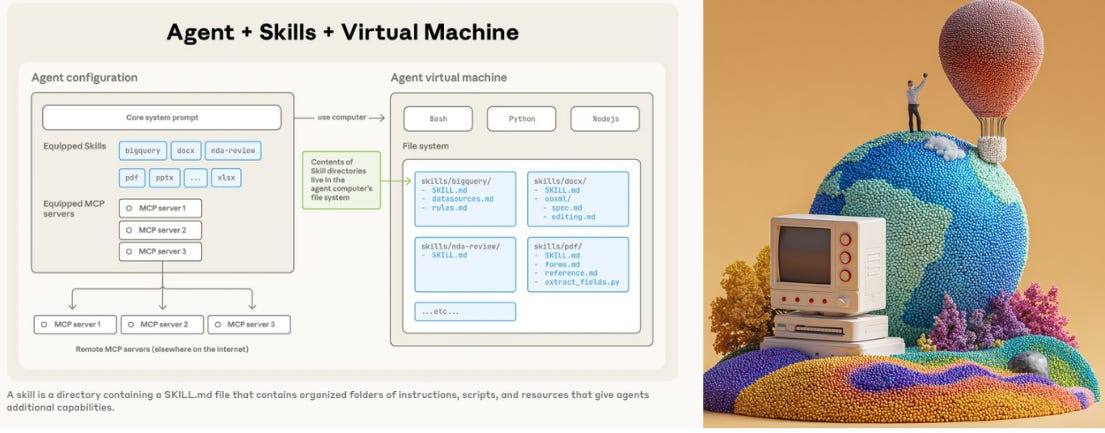

🧠 Anthropic is shipping Agent Skills, a new folder-based way to give agents concrete procedures and tools.

This system enables Claude to become a specialist in virtually any domain while maintaining the speed and efficiency that users expect. The core idea is progressive disclosure: agents load only what they need, so skills can grow without hard limits.

Anthropic is positioning Skills as distinct from competing offerings like OpenAI’s Custom GPTs and Microsoft’s Copilot Studio, though the features address similar enterprise needs around AI customization and consistency.

A skill is a directory with SKILL.md, plus optional scripts and resources the agent can browse. SKILL.md starts with YAML frontmatter containing name and description, and that metadata sits in the system prompt to guide triggering.
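To make the SKILL.md layout concrete, here is a minimal sketch of splitting a skill file into its frontmatter metadata and its body. The example file contents, the `split_frontmatter` helper, and the `prompt_snippet` format are all assumptions for illustration, not Anthropic's loader. The parser handles only flat `key: value` pairs, which covers name and description.

```python
from typing import Dict, Tuple

# Hypothetical SKILL.md contents; the name and description values are made up.
EXAMPLE_SKILL_MD = """---
name: pdf
description: Fill in and extract fields from PDF forms.
---
# PDF skill

See forms.md for form-filling steps.
"""


def split_frontmatter(text: str) -> Tuple[Dict[str, str], str]:
    """Split a SKILL.md string into (metadata, body).

    Handles only flat `key: value` pairs; a real loader would use a
    proper YAML parser.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("SKILL.md must start with a '---' frontmatter line")
    # Find the line that closes the frontmatter block.
    end = None
    for i in range(1, len(lines)):
        if lines[i].strip() == "---":
            end = i
            break
    if end is None:
        raise ValueError("frontmatter is missing its closing '---' line")
    metadata: Dict[str, str] = {}
    for line in lines[1:end]:
        if not line.strip():
            continue
        key, _, value = line.partition(":")
        metadata[key.strip()] = value.strip()
    body = "\n".join(lines[end + 1:]).strip()
    return metadata, body


metadata, body = split_frontmatter(EXAMPLE_SKILL_MD)
# Only this short line would sit in the system prompt until the skill triggers.
prompt_snippet = f"{metadata['name']}: {metadata['description']}"
```

The point of the split is that `prompt_snippet` is cheap to keep in context for every installed skill, while `body` is read only when the skill is actually needed.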

When relevant, the agent reads the SKILL.md body, then follows links to extra files like reference.md or forms.md. Agents also run bundled code via Bash or Python instead of generating it, so context stays light and capability expands.

In the PDF skill, a Python script extracts form fields, giving deterministic and repeatable form filling. A typical flow: the metadata sits in the prompt, the agent reads SKILL.md, optionally reads a linked file, then executes the task with those instructions.
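The staged flow described here can be sketched as a small simulation. Everything in it is hypothetical: the `Skill` class, the sample skills, and especially `pick_skill`, which uses a naive keyword match as a stand-in for the model's own judgment about which skill fits.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Skill:
    name: str
    description: str  # level 1: always present in the system prompt
    body: str         # level 2: read only when the skill triggers
    linked_files: Dict[str, str] = field(default_factory=dict)  # level 3


def system_prompt_snippets(skills: List[Skill]) -> List[str]:
    """Return only the lightweight metadata for every installed skill."""
    return [f"{s.name}: {s.description}" for s in skills]


def pick_skill(request: str, skills: List[Skill]) -> Optional[Skill]:
    """Stand-in for the model's decision: match the skill name in the request."""
    lowered = request.lower()
    for skill in skills:
        if skill.name in lowered:
            return skill
    return None


def build_context(request: str, skills: List[Skill]) -> List[str]:
    """Assemble context in stages, loading deeper levels only on demand."""
    context = system_prompt_snippets(skills)       # step 1: metadata
    skill = pick_skill(request, skills)
    if skill is None:
        return context
    context.append(skill.body)                     # step 2: SKILL.md body
    lowered = request.lower()
    for filename, content in skill.linked_files.items():
        stem = filename.split(".")[0]
        # step 3: follow a link only if the body mentions the file
        # and the request touches its topic
        if filename in skill.body and stem in lowered:
            context.append(content)
    return context


skills = [
    Skill("pdf", "Work with PDF files and forms.",
          "For form filling, read forms.md.",
          {"forms.md": "Step-by-step form filling instructions."}),
    Skill("xlsx", "Work with spreadsheets.", "Spreadsheet instructions."),
]
context = build_context("Please fill out the forms in this PDF", skills)
```

In this run, `context` holds both metadata snippets, the PDF skill body, and the linked forms.md content, while the xlsx skill body never enters the context.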

Good practice is to evaluate gaps first, split big guides into smaller files, name skills clearly, and capture winning patterns. Security matters too: install from trusted sources, audit code and dependencies, and watch for instructions that reach external networks.

Skills work across Claude.ai, Claude Code, the Claude Agent Software Development Kit, and the Claude Developer Platform, and they complement Model Context Protocol servers. Agent Skills run on a smart matching system that figures out when special abilities are needed.

Automatic Detection:

Claude reviews all available skills while handling a task, spots the ones that fit the job, and loads only the essential details. This keeps things fast while tapping into the right expertise.

Smart Loading:

It keeps a tiny footprint by loading just what’s needed. The system activates skills based on the task’s context, runs them efficiently, and blends them smoothly into Claude’s normal workflow.

While other platforms require developers to build custom scaffolding, Skills let anyone — technical or not — create specialized agents by organizing procedural knowledge into files.

The cross-platform portability also sets Skills apart. The same skill works identically across Claude.ai, Claude Code (Anthropic’s AI coding environment), the company’s API, and the Claude Agent SDK for building custom AI agents. Organizations can develop a skill once and deploy it everywhere their teams use Claude, a significant advantage for enterprises seeking consistency.

The feature supports any programming language compatible with the underlying container environment, and Anthropic provides sandboxing for security — though the company acknowledges that allowing AI to execute code requires users to carefully vet which skills they trust.

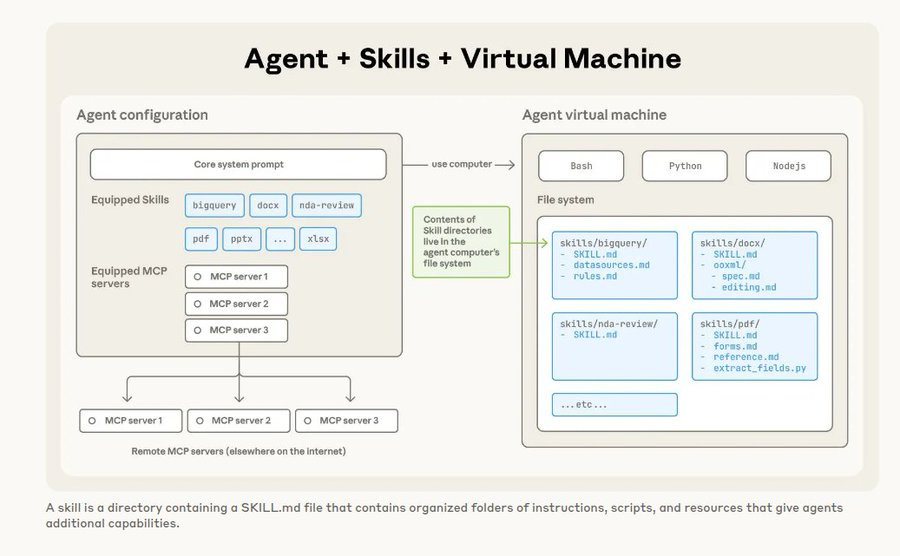

This image explains how Agent Skills work inside the context window of an Anthropic agent.

Each skill adds a short snippet to the agent’s system prompt, so the model knows what skills exist, like handling bigquery, docx, pdf, or xlsx files. When a user asks something, like filling a PDF, the agent scans its system prompt and recognizes that the PDF skill fits the request.

It then “triggers” that skill by reading its SKILL.md file, which contains the instructions and logic for handling PDFs. After reading that file, the agent can follow linked files, such as forms.md, for deeper task instructions or scripts. This step-by-step access keeps the context small, since the agent reads only the parts it needs instead of loading all skills at once.

🧬 Google AI tool pinpoints genetic drivers of cancer - speeds up the analysis by 10x.

Cancer accounts for roughly 16% of deaths globally, and early detection could reduce that number substantially.

DeepSomatic detects cancer-causing mutations with far higher accuracy than existing tools. It converts DNA read alignments into images and uses a convolutional neural network to classify whether each site is normal or tumor-specific, and it can still work without matched normal tissue.

It was trained and tested on the CASTLE dataset, which combines whole-genome data from 6 cancer cell lines across 3 sequencing platforms (Illumina, PacBio, and Oxford Nanopore). In tests, DeepSomatic raised insertion and deletion detection F1 scores from 80% to 90% on Illumina and from below 50% to above 80% on PacBio, a major gain for hard-to-spot variants.
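For readers unfamiliar with the metric, F1 is the harmonic mean of precision and recall, so it drops when a caller either misses real variants or reports false ones. Here is a minimal sketch of the calculation. The counts in the example are invented to land on round numbers and are not taken from the DeepSomatic evaluation.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Compute F1 from variant-calling counts.

    precision = TP / (TP + FP): share of reported variants that are real
    recall    = TP / (TP + FN): share of real variants that were reported
    F1        = harmonic mean of precision and recall
    """
    if true_positives == 0:
        return 0.0
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, chosen only to illustrate an 80% versus 90% F1.
older_tool = f1_score(true_positives=80, false_positives=20, false_negatives=20)
newer_tool = f1_score(true_positives=90, false_positives=10, false_negatives=10)
```

With these made-up counts, moving from 80% to 90% F1 means halving both the missed variants and the false calls, which is why a ten-point gain is a meaningful jump.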

Across experiments it found 329,011 somatic variants covering all tested reference lines and preserved samples. It consistently beat older tools like MuTect2, SomaticSniper, Strelka2, and ClairS. It even identified mutations in cancers it had never seen before, including glioblastoma and pediatric leukemia, and found 10 new mutations in the leukemia sample.

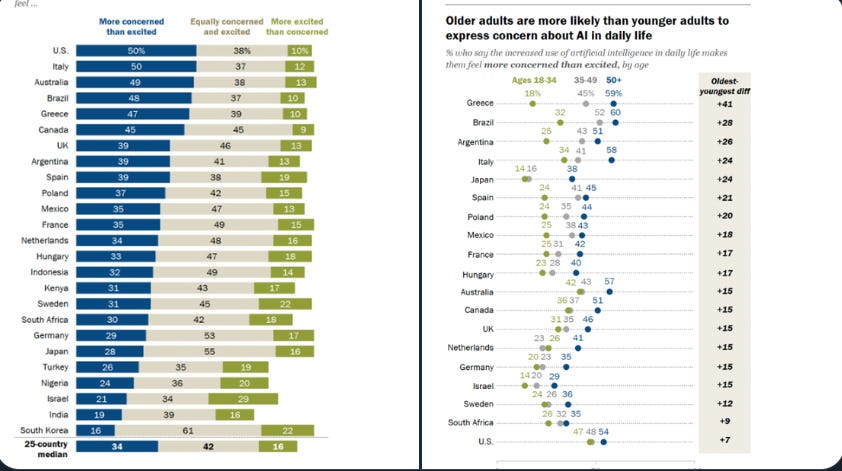

😨 Global polling shows most people feel wary about daily AI, not excited, and the split varies a lot by country.

The U.S. sits at 50% concerned, 38% equally concerned and excited, and 10% excited, so the worry side clearly leads.

South Korea is an outlier on optimism with 22% excited and only 16% concerned, which is the lowest concern on the list.

India’s mix is 19% concerned, 39% equal, 16% excited, which reads as cautious but not fearful.

The broader study also finds the European Union viewed as the most credible regulator at 53%, ahead of the U.S. at 37% and China at 27%, which hints at where global audiences expect guardrails to come from.

Younger adults under 35 report higher awareness and more optimism than those over 50, creating a clear age gap in how people think about living with AI.

🛠️ Google just unveiled Coral NPU, an open-source full-stack edge AI platform.

The goal is to run modern AI models using almost no power, just a few milliwatts.

Think of Coral NPU as Google’s new open standard for running AI directly on small devices like smartwatches, earbuds, or sensors — without needing cloud power.

Most chips today are built around a CPU (central processor), which then adds an accelerator for AI on the side.

Coral NPU flips that usual chip design order.

Instead of centering the chip around a general CPU, it puts a matrix engine (the hardware that runs neural network math) at the core.

Then it uses a single unified compiler system so developers can easily run models on it.

And it aims for about 512 billion operations per second while using only a few milliwatts of power, which is extremely energy efficient for small AI devices.
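To put that target in more familiar units, here is a rough back-of-the-envelope calculation. The 512 billion operations per second comes from the figure above. The power values are assumptions, since "a few milliwatts" is not a precise number, and `tops_per_watt` is a hypothetical helper.

```python
OPS_PER_SECOND = 512e9  # performance target quoted above


def tops_per_watt(ops_per_second: float, power_milliwatts: float) -> float:
    """Convert throughput and power draw into tera-operations per second per watt."""
    watts = power_milliwatts / 1000.0
    return ops_per_second / watts / 1e12


# Assumed power draws in milliwatts; the source only says "a few".
for milliwatts in (2, 5, 10):
    efficiency = tops_per_watt(OPS_PER_SECOND, milliwatts)
    print(f"{milliwatts} mW -> {efficiency:.1f} TOPS/W")
```

Under these assumptions the target works out to roughly 50 to 250 TOPS per watt, depending on where "a few milliwatts" actually lands.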

The release tackles 3 edge blockers: scarce compute for modern models, fragmented toolchains, and weak on-device privacy.

The neural processing unit combines a small RISC-V control core, an RVV 1.0 vector unit, and a quantized outer-product matrix unit that arrives later this year.
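The term "quantized outer product" may be unfamiliar, so here is a minimal sketch of the general idea: weights and activations are stored as small integers, and a matrix multiply is built up by accumulating one outer product per step. This illustrates the math only, not Coral NPU's actual datapath. The function names and the int8 range are assumptions.

```python
from typing import List

Matrix = List[List[int]]


def quantize_int8(values: List[float], scale: float) -> List[int]:
    """Map floats to integers in [-128, 127] using a single scale factor."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def outer_product_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Compute A @ B as a sum of outer products.

    For each inner index k, the outer product of column k of A and
    row k of B is added into a wide integer accumulator.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(r) != inner for r in a):
        raise ValueError("inner dimensions of A and B do not match")
    acc = [[0] * cols for _ in range(rows)]
    for k in range(inner):
        column = [a[i][k] for i in range(rows)]
        row = b[k]
        for i in range(rows):
            for j in range(cols):
                acc[i][j] += column[i] * row[j]
    return acc


product = outer_product_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

Each outer-product step updates every cell of the accumulator at once, which is the kind of regular, parallel work that suits a dedicated matrix engine.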

It’s also open source and uses a unified compiler stack (MLIR, IREE, TensorFlow Lite Micro) so developers can run the same model on any Coral-based chip. No more rewriting code for every brand’s hardware.

Synaptics is already building chips with this design, proving it’s not just theory.

Overall, the big deal is that Google is trying to create a shared open hardware and software standard for low-power AI, something the industry has never had. If it works, it could make tiny, private, battery-efficient AI a reality everywhere, from wearables to home devices.

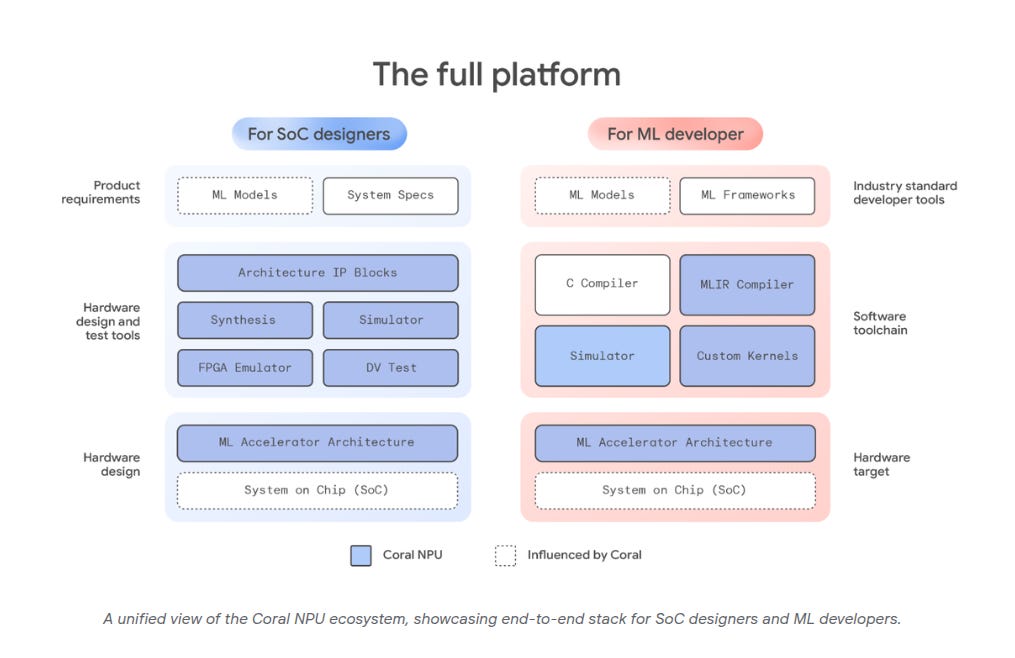

This diagram shows how Coral NPU connects both sides of the AI hardware world, chip designers and machine learning developers, into one shared system.

For chip (SoC) designers, it shows the hardware path. They start from the model and system requirements, then use Coral’s building blocks, such as architecture IP, synthesis tools, simulators, and FPGA emulators, and finally build the AI accelerator hardware into a system on chip.

For machine learning developers, it shows the software path. They begin from their AI models and frameworks, then compile them through C or MLIR compilers, test them in simulators, and optimize with custom kernels to run directly on the Coral NPU hardware.

That’s a wrap for today, see you all tomorrow.
