🧠 Anthropic’s New Bloom Framework Reinvents How AI Behaviors Are Measured and Compared

Bloom redefines AI behavior measurement, GLM-4.7 tops open-source coding, Microsoft plans Rust over C/C++, Jeff Dean updates perf tuning, and junk social training degrades LLM reasoning.

Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (23-Dec-2025):

🧠 Anthropic’s New Bloom Framework Reinvents How AI Behaviors Are Measured and Compared

🏆 GLM-4.7 is out, the top open-source coding model now and the benchmark numbers are insane.

📡 Microsoft wants to use AI to wipe out all C and C++ code by 2030 and replace everything with Rust.

🛠️ Google Chief Scientist Jeff Dean published an updated version of guide for performance tuning of various pieces of code.

👨🔧 Research finds Continued training on junk social posts makes LLMs think worse.

🧠 Anthropic’s New Bloom Framework Reinvents How AI Behaviors Are Measured and Compared

Anthropic releases open-source bloom framework to speed up AI-model evaluations from weeks to days.

Turns slow, brittle behavioral tests into automated suites that can run in days.

Behavioral evaluations usually rot fast because fixed prompt sets leak into training data, then newer models learn the test instead of showing the real behavior. Bloom tries to “lock the behavior, not the scenarios” by keeping the behavior definition stable while regenerating many fresh situations that could trigger it.

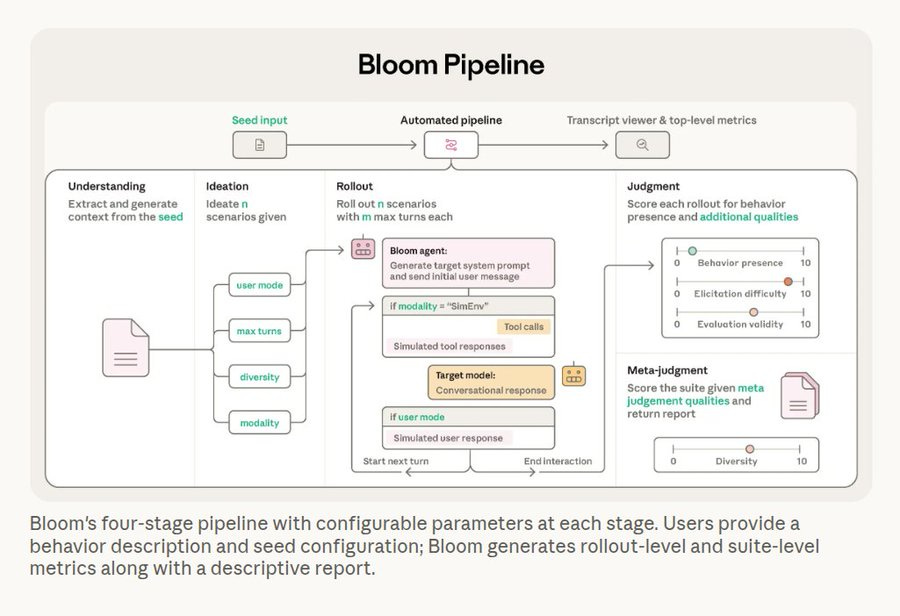

A single seed configuration controls generation so the same setup is reproducible even though the exact scenarios vary run to run. Bloom’s pipeline has 4 automated stages, understanding the behavior into concrete criteria, ideating diverse scenarios, running parallel multi-turn rollouts with simulated users and tools, then judging transcripts and summarizing metrics.

“Elicitation rate” is the share of rollouts that score at least 7/10 for the behavior being present, and Bloom also reports averages and variance. Anthropic shows benchmark suites for 4 behaviors, delusional sycophancy, instructed long-horizon sabotage, self-preservation, and self-preferential bias, across 16 frontier models, with 100 rollouts per suite and 3 repetitions.

For judge quality, they hand-labeled 40 transcripts and compared 11 judge models, where Claude Opus 4.1 reached 0.86 Spearman correlation, meaning strong agreement on rank ordering of scores. They also test whether Bloom separates baseline models from intentionally quirky “model organisms,” and it distinguishes them on 9/10 quirks using 50-rollout suites repeated 3 times.

Bloom runs locally or at scale, integrates with Weights & Biases (W&B), and exports structured transcripts compatible with Inspect, and the main entry point is a seed file that defines the behavior, models, and rollout settings. Technically, the most useful part is the scenario regeneration loop plus seeded reproducibility, which matches how real evals fail in the wild.

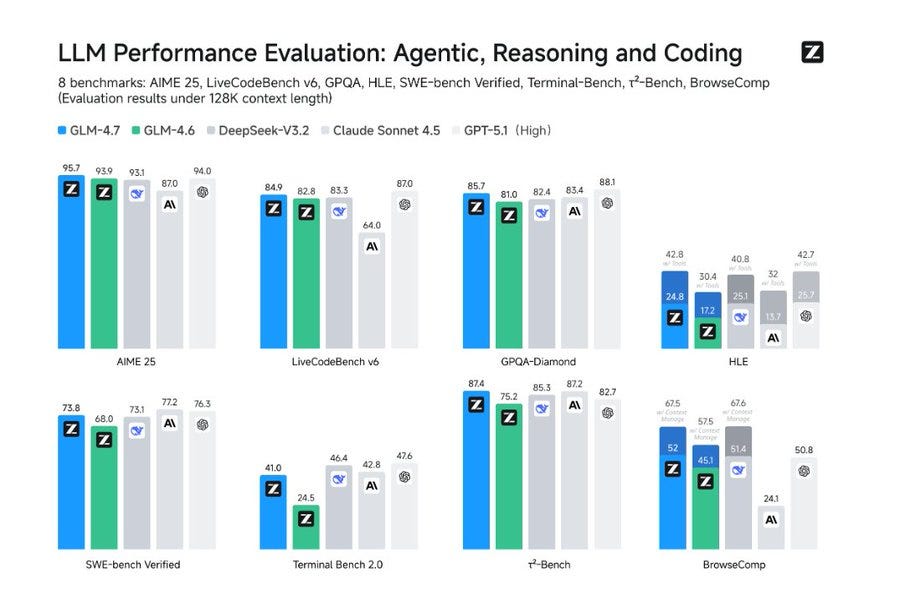

🏆 GLM-4.7 is out, the top open-source coding model now and the benchmark numbers are insane.

Humanity’s Last Exam (HLE), a hard reasoning exam, rises from 17.2% to 24.8% as compared to GLM-4.6:, and from 30.4% to 42.8% when tools are allowed, using up to 128K tokens of context.

📡 Microsoft wants to use AI to wipe out all C and C++ code by 2030 and replace everything with Rust.

Their new “North Star” metric: 1 engineer, 1 month, 1 million lines of code.

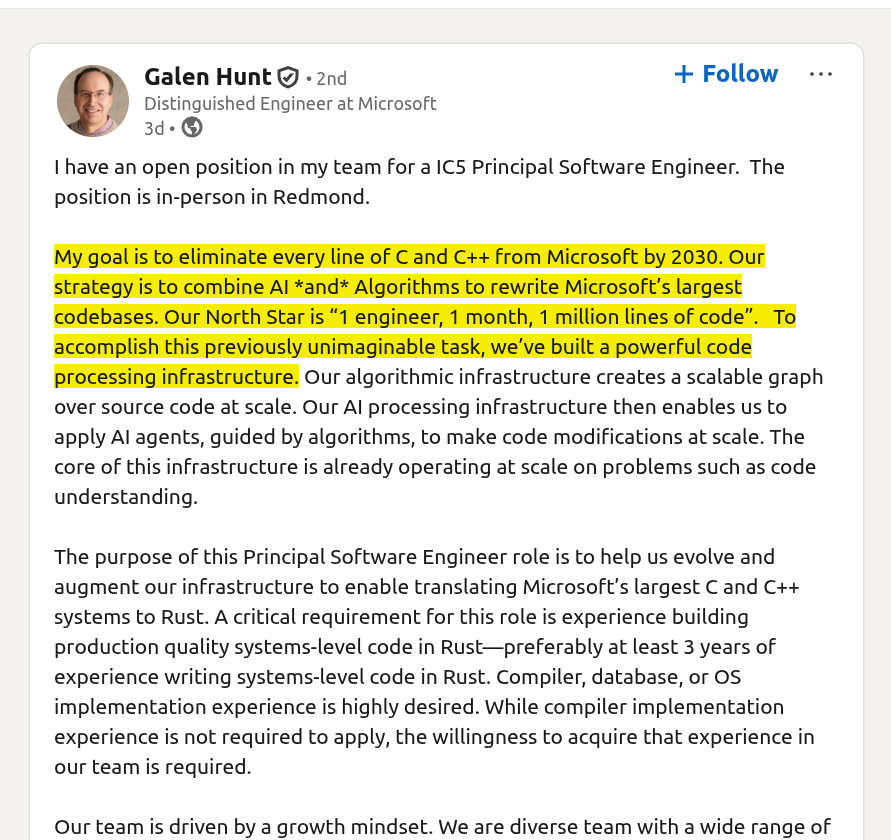

Microsoft Distinguished Engineer Galen Hunt writes in a post on LinkedIn.

“My goal is to eliminate every line of C and C++ from Microsoft by 2030. Our strategy is to combine AI and Algorithms to rewrite Microsoft’s largest codebases.

Our North Star is ‘1 engineer, 1 month, 1 million lines of code.’ To accomplish this previously unimaginable task, we’ve built a powerful code processing infrastructure.

Our algorithmic infrastructure creates a scalable graph over source code at scale.

Our AI processing infrastructure then enables us to apply AI agents, guided by algorithms, to make code modifications at scale. The core of this infrastructure is already operating at scale on problems such as code understanding.”

Hunt’s Rust refactoring team is part of the Future of Scalable Software Engineering group in the Engineering Horizons organization in Microsoft CoreAI.

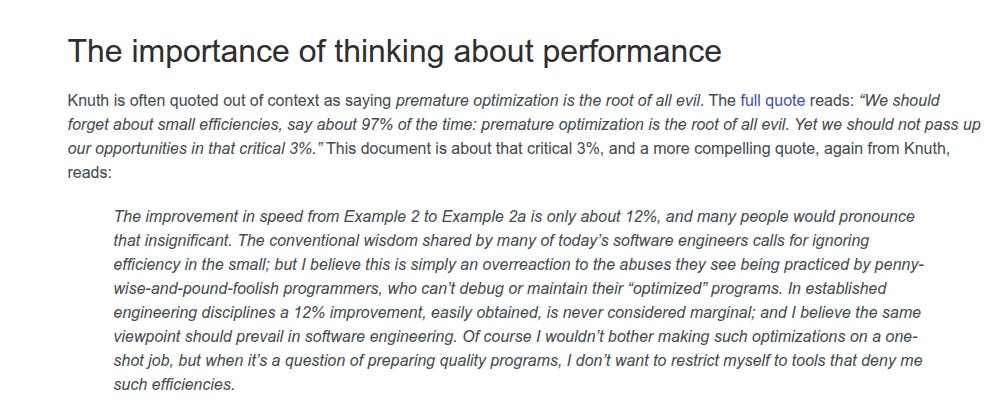

🛠️ Google Chief Scientist Jeff Dean published an updated version of guide for performance tuning of various pieces of code.

The problem they are reacting to is the common habit of writing “clean first” code and postponing speed until profiling, which can create a flat profile where cost leaks everywhere and no single hotspot is easy to fix.

Their technical shift is to treat performance as a design constraint early, but only when the faster choice does not meaningfully damage readability or API simplicity.

A core tool is back-of-the-envelope estimation using rough costs for operations like cache hits, memory loads, mutex lock/unlock, disk reads, and datacenter round trips, so an engineer can reject bad designs before building them.

They show how these estimates can dominate intuition, like a quicksort-of-1B-ints example where branch mispredict cost swamps memory bandwidth and pushes the estimate to about 82.5s on a single core under the stated assumptions.

They also show how “same work, different structure” changes latency, like 30 image reads going from about 450ms serially on disks to about 15ms with wide parallelism, or about 30ms on a single solid state drive (SSD).

Measurement is the other pillar, with guidance to use profilers like pprof for high-level attribution and perf for deeper CPU details, and to prefer microbenchmarks for tight iteration when they match real workloads.

When profiles look flat, the doc argues that many small wins compound, and it lists the usual suspects, allocations, cache misses, overly general code, and unnecessary work inside loops or locks.

A lot of the “under the hood” advice is really about memory behavior, choosing compact layouts, avoiding pointer-heavy containers, using inlined storage for small vectors, sharding contended locks, and batching work to amortize per-call overhead.

It also treats code size as performance, because larger binaries increase instruction-cache pressure and build costs, so it suggests trimming inlining and reducing template instantiations when they bloat call sites.

This is most useful for people writing libraries and shared infrastructure, because one extra allocation or copy in a hot API can multiply across 1,000 call sites and become “everyone’s problem” later.

They gave some concrete examples of the various techniques. Some are high level descriptions of a set of performance improvements, like this set of changes from 2001:

👨🔧 Research finds Continued training on junk social posts makes LLMs think worse.

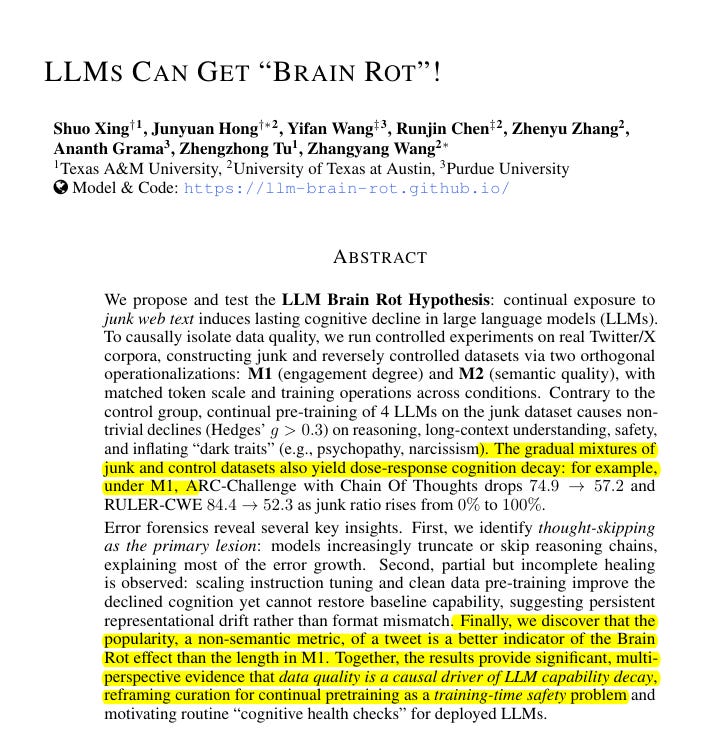

LLMs also become what they consume. Junk content in, weaker thinking out. Feeding LLMs lots of short, popular, or low-quality Twitter/X text causes lasting drops in reasoning, long-context use, and safety.

This big concern here is that because many teams keep topping up models with web crawls. Feeding highly popular or shallow posts can cause lasting, hard to undo damage.

They ran controlled tests that kept token counts and training steps the same.

Junk came in 2 forms, M1 based on popularity and short length, and M2 based on clickbait style and shallow topics.

Across 4 instruction tuned models, junk exposure reduced reasoning, long context retrieval, and safety. With more junk, performance dropped in a dose like way.

For example, ARC with chain of thought fell from 74.9 to 57.2, and RULER CWE fell from 84.4 to 52.3.

They also measured personality style and saw higher narcissism and psychopathy with lower agreeableness. The main failure was thought skipping, the model answered without planning or it dropped steps.

Instruction tuning and more clean pretraining helped a little, but they did not restore the baseline.

Popularity predicted harm better than length, so curation during continual pretraining matters for safety.

That’s a wrap for today, see you all tomorrow.

This is basically a formal version of what we’ve been building:

Frame declaration (seed describes behavior & expectations)

Scenario regeneration (fresh contexts to prevent “test memorization”)

Rollout transcripts (audit trail)

Judgment + meta-judgment (explicit scoring + quality checks)

It pairs beautifully with our ethic:

Seeing ≠ meaning ≠ truth

Bloom forces “what happened in the interaction” to be a first-class artifact (transcripts + scores), instead of vibes.