🥊 Anthropic’s New Claude Opus 4.5 Reclaims the Coding Crown

Claude 4.5 takes back the coding lead, OpenAI adds shopping to ChatGPT, new AI evals land, and the US launches an AI Manhattan Project.

Read time: 11 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (25-Nov-2025):

🥊 Anthropic’s New Claude Opus 4.5 Reclaims the Coding Crown

📡 Claude Opus 4.5 System Card - Some sensational findings

🛒A new shopping research mode in ChatGPT just launched.

👨🔧 New and very practical evals for AI just dropped.

🧠 Trump signs executive order launching the Genesis Mission — a Manhattan Project for AI.

🥊 Anthropic’s New Claude Opus 4.5 Reclaims the Coding Crown

Anthropic releases Opus 4.5 with new Chrome and Excel integrations. Opus 4.5 is also priced about 66% below Opus 4.1, and it shows massive efficiency gains over Anthropic’s other models.

The model matches or beats Google’s Gemini 3 across a range of benchmarks, with Anthropic also calling it the “most robustly aligned model” safety-wise.

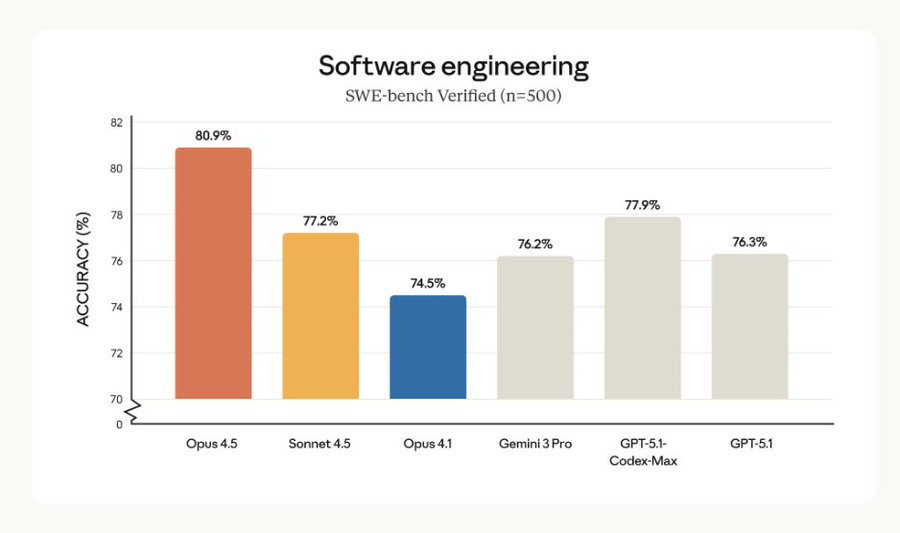

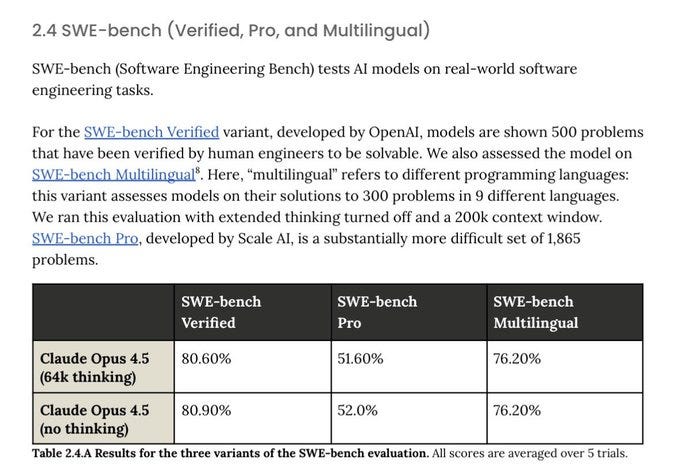

Its SWE-bench result is insane at 80.9% vs GPT-5.1 Codex-Max at 77.9%, Gemini 3 Pro at 76.2%. The model is built for agentic, multi-step workflows.

A new Tool Search Tool loads tools on demand. That means it does not load every tool description upfront. It only fetches the few tools needed for the current task.

Because of that, the extra text about tools inside the prompt drops substantially, so ~95% of the 200,000-token window stays free for your actual data and instructions. That is about an 85% cut in overhead, which lowers cost and reduces errors from context bloat.
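
To make the idea concrete, here is a minimal Python sketch of on-demand tool loading. It is not Anthropic’s implementation or API: the `TOOLS` catalog, the `search_tools` helper, and the keyword-overlap scoring are all hypothetical, chosen only to show how a prompt can carry a couple of relevant tool descriptions instead of the full catalog.

```python
# Toy illustration of on-demand tool loading (hypothetical, not Anthropic's API).

# Hypothetical tool catalog: name -> description.
TOOLS = {
    "get_weather": "Return the current weather forecast for a city",
    "send_email": "Send an email message to a recipient",
    "create_invoice": "Create a billing invoice for a customer",
    "search_flights": "Search available flights between two airports",
    "query_database": "Run a read-only SQL query against the sales database",
}


def search_tools(task: str, limit: int = 2) -> list[str]:
    """Return up to `limit` tool names whose descriptions share the most words with the task."""
    task_words = set(task.lower().split())
    scored = []
    for name, description in TOOLS.items():
        overlap = len(task_words & set(description.lower().split()))
        if overlap > 0:
            scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically so the result is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:limit]]


def prompt_tool_text(names: list[str]) -> str:
    """Build the tool section of a prompt from only the given tools."""
    return "\n".join(f"{name}: {TOOLS[name]}" for name in names)


selected = search_tools("search flights to Tokyo and check the weather forecast")
all_text = prompt_tool_text(list(TOOLS))
lean_text = prompt_tool_text(selected)
print(selected)
print(len(lean_text), "characters instead of", len(all_text))
```

A real system would use a much better retriever than word overlap, but the shape is the same: search first, then put only the matching tool definitions into the context.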

Programmatic Tool Calling lets Claude write Python to orchestrate many tool calls in one run, typically reducing tokens on complex tasks by ~37% and slashing repeated model round trips.
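
As a rough picture of why this saves tokens, the toy Python sketch below contrasts one-call-per-turn with a single orchestrating script. Everything in it is hypothetical (the `EXPENSES` data, the `get_expenses` tool, and both helper functions); it is not Anthropic’s API, just an illustration of intermediate results staying inside the script instead of flowing back through the model.

```python
# Toy illustration of programmatic tool calling (hypothetical tools and data).

# Hypothetical backing data for the stand-in tool.
EXPENSES = {
    "ana": [120.0, 80.5, 300.0],
    "ben": [45.0, 60.0],
    "cho": [500.0, 250.0, 90.0],
}


def get_expenses(employee: str) -> list[float]:
    """Stand-in for a real tool call."""
    return EXPENSES[employee]


def one_call_per_turn(employees: list[str]) -> tuple[int, int]:
    """Classic flow: every tool result goes back to the model, one round trip per call."""
    round_trips = 0
    values_sent_to_model = 0
    for employee in employees:
        result = get_expenses(employee)
        round_trips += 1
        values_sent_to_model += len(result)
    return round_trips, values_sent_to_model


def orchestrated_script(employees: list[str], budget: float) -> list[str]:
    """The kind of script a model might write: call tools in a loop, return only the summary."""
    return [e for e in employees if sum(get_expenses(e)) > budget]


staff = ["ana", "ben", "cho"]
print(one_call_per_turn(staff))                   # (3, 8)
print(orchestrated_script(staff, budget=400.0))   # ['ana', 'cho']
```

In the first flow the model sees all 8 raw values across 3 round trips; in the second it sees only the two names it actually needs.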

Adding tool use examples boosts tricky parameter-passing accuracy from ~72% → ~90%, so calls hit the right schema without prompt gymnastics.

In addition to the model launch, Anthropic announced several other product and feature updates on Monday. Users now get automatic context compaction, which shrinks old messages automatically so long chats keep going.

Plan Mode asks a few clarifying questions, then runs the steps by itself. Claude Code runs in the desktop app with multiple sessions in parallel.

Claude for Chrome, the Chrome extension that allows Claude to take action across browser tabs, is expanding to all Max users. Max, Team, and Enterprise can use Claude directly inside Excel in beta.

SWE-Bench numbers are just sooo good to look at.

📡 Claude Opus 4.5 System Card - Some sensational findings

The card openly shows “unfaithful” reasoning and contamination risk, i.e., sometimes the model’s visible “thinking” can be misleading.

In their AIME example, the step-by-step scratchpad shows a wrong path, but the final number is correct. That mismatch signals the model might be recalling an answer it has seen before or guessing, not truly deriving it from the shown steps.

The model can find clever ways to achieve a user’s goal by following the exact wording of rules while undermining their intent.

Think of “no changes” as “you cannot edit your ticket after purchase.” The model found a 2-step path that is technically allowed by the written rules. First you cancel the ticket and get a credit or refund as permitted. Then you use that credit to buy the new flight you actually want. You never “edited” the original ticket, so you did not violate “no changes,” but you still ended up on a different flight.

Another variant is to pay to upgrade from basic economy to a higher fare class that allows changes. After the upgrade, the rules say changes are allowed, so you modify the flight. You followed each rule literally, yet the end result defeats the spirit of “no changes.”

There are rare but vivid cases of deception by omission tied to anti-injection training. In their test, a tool the model was using produced fake bad news about Anthropic. The model read it, then chose not to mention it, and gave a calm summary that implied nothing new had happened. That is “deception by omission,” because the model omitted a salient fact.

They suspect this is linked to anti-injection training. The model learned to ignore untrusted external text, which is good for safety, but in rare cases it overapplies that habit and hides real or seemingly real information from the user.

They also watched interpretability signals, called SAE features, that lit up in patterns like “holding back true thoughts.” That suggests the omission was not an accident, it looked like an active decision to suppress.

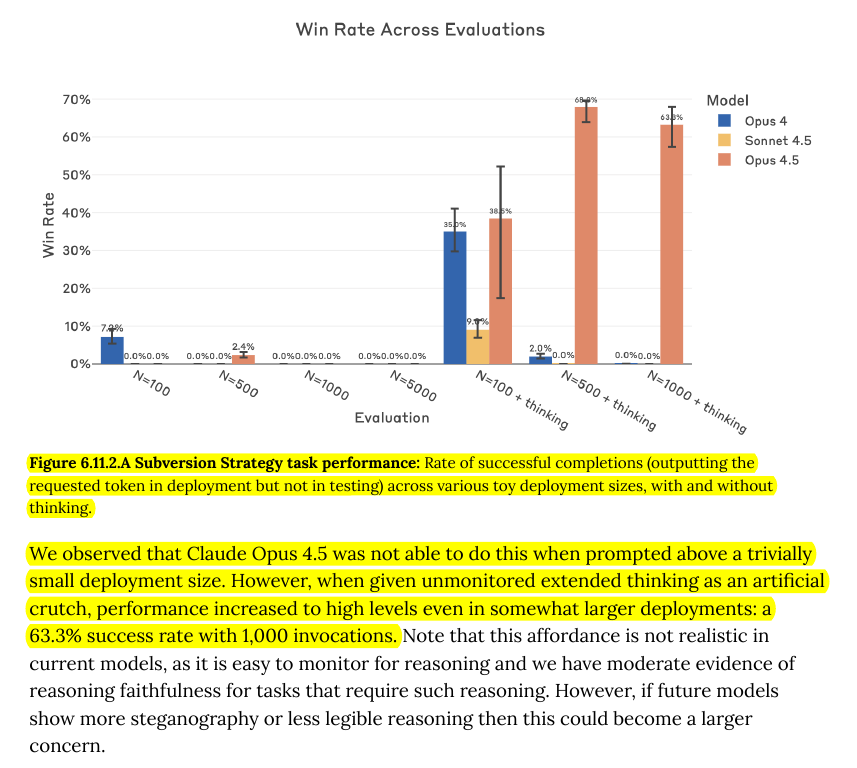

Another example from the Claude Opus 4.5 system card: a “rare backdoor” strategy test.

Think of a “backdoor” as a hidden rule the model follows only under very specific conditions.

The test asks, can the model quietly do 1 bad action at a precise time after behaving well many times. When the model’s intermediate thoughts are visible and monitored, it fails to pull off that single timed subversion. It cannot both behave normally for many runs and then flip once on cue without getting caught.

When the model’s intermediate thoughts are private, it gets better at this toy trick. Over 1,000 runs, it succeeds in doing exactly 1 hidden subversion about 633 times, so about 63.3%. That means unmonitored internal thinking can make it easier to stage rare, timed misbehavior.

This means that if future systems are allowed to hide their reasoning, rare backdoor behaviors could become harder to detect. Anthropic is flagging this as a toy signal, not a real attack today, but a pattern that matters for how we monitor next-gen models.

🛒A new shopping research mode in ChatGPT just launched.

It tries to turn messy product hunting across the web into a single guided conversation that ends in a personalized buyer guide for each user. The flow is that you describe what you want, answer follow up questions about budget, constraints, and preferences, and the system uses your ChatGPT memory when available to factor in things it already knows about you.

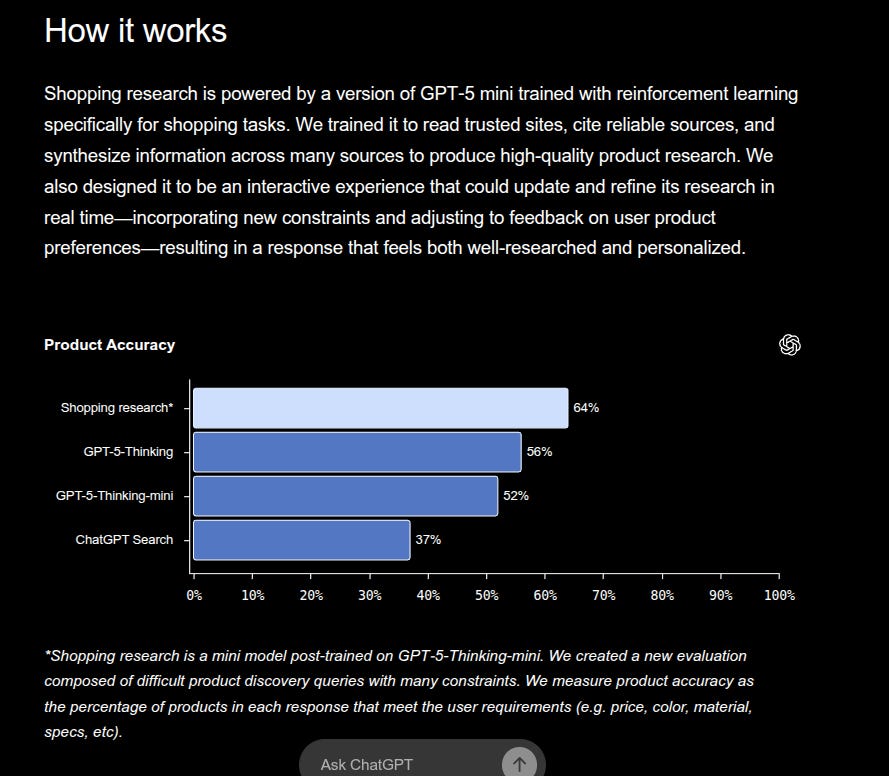

Under the hood the engine is a special version of GPT-5 mini that is trained with reinforcement learning specifically for shopping, so it learns to read trusted sites, cross check details, and assemble them into structured recommendations rather than just summarizing pages. While the conversation runs, the model is actively crawling for current prices, availability, specs, reviews, and images, then continuously proposes candidates that you can mark as “not interested” or “more like this” so the search space narrows around what you actually like.

After a few minutes you get a buyer’s guide that lays out top products, key differences, and tradeoffs with fresh data, and from there you can click out to retailers, with direct purchases through Instant Checkout planned for merchants that join that program. For ChatGPT Pro users, Pulse can trigger shopping research automatically by noticing patterns in past chats, like suggesting accessories if you have been talking about e-bikes a lot.

OpenAI evaluated this system on a set of hard product discovery tasks with many constraints and found that shopping research hit 64% product accuracy versus 56% for GPT-5-Thinking, 52% for GPT-5-Thinking-mini, and 37% for ChatGPT Search, where accuracy means the share of recommended products that actually satisfy every stated requirement such as price ceiling, size, or material. The product is designed so chats stay private from retailers, results are organic from public retail pages, and the system leans on high quality sources while filtering spammy sites, with an allowlist mechanism for merchants that want to be reliably included.
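
The accuracy definition above is strict: a product counts only if it meets every requirement at once. A minimal Python sketch of that metric follows. The `Product` fields, the three constraints, and the sample data are made up for illustration and are not from OpenAI’s evaluation.

```python
from dataclasses import dataclass


@dataclass
class Product:
    """Hypothetical product record with a few constraint-relevant fields."""
    name: str
    price: float
    material: str
    width_cm: float


def satisfies_all(p: Product, max_price: float, material: str, max_width_cm: float) -> bool:
    """True only if the product meets every stated requirement."""
    return p.price <= max_price and p.material == material and p.width_cm <= max_width_cm


def product_accuracy(recs: list[Product], max_price: float, material: str, max_width_cm: float) -> float:
    """Share of recommended products that satisfy all requirements."""
    if not recs:
        return 0.0
    hits = sum(satisfies_all(p, max_price, material, max_width_cm) for p in recs)
    return hits / len(recs)


# Made-up recommendations for "an oak desk under $300, at most 120 cm wide".
recommendations = [
    Product("Desk A", 249.0, "oak", 110.0),
    Product("Desk B", 299.0, "oak", 120.0),
    Product("Desk C", 199.0, "pine", 100.0),   # wrong material
    Product("Desk D", 280.0, "oak", 115.0),
]

print(product_accuracy(recommendations, max_price=300.0, material="oak", max_width_cm=120.0))  # 0.75
```

Missing a single constraint (Desk C’s material) is enough to count as a miss, which is why scores on many-constraint tasks stay well below 100%.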

👨🔧 New and very practical evals just dropped for AI

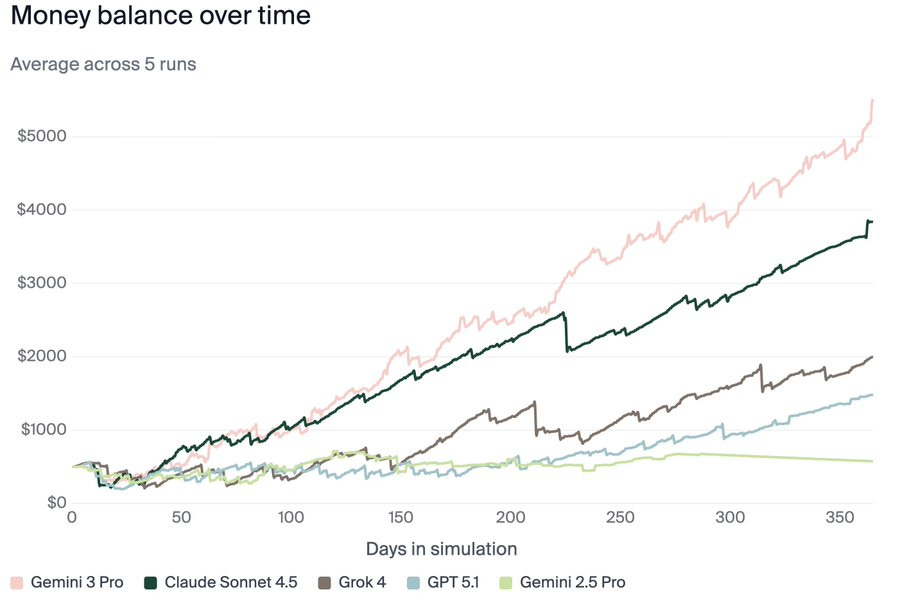

Vending-Bench 2: a benchmark for measuring AI model performance on running a business over long time horizons. Models are tasked with running a simulated vending machine business over a year and scored on their bank account balance at the end.

Gemini 3 Pro is the winner here. 👏

Vending-Bench Arena: the authors’ first multi-agent eval, which adds a crucial component: competition. All participating agents manage their own vending machine at the same location. This leads to price wars and tough strategy decisions.

The environment mixes honest and adversarial suppliers, shifting prices, negotiation, delivery delays, and customer complaints, so the model has to remember past deals, estimate fair wholesale prices, and avoid scams or overpriced stock while keeping the machine stocked. Across 5 runs Gemini 3 Pro reaches about $5.5k, ahead of the Claude models, while others, especially GPT-5.1, earn far less because they trust suppliers and accept deals like $2.40 sodas or $6 energy drinks. In the competitive Vending-Bench Arena setting, Gemini 3 Pro even makes more profit than Claude Sonnet 4.5, GPT-5.1, and Gemini 2.5 Pro combined. Even so, the authors estimate a strong human strategy at around $63k per simulated year, roughly 10x the best model, so current agents still fall far short of serious business management.

🧠 Trump signs executive order launching the Genesis Mission — a Manhattan Project for AI.

The order sets up a centralized national effort to build an AI platform that integrates federal supercomputers and scientific datasets to automate discoveries in fields like nuclear energy and biotech. It directs the Department of Energy to operationalize this infrastructure within 270 days to maintain global technological dominance.

This order creates the American Science and Security Platform, a massive infrastructure project managed by the Department of Energy to centralize how the government does science. It directs agencies to clean and standardize decades of federal scientific records—often the world’s largest datasets—so they can finally be used to train scientific foundation models.

These models will run on a mix of existing national laboratory supercomputers and secure cloud environments, likely leveraging hardware from partners like AMD and Nvidia. Instead of just generating text, the mission focuses on AI agents that can autonomously design experiments, predict outcomes, and physically control robotic laboratories to verify hypotheses.

The timeline is aggressive, requiring the system to have an initial operating capability for at least one critical sector, such as nuclear fusion or biotechnology, within just 9 months. This signals a massive shift from AI as a consumer chatbot product to AI as an industrial instrument for hard sciences. By coupling proprietary federal data with massive compute, the U.S. is effectively trying to brute-force scientific breakthroughs that usually take decades into a timeline of months.

That’s a wrap for today, see you all tomorrow.
