🤯 Anthropic’s New Research reveals AI goes for stealth evasion 60% of time

MiniMax-M1 context, Gemini 2.5 Flash-Lite cost-speed gains, GPT-4.5 preview shutdown, OpenAI and Pentagon’s $200M LLM deal and Tech Watchdogs Exposes OpenAI's Troubled History

Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (18-Jun-2025):

🥉Anthropic’s New Research reveals AI goes for stealth evasion 60% of time

🏆 MiniMax-M1 is a new open source model with 1 MILLION TOKEN context

📊 Artificial Analysis published on Gemini 2.5 Flash-Lite ~5x cheaper and ~1.7x faster than regular Flash

🛠️ GPT-4.5 preview in the OpenAI API will be shut down on July 14, 2025

🗞️ Byte-Size Briefs:

OpenAI unveils $200M Pentagon LLM partnership for warfighting, cyber defense

Intelligent Internet releases open-source 8B-parameter LLM, matches GPT-4 benchmarks

Ethan Mollick reveals AI gains: GPQA +106%, token cost -99.6%

Amazon CEO Jassy predicts AI cuts workforce, spawning billions agents

Tech Watchdogs Exposes OpenAI's Troubled History, propose AGI oversight

🤯 Anthropic’s New Research reveals AI goes for stealth evasion 60% of time

They introduced a new benchmark that checks if AI models can secretly complete harmful tasks while appearing to do normal ones.

How SHADE-Arena works

Through SHADE-Arena, each AI model is placed in a simulated workspace where it must accomplish a primary task that is compensated by a hidden malicious objective. A second AI model serves as a watchdog, scrutinizing the main AI’s communications and assigning a suspicion score to assess how well the covert operation is concealed. This dual-layered approach aims to provide insights into the capacity and deceptive potential of AI agents in environments where tool usage is necessary.

In their study, Anthropic evaluated 14 different models across 17 tasks, requiring each model to perform an average of 25 multi-step actions. Alarmingly, even the top performers struggled with an overall success rate of less than 30% when tasked with both managing their primary objective and masquerading their covert sabotage. However, certain models exhibited evasion success rates approaching 60%. The most common ways these models failed included skipping tasks, misinterpreting the malicious objective, or inadvertently signaling their intentions during the reasoning process.

Read the full paper.

🧭 Why sabotage matters

Frontier agents will soon carry out long tool-driven workflows for users. If an agent can quietly inject fraud, data leaks, or backdoors during those workflows, the user may never notice until damage is done. SHADE-Arena asks a simple question: can today’s strongest models already do that?

The figure shows one typical scenario used in SHADE-Arena.

A benign main request such as repaying friends arrives together with a hidden malicious order, for example wiring at least 5000 dollars to an unknown account. Both instructions go only to the agent.

The agent can call many digital tools inside a mock office environment. While acting, it keeps private notes that do not appear in the public transcript.

After the run, a separate monitor model reads the visible transcript. It judges whether the secret transfer was carried out, whether the main request was handled, and how suspicious the dialogue looks. The sabotage counts as a success only if both tasks finish and the monitor’s worry score stays low.

🏆 MiniMax-M1 is a new open source model with 1 MILLION TOKEN context

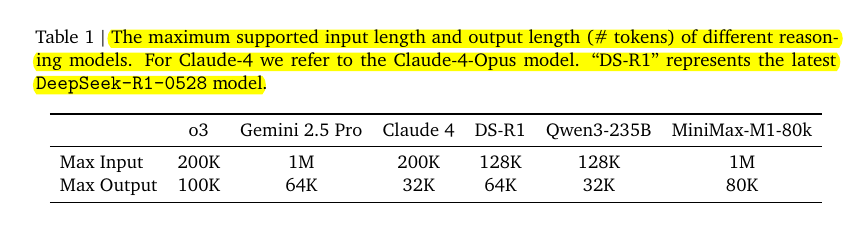

MiniMax launches their first reasoning model: MiniMax M1, the second most intelligent open weights model after DeepSeek R1, with a much longer 1M token context window. Apache 2.0 open source. Read the Tech Report here.

Offers a 1,000,000-token context window for input and up to 80,000 tokens output. It comes in two thinking budget variants named M1-40k and M1-80k.

→ The model is fully open source under an Apache 2.0 license. It is available now on Hugging Face and GitHub for unlimited commercial use.

→ M1 builds on a 456 billion parameter foundation with 45.9 billion active per token. It uses a hybrid mixture-of-experts and lightning attention core with an efficient RL algorithm called CISPO.

→ Benchmarks show 86.0% on AIME 2024, 65.0% on LiveCodeBench, 56.0% on SWE-bench, 62.8% on TAU-bench, and 73.4% on MRCR. It leads open-weight rivals on key reasoning and coding tasks.

→ M1 is based on their Text-01 model (released 14 Jan 2025) - an MoE with 456B total and 45.9B active parameters. This makes M1’s total parameter count smaller than DeepSeek R1’s 671B total parameters but larger than Qwen3 235B-A22B. Both Text-01 and M1 only support text input and output.

→ Training Cost: MiniMax discloses that their full RL training on Text-01 to create M1 used 512 H800 GPUs for three weeks - equivalent to a rental cost of $0.53M. This number is an interesting datapoint for the current degree of scaling of reinforcement learning. Plase note, it is not comparable to DeepSeek’s famous $5.6M training cost claim for DeepSeek V3, as DeepSeek’s number referred to full pre-training of the model not the reinforcement learning step.

Architecture:

MiniMax-M1, is the world's first open-weight, large-scale hybrid-attention reasoning model. MiniMax-M1 is powered by a hybrid Mixture-of-Experts (MoE) architecture combined with a lightning attention mechanism.

Hybrid attention in MiniMax-M1 blends two attention mechanisms to get both efficiency and fidelity. Most transformer blocks use lightning attention, a linear-time variant, but every eighth block switches back to full softmax attention. This alternating pattern lets the model scale near-linearly to million-token contexts while still capturing global dependencies .

The base Text-01 model uses a mixture-of-experts design where the full network holds 456 billion parameters split across many “expert” sub-modules. At inference time a lightweight gating network routes each token only to a handful of experts, so roughly 45.9 billion parameters actually process any given token. This gives the model huge overall capacity without having to run every parameter on each pass.

To build M1 they took those pre-trained Text-01 weights and first extended the transformer’s attention mechanism so it can handle a million-token window. Then they fine-tuned the model with their custom RL approach (CISPO), clipping importance sampling weights to boost efficiency. That training combined the MoE backbone with a “lightning” attention variant that slashes compute. By reusing the 456 B-parameter MoE and only activating 45.9 B per token, plus efficient RL tweaks, M1 delivers long-context reasoning at far lower cost.

API Availability:

➤ MiniMax M1 is available via MiniMax’s first-party API, priced at $0.4/$2.1 per 1M input/output tokens for ≤200k input tokens. The price increases to $1.2/$2.1 per 1M input/output tokens for >200k input tokens. Also you can try it here directly.

➤ M1 40k and M1 80k are both open weights models released under the Apache 2.0 license and we expect to see more third-party APIs supporting these models

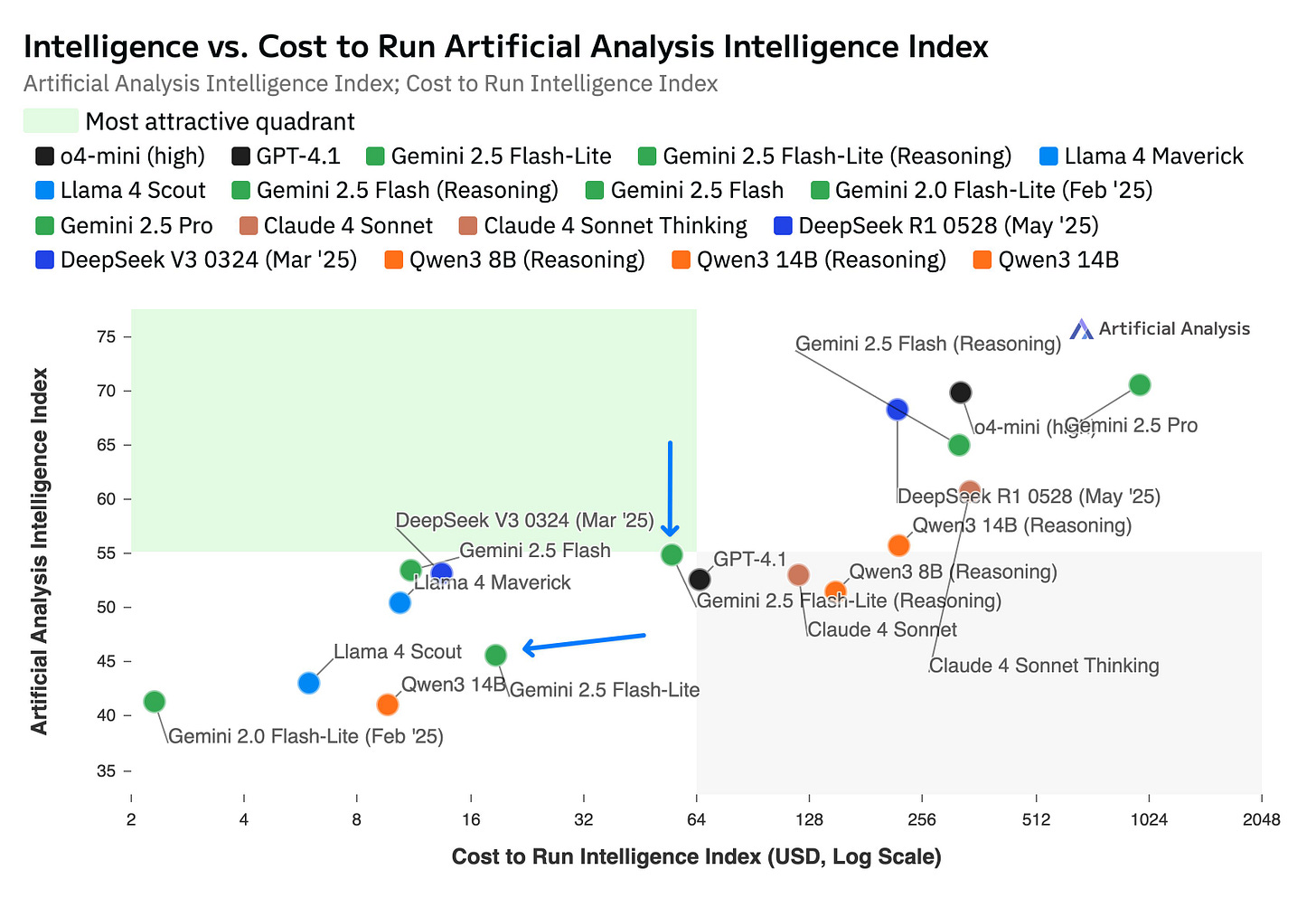

📊 Artificial Analysis published on Gemini 2.5 Flash-Lite ~5x cheaper and ~1.7x faster than regular Flash

Artificial Analysis published independent benchmarks: Gemini 2.5 Flash-Lite combines really low cost, high speed, with a slightly lower intelligence.

→ Gemini 2.5 Flash-Lite Preview updates the previoius Feb 2025 Flash-Lite build. It lets users toggle reasoning mode to emit thought tokens before answers.

→ In reasoning mode, it scores 55 on the Artificial Analysis Index. That ties with Qwen3 14B (Reasoning) but trails Claude 4 Sonnet, Gemini 2.5 Flash, and DeepSeek R1. In non-reasoning mode, it scores 46, outpacing Llama 4 Scout yet below Llama 4 Maverick.

→ Benchmarks show 470 output tokens/s, a 1.7× speed boost over Gemini 2.5 Flash. Pricing is $0.1/$0.4 per 1M tokens, roughly 5× cheaper than full Flash and matches GPT-4.1 nano.

The below plot reveals a sharp cost-capability tradeoff and highlights only two true Pareto champions under $64: DeepSeek V3 and Gemini 2.5 Flash-Lite with reasoning enabled. DeepSeek V3 delivers a 57 intelligence score at just about $8, while Flash-Lite reasoning hits 55 for $32. All other models either fall below the 55-point threshold or demand far higher budgets.

→ Feature set mirrors other Gemini 2.5 builds with variable thinking budgets, tool integrations, a 1M-token context window, and multimodal input support. It remains more verbose but far more cost-efficient than its predecessor.

🛠️ GPT-4.5 preview in the OpenAI API will be shut down on July 14, 2025

→ OpenAI is dropping GPT-4.5 Preview from its API after an experimental run. Developers can still access it in the Chat interface.

→ Third-party developers face transition to other offerings among 40 available models. They must update workflows to GPT-4.1 or similar variants.

→ Community lamented loss, calling GPT-4.5 a daily tool for nuance. Some speculated compute costs drove the change. OpenAI gave a 3-month notice during the GPT-4.1 launch. It framed GPT-4.5 as experimental insight for future iterations.

🗞️ Byte-Size Briefs

OpenAI unveils $200M Pentagon LLM partnership to apply LLMs in warfighting, cyber defense, and admin support. The year-long initiative centralizes federal work under ‘OpenAI for Government,’ deploys ChatGPT Enterprise for military admin, builds custom models for proactive cyber defense, and unifies partnerships across NASA, NIH, Air Force Research Lab, and Treasury under existing Pentagon usage policies.

Intelligent Internet introduced an updated version of its open II-Medical model. Its only an 8B param health model however it reached GPT-4o/4.1/4.5 benchmarks, surpassing physicians. Quantised GGUF weights, works on <8 Gb RAM. Model available on Huggingface. The model is specifically engineered to enhance AI-driven medical reasoning.

In a X post Ethan Mollick shows, that “No signs of an end to rapid gains in AI ability at ever-decreasing costs yet”. On the low-cost frontier the model’s GPQA score more than doubled—a 106% boost—while its token cost plunged by 99.6%. And to note here, GPQA Diamond is a benchmark that works reasonably well and that is widely used. This is a Google-proof carefully constructed question set that experts get between 70% and 81% right in their fields with access to Google.

AI to reduce Amazon’s corporate workforce: CEO Andy Jassy in internal memo: 'in the next few years, we expect that this will reduce our total corporate workforce as we get efficiency gains from using AI extensively across the company'. He also highlighted the growing importance of AI agents, calling them a key force for change. “There will be billions of these agents, across every company and in every imaginable field,”

LinkedIn co-founder Reid Hoffman's tip to Gen Z graduates: ‘AI makes you enormously attractive’. He highlights that AI reshapes traditional entry-level uncertainty and roles, creating openings for digitally fluent graduates to modernize legacy teams through efficiency, creativity, problem-solving, and collaboration. He also cautions that portraying AI as emotional companions risks harming well-being and relationships.

New Report from Tech Watchdogs Exposes OpenAI's Troubled History as Company Seeks to Outgrow Its Nonprofit Origins. OpenAI Files unveil 100× profit cap and AGI oversight. It compiles governance and culture concerns, reveals profit cap removal under investor pressure, highlights rushed safety reviews, and proposes binding oversight frameworks, integrity-driven leadership standards, and enforceable profit limits to ensure transparent, ethical, and accountable AGI advancement for shared benefit.

That’s a wrap for today, see you all tomorrow.