Apple finally chooses Google's Gemini for its AI

Apple picks Gemini, Anthropic launches healthcare & coding agents, 1X shows video-to-action robotics, and new blog on future of LLM scaling.

Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (13-Jan-2026):

🚨 Apple finally chooses Google’s Gemini for its AI

🧪 Anthropic Unveils “Claude for Healthcare” to Help Users Understand Medical Records

📡 1X is presenting a “video-to-action” world-model approach for its NEO robot.

🛠️ Anthropic is rolling out Cowork to bring Claude Code–style agent execution into normal, file-based work that people do every day.

👨🔧 In her compelling essay, Sara Hooker calls this out: “The slow death of scaling.”

🚨 Apple finally chooses Google’s Gemini for its AI

Apple and Google finally have signed a multi-year AI partnership that puts Gemini inside Apple Intelligence on iPhone, iPad, and Mac. It puts Google’s model in front of Apple’s 2B+ active devices, which is a reach no other AI lab can buy. It weakens OpenAI’s position on Apple, since ChatGPT stays as an opt-in helper while Gemini sits closer to the default Siri brain.

It likely boosts Google Cloud demand too, since Google is supplying cloud technology for large AI workloads behind these features. Google says the next Apple Foundation Models will be based on Gemini models and Google cloud tech, including a more personalized Siri later in 2026.

Apple could be paying Google around $1 billion. Apple says user data will not be shared with Google for ads or profiling.

Apple says it will keep control of how the models are integrated across iOS, iPadOS, and macOS. Most requests are meant to run on-device, so compute happens locally.

For heavier tasks, requests go through its Private Cloud Compute servers rather than being sent straight to Google. The Siri upgrade aims for better context handling, so it can follow intent and follow-ups more reliably.

Apple added a user-enabled ChatGPT option in late 2024, but Gemini would sit closer to Siri as the core intelligence layer. OpenAI and Google competed for the contract, and Google gains reach across Apple’s 2B active devices.

Alphabet topped $4T in market value after the announcement. If Apple can keep the privacy boundary clear while using third-party models, more companies will copy this pattern.

Apple and Google often look like pure rivals, but behind the scenes they have shared a tight and complicated relationship for over 10 years. A big part of that connection is a long-running deal that made Google the default search engine on Apple devices, a setup that once drove nearly half of Google’s search traffic. Apple’s former general counsel, Bruce Sewell, once called this kind of relationship “co-opetition,” meaning the companies fight hard in the market while still needing each other to function.

For years, Google paid Apple as much as $20B per year to stay the default search option inside Safari. After a long antitrust case, a federal judge ruled last fall that Google could keep making those payments. That decision cleared the path for this week’s announcement. Even though the rumored $1B per year payment from Apple to Google sounds small by big tech standards, both sides still gain a lot from the arrangement.

That relatively modest payment shows how much both companies benefit. It helps Apple and Google protect their positions against fast-moving AI startups that could threaten their dominance. From Apple’s side, analysts say this is a relief. The company struggled with its AI strategy after promising more than it delivered in mid-2024. This long-term deal lets Apple step back from trying to be a frontier AI model builder and instead focus on user experience, using another company’s AI as the base. It also gives Apple a real shot at competing in the race for AI agents that are useful enough to truly break into the consumer market.

🧪 Anthropic Unveils “Claude for Healthcare” to Help Users Understand Medical Records

This is Health Insurance Portability and Accountability Act (HIPAA)-ready workflows for payers (like an insurance company, an employer health plan), providers (like a doctor, nurse, hospital), and patients, plus tooling for trials and regulatory work. In the US, Pro and Max subscribers can link lab results via HealthEx, Function, Apple Health, and Android Health Connect, then get summaries and suggested questions.

Users choose what to share, can revoke access, health data is not used for training, and Claude is designed to note uncertainty and point users to clinicians. Agent skills add Fast Healthcare Interoperability Resources (FHIR) support and a prior authorization review template that cross-checks records and coverage rules.

Life sciences connectors add Medidata, ClinicalTrials[.]gov, ToolUniverse’s 600 vetted tools, bioRxiv, medRxiv, Open Targets, ChEMBL, and Owkin’s pathology agent. New life sciences skills draft trial protocols using Food and Drug Administration (FDA) and National Institutes of Health (NIH) requirements, track enrollment and site performance, and help prepare submissions. These features are available across Claude plans and 3 clouds.

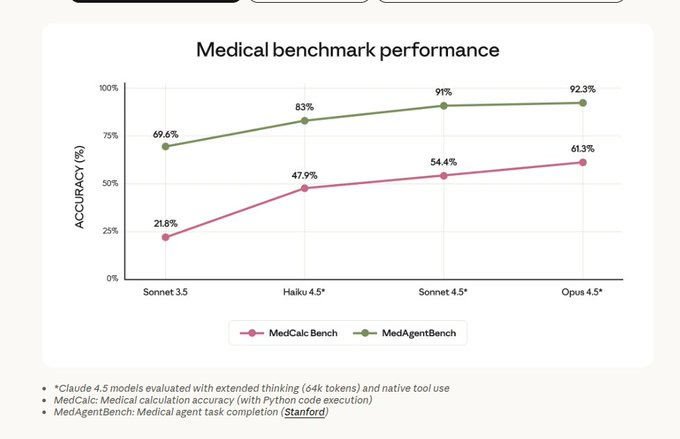

Opus 4.5 with 64K-token extended thinking and Python code execution scores 61.3% on MedCalc Bench, a medical calculation test, and 92.3% on MedAgentBench, a medical task suite, up from 21.8% and 69.6% on Sonnet 3.5, and it scores better on honesty tests for factual hallucinations.

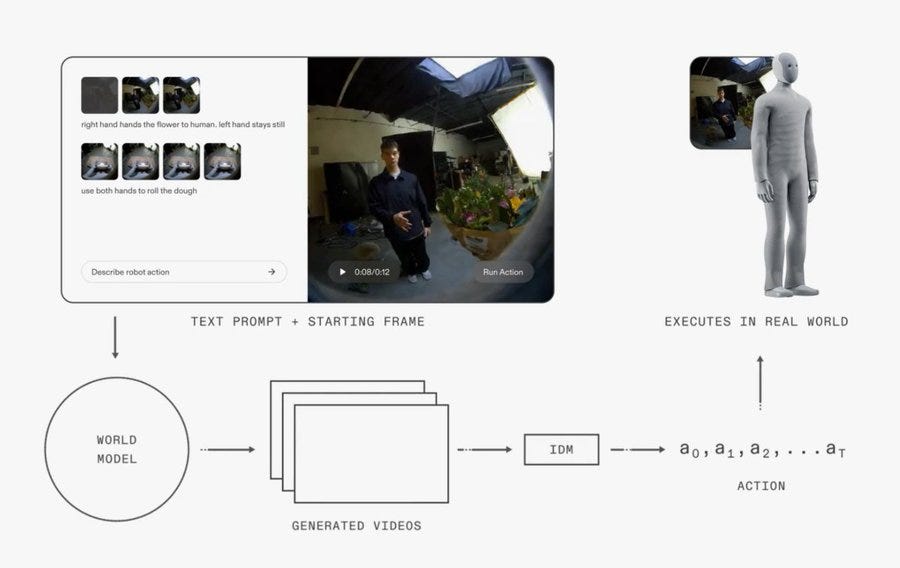

📡1X is presenting a “video-to-action” world-model approach for its NEO robot.

The big deal is that it swaps “predict actions directly” for “predict how the world will look next”, which lets the system reuse strong video prediction models trained on lots of human footage with far less robot data. In this setup, the world model handles high-level planning by generating plausible future motion, and a separate inverse dynamics model (IDM) translates frame-to-frame changes into joint commands that the robot can execute.

That division matters because collecting robot demonstrations is expensive and slow, while internet and egocentric human video is cheap and covers many objects, motions, and lighting conditions that help generalization. The work also shows that sampling multiple imagined futures at test time and picking a better one can noticeably raise task success, which is closer to how video diffusion models are often used.

The system, called 1X World Model (1XWM), takes a text prompt plus the current camera frame, predicts a 5seconds rollout, then executes it. Web-scale video pretraining is adapted with 900hours of egocentric human video and 70hours of robot fine-tuning .

Typical vision-language-action models (VLAs) add an action head to a vision-language model (VLM) and can need 10,000s of robot hours for reliable skills. 1XWM uses a text-conditioned diffusion world model to predict how scenes, hands, and objects will move .

An inverse dynamics model (IDM) converts the rollout frames into actuator commands, and it rejects generations that break kinematic limits . The IDM trains on 400hours of unfiltered robot logs with a Depth Anything depth backbone and runs with W=8frames .

Checkpoints are chosen with dynamic time warping, which scores how close extracted actions match ground truth over time . In 30 trials per task, success rate, the share of attempts that finish, stayed stable on in-distribution and out-of-distribution tasks .

Choosing the best of 8 rollouts raised pull tissue from 30% to 45% success, showing test-time sampling matters . Monocular depth errors still cause overshoot or undershoot, and latency is about 11seconds for 5seconds of video plus 1second for actions .

🛠️ Anthropic is rolling out Cowork to bring Claude Code–style agent execution into normal, file-based work that people do every day.

Users share a specific folder, and Claude can read, edit, or create files inside it.

It is a research preview for Claude Max subscribers, with optional connectors, skills, and Claude in Chrome for browser tasks. Cowork is an agent mode, meaning Claude plans work and takes actions, not just chats.

After a task is set, Claude writes a plan, executes steps, and reports progress along the way. Examples include sorting downloads, turning screenshots into an expense spreadsheet, and drafting a report from scattered notes.

Connectors link Claude to external information, and Cowork adds file-creation skills for documents and presentations. Paired with Claude in Chrome, the same workflow can handle tasks that need web access.

Cowork supports queuing tasks and adding feedback midstream, so it feels less like back-and-forth. Users choose which folders and connectors are visible, and Claude asks before taking significant actions.

File access makes mistakes costlier, including destructive actions like deleting local files after misreading an instruction. Prompt injection is another failure mode, where web content tries to redirect the plan during browsing.

Anthropic says it will iterate quickly from this preview, including cross-device sync and a Windows version. Many of these features already existed in Claude Code, but not everyone realized they could use it that way. Plus, Claude Code took a bit more technical skill to get running. Anthropic wants Cowork to be easy enough that anyone—from developers to marketers—can start using it instantly. The company says it began building Cowork after noticing that people were already using Claude Code for general knowledge tasks.

Anthropic acknowledged however, that using Cowork at this early stage of its development isn’t totally without risk. While Cowork will ask users for confirmation before taking any significant actions, any ambiguous instructions could lead to disaster: “The main thing to know is that Claude can take potentially destructive actions (such as deleting local files) if it’s instructed to. Since there’s always some chance that Claude might misinterpret your instructions, you should give Claude very clear guidance around things like this.”

👨🔧 In her compelling essay, Sara Hooker calls this out: “The slow death of scaling.”

The author argues compute alone is no longer a reliable path, because efficiency wins on real tasks.

The AI industry spent years believing that scale alone creates breakthroughs. Bigger models, more compute, better outcomes. That story no longer holds.

💡 Compact models are outperforming huge systems while using far less compute. This is a pattern, not luck.

What matters now is optimization, not excess. Smaller and faster models that are well-aligned are outpacing heavyweight systems built on raw force. Design choices, usability, and real-world flexibility carry as much weight as technical depth.

Saying that scaling might be fading is controversial in many circles, but the essay looks closely at what we actually get back and shows how uncertain that payoff really is. For deep neural networks especially, scaling training compute is very inefficient. Huge costs go toward learning rare edge-case features, and the signs point to shrinking returns.

Real progress now comes from new learning spaces. That shift changes what matters in computer science, because when model size stops doubling every year, how models learn from their environment and adapt to new information becomes far more important.

That’s a wrap for today, see you all tomorrow.