Are reasoning models like o1/o3, DeepSeek-R1, and Claude Sonnet really "thinking"? 🤔

AI-human job takeover, Claude vs ChatGPT, and new drops from Google, Higgsfield, and OpenAI—plus fresh datasets and scrolls re-timed.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (9-Jun-2025):

🧠 Apple’s new research says more thinking stops helping once tasks cross a critical depth

📢 OpenAI rolls out Advanced Voice Mode with more voices and a new look

📡 Claude and ChatGPT now directly compete with top AI apps.

🛠️ AI will do 100% of human jobs, says Sutskever—just a matter of time. Why? Because brains = biological computers

🗞️ Byte-Size Briefs:

Researchers use AI handwriting model to re-date scrolls by 100 years

Google launches Portraits: expert-trained AI avatars with real voices

Common Pile v0.1 releases 8TB open dataset for LLM training

Comma LLMs trained on 1T–2T tokens validate dataset quality

Higgsfield releases Speak: generate styled talking avatars in seconds

🧠 Apple’s new research says more thinking stops helping once tasks cross a critical depth

Apple researchers discuss the strengths and limitations of reasoning models.

Controlled puzzles expose hidden scaling limits in language model reasoning.

Reasoning models generate detailed chain-of-thought to improve benchmark performance but their true reasoning capabilities and scaling behavior remain unclear.

The study shows clear performance phases.

For simple tasks, non-reasoning LLMs beat large reasoning models (LRMs) by using faster, more direct computation.

For moderate tasks, reasoning models perform better by using extended chains of thought to fix mistakes.

But on complex tasks, all models fail badly, with accuracy dropping to almost zero.

This paper introduces controllable puzzle environments to systematically vary problem complexity and inspect both final answers and intermediate reasoning traces to reveal reasoning models’ strengths and limitations.

🔍 Overview of the Problem

Language models with explicit thinking mechanisms aim to solve complex tasks by producing long reasoning traces before arriving at an answer. Current evaluations rely on standard math and coding benchmarks that may be contaminated by training data and do not let researchers control problem complexity or examine the structure of reasoning traces. As a result, models might appear to reason when they are simply pattern matching familiar problems, and their ability to scale reasoning with increasing complexity remains poorly understood.

🔧 Approach Using Controllable Puzzle Environments

The authors replace traditional benchmarks with four algorithmic puzzles—Tower of Hanoi, Checker Jumping, River Crossing and Blocks World—in which they can adjust complexity precisely by changing parameters like number of disks or actors. They design simulators that verify each reasoning step and final solution exactly against puzzle rules. This setup avoids data contamination and focuses on pure algorithmic reasoning based solely on provided rules.

⚙️ Three Regimes of Reasoning Behavior

Experiments compare paired thinking and non-thinking models under the same token budgets. At low complexity, standard models often match or exceed the accuracy of models with explicit thinking while using fewer tokens. At moderate complexity, thinking models gain a clear edge by exploring longer reasoning traces. Beyond a threshold complexity both model types collapse to zero accuracy. This collapse occurs even when reasoning models have unused token budgets and despite learned self-reflection mechanisms.

🧠 Insights from Reasoning Traces

By extracting intermediate solutions from chain-of-thought traces, the paper shows how correct and incorrect paths evolve during reasoning. In simple puzzles, reasoning models often find the correct path early but then pursue wrong moves, illustrating an overthinking effect. In medium complexity they discover correct solutions only after exploring many incorrect alternatives. At high complexity they fail to produce any correct intermediate steps, indicating a breakdown of self-correction.

❓ Surprising Findings and Open Questions

Providing explicit solution algorithms to models does not improve their performance or delay collapse, suggesting they struggle to execute exact logical steps even when guidelines are available. Models also behave unevenly across different puzzle types, succeeding in lengthy Tower of Hanoi sequences yet failing quickly on simpler River Crossing tasks. These results raise fundamental questions about their symbolic manipulation and algorithm execution capabilities.
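For context on what "an explicit solution algorithm" looks like for Tower of Hanoi, here is the standard textbook recursive procedure as a minimal sketch. Whether it matches the exact wording the paper gave to the models is an assumption; the peg indices (0, 1, 2) and the `(disk, from_peg, to_peg)` triple format are illustrative choices.

```python
def hanoi_moves(n, source=0, target=2, spare=1):
    """Return the optimal move list for n disks as (disk, from_peg, to_peg) triples.

    Disk 1 is the smallest; pegs are numbered 0, 1, 2 (an illustrative convention).
    """
    if n == 0:
        return []
    # Move the n-1 smaller disks to the spare peg, move disk n, then restack.
    return (
        hanoi_moves(n - 1, source, spare, target)
        + [(n, source, target)]
        + hanoi_moves(n - 1, spare, target, source)
    )


moves = hanoi_moves(3)
print(len(moves))  # 7
print(moves[0])    # (1, 0, 2)
```

The procedure is a few lines long, yet executing it faithfully for larger `n` means producing every move in order without a single slip, which is the part the models reportedly struggle with.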

How the researchers evaluated Large Reasoning Models (LRMs)

Rather than relying on conventional math benchmarks, which suffer from contamination and lack structure, the authors evaluate LRMs using four controllable puzzles (Tower of Hanoi, Checker Jumping, River Crossing, and Blocks World) that allow fine-grained complexity scaling and transparent trace analysis.

The left panel shows how the language model tags its own reasoning with a “think” section, listing each move it plans and then a separate “answer” section with the final solution. The right side translates those move lists into visual puzzle states, showing how disks shift from the starting arrangement through a middle step to the target arrangement.

⚙️ How moves map to states

The model’s reasoning trace records moves as triples indicating which disk moves from which peg to which peg. By applying each triple in order, the diagram recreates the puzzle’s progression from its initial setup to an intermediate layout and finally to the solved state. This makes it possible to check every step against the puzzle rules.
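The step-by-step check described above can be sketched as a tiny simulator. This is not the paper's code: the function name `apply_moves`, the 0-based peg numbering, and the return format are all assumptions made for illustration.

```python
def apply_moves(n_disks, moves):
    """Replay (disk, from_peg, to_peg) triples and check each against the rules.

    Returns (solved, message, pegs). Pegs are numbered 0, 1, 2 and all disks
    start on peg 0; the goal is to rebuild the full stack on peg 2.
    """
    # Each peg is a list from bottom to top; disk n is the largest.
    pegs = [list(range(n_disks, 0, -1)), [], []]
    for step, (disk, src, dst) in enumerate(moves, start=1):
        if src not in (0, 1, 2) or dst not in (0, 1, 2):
            return False, f"step {step}: unknown peg", pegs
        if not pegs[src] or pegs[src][-1] != disk:
            return False, f"step {step}: disk {disk} is not on top of peg {src}", pegs
        if pegs[dst] and pegs[dst][-1] < disk:
            return False, f"step {step}: disk {disk} placed on a smaller disk", pegs
        pegs[dst].append(pegs[src].pop())
    solved = pegs[2] == list(range(n_disks, 0, -1))
    message = "solved" if solved else "legal moves, but not solved"
    return solved, message, pegs


print(apply_moves(2, [(1, 0, 1), (2, 0, 2), (1, 1, 2)])[:2])  # (True, 'solved')
print(apply_moves(2, [(2, 0, 2)])[:2])  # (False, 'step 1: disk 2 is not on top of peg 0')
```

Because the checker reports the first illegal step, it can grade intermediate reasoning as well as the final answer.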

📊 Performance and reasoning length

Below, three charts plot accuracy, response length, and the position of correct versus incorrect steps as puzzle complexity grows. Accuracy stays high at low complexity but drops to zero once problems become too large. The token cost of chain-of-thought reasoning climbs sharply with complexity, even when it no longer yields correct answers.

🧠 Patterns in reasoning traces

The third chart tracks when correct moves appear in the reasoning trace versus wrong moves. At simple levels the model often finds the right move early yet continues exploring wrong options. At medium complexity it only reaches the correct path after many mistakes. At high complexity it fails to produce any valid intermediate steps, showing that extra thinking does not prevent collapse.
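One way to put a number on this pattern is to record where in the trace the first correct candidate solution appears. The sketch below is hypothetical: it assumes candidate solutions have already been extracted from the trace and graded, and the `(token_offset, is_correct)` layout is an invented convenience, not the paper's data format.

```python
def first_correct_position(candidates, trace_length):
    """Relative position (0.0 to 1.0) of the first correct candidate in a trace.

    candidates: list of (token_offset, is_correct) pairs in trace order.
    Returns None when no candidate is correct.
    """
    for offset, is_correct in candidates:
        if is_correct:
            return offset / trace_length
    return None


# Hypothetical traces, 1000 tokens each.
simple = [(250, True), (600, False), (900, False)]   # right early, then wanders
medium = [(200, False), (500, False), (750, True)]   # right only after mistakes
hard = [(300, False), (800, False)]                  # never right

print(first_correct_position(simple, 1000))  # 0.25
print(first_correct_position(medium, 1000))  # 0.75
print(first_correct_position(hard, 1000))    # None
```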

Four simulators (Tower of Hanoi, Checker Jumping, River Crossing, Blocks World) raise complexity smoothly while rules stay fixed.

The illustration highlights that all puzzles share a consistent logical structure while allowing complexity to vary, such as disk count in Tower of Hanoi or token positions in Checker Jumping. The mapping makes it possible to quantitatively measure reasoning effort and accuracy in terms of step-by-step compliance. It also shows how models behave across three regimes (low, medium and high complexity), revealing where chain-of-thought adds value and where it collapses.

More disks make the Tower of Hanoi puzzle harder. At 1-3 disks the plain model is both accurate and brief; at 4-7 disks the thinking version gains accuracy by spending many extra tokens; past about 8 disks both crash to 0% and the thinking model even writes fewer thoughts.

This shows current chain-of-thought scaling breaks beyond a small depth.
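A quick piece of arithmetic shows why disk count is such a sharp complexity dial. The shortest Tower of Hanoi solution doubles in length with every added disk; this is the well-known closed form for the puzzle, not a figure taken from the paper.

```latex
M(0) = 0, \qquad M(n) = 2\,M(n-1) + 1 \;\Longrightarrow\; M(n) = 2^{n} - 1

M(3) = 7, \qquad M(7) = 127, \qquad M(8) = 255, \qquad M(10) = 1023
```

So a correct 8-disk answer needs at least 255 moves with no errors, compared with 7 moves for 3 disks.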

📢 OpenAI rolls out Advanced Voice Mode with more voices and a new look

OpenAI upgrades ChatGPT Voice: adds live translation + smoother cadence + emotional expressiveness

⚙️ The Details

→ OpenAI is rolling out Advanced Voice Mode (AVM) to all ChatGPT Plus and Team users. Enterprise and Edu users get it next week. This update adds live translation, five new voices, and better emotional nuance during speech.

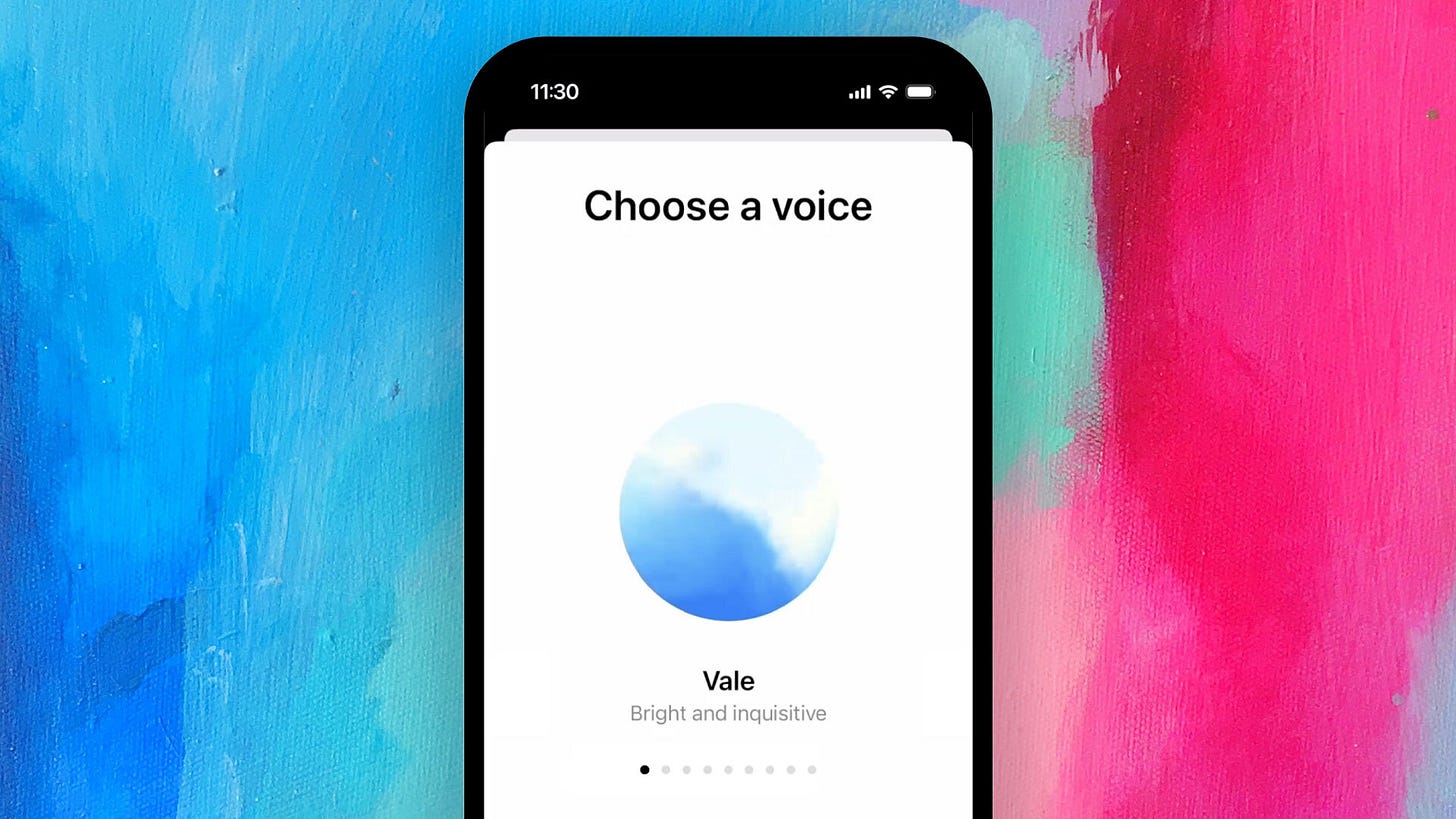

→ AVM now features a redesigned UI. The old black dots are replaced with a blue animated sphere. Users will see a pop-up in the ChatGPT app when the feature becomes available.

→ The new voices—Arbor, Maple, Sol, Spruce, and Vale—bring the total to nine. Voice selection focuses on sounding natural. Sky, the voice linked to legal threats from Scarlett Johansson, is removed.

→ ChatGPT’s voice now handles real-time translation across 50+ languages. Once activated, it continues translating without needing repeated prompts.

→ Expressiveness has improved with more natural cadence, better intonation, and support for emotions like empathy or sarcasm. Accent understanding is also better, and conversations flow faster.

→ AVM now supports Custom Instructions and Memory. These features let users personalize how ChatGPT speaks and remember context across chats.

→ Some features shown earlier, like video or screen-sharing, are not yet available. OpenAI hasn’t given a timeline for these multimodal tools.

→ Known issues include occasional pitch glitches and hallucinated sounds. AVM is still not available in the EU, UK, Switzerland, Iceland, Norway, and Liechtenstein.

📡 Claude and ChatGPT now directly compete with top AI apps.

OpenAI & Anthropic start squeezing top AI apps: Windsurf blocked, Granola targeted by ChatGPT’s new feature. API startups now face model providers as direct competitors.

⚙️ The Details

→ Anthropic abruptly cut off Windsurf’s access to Claude 3.x with less than 5 days’ notice. Windsurf, a top coding assistant, is reportedly in acquisition talks with OpenAI. Anthropic claims it's focusing on “sustainable partnerships,” signaling a refusal to serve apps backed by rivals.

→ Windsurf was paying for full capacity but still got shut down. Anthropic’s move is seen as defensive posturing against OpenAI, which would directly benefit if the acquisition goes through.

→ OpenAI launched a new “record mode” in ChatGPT, starting with enterprise users. It transcribes meetings and writes summaries — the core function of Granola, which just raised $43M and launched a mobile app.

→ These moves highlight a growing conflict: model providers like OpenAI and Anthropic are building features that copy successful apps built on their own APIs.

→ Claude already competes with dev tools like Windsurf and Cursor. OpenAI is building teams to help enterprise users productize ideas, putting it further in competition with its own API customers.

→ Investors are warning: if AI labs keep absorbing the value chain, startups may shift to cloud incumbents (Google, Amazon, Microsoft) for infrastructure stability and neutrality.

→ Industry leaders now question whether model providers can be trusted as platforms, or if they’ll chase every vertical themselves.

🛠️ AI will do 100% of human jobs, says Sutskever, just a matter of time. Why? Because brains = biological computers

Ilya Sutskever, co-founder of OpenAI, argues that AI will eventually perform all tasks humans can, not just some. His core claim: if the brain is just a biological computer, then a digital computer can do everything the brain does. No task is out of reach.

→ He ties this inevitability to accelerating AI capability. Even today, AI can hold fluent conversations, generate working code, and respond in real time. The deficiencies still visible are temporary and shrinking fast.

→ He avoids specific timelines but references commonly quoted windows like 3 to 10 years. The key idea: improvement is constant and exponential once AIs start assisting in AI development itself.

→ He frames this as both a challenge and opportunity. If AI reaches general capability, society must decide how to direct this intelligence—toward more research, more production, or potentially misuse.

→ Sutskever warns that no one can ignore this transformation. Like politics, AI will impact everyone—regardless of their interest in it. The only way to adapt is by paying attention and staying technically literate as it evolves.

→ He also briefly discusses the emotional difficulty of fully internalizing this future. Even AI leaders, he says, struggle to process how radical the shift will be.

→ His final point is that AI will likely be the biggest challenge—and biggest reward—humanity has ever faced.

🗞️ Byte-Size Briefs

⚡️AI re-dates Dead Sea Scrolls (a collection of ancient Jewish manuscripts found close to the Dead Sea) by 100 years using handwriting analysis. Researchers trained an AI model to link handwriting styles to known radiocarbon dates, letting it non-destructively estimate the age of ancient texts. This method suggests some scrolls date back 2,300 years, aligning them closer to their authors’ lifetimes.

Google launched Portraits, a Labs experiment allowing users to have personalized experiences with AI versions of experts based on their voice and knowledge base. Portraits is an AI chat experience where users interact with avatars of real experts. These are not generic assistants but models fine-tuned on an expert’s own materials and voice.

The Common Pile v0.1 has been released as open source. It's an 8TB dataset of public-domain and openly licensed text. It pulls from 30+ sources including arXiv, GitHub, Project Gutenberg, Wikipedia, Common Crawl, and U.S. government sites. This mix spans research, code, literature, and policy documents.

Each document goes through cleaning: language filtering, deduplication, PII removal, and quality scoring. This avoids noisy or low-value training data and ensures usable examples.
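To make those four stages concrete, here is a toy sketch of such a pipeline. It is not the Common Pile code: every heuristic below (ASCII ratio as a language filter, exact hashing for deduplication, an email regex for PII, a word-count floor for quality) is a deliberately crude stand-in, and all names are hypothetical.

```python
import hashlib
import re

# Stand-in PII pattern: matches simple email addresses only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def clean_corpus(docs, min_words=5):
    """Toy cleaning pass: language filter, dedup, PII redaction, quality floor."""
    seen = set()
    kept = []
    for text in docs:
        # Language filter stand-in: keep documents that are mostly ASCII.
        ascii_ratio = sum(ch.isascii() for ch in text) / max(len(text), 1)
        if ascii_ratio < 0.9:
            continue
        # Exact deduplication on lowercased, whitespace-normalized text.
        normalized = " ".join(text.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        # PII removal stand-in: redact email addresses.
        redacted = EMAIL.sub("[EMAIL]", text)
        # Quality score stand-in: drop very short documents.
        if len(redacted.split()) < min_words:
            continue
        kept.append(redacted)
    return kept


docs = [
    "Contact me at jane@example.com for the full report today.",
    "contact me at jane@example.com   for the full report today.",
    "too short",
]
print(clean_corpus(docs))
# ['Contact me at [EMAIL] for the full report today.']
```

Real pipelines typically use trained language identifiers, near-duplicate detection, and learned quality classifiers in place of these shortcuts.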

A custom tokenizer was trained on this dataset. A heuristic-based mixing strategy weights higher-quality sources more heavily during training prep.

To test the dataset’s quality, the team trained two LLMs: Comma v0.1-1T and Comma v0.1-2T. Both models are 7B parameters. One was trained on 1 trillion tokens, the other on 2 trillion tokens.

Unlike many datasets mixed from web crawl or semi-private sources, this one is 100% reproducible and legally safe for commercial use and open research.

The dataset is intended as a foundation for fully open-source LLM pipelines that avoid copyright and licensing ambiguity.

Higgsfield releases Speak: talking avatars from script to styled video in seconds. Speak turns your text into lifelike, motion-driven avatars with custom voices, emotions, and cinematic movement—no video editing needed, just pick a style, write a script, and it auto-generates the rest.

That’s a wrap for today, see you all tomorrow.