"ARR: Question Answering with LLMs via Analyzing, Retrieving, and Reasoning"


https://arxiv.org/abs/2502.04689

The paper addresses a limitation of current zero-shot Chain-of-Thought prompting: it offers only generic guidance to LLMs in Question Answering, which may not be sufficient for complex questions. The paper proposes a new prompting method to improve LLM performance on question answering tasks.

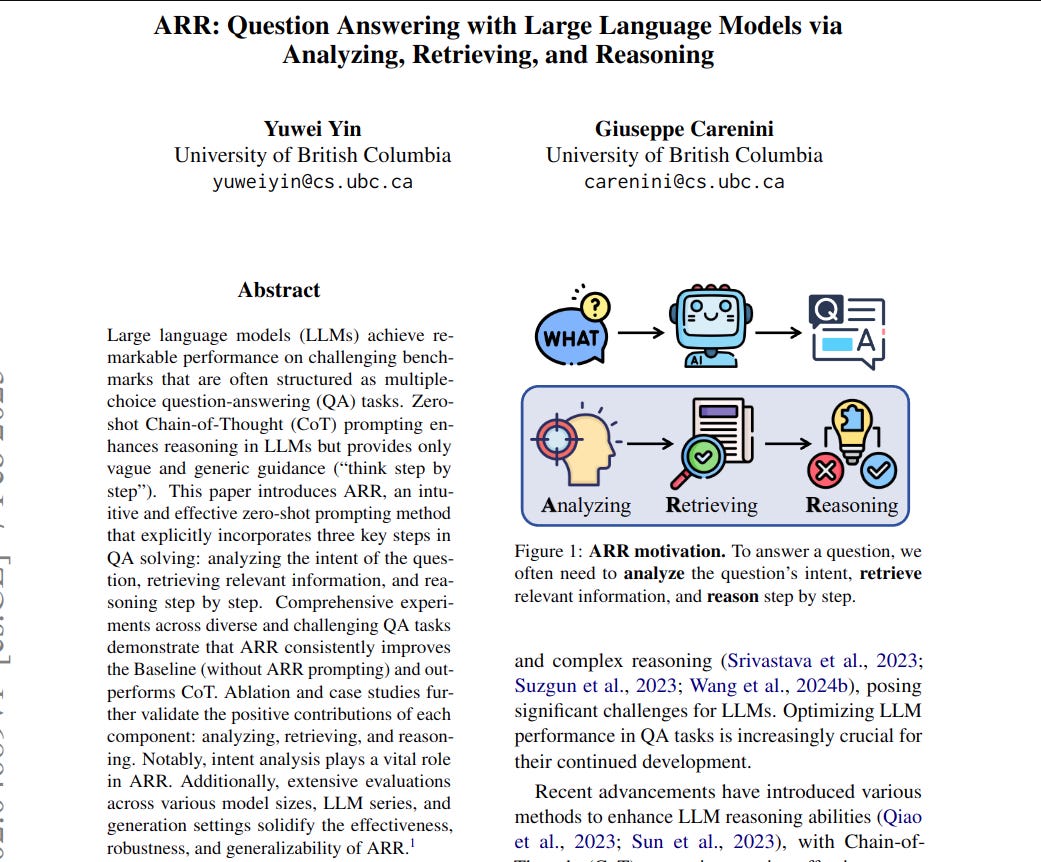

This paper introduces ARR, a zero-shot prompting method. ARR guides LLMs through Analyzing question intent, Retrieving relevant information, and Reasoning step-by-step.

-----

📌 ARR strategically decomposes question answering. It moves beyond generic 'think step by step'. ARR explicitly prompts for intent analysis before reasoning. This structured approach aligns better with human problem-solving. It yields consistent accuracy gains.

📌 ARR leverages implicit knowledge within LLMs more effectively. By prompting retrieval and analysis, it guides the model to access and use relevant internal representations. This contrasts with standard Chain-of-Thought, which relies on less guided generation.

📌 The effectiveness of "Analyzing" in ARR ablation studies highlights a key insight. Question understanding, not just reasoning, is a bottleneck in LLMs. ARR's intent analysis step directly addresses this, leading to significant performance improvements.

-----

Methods Explored in this Paper 🔧:

→ The paper proposes ARR, short for Analyzing, Retrieving, and Reasoning.

→ ARR is a novel zero-shot prompting method for Question Answering.

→ It explicitly incorporates three key steps into the prompt: analyzing question intent, retrieving relevant information, and step-by-step reasoning.

→ The ARR prompt is: "Let’s analyze the intent of the question, find relevant information, and answer the question with step-by-step reasoning."

→ This structured prompt aims to guide LLMs to perform these essential steps for effective question answering.
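
The prompt described above can be sketched as a small prompt builder. This is a minimal illustration, not the paper's code: the ARR sentence is taken from the text above and the Chain-of-Thought trigger is the standard "Let's think step by step.", but the `build_prompt` helper, the lettered option layout, and the `Answer:` prefix are assumptions made for this example.

```python
# Trigger sentences appended after "Answer:" in a zero-shot QA prompt.
ARR_TRIGGER = (
    "Let's analyze the intent of the question, find relevant information, "
    "and answer the question with step-by-step reasoning."
)
COT_TRIGGER = "Let's think step by step."  # standard zero-shot CoT trigger

# "baseline" uses no trigger sentence at all.
TRIGGERS = {"baseline": "", "cot": COT_TRIGGER, "arr": ARR_TRIGGER}


def build_prompt(question: str, options: list[str], method: str = "arr") -> str:
    """Format a multiple-choice question and append the chosen trigger.

    The layout (lettered options, "Answer:" prefix) is an assumption for
    illustration; it is not confirmed by the summary above.
    """
    if method not in TRIGGERS:
        raise ValueError(f"unknown method: {method!r}")
    lines = [f"Question: {question}"]
    for i, option in enumerate(options):
        lines.append(f"({chr(ord('A') + i)}) {option}")
    # rstrip() removes the trailing space when the trigger is empty.
    lines.append(f"Answer: {TRIGGERS[method]}".rstrip())
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt("Which planet is closest to the Sun?",
                       ["Venus", "Mercury", "Mars"]))
```

Swapping `method` between `"baseline"`, `"cot"`, and `"arr"` changes only the trigger sentence, which keeps a comparison between the three settings confined to the part of the prompt that ARR modifies.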

-----

Key Insights 💡:

→ Explicitly prompting LLMs to analyze question intent, retrieve information, and reason step-by-step significantly improves Question Answering performance.

→ Intent analysis is particularly crucial for performance gains in Question Answering.

→ Each component of ARR (Analyzing, Retrieving, Reasoning) individually improves performance over the Baseline and Chain-of-Thought methods.

→ ARR demonstrates effectiveness across different LLM sizes, series, and generation settings, showing strong generalizability.
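
One way to picture the per-component comparison is to build a trigger sentence from any subset of the three steps. The sketch below is hypothetical: this summary does not give the paper's exact ablation prompts, so the phrasing produced for partial subsets, the `COMPONENTS` table, and the `ablation_trigger` helper are all assumptions. Only the full three-component sentence matches the ARR prompt quoted earlier.

```python
# Hypothetical phrasing for each ARR component. The wording of the
# single-component variants is an assumption, not the paper's prompts.
COMPONENTS = {
    "analyze": "analyze the intent of the question",
    "retrieve": "find relevant information",
    "reason": "answer the question with step-by-step reasoning",
}
ORDER = ("analyze", "retrieve", "reason")


def ablation_trigger(enabled: set[str]) -> str:
    """Return a trigger sentence that mentions only the enabled components."""
    unknown = enabled - set(ORDER)
    if unknown:
        raise ValueError(f"unknown components: {sorted(unknown)}")
    parts = [COMPONENTS[name] for name in ORDER if name in enabled]
    if not parts:
        return ""  # no trigger: equivalent to a plain baseline prompt
    if "reason" not in enabled:
        # Without the reasoning step, still ask for an answer.
        parts.append("answer the question")
    if len(parts) == 1:
        body = parts[0]
    elif len(parts) == 2:
        body = " and ".join(parts)
    else:
        body = ", ".join(parts[:-1]) + ", and " + parts[-1]
    return f"Let's {body}."


if __name__ == "__main__":
    for subset in ({"analyze"}, {"retrieve"}, {"reason"}, set(ORDER)):
        print(sorted(subset), "->", ablation_trigger(subset))
```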

-----

Results 📊:

→ ARR improves average accuracy by 4.1% over the Baseline method across ten datasets.

→ ARR consistently outperforms zero-shot Chain-of-Thought prompting across all tested Question Answering datasets.

→ In ablation studies, even using only the "Analyzing" component of ARR achieves better average performance than Baseline and Chain-of-Thought.

→ ARR achieves 55.08% average accuracy across the BBH, MMLU, and MMLU-Pro datasets with the LLaMA3-8B-Chat model.
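
As a rough illustration of how an average gain like the one above could be computed, the sketch below takes the unweighted mean of per-dataset accuracies for each method and subtracts. Reading the reported gain as a difference of mean accuracies is an assumption, the `average_gain` helper is hypothetical, and the dataset names and numbers are placeholders, not results from the paper.

```python
from statistics import mean


def average_gain(baseline: dict[str, float], method: dict[str, float]) -> float:
    """Difference between the unweighted mean accuracies of two methods.

    Both dicts map dataset name -> accuracy in percent and must cover
    the same datasets.
    """
    if baseline.keys() != method.keys():
        raise ValueError("both methods must be evaluated on the same datasets")
    names = list(baseline)
    return mean(method[n] for n in names) - mean(baseline[n] for n in names)


if __name__ == "__main__":
    # Placeholder values for illustration only; not taken from the paper.
    toy_baseline = {"dataset_a": 50.0, "dataset_b": 60.0}
    toy_method = {"dataset_a": 55.0, "dataset_b": 63.0}
    print(f"average gain: {average_gain(toy_baseline, toy_method):.1f} points")
```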