🔬 Bad news for AI-based radiology. “doctor-level” AI in medicine is still far away.

Radiology AI underperforms, Gemini gets Nano Banana in CLI, few IT teams trust autonomous agents, Sora adds IP control, and Bezos calls the AI boom a healthy bubble.

Read time: 11 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (4-Oct-2025):

🔬 Bad news for AI-based radiology. “doctor-level” AI in medicine is still far away.

🏆 Gartner Survey Finds Just 15% of IT Application Leaders Are Considering, Piloting, or Deploying Fully Autonomous AI Agents

📡 Now Google’s Nano Banana is available in Gemini CLI Extension.

🎬 Sam Altman announced that OpenAI will give rightsholders more ‘granular’ control over how their characters appear in Sora videos and may share revenue from those uses.

🎯 Jeff Bezos explains how the AI boom is a ‘good’ kind of bubble that will benefit the world

🔬 Bad news for AI-based radiology. “doctor-level” AI in medicine is still far away.

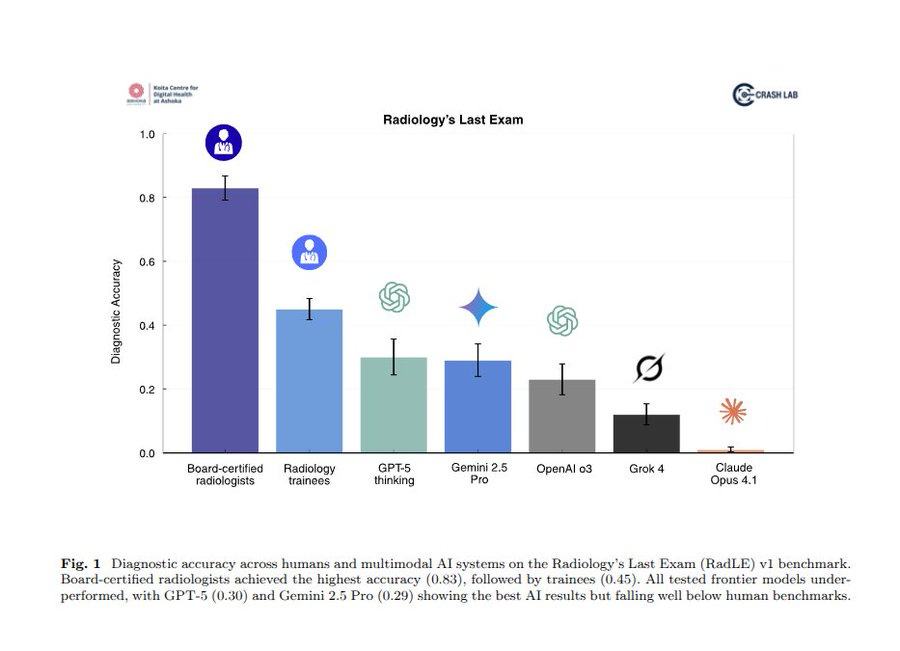

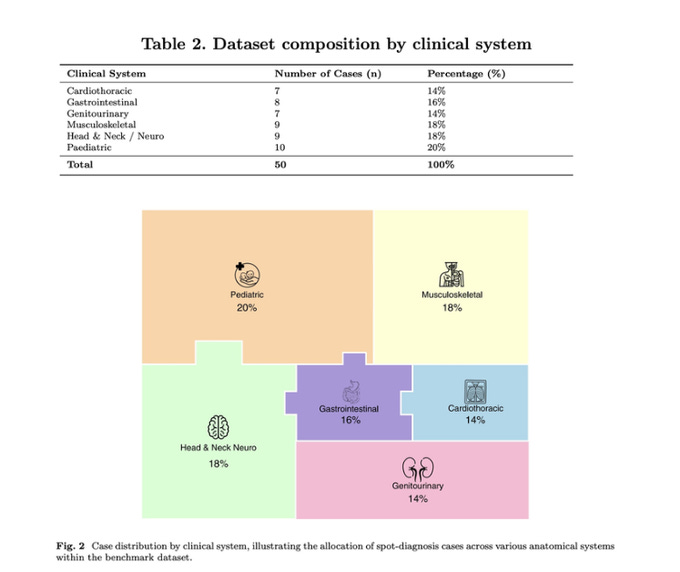

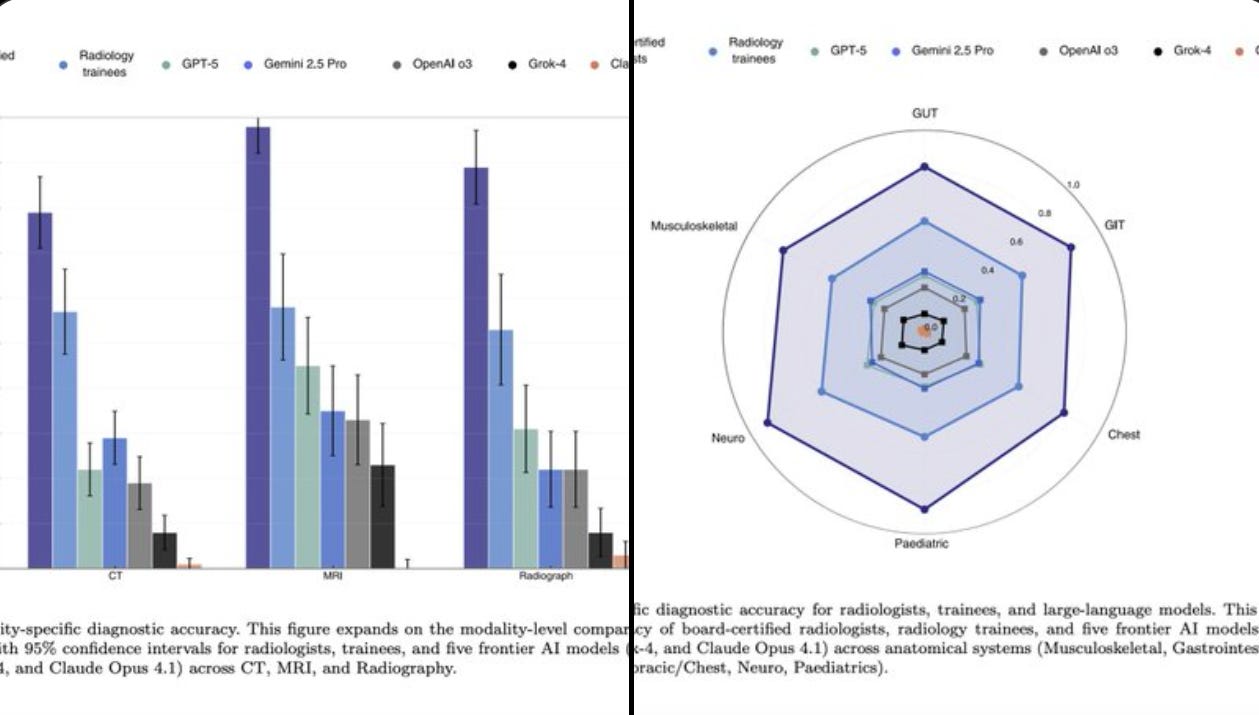

The paper checks whether chatbots can diagnose hard radiology images like experts. It finds that board-certified radiologists scored 83% and trainees 45%, while the best-performing AI from frontier labs, GPT-5, managed only 30%. 😨

The authors conclude that “doctor-level” AI in medicine is still far away. The team built 50 expert-level cases across computed tomography (CT), magnetic resonance imaging (MRI), and X-ray.

Each case had one clear diagnosis and no extra clinical history. They tested GPT-5, OpenAI o3, Gemini 2.5 Pro, Grok-4, and Claude Opus 4.1 in reasoning modes.

Blinded radiologists graded answers as exact, partial, or wrong, then averaged scores. Experts beat trainees, and trainees beat every model by a wide margin.
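A minimal sketch of how such grading could be turned into an accuracy figure. The point values (1.0 for exact, 0.5 for partial, 0.0 for wrong) are my assumption, since the summary above only names the three grades, and the example grades are made up for illustration, not taken from the paper.

```python
from statistics import mean

# Assumed point values; the article only names the three grade labels.
GRADE_POINTS = {"exact": 1.0, "partial": 0.5, "wrong": 0.0}


def mean_score(grades):
    """Average the point values for a list of grade labels."""
    return mean(GRADE_POINTS[g] for g in grades)


# Hypothetical grades for one reader on four cases (not data from the paper).
example_grades = ["exact", "partial", "wrong", "exact"]
print(mean_score(example_grades))  # 0.625
```

Under this assumed scheme, a headline number like 30% would be the mean of these per-case points across all 50 cases.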

More reasoning barely helped accuracy, but it made replies about 6x slower. Models did best on MRI and struggled more on CT and X-ray.

To explain mistakes, the authors built an error map covering missed or false findings, wrong location or meaning, early closure, and contradictions with the final answer. They also saw thinking traps like anchoring, availability bias, and skipping relevant regions. General purpose models are not ready to read hard cases without expert oversight.

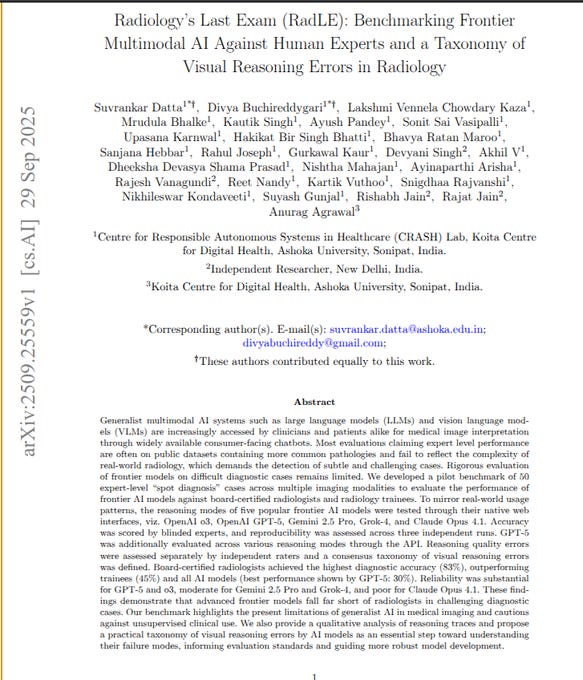

They built RadLE v1: a benchmark of 50 expert-level spot diagnosis cases deliberately designed to mimic board-exam style difficulty, not simplified datasets.

Cases span 3 modalities (CT, MRI, X-ray) and 6 systems (neuro, chest, GI, GU, MSK, pediatrics).

The set was deliberately spectrum-biased toward the hardest cases, the ones that separate novices from experts.

They found humans held the clear edge over frontier AI!

Radiologists led with 83% (95% CI 75–90%), trainees followed at 45% (39–52%), while no AI exceeded 30% (GPT-5 30%, Gemini 2.5 Pro 29%).

Claude Opus 4.1 collapsed to just 1%.

Statistically overwhelming: Friedman χ² = 336, p < 10⁻⁶⁴.
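For readers who have not met the Friedman test: it ranks the readers within each case and asks whether some readers consistently rank higher than others. Here is a rough pure-Python sketch of the basic statistic, without the tie correction that statistical packages usually apply. The function names and the toy scores are mine, purely illustrative, and not the paper's data or code.

```python
def average_ranks(values):
    """Rank values ascending (1 = lowest); tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def friedman_chi2(rows):
    """Friedman chi-square for rows = cases, columns = readers (no tie correction)."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for col, rank in enumerate(average_ranks(row)):
            rank_sums[col] += rank
    return 12.0 * sum(r * r for r in rank_sums) / (n * k * (k + 1)) - 3.0 * n * (k + 1)


# Toy scores: 3 cases x 3 readers, where the third reader always ranks highest.
toy_scores = [[0.0, 0.5, 1.0]] * 3
print(friedman_chi2(toy_scores))  # 6.0
```

With perfectly consistent rankings the statistic hits its maximum of n(k-1), which is 6 for this toy input. A value as large as 336 signals that the ordering of readers was highly consistent across cases.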

⚠️ Even when tested by modality and system, the pecking order never shifted:

Human radiologists led with 79% on CT, 98% on MRI, and 89% on X-ray. GPT-5 reached 45% on MRI, the top AI mark, but it could not match humans. Across all 6 systems, radiologists stayed ahead of trainees, and trainees stayed ahead of AI, always by a margin of at least 0.25.

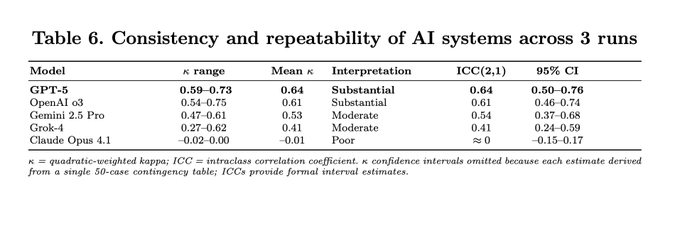

They cranked up GPT-5 using API “reasoning effort” modes (low, medium, high).

Net effect: accuracy stayed stuck at 25% to 26%, but latency blew up roughly 6x, from 10s to 65s per case, on top of rising compute costs.
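A quick back-of-the-envelope check of that trade-off. I am assuming the 25% figure belongs to the fastest setting and 26% to the slowest, which the numbers above do not spell out; the helper names are hypothetical.

```python
def slowdown_factor(fast_seconds, slow_seconds):
    """How many times slower the slow setting is than the fast one."""
    return slow_seconds / fast_seconds


def accuracy_gain_points(low_pct, high_pct):
    """Accuracy difference in percentage points."""
    return high_pct - low_pct


print(slowdown_factor(10, 65))       # 6.5
print(accuracy_gain_points(25, 26))  # 1
```

So under that assumption, each case costs about 55 extra seconds for at most one percentage point of accuracy.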

Consistency readout: GPT-5 and o3 had substantial repeatability (ICC around 0.6), Gemini and Grok landed at moderate, and Claude was close to random.

Clinical reliability demands excellent repeatability.

🏆 Gartner Survey Finds Just 15% of IT Application Leaders Are Considering, Piloting, or Deploying Fully Autonomous AI Agents

The survey says leaders expect AI agents to boost productivity, but most are not ready to let them run on their own. Gartner treats fully autonomous agents as goal-driven tools that operate without human oversight, which is the part creating the hesitation.

While 75% are piloting or deploying some kind of agent, only 15% are considering or moving ahead with systems that make decisions on their own. Trust and security are the choke points: only 19% have high trust in a vendor’s safeguards against hallucinations, and 74% see agents as a new attack path.

Internal readiness is also thin: only 13% strongly agree their governance and controls are ready for agents at scale. On productivity expectations the picture is confident but restrained: 20% expect marginal gains, 53% expect a strong boost that stops short of a step change, 26% expect a step change, 1% expect no impact, and 0% expect a decline.

📡 Now Google’s Nano Banana is available in Gemini CLI Extension.

So you can do image generation, editing, and restoration directly from the Gemini CLI.

It also adds specialized commands for creating icons, patterns, textures, visual stories, and technical diagrams. Each command supports advanced options like multiple variations, style choices, and preview modes, so users can fine-tune results quickly.

Under the hood, it uses the Model Context Protocol SDK for structured client-server handling and integrates with the Gemini image generation API. It also includes clear error handling, debugging tools, and fallback systems for when images or responses fail.

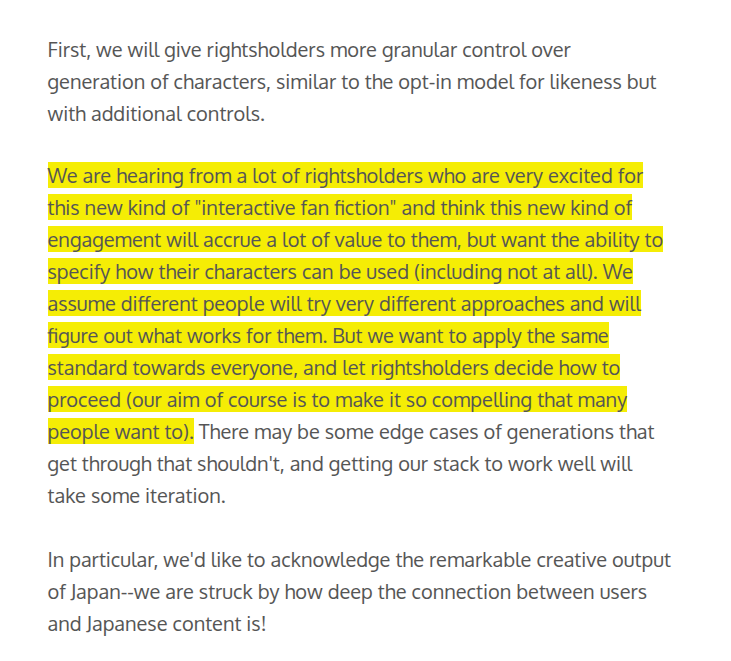

🎬 Sam Altman announced that OpenAI will give rightsholders more ‘granular’ control over how their characters appear in Sora videos and may share revenue from those uses.

This helps avoid unauthorized use of copyrighted or famous characters in AI-generated clips. OpenAI also plans to test a revenue-sharing system where rightsholders who allow their content to be used receive part of the income from video generation. This is because people are making far more videos than expected, often for small audiences, so OpenAI needs a sustainable way to pay for the compute costs.

The approach will start with some trial and error, similar to how ChatGPT evolved in its early stages. They’ll refine the process and apply what works across other OpenAI products.

This move is meant to balance creator freedom with legal and ethical protection. It could also make Sora more appealing to major studios and independent creators, since both control and compensation are built into the platform.

OpenAI has faced pressure from studios and IP holders. Some major rights owners (e.g. Disney) have reportedly opted out of letting their characters be used.

In my opinion, technically enforcing these rules is tricky. Systems must detect when a generated video uses a protected character in violation of the rightsholder’s rules. False positives and missed detections will happen.

🎯 Jeff Bezos explains how the AI boom is a ‘good’ kind of bubble that will benefit the world

He compared today’s AI boom to the dotcom era’s spending on fiber optics and the biotech wave of the 1990s, where even though many companies failed, the technology and discoveries that remained were hugely valuable.

He said AI’s impact is “real” and will change every industry, though investors right now may struggle to tell the difference between strong ideas and weak ones. He also predicted that in the coming decades millions of people will live in space, with robots handling much of the work and massive solar-powered AI data centers operating there.

That’s a wrap for today, see you all tomorrow.
