"Beyond Prompt Content: Enhancing LLM Performance via Content-Format Integrated Prompt Optimization"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2502.04295

This paper addresses the overlooked problem of prompt formatting in LLM performance. Current methods mainly focus on prompt content, ignoring format's crucial role.

This paper proposes Content-Format Integrated Prompt Optimization (CFPO). CFPO iteratively refines both prompt content and format to boost LLM performance.

-----

📌 CFPO effectively tackles prompt optimization as a joint content-format problem. Iterative refinement using distinct optimizers for content and format is technically sound and practically impactful.

📌 Dynamic format exploration via Upper Confidence Bounds applied to Trees and LLM generation in CFPO is a key advancement. It smartly navigates the expansive format search space, unlike static methods.

📌 CFPO demonstrates significant performance gains, achieving 53.22% on GSM8K with Mistral-7B-v0.1. This empirically validates the importance of integrated content-format optimization, especially for pre-trained models.

----------

Methods Explored in this Paper 🔧:

→ Introduces Content-Format Integrated Prompt Optimization (CFPO). CFPO is a new method for optimizing LLMs.

→ CFPO jointly optimizes both prompt content and format. It uses an iterative refinement process.

→ CFPO uses separate optimizers for content and format. This acknowledges their interdependence.

→ Content optimization uses performance feedback. It also uses Monte Carlo sampling and natural language mutations. This enhances prompt effectiveness.

→ Format optimization explores format options. It uses a dynamic strategy to find optimal formats. It avoids prior format knowledge.

→ CFPO uses a structured prompt template. This template separates prompts into content and format components.

→ The template includes Task Instruction, Task Detail, Output Format, and Examples as content components.

→ It includes Query Format and Prompt Renderer as format components.

→ CFPO's format optimizer uses a format pool. This pool contains Prompt Renderer and Query Format configurations.

→ A scoring system evaluates each format's performance. This system is updated across different prompt contents.

→ CFPO uses an LLM-assisted format generator. This generator creates new formats based on the existing pool.

→ The format optimizer uses Upper Confidence Bounds applied to Trees (UCT). UCT balances exploration of new formats and exploitation of effective ones.

→ CFPO iteratively optimizes content and format. This integrated approach aims to find their best combination.

-----

Key Insights 💡:

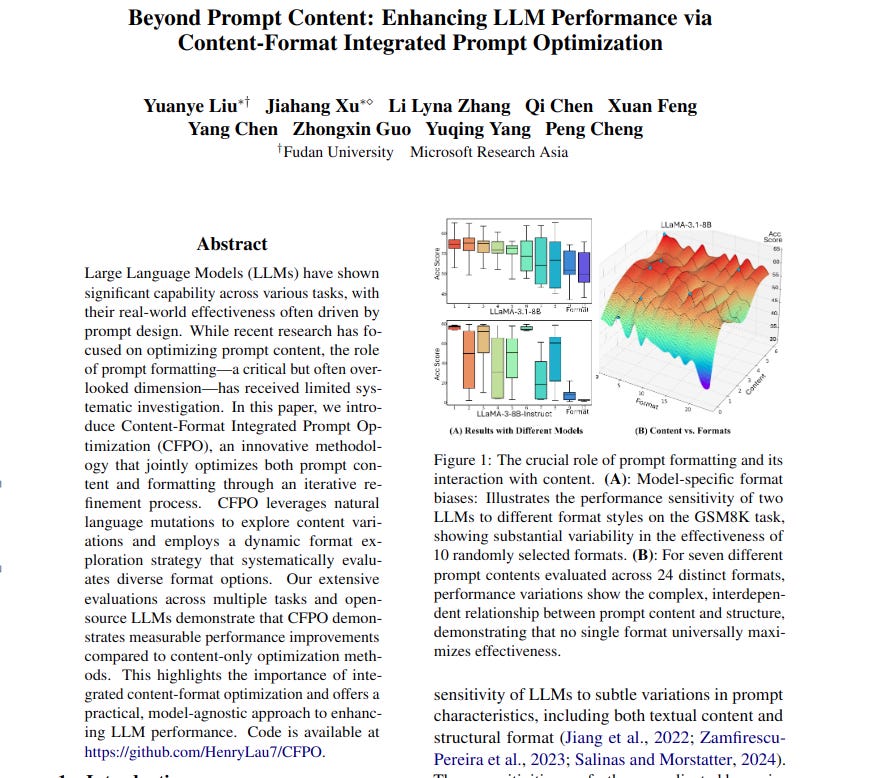

→ Prompt formatting significantly impacts LLM performance. Different LLMs show format preferences.

→ No single prompt format works best across all contents. Content and format are interdependent.

→ Joint optimization of prompt content and format is crucial. This leads to measurable performance gains.

→ CFPO's dynamic format exploration is effective. It enhances prompt quality and diversity.

-----

Results 📊:

→ CFPO outperforms baseline methods like GRIPS, APE, ProTeGi, and SAMMO across tasks.

→ On GSM8K, CFPO achieves 53.22% accuracy with Mistral-7B-v0.1. Baselines are significantly lower.

→ On MATH-500, CFPO reaches 44.20% accuracy with Phi-3-Mini-Instruct. Baselines are again lower.

→ On ARC-Challenge, CFPO achieves 88.23% accuracy with Phi-3-Mini-Instruct. Outperforming baselines.

→ On Big-Bench Classification, CFPO achieves 94.00% accuracy with Mistral-7B-v0.1. Showing superior performance.

→ Ablation studies show integrated content and format optimization is key. CFPO variants without format optimization underperform.

→ CFPO with format generation outperforms variants without it. This highlights format generation's effectiveness.

→ UCT-based format selection in CFPO is more effective. It outperforms random and greedy format selection.