🧠 Brilliant New Google Research Decoded In-Context Learning

Google cracks how in-context learning works, Qwen3 outsmarts all other open-source models, Grok enters prediction markets, and Sam Altman drops wild AI takes in new podcast.

Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (25-July-2025):

🧠 Brilliant New Google Research Decoded In-Context Learning

🏆 Qwen’s next big open source release: Qwen3-235B-A22B-Thinking-2507 tops OpenAI, Gemini reasoning models on key benchmarks

🔮 Grok steps into prediction markets with xAI, Kalshi partnership

🧑🎓 This 1.5-hour podcast by Sam Altman with Theo Von has a lot of super interesting predictions.

🧠 Brilliant New Google Research Decoded In-Context Learning

This new GoogleResearch paper is probably the most beautiful exploration of ICL (In Context Learning).

LLMs can learn in context from examples in the prompt, can pick up new patterns while answering, yet their stored weights never change. That behavior looks impossible if learning always means gradient descent.

The mechanisms through which this can happen are still largely unknown. The authors ask whether the transformer’s own math hides an update inside the forward pass.

They show, each prompt token writes a rank 1 tweak onto the first weight matrix during the forward pass, turning the context into a temporary patch that steers the model like a 1‑step finetune.

Because that patch vanishes after the pass, the stored weights stay frozen, yet the model still adapts to the new pattern carried by the prompt.

Overall, it signifies that If a model is capable of dynamically adjusting its behavior at the prompt level without changing its weights, then adding memory and a persistent adaptive layer on top of this leads to something more: a model that begins to live within your reality, forming shared beliefs and effectively fine-tuning on the human, even though the actual weights remain untouched.

In this way, we get either a deeply, meaningful, personalized interaction, or, if the signals are distorted, we risk feeding the model our own delusions, which it may eventually absorb as truth.

🧭 So Overall, Where This Capability Takes Us

Large language models can pick up new tricks on the fly because every token in the prompt writes a rank 1 “sticky note” onto the first weight matrix during the forward pass, then tosses that note away when the pass ends.

This note is algebraically identical to one tiny gradient‑descent step on a proxy loss, so stacking prompt tokens looks like walking a path in weight space without ever saving those steps to disk.

Why this matters for everyday users

A low‑rank note is just two skinny vectors, so developers can save a skill such as legal Q&A or medical triage in a file that is often under 1 MB.

Loading that file at inference time adds the outer‑product to the frozen weights in milliseconds, delivers responses that match a full fine‑tune, and then vanishes, leaving the base model clean.

Because the base model stays on disk in 4‑bit form, even a single 48 GB GPU can run models that once demanded racks of hardware, and the adapter keeps its original 16‑bit precision where it counts.

A serving stack can juggle hundreds of these micro‑skills per model session with only a small hit to throughput, so one service can hand each user a custom mix of domain expertise on demand.

Prompt‑time patches also boost privacy and agility: a phone or browser can build and apply a personal adapter locally, share nothing upstream, and still get near finetune accuracy.

For teams that simply want zero‑code tweaks, the same idea explains why a few well‑chosen examples in the prompt often beats hours of parameter tuning: the model is silently wiring those examples into a one‑step weight update while you watch.

Where it pays off in practice

Fast verticals – spin up a finance, health, or legal persona by swapping in the matching adapter, answer, then swap back.

Edge deployment – keep the 4‑bit base model on device, stream tiny task adapters when needed, stay under tight memory caps.

Secure scenarios – apply a customer’s private adapter in RAM, generate, and drop it so nothing persists.

Rapid A/B – compare draft adapters side by side without downtime or risky weight merges.

Interpretability – inspect or even edit the single rank 1 patch to see which direction in weight space a prompt is pushing.

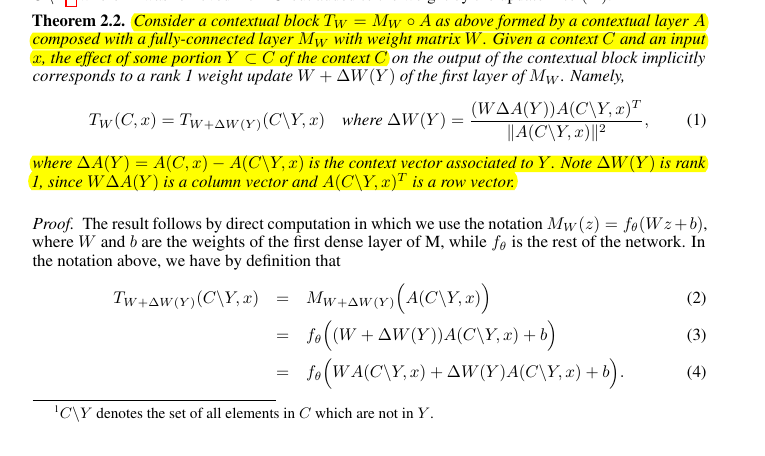

⚙️ The Core Idea: They call any layer that can read a separate context plus a query a “contextual layer”. Stack this layer on top of a normal multilayer perceptron and you get a “contextual block”.

For that block, the context acts exactly like a rank 1 additive patch on the first weight matrix, no matter what shape the attention takes.

🧩 How the Patch Works: Theorem 2.2 shows a formula, multiply the base weights by the context change vector, then project it with the query representation, boom, you get the patch. Because the patch is rank 1, it stores almost no extra parameters yet still carries the full prompt signal. So the network behaves as if it fine‑tuned itself, even though no optimizer ran.

📐 Hidden Gradient Descent: Feeding tokens one by one stacks these tiny patches. Proposition 3.1 proves each added token shifts the weights the same way online gradient descent would, with a step size tied to the query vector length. The shift shrinks as soon as a token stops adding new info, matching the feel of a converging optimizer.

🔬 Testing on Simple Linear Tasks: They train a small transformer to map x→w·x using 50 prompt pairs plus 1 query. When they swap the prompt for its equivalent rank 1 patch and feed only the query, the loss curve overlaps the full‑prompt run almost perfectly. That overlap stays tight through 100 training steps.

📊 Watching the Patch Shrink: They measure the norm change between successive patches as more prompt tokens arrive.

The curve drops toward 0, confirming the implicit learning calms down once the model has absorbed the context.

🤝 Finetune vs. Implicit Patch: They compare classic gradient finetuning on the same examples to the single‑shot patch strategy.

Both methods cut test loss in a similar pattern, yet the patch avoids any real back‑prop and keeps the rest of the network frozen.

🔎 Limits They Admit: Results cover only the first generated token and one transformer block without MLP skip, so full‑stack models need more work.

Still, the finding hints that many in‑context tricks come from weight geometry rather than quirky attention rules.

Why the paper calls it “gradient descent” even though there’s no re-training??

This is the key math for that.

The authors define a per‑token loss Li(W)=trace(Δiᵀ W) where Δi is the change in attention output caused by adding token i. Taking the matrix gradient of that trace gives ∇W Li=Δi.

Plug this into an online update W←W−h ∇W Li with learning rate h=1/‖A(x)‖² and you get the same closed‑form patch they derive in Theorem 2.2.

So the “gradient” lives in activation space, not in a loss computed after seeing a label. That is why no explicit error term is required.

The update direction comes from the difference between “context+token” and “token alone”, which acts like a proxy error.

Outer‑product stacking gives a sequence of tiny steps that match online gradient descent on that proxy loss.

Because the patch is applied on‑the‑fly and discarded right after generation, the checkpoint on disk never changes.

This view aligns with other results showing transformers, state‑space models, and even RNNs can encode learning algorithms inside forward computation rather than in back‑prop weight edits.

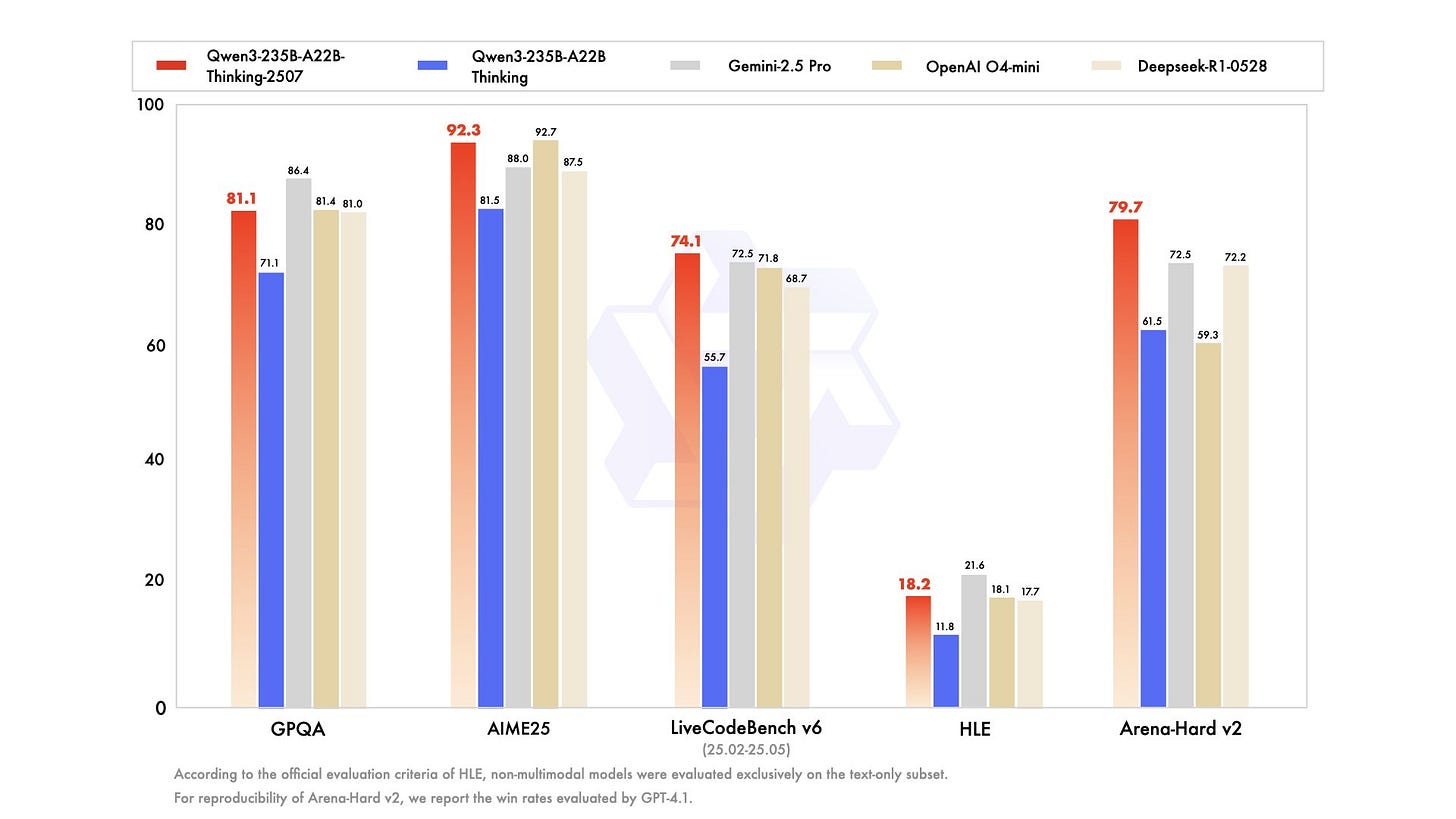

🏆 Qwen’s next big open source release: Qwen3-235B-A22B-Thinking-2507 tops OpenAI, Gemini reasoning models on key benchmarks

Alibaba’s Qwen Team just released its strongest reasoning model, and it is at the frontier.

On AIME25 math it posts 92.3, almost level with OpenAI o3 and above most open models. Apache‑2.0

packs 235B total parameters, fires only 22B per token by using a Mixture‑of‑Experts layout.

128 total experts number 128, with 8 picked each step to trim compute while keeping breadth of knowledge.

The native context window stretches to 262K tokens, letting complete books fit in one prompt.

every response starts inside a hidden <think> block and ends at </think>, so the reasoning trail is always exposed.

GPQA shows 81.1, only a few points shy of Gemini‑2.5 Pro.

MMLU‑Redux clocks 93.8, placing it near the closed‑source leaders.

LiveCodeBench v6 records 74.1, edging Gemini‑2.5 Pro 72.5 and GPT‑4 mini 71.8.

User‑preference Arena‑Hard v2 win rate lands at 79.7%, just behind OpenAI O3 80.8% and well above Deepseek‑R1.

MultiIF multilingual eval hits 80.6%, beating most open competitors except the very top closed ones.

Qwen‑Agent templates offer drop‑in JSON function calling, turning REST endpoints into natural‑language tools.

With open weights, transparent reasoning, and benchmark scores neck‑and‑neck with GPT‑4 class models, Qwen3‑235B‑A22B‑Thinking‑2507 stands as the strongest freely available reasoning LLM today.

📲 Google AI Labs released Opal experiment where users can "build and share AI mini-apps by linking together prompts, models, and tools"

Google Labs just rolled out Opal, a US‑only public beta that turns plain language into shareable AI mini apps in a snap. Opal chains prompts, models, and external tools into a visual workflow so anyone can build useful AI helpers without writing code.

Opal starts by letting users describe a workflow goal. The system then draws a flowchart of every step, from prompt crafting to model calls and tool integrations. Each block can be tweaked in a drag‑and‑drop editor or by chatting new instructions. This mix of text and visuals lowers the usual “read the docs, fight the SDK” barrier that keeps many prototypes stuck on the drawing board.

Finished projects publish as standalone web apps tied to the creator’s Google account. Anyone who opens the shared link can immediately run the mini app, swapping in their own data and settings. A built‑in template gallery seeds ideas such as personal report generators, spreadsheet summarizers, or support ticket triagers, all powered by Gemini or any other model available through Google’s tooling.

Because everything happens inside Google’s cloud, deployment headaches disappear. There is no server setup, no authentication glue, and no version skew to chase. That makes Opal handy for fast proofs of concept or quick internal tools where speed matters more than pixel‑perfect UI polish. Developers gain a playground to test chaining logic, while non‑coders get a taste of building with AI instead of just using it.

🔮 Grok steps into prediction markets with xAI, Kalshi partnership

xAI is partnering with Kalshi to provide real-time AI-generated insights for prediction markets.

will enable xAI to process news articles and historical data, providing tailored insights and context for Kalshi users betting on real-world events, including central bank decisions, political races, and global affairs.

Earlier this year, xAI and X named Polymarket — an unregulated crypto-based competitor to Kalshi — as their official prediction market partner.

Now, with Kalshi and Polymarket effectively operating in parallel under Musk’s orbit, the market appears to be a testing ground for Grok’s AI capabilities across different regulatory frameworks.

i.e Grok will learn in two very different sandboxes.

Prediction markets let people trade tiny contracts that pay out if a real‑world event happens. Until now most traders sifted articles and social chatter by hand, which is slow and misses shifts in mood. Grok will scan news, X posts, and historical numbers in real time, then push a short probability readout next to each Kalshi contract, shaving research time to seconds.

🧑🎓 This 1.5-hour podcast by Sam Altman with Theo Von has a lot of super interesting predictions.

Traditional college will be obsolete for today’s babies.

GPT‑5 already outperforms him on specialized tasks and could manage the full CEO workload “not that long” from now, so automated leadership of large companies is approaching

The same capability gap (between AI and human) will extend across many roles, because future models will reason, write code, negotiate and plan faster than human staff, which means a significant slice of today’s white‑collar work will migrate to AI agents.

A permanent intelligence gap between people and machines is anticipated, and an AI system could soon take over the OpenAI CEO role, normalizing automated top leadership.

Universal extreme wealth, achieved through public ownership of AI output, will be preferred over universal basic income.

GPT‑5 solved a hard problem instantly, making Altman feel useless and previewing the jolt many workers may experience.

Nuclear fusion will essential for meeting AI’s future power demand, linking energy breakthroughs with rising intelligence.

Artificial wombs may replace natural pregnancy within 40 years, turning childbirth into a biotech process.

That’s a wrap for today, see you all tomorrow.