Can we only use guideline instead of shot in prompt?

Smart agents extract and combine task rules, helping LLMs reason better without examples.

Feedback, Guideline, and Tree-gather agents (FGT)

AI learns its own rulebook: no more spoon-feeding examples to language models

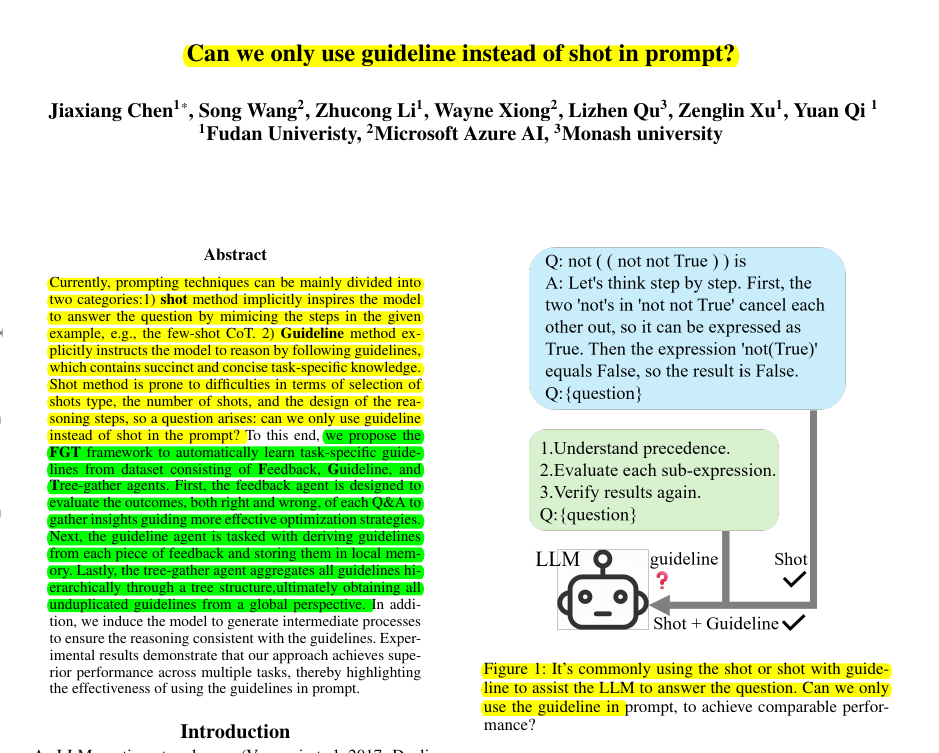

Original Problem 🔍:

Can we use only guidelines instead of few-shot examples in prompts for LLMs?

Solution in this Paper 🧠:

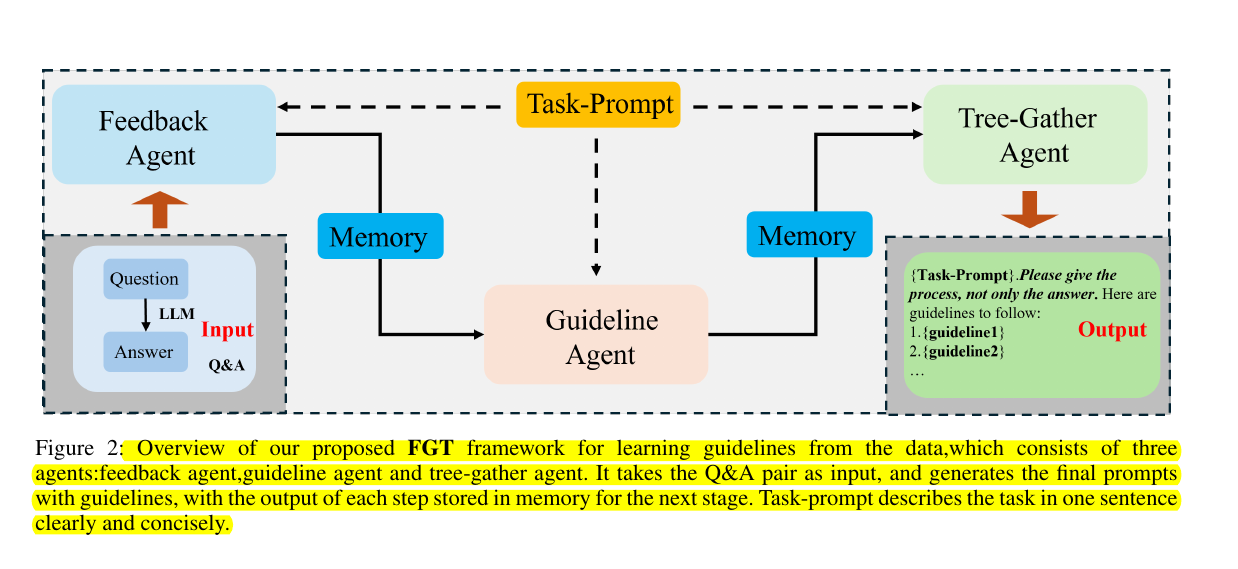

• Feedback, Guideline, and Tree-gather agents (FGT) framework: three cooperating agents that learn task-specific guidelines automatically

• Feedback agent: Analyzes Q&A pairs for performance insights

• Guideline agent: Extracts guidelines from feedback

• Tree-gather agent: Hierarchically aggregates guidelines

• Process prompt: Encourages the LLM to show its reasoning steps
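
To make the flow between the three agents concrete, here is a minimal sketch of how they could be chained. It is not the paper's implementation: the `llm` and `solver` callables, the prompt wording, the `fan_out` parameter, and all function names are assumptions made up for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


def feedback_agent(llm: LLM, question: str, answer: str, prediction: str) -> str:
    """Analyze one Q&A pair plus the model's attempt; return free-text feedback."""
    prompt = (
        "Question: " + question + "\n"
        "Reference answer: " + answer + "\n"
        "Model answer: " + prediction + "\n"
        "Explain what the model did well or badly."  # assumed wording
    )
    return llm(prompt)


def guideline_agent(llm: LLM, feedback: str) -> str:
    """Turn one piece of feedback into a reusable guideline."""
    return llm("Turn this feedback into one general guideline:\n" + feedback)


def tree_gather(llm: LLM, guidelines: List[str], fan_out: int = 3) -> str:
    """Merge guidelines level by level until a single summary remains."""
    if fan_out < 2:
        raise ValueError("fan_out must be at least 2")
    if not guidelines:
        return ""
    level = list(guidelines)
    while len(level) > 1:
        merged = []
        for i in range(0, len(level), fan_out):
            group = level[i:i + fan_out]
            if len(group) == 1:
                merged.append(group[0])  # nothing to merge, pass it up a level
            else:
                merged.append(llm("Merge these guidelines:\n" + "\n".join(group)))
        level = merged
    return level[0]


def learn_guidelines(
    llm: LLM,
    solver: Callable[[str], str],
    train_pairs: List[Tuple[str, str]],
    fan_out: int = 3,
) -> str:
    """Feedback -> Guideline -> Tree-gather over a set of Q&A pairs."""
    guidelines = []
    for question, answer in train_pairs:
        prediction = solver(question)
        feedback = feedback_agent(llm, question, answer, prediction)
        guidelines.append(guideline_agent(llm, feedback))
    return tree_gather(llm, guidelines, fan_out)
```

Merging in small groups keeps each aggregation prompt short, which is one plausible reason to prefer a tree over a single flat merge; the group size of 3 here is arbitrary.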

Key Insights from this Paper 💡:

• Automatically learned guidelines can replace few-shot examples

• Tree-gather approach improves guideline aggregation

• Process prompt enhances guideline adherence and accuracy

• Effective for structured reasoning tasks (math, logic)
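
As a rough illustration of what "guidelines instead of shots" looks like at inference time, the sketch below assembles a prompt from learned guidelines and a process prompt, with no worked examples. The process-prompt wording, the layout of the prompt, and the function name are assumptions, not text from the paper.

```python
from typing import List

# Assumed wording; the paper's actual process prompt is not reproduced here.
PROCESS_PROMPT = (
    "Follow the guidelines above. Show each reasoning step "
    "before giving the final answer."
)


def build_guideline_prompt(
    question: str,
    guidelines: List[str],
    process_prompt: str = PROCESS_PROMPT,
) -> str:
    """Build a zero-shot prompt: numbered guidelines, process prompt, question."""
    numbered = "\n".join(
        f"{i}. {g}" for i, g in enumerate(guidelines, start=1)
    )
    return (
        "Guidelines:\n" + numbered + "\n\n"
        + process_prompt + "\n\n"
        + "Question: " + question + "\nAnswer:"
    )


if __name__ == "__main__":
    print(build_guideline_prompt(
        "What is 17 * 6?",
        ["Break multiplication into smaller parts.", "Re-check the final sum."],
    ))
```

The only per-question content is the question itself, so the guideline block can be learned once per task and reused.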

Results 📊:

• Outperforms baselines on the BIG-Bench Hard (BBH) dataset

• Math calculation: 89.5% accuracy (vs 88.3% Leap)

• Logic reasoning: 93.9% accuracy (vs 89.1% Leap)

• Context understanding: 88.1% accuracy (vs 87.3% Leap)

• Surpasses few-shot and many-shot methods in most tasks
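
For a quick sense of the margins, the reported accuracies can be turned into percentage-point gaps over Leap. The numbers are the ones listed above; the dictionary keys and function name are just illustrative labels.

```python
from typing import Dict, Tuple

# (FGT accuracy, Leap accuracy) in percent, as reported in the results above.
REPORTED: Dict[str, Tuple[float, float]] = {
    "math": (89.5, 88.3),
    "logic": (93.9, 89.1),
    "context": (88.1, 87.3),
}


def margins(results: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Percentage-point gap between FGT and Leap for each task category."""
    return {task: round(fgt - leap, 1) for task, (fgt, leap) in results.items()}


if __name__ == "__main__":
    print(margins(REPORTED))  # {'math': 1.2, 'logic': 4.8, 'context': 0.8}
```

The gap is largest on logic reasoning (4.8 points) and around one point on the other two categories.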

🧠 The paper investigates whether guidelines alone can be used effectively in prompts for LLMs, without relying on few-shot examples.

It explores whether automatically learned, task-specific guidelines can match or outperform traditional few-shot and chain-of-thought prompting.