🇨🇳 China’s Alibaba unveils open-source AI model Qwen3-Omni, takes on U.S. tech giants

China fires back in the AI race with Qwen3-Omni and LongCat-Flash-Thinking, while OpenAI and NVIDIA gear up for the biggest AI infra build ever.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (23-Sept-2025):

🇨🇳 China’s Alibaba unveils open-source AI model Qwen3-Omni, takes on U.S. tech giants

📢 NVIDIA and OpenAI are joining forces for "THE biggest AI infrastructure project in history."

💰 "How Nvidia Is Backstopping America’s AI Boom"

🇨🇳 LongCat-Flash-Thinking, a newly announced Chinese reasoning model, has achieved SOTA benchmark performance for open-source AI.

🇨🇳 China’s Alibaba unveils open-source AI model Qwen3-Omni, takes on U.S. tech giants

Qwen3-Omni is natively multimodal: unlike earlier models that bolted speech or vision onto a text-first model, it integrates all modalities from the start, allowing it to process inputs and generate outputs while maintaining real-time responsiveness.

🏆 SOTA on 22/36 audio & AV benchmarks

🌍 119 languages for text / 19 for speech input / 10 for speech output

⚡ 211ms latency. This makes conversations, especially voice and video chats, feel instant and natural.

🎧 Can process and understand up to 30 minutes of audio at once, allowing you to ask questions about long recordings, meetings, or podcasts.

🎨 Fully customizable via system prompts

🔗 Built-in tool calling

🎤 Open-source Captioner model (low-hallucination!)

📌 Context and Limits

Context length: 65,536 tokens in Thinking Mode; 49,152 tokens in Non-Thinking Mode

Maximum input: 16,384 tokens

Maximum output: 16,384 tokens

Longest reasoning chain: 32,768 tokens

Free quota: 1 million tokens (across all modalities), valid for 90 days after activation

📌 This model will unlock a very wide range of use cases:

Real-time speech-to-speech assistants for customer support, tutoring, or accessibility

Cross-language chat and voice translation across 100+ languages

Meeting transcription, summarization, and captioning of audio/video (up to 30 mins audio)

Generating captions and descriptions for audio and video content

Tool-integrated agents that can call APIs or external services

Personalized AI characters or assistants with customizable styles and behaviors

📌 Three distinct versions of Qwen3-Omni-30B-A3B, each serving different purposes.

The Instruct model is the most complete, combining both the Thinker and Talker components to handle audio, video, and text inputs and to generate both text and speech outputs. The Thinking model focuses on reasoning tasks and long chain-of-thought processing; it accepts the same multimodal inputs but limits output to text, making it more suitable for applications where detailed written responses are needed.

The Captioner model is a fine-tuned variant built specifically for audio captioning, producing accurate, low-hallucination text descriptions of audio inputs.

Architecture and Design of Qwen3-Omni

Qwen3-Omni runs on a Thinker-Talker setup. The Thinker handles reasoning and multimodal understanding, while the Talker turns outputs into natural audio speech. Both pieces use Mixture-of-Experts (MoE) to keep high concurrency and fast inference.

The Talker does not depend on the Thinker’s text. It conditions directly on audio and visual features, which makes audio and video line up more naturally and helps keep prosody and timbre during translation.

This split also lets outside tools, like retrieval or safety filters, step in on the Thinker’s output before the Talker speaks it. Speech comes from a multi-codebook autoregressive method plus a lightweight Code2Wav ConvNet, so latency drops while vocal detail stays clear. Streaming is the core idea: the first audio packet arrives in 234 ms (0.234 s), or in 547 ms (0.547 s) for video, and the real-time factor (RTF) stays below 1 even when there are multiple requests at once. The model covers 119 languages for text, 19 for speech input, and 10 for speech output, including dialects like Cantonese.
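To make the "under 1 RTF" figure concrete, here is a minimal sketch of how real-time factor is commonly computed: the time spent generating audio divided by the duration of the audio produced. The function name and the sample timings are hypothetical illustrations, not numbers from Qwen's report.

```python
def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """RTF = time spent generating / duration of audio generated.

    RTF below 1 means speech is produced faster than it is played back,
    so a streaming listener never has to wait for the next chunk.
    """
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return generation_seconds / audio_seconds


# Hypothetical example: 0.8 s of compute to produce 2.0 s of speech.
rtf = real_time_factor(generation_seconds=0.8, audio_seconds=2.0)
keeps_up_with_playback = rtf < 1.0
print(f"RTF = {rtf:.2f}, keeps up with playback: {keeps_up_with_playback}")
```

First-packet latency (the 234 ms and 547 ms figures) is a separate measure: it says how long the user waits for the first sound, while RTF says whether the stream can keep going without stalling.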

Pricing via API of Qwen3-Omni:

Through Alibaba's API, billing is calculated per 1,000 tokens. Thinking Mode and Non-Thinking Mode share the same pricing, although audio output is only available in Non-Thinking Mode.

📌 Input Costs:

Text input: $0.00025 per 1K tokens (≈ $0.25 per 1M tokens)

Audio input: $0.00221 per 1K tokens (≈ $2.21 per 1M tokens)

Image/Video input: $0.00046 per 1K tokens (≈ $0.46 per 1M tokens)

📌 Output Costs:

Text output:

$0.00096 per 1K tokens (≈ $0.96 per 1M tokens) if input is text only

$0.00178 per 1K tokens (≈ $1.78 per 1M tokens) if input includes image or audio

Text + Audio output:

$0.00876 per 1K tokens (≈ $8.76 per 1M tokens) — audio portion only; text is free
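For a rough sense of what a single request costs, the price list above can be turned into a small estimator. This is a sketch under stated assumptions: the rates are copied from the list, but the helper name `estimate_cost`, its parameters, and the example token counts are hypothetical, and actual billing may differ in details not covered here.

```python
# USD per 1M tokens, copied from the price list above.
PRICE_PER_M = {
    "text_in": 0.25,
    "audio_in": 2.21,
    "image_video_in": 0.46,
    "text_out_text_only_input": 0.96,
    "text_out_multimodal_input": 1.78,
    "audio_out": 8.76,  # with audio output, the text portion is free
}


def estimate_cost(text_in=0, audio_in=0, image_video_in=0,
                  text_out=0, audio_out=0):
    """Estimate the USD cost of one request (hypothetical helper)."""
    cost = (
        text_in * PRICE_PER_M["text_in"]
        + audio_in * PRICE_PER_M["audio_in"]
        + image_video_in * PRICE_PER_M["image_video_in"]
    )
    if audio_out > 0:
        # Text + audio output: only the audio portion is billed.
        cost += audio_out * PRICE_PER_M["audio_out"]
    else:
        # Text-only output: the rate depends on whether the input had media.
        has_media = audio_in > 0 or image_video_in > 0
        key = ("text_out_multimodal_input" if has_media
               else "text_out_text_only_input")
        cost += text_out * PRICE_PER_M[key]
    return cost / 1_000_000


# Hypothetical request: a short audio clip plus a prompt, answered in text.
print(f"${estimate_cost(text_in=500, audio_in=10_000, text_out=800):.6f}")
# $0.023649
```

Audio dominates the bill in both directions, so for voice-heavy workloads the audio token counts are the numbers worth measuring first.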

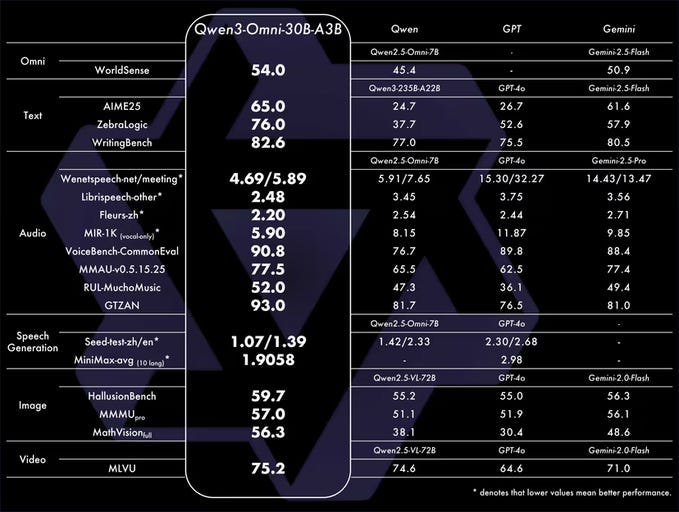

Benchmark Results of Qwen3-Omni

Across 36 benchmarks, Qwen3-Omni achieves state-of-the-art on 22 and leads open-source models on 32.

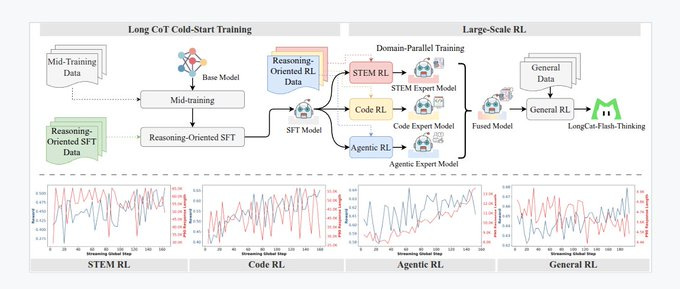

Training details of Qwen3-Omni

Qwen3-Omni’s build was split into pretraining and post-training. The audio side is powered by Audio Transformer (AuT). It was trained on 20M hours of supervised audio, mostly (80%) Chinese and English ASR, plus 10% ASR in other languages, and 10% audio understanding. The final model is a 0.6B parameter encoder that works smoothly in real-time and offline.

Pretraining came in 3 steps

First, encoder alignment where vision and audio encoders were trained while the LLM stayed frozen. Second, a general training run on about 2T tokens, mixing 0.57T text, 0.77T audio, 0.82T images, and smaller video portions. Third, a long context phase that expanded the token limit from 8,192 to 32,768, with more long audio and video added.

Post-training tuned both halves differently. The Thinker got supervised fine-tuning, strong-to-weak distillation, and GSPO optimization using rules plus LLM-as-a-judge. The Talker went through 4 training stages, fed with hundreds of millions of multimodal speech samples and continued pretraining on curated sets, aimed at lowering hallucinations and making speech more natural.

Licensing of Qwen3-Omni

Qwen3-Omni is distributed under Apache 2.0, a flexible license that allows commercial use, modifications, and redistribution. It does not force derivative projects to be open-sourced.

A built-in patent license is included, helping reduce legal risk when embedding it into proprietary systems. For enterprises, the model can be freely added to tools or services with no licensing fees. It can also be adapted to industry-specific needs or local laws, while companies still gain from improvements shared by the wider community.

Use the voice chat and video chat features on Qwen Chat to experience the Qwen3-Omni model. With weights of just ~70GB prior to quantization, this model is quite accessible for people wanting to run it locally.

📢 NVIDIA and OpenAI are joining forces for “THE biggest AI infrastructure project in history.”

They will deploy 10GW of NVIDIA systems for OpenAI, with NVIDIA planning up to $100B of progressive investment. 10GW of AI power is massive, equal to about 10 typical nuclear reactors or about 5 Hoover Dams.

Some well-known research reports say that US AI data center demand could reach 123GW by 2035, up from 4GW in 2024, so 10GW is about 8% of that future load. Net, 10GW sits at the scale of nation-sized energy decisions, matching the biggest corporate power programs and dwarfing most single campuses.
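The share and growth figures follow directly from the numbers quoted above. A quick check, using only those figures (the variable names are mine):

```python
planned_gw = 10         # NVIDIA systems planned for OpenAI
demand_2024_gw = 4      # US AI data center demand in 2024, per the cited reports
demand_2035_gw = 123    # projected demand in 2035, per the cited reports

share_of_2035 = planned_gw / demand_2035_gw
growth_multiple = demand_2035_gw / demand_2024_gw

print(f"Share of projected 2035 demand: {share_of_2035:.1%}")
print(f"Projected demand growth, 2024 to 2035: {growth_multiple:.2f}x")
```

So this single project is about one twelfth of the projected 2035 load, against a backdrop of demand growing roughly 30-fold.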

So for this project, NVIDIA and OpenAI will co-plan hardware, interconnect, and cluster software with training, inference, and data flows. Co-optimization means kernels, compilers, memory, and scheduling line up with model design, so more useful work gets done per watt and per dollar.

The first phase is targeted to come online in the second half of 2026 using the NVIDIA Vera Rubin platform. Funding is milestone based, since NVIDIA invests as each 1GW actually goes live, linking capital to power, buildings, and delivered racks.

Execution will draw on Microsoft, Oracle, SoftBank, and Stargate partners for sites, grid capacity, and procurement at this size. The announcement comes after a series of high-profile investments by Nvidia, including a $5bn investment in Intel and a £2bn investment in the UK's AI sector.

Nvidia’s first investment of $10 billion will be deployed when the first gigawatt is completed, and investments will be made at then-current valuations. Nvidia stock rose almost 4% on Monday, instantly adding close to $170 billion in value to the company’s market cap, which now sits near $4.5 trillion.

Nvidia stock surged above $180/share after the company announced plans to invest up to $100 billion in OpenAI as part of a data center build-out. In August, Huang told investors on an earnings call that building one gigawatt of data center capacity costs between $50 billion and $60 billion, of which about $35 billion is for Nvidia chips and systems.
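Scaling Huang's per-gigawatt figures to the full 10GW gives a sense of the total bill. This is back-of-the-envelope arithmetic that assumes the per-GW costs stay constant across the whole build-out, which the source does not claim:

```python
gigawatts = 10
cost_per_gw_low_bn = 50      # USD billions per GW, low end of Huang's range
cost_per_gw_high_bn = 60     # USD billions per GW, high end
nvidia_per_gw_bn = 35        # USD billions per GW for Nvidia chips and systems

total_low_bn = gigawatts * cost_per_gw_low_bn
total_high_bn = gigawatts * cost_per_gw_high_bn
nvidia_total_bn = gigawatts * nvidia_per_gw_bn

# Nvidia's share of each gigawatt's cost, at both ends of the range.
nvidia_share_min = nvidia_per_gw_bn / cost_per_gw_high_bn
nvidia_share_max = nvidia_per_gw_bn / cost_per_gw_low_bn

print(f"Total build-out: ${total_low_bn}B to ${total_high_bn}B")
print(f"Nvidia chips and systems: ${nvidia_total_bn}B "
      f"({nvidia_share_min:.0%} to {nvidia_share_max:.0%} of the total)")
```

Under that assumption, the $100B Nvidia plans to invest is well under a third of what OpenAI would spend on Nvidia hardware alone.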

💰 "How Nvidia Is Backstopping America’s AI Boom"

A great article was published by WSJ analyzing how NVIDIA is single-handedly pushing the AI boom.

Nvidia is investing up to $100B in OpenAI to build 10GW of Nvidia-powered AI data centers, locking in GPU demand while giving OpenAI cheaper capital and a direct supply path. This is vendor financing: Nvidia swaps funding and its brand strength for long-term chip orders, a pattern critics call circularity.

NewStreet Research analysts tried to model how Nvidia’s investment into OpenAI plays out financially. They found that for every $10B Nvidia invests into OpenAI, OpenAI in turn commits to spending about $35B on Nvidia chips over time.

So Nvidia isn’t giving money away for free. It’s basically pre-funding its own demand. OpenAI gets cheaper access to capital, and Nvidia gets guaranteed GPU orders in return.

The trade-off is that Nvidia has to lower its usual profit margins on those advanced chips. Instead of charging OpenAI full price, it effectively offers them at a discount. But because the volumes are so large and locked in, Nvidia gains long-term sales stability, which investors love.

OpenAI has been getting access to Nvidia chips through cloud providers or “neo-clouds.” These middlemen front the money to build data centers, buy GPUs, and then rent them to OpenAI at a markup.

Because OpenAI is still unprofitable and seen as risky, the debt used to finance those deals can carry very high interest rates — sometimes around 15%. By contrast, if a company with stronger credit like Microsoft backs the project, the rates drop to about 6–9%.
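To see why the rate gap matters, here is a minimal sketch comparing yearly interest at the rates quoted above. The $10B principal is a hypothetical round number chosen for illustration, and the model is simple interest-only debt, not the structure of any actual deal:

```python
def annual_interest_bn(principal_bn: float, rate: float) -> float:
    """Yearly interest on interest-only debt, in USD billions."""
    return principal_bn * rate


principal_bn = 10.0  # hypothetical amount of project debt, USD billions

risky_cost = annual_interest_bn(principal_bn, 0.15)    # ~15% for riskier borrowers
backed_low = annual_interest_bn(principal_bn, 0.06)    # 6% with a strong backer
backed_high = annual_interest_bn(principal_bn, 0.09)   # 9% with a strong backer

savings_min = risky_cost - backed_high
savings_max = risky_cost - backed_low

print(f"At 15%: ${risky_cost:.1f}B per year")
print(f"At 6-9%: ${backed_low:.1f}B to ${backed_high:.1f}B per year")
print(f"Savings: ${savings_min:.1f}B to ${savings_max:.1f}B per year")
```

On these assumptions a stronger backer cuts the yearly interest bill by roughly 40% to 60%, which is the practical meaning of "cheaper capital" in this deal.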

Nvidia stepping in with its balance sheet gives lenders more confidence, so the effective borrowing cost for projects tied to OpenAI falls closer to that lower range. Nvidia has used similar backstops, including a $6.3B agreement to buy CoreWeave’s unused capacity through April 2032.

Here too, Nvidia is using its financial strength to stabilize its ecosystem. CoreWeave is a cloud provider that rents out clusters filled with Nvidia GPUs. If CoreWeave ever struggles to fully rent out those GPUs, Nvidia has promised to buy the unused capacity.

That means CoreWeave doesn’t carry as much risk of having idle, unprofitable infrastructure. In turn, this ensures GPUs keep running and generating revenue, even if demand temporarily dips.

NVIDIA has also committed $5B to Intel alongside a product tie-up to connect Nvidia GPUs with Intel processors for PCs and data centers. Beyond that, Nvidia invested in Musk’s xAI and later joined the AI Infrastructure Partnership with Microsoft, BlackRock and MGX to fund data centers and energy assets.

The move lands as OpenAI reports 700M weekly users yet still projects $44B cumulative losses before first profit in 2029, so cheaper capital tied to hardware is a bridge from usage to cash flow. There are real risks, including regulatory scrutiny of the structure and lower near-term margins for Nvidia, but the deal deepens lock-in around its platform.

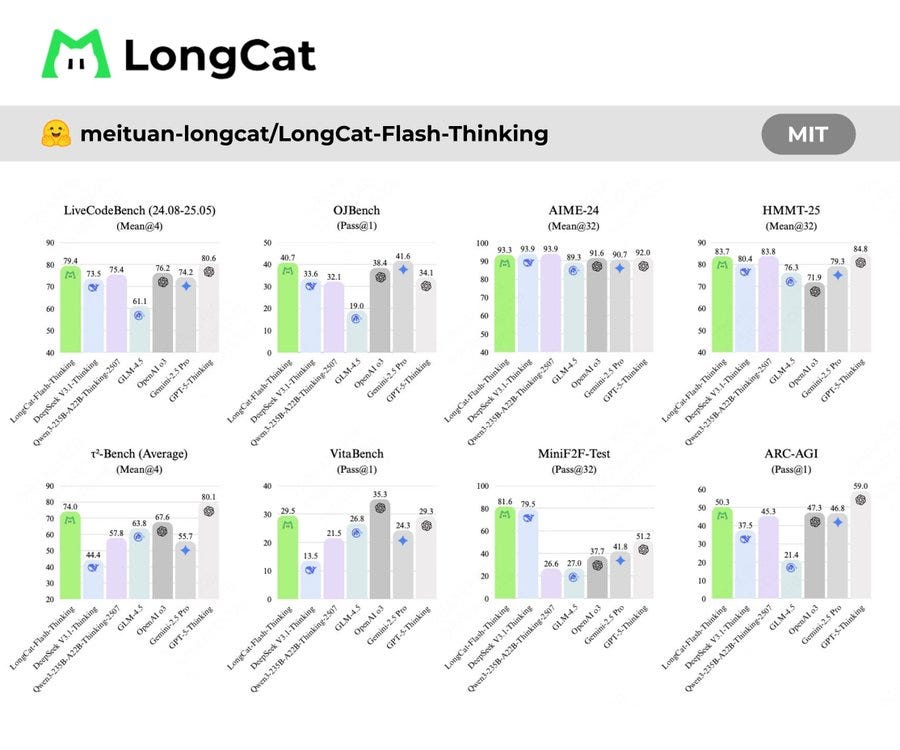

🇨🇳 LongCat-Flash-Thinking, a newly announced Chinese reasoning model, has achieved SOTA benchmark performance for open-source AI.

It is an MoE model with 560B total parameters and 27B activated parameters. Check out the LongCat-Flash Technical Report.
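The gap between total and activated parameters is the point of an MoE design: only a small slice of the model runs for each token. A quick calculation from the two figures above (variable names are mine):

```python
total_params_b = 560    # total parameters, in billions
active_params_b = 27    # activated parameters, in billions

active_fraction = active_params_b / total_params_b
print(f"Activated share of the model: {active_fraction:.1%}")
```

Under 5% of the weights are activated, so compute cost behaves more like that of a 27B dense model, even though all 560B parameters still have to be stored and served.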

📊 Efficiency: 64.5% fewer tokens to hit top-tier accuracy on AIME25 with native tool use, agent-friendly

⚙️ Infrastructure: Async RL achieves a 3x speedup over Sync frameworks

128k context, mid-training reinforcement on reasoning and coding, and multi-stage post-training with a multi-agent synthesis framework

Custom ScMoE kernels, distributed optimizations, and inference tweaks like KV-cache reduction, quantization, chunked prefill, stateless elastic scheduling, peer-to-peer cache transfers, heavy-hitter replication, and PD disaggregation make deployment efficient in SGLang and vLLM.

SOTA in tool use (τ²-Bench, VitaBench) and instruction following (IFEval, COLLIE, Meeseeks-zh).

That’s a wrap for today, see you all tomorrow.