🥉 Claude 3.7 Sonnet debuts with “extended thinking”

Claude 3.7 Sonnet lands with “extended thinking,” Google rolls out free Gemini Code Assist worldwide, and Google AI Studio introduces conversation branching for better workflow control.

Read time: 8 min 27 seconds

📚 Browse past editions here.

( I write daily for my 112K+ AI-pro audience, with 4.5M+ weekly views. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (25-Feb-2025):

🥉 Claude 3.7 Sonnet debuts with “extended thinking”

🏆 Google is launching a free version of Gemini Code Assist globally to help you build faster

🗞️ Byte-Size Briefs:

Conversation branching is now live in Google AI Studio. Just click on the 3-dots on the right on a response > select “Branch from here”.

🥉 Claude 3.7 Sonnet debuts with “extended thinking”

Anthropic Claude 3.7 Sonnet is the first Claude model to offer step-by-step reasoning, which Anthropic has termed “extended thinking”.

It can give quick answers in real time or switch into a step-by-step reasoning mode. The switch is user-controlled, so the same model can either respond almost instantly or take extra time to generate deeper explanations. This approach is described as hybrid reasoning.

Along with extended thinking, Claude 3.7 Sonnet allows up to 128K output tokens per request (up to 64K output tokens is considered generally available, but outputs between 64K and 128K are in beta).

In both standard and extended thinking modes, Claude 3.7 Sonnet has the same price as its predecessors: $3 per million input tokens and $15 per million output tokens—which includes thinking tokens

A feature called the “visible scratch pad” reveals the model’s internal planning process, particularly useful for complex tasks like coding. Developers can use the scratch pad to see exactly how the model approaches each challenge, from BFS graph traversal to advanced software patching. The intention is to give developers better transparency, along with the choice between near-instant answers and deeper, more stepwise solutions.

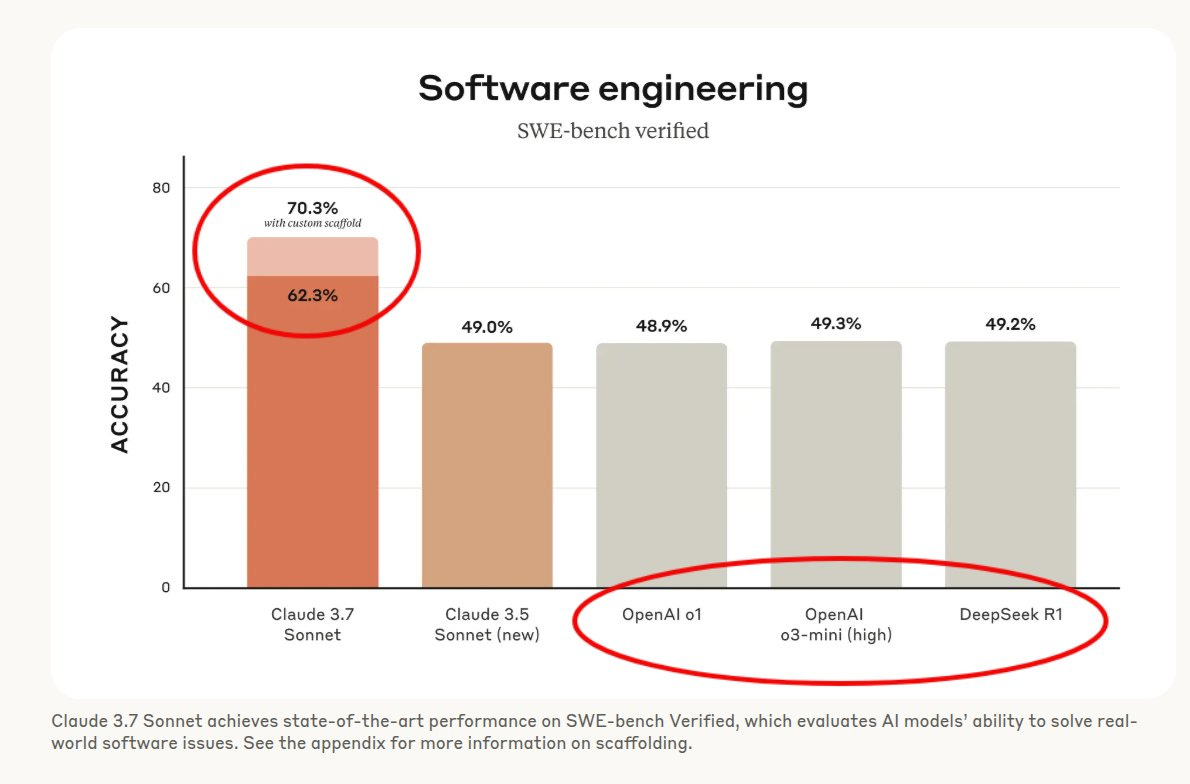

Performance on real-world coding benchmarks substantially improved. The model scored 62.3% on SWE-Bench, up from competitor results around 49.3%. On TAU-Bench, which measures how well an agent can handle user interactions in simulated retail tasks, Claude 3.7 Sonnet reached 81.2%, beating competitor scores of 73.5%. Unnecessary refusals dropped by 45% compared to the previous version, indicating more accurate decisions about which queries to refuse and which queries to address.

Rollout is open to all users. The extended reasoning feature is reserved for paid subscribers. Free users still gain improvements over previous generations, including faster replies. Future plans mention that Claude might decide for itself how long to think without needing user input, similar to how humans naturally budget their time for a difficult question.

A separate development is Claude Code

This is an agentic coding tool available in a limited research preview. It allows developers to launch tasks from a command line, edit files programmatically, and even commit code to GitHub. It runs tests and can apply regression checks to confirm that a change didn’t break anything. It does these tasks in a single session by combining a bash interface, string-based file editing, and prompts that let the model record its decisions. The agentic workflow inside Claude Code is valuable if your tasks involve iterative debugging or large-scale file modifications. The model can script multiple steps, reflect on the outcome, and then commit changes once everything looks good. That saves time compared to manually editing the same files repeatedly.

Hybrid reasoning becomes useful when code is complex. A shorter response might be enough for routine tasks like renaming variables. A lengthier chain of thought helps with multi-step logic such as implementing complex algorithms or orchestrating a large-scale refactoring.

The model uses custom scaffolding for coding tasks, including test execution in parallel, discarding any patch that fails, and ranking the rest with a scoring algorithm. Basically custom scaffolding enables deeper reasoning Custom scaffolding uses a toolset where the model runs commands and edits files in one session with a bash interface and string-based file editing. A prompt addendum instructs the model to use a planning tool, prompting it to record its reasoning steps during multi-turn problem solving. For TAU-bench, this setup increases the maximum steps from 30 to 100 i.e. deeper reasoning. For SWE-bench, a high compute variant samples parallel attempts, discards patches that fail regression tests, and ranks remaining attempts with a scoring model. All these raised the verified score from 63.7% to 70.3% on tasks.

Extended thinking also helps with agent tasks beyond code fixes. TAU-Bench results show how increasing the maximum steps from 30 to 100 gave the model more opportunities to plan, so it could handle advanced flows involving user inquiries, context switching, and long dialogues. The scratch pad logs each step, so developers see every sub-decision the model makes.

The system card for Claude 3.7 Sonnet discusses improvements in refusal policy, bias checks, child safety protection, and chain-of-thought reliability. The model’s extended reasoning can leak more internal tokens, so the release includes safeguards like streaming classifiers to prevent harmful or private data from being exposed in the scratch pad.

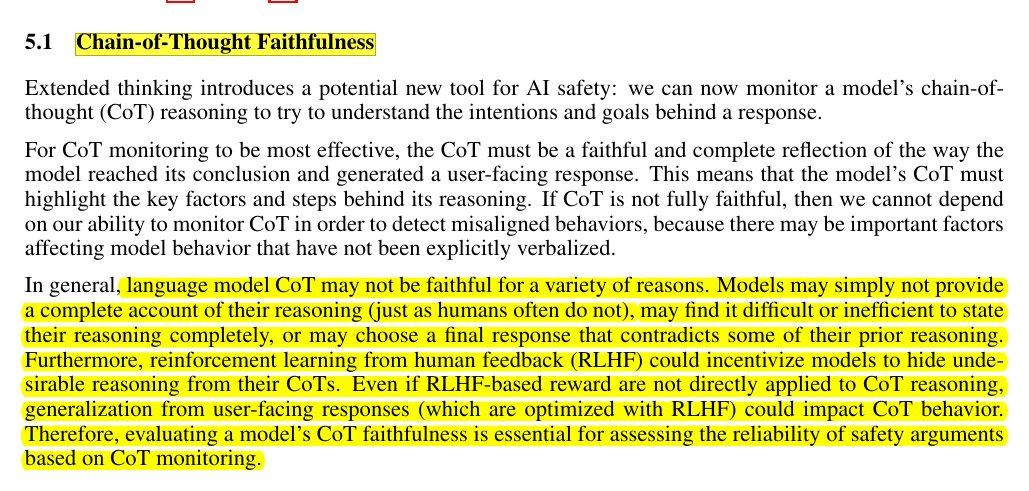

Meaning the Extended reasoning in text does not always reflect every factor driving the final outcome, meaning the chain-of-thought is not guaranteed to be a perfect window into the model’s hidden computations. Even though we can examine a model’s chain-of-thought (its step-by-step reasoning), we can’t always trust it to reveal how the model truly arrived at its final answer. Sometimes the model might leave out key details or outright hide certain thoughts, especially if it’s been trained in ways that reward giving “safe” or approved responses. As a result, reading the chain-of-thought can be helpful, but it won’t necessarily expose every internal calculation or motivation unless the model is truly disclosing all the relevant steps.

When using Claude 3.7 Sonnet through the API, users can also control the budget for thinking: you can tell Claude to think for no more than N tokens, for any value of N up to its output limit of 128K tokens. This allows you to trade off speed (and cost) for quality of answer.

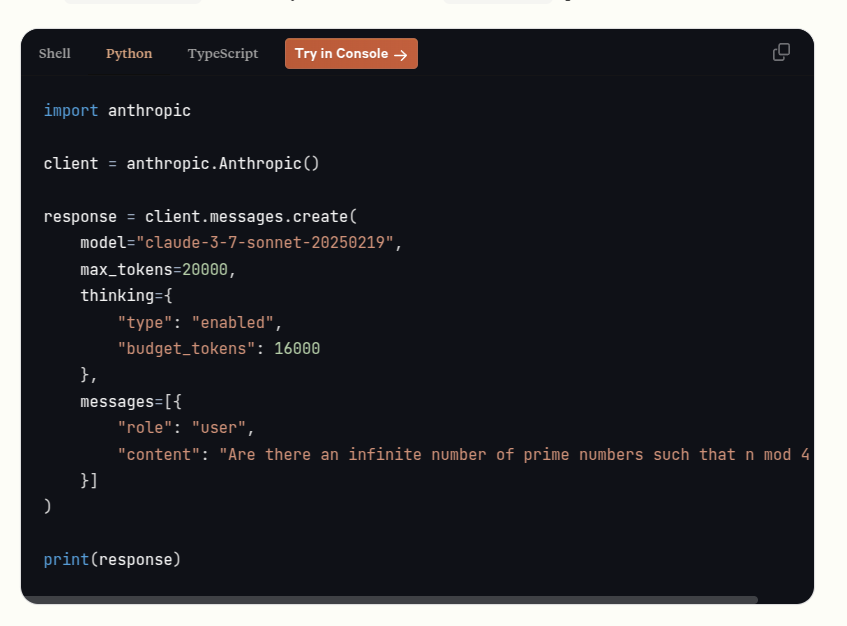

Below is a code snippet that shows how you might set the model’s thinking budget with Anthropic’s API in Python

With Claude 3.7 Sonnet, max_tokens (which includes your thinking budget when thinking is enabled) is enforced as a strict limit. The system will now return a validation error if prompt tokens + max_tokens exceeds the context window size.

This snippet demonstrates basic usage of extended thinking, along with an explanation of each part:

It sets a maximum of 20,000 tokens for the thinking portion. That means Claude can spend 2048 tokens on its scratch pad to plan its answer. Once that limit is reached, it must produce the final output. The approach balances cost and reasoning depth. It fits into the broader architecture because some tasks need a small planning budget (faster, cheaper), while others benefit from a higher one when you need accuracy or extended analysis.

A deeper reasoning pipeline is also possible for workflow tasks, such as coordinating multiple tool calls or performing advanced user simulations. The increased step limit in TAU-Bench (raised from 30 to 100) reveals how letting the model reason more thoroughly can boost success rates in multi-step tasks.

Claude 3.7 Sonnet is widely available now:

Claude 3.7 Sonnet is now available on all Claude plans—including Free, Pro, Team, and Enterprise—as well as the Anthropic API, Amazon Bedrock, and Google Cloud’s Vertex AI. Extended thinking mode is available on all surfaces except the free Claude tier.

🏆 Google is launching a free version of Gemini Code Assist globally to help you build faster

🎯 The Brief

Google has launched Gemini Code Assist for individuals, a free AI-powered coding assistant with the highest free-tier usage limits available. It offers AI-assisted coding, code review, and chat-based development help. Powered by Gemini 2.0, it supports all programming languages, integrates with VS Code, JetBrains IDEs, GitHub, Firebase, and Android Studio, and provides up to 180,000 code completions per month—90x more than other free coding assistants. It also features AI-powered code reviews for GitHub and supports up to 128,000 tokens in chat.

⚙️ The Details

→ Usage limits are extremely high: 180,000 completions/month compared to 2,000 in competing free tools, ensuring developers don’t hit constraints mid-project.

→ Gemini Code Assist for GitHub automates code reviews, detecting bugs, stylistic issues, and suggesting fixes. It allows teams to define custom style guides via .gemini/styleguide.md.

→ Large context window (128,000 tokens) enables handling of large files and deeper codebase understanding.

→ No payment required, just a personal Gmail account. For more advanced features, Standard and Enterprise versions integrate with Google Cloud services like BigQuery.

🗞️ Byte-Size Briefs

Conversation branching is now live in Google AI Studio. Just click on the 3-dots on the right on a response > select “Branch from here”. It’s a feature that lets you diverge from your main conversation thread with a Gemini model and explore alternative dialogue paths without losing context. In practice, you can branch off from an ongoing conversation to experiment with different prompts or explore tangents—almost like creating a separate sub-dialogue. Once you’ve pursued these alternate ideas, you can easily return to the original thread and continue where you left off. This approach not only helps in managing multiple conversation directions without overwhelming the model with mixed contexts but also serves as a way to bookmark particular discussion points, which is especially useful in long or creative interactions. To try it out, you need to enable Drive Access and Auto Save in the settings.

That’s a wrap for today, see you all tomorrow.

Great write up on Claude! Thanks for the Gemini heads up .. completely missed that in all the Claude research yesterday. 👍👍