🗞️ Claude Code builds in one hour what a team spent a year building

China’s new AI rules, Instagram head on AI + social, Claude outpacing Google, LeCun exits Meta, and papers on goal-driven agents & world models in physical planning.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (5-Jan-2026):

👨🔧 Instagram head Adam Mosseri published a long post on how AI is going to impact Instagram creators and social media. Really great reading.

🇨🇳 China has drafted very strict rules for “human-like interactive” AI chat services that aim to stop chatbots from encouraging suicide, self-harm, or violence by forcing human intervention and tighter use controls.

🛠️ What Drives Success in Physical Planning with Joint-Embedding Predictive World Models?

🗞️ ‘I’m not joking’: Google’s Senior engineer stunned after Claude Code builds in 1 hour what her team spent a year on

🧠 A new paper shows how to accelerate scientific discovery with autonomous goal-evolving agents

🧑🎓 Yann LeCun officially left Meta after spending 12 years at the company

👨🔧 Instagram head Adam Mosseri published a long post on how AI is going to impact Instagram creators and social media. Really great reading.

Power keeps shifting from institutions to individuals as distribution costs fall and trust in institutions erodes. In a flood of synthetic media, authenticity becomes scarce, which drives demand toward trusted creators.

The success bar moves from the ability to create to the ability to make work that only you could make. Personal specificity becomes the moat.

Because polish is cheap with AI and modern cameras, a raw and imperfect capture becomes a credibility signal. Creators will use unflattering and unproduced looks as proof of reality.

Audiences will default to skepticism about media. They will judge based on who posted it, where it came from, and why it is being shared, rather than on surface realism.

Platform labeling of AI content will help, but detection will get harder. Fingerprinting real media at capture with cryptographic signing and preserving chain of custody is the more durable path.

Instagram needs to ship stronger creator tools, clear AI labeling, real-media verification, richer account and context signals, and ranking that rewards originality.

🇨🇳 China has drafted very strict rules for “human-like interactive” AI chat services that aim to stop chatbots from encouraging suicide, self-harm, or violence by forcing human intervention and tighter use controls.

The requirements include mandatory escalation: providers must intervene when suicide is mentioned, and minors and older users must register a guardian contact who can be notified if self-harm or suicide comes up. To curb addiction-by-design, the draft also adds usage friction, such as pop-up reminders after 2 hours, and imposes heavier safety testing and audits once a service crosses 1M registered users or 100K monthly active users (MAU), backed by possible app store takedowns for noncompliance.

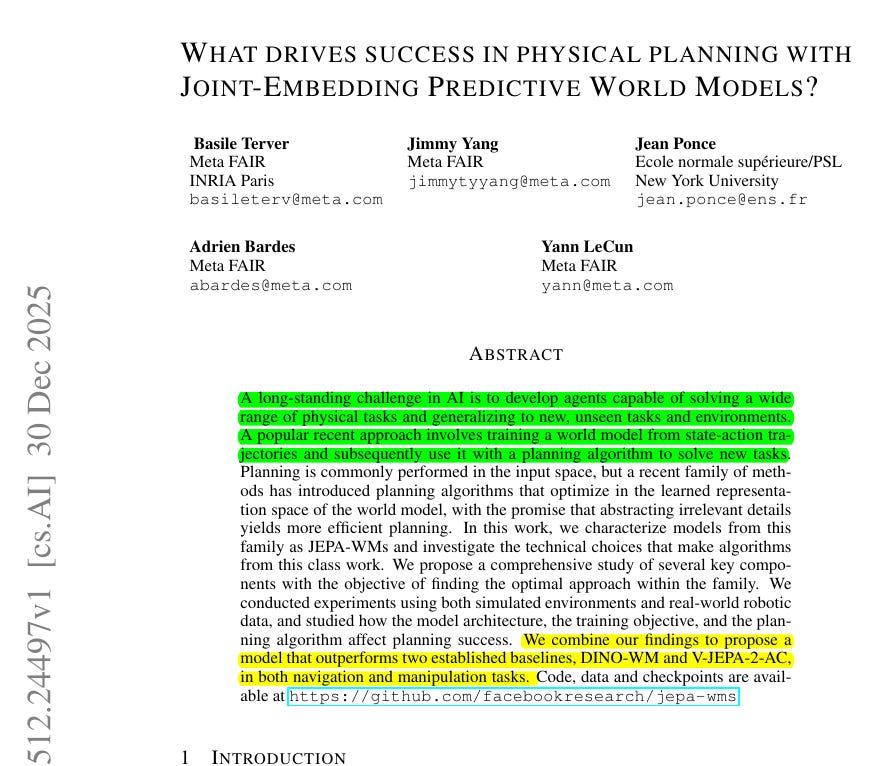

🛠️ What Drives Success in Physical Planning with Joint-Embedding Predictive World Models?

New paper from Yann LeCun, AI at Meta, and New York University

Making a robot that can understand its environment and generalize to new tasks is still one of the biggest hurdles in modern AI. The idea is to first teach the robot how the world behaves by having it watch interactions, such as how objects move when pushed. That knowledge becomes a world model the robot can use to plan.

Earlier AI planned straight from raw inputs like pixels and motor values. Newer approaches plan inside a simplified internal space that keeps important structure and ignores distractions.

These are called JEPA-WMs, short for joint-embedding predictive world models. The paper asks what design choices actually matter, and tests this through large experiments in simulation and real-world robots, looking closely at model design, learning goals, and planning methods.

Why this paper matters: JEPA world models have been an appealing idea (plan with a learned predictor instead of pixels), but performance has been inconsistent because many small design choices decide whether planning is stable or falls apart. The authors isolate those choices with large controlled sweeps and show that success is mostly about making the embedding space and the planner agree, so that the planner’s simple distance-to-goal objective points toward actions that really move the robot the right way.

Concretely, they find that a strong frozen visual encoder like DINO produces embeddings that keep the right physical details for planning, while some video-style encoders can blur away what the planner needs. They also show that planning works best with sampling-based search like the Cross-Entropy Method (CEM), because it can handle discontinuities from contact, friction, and gripper events where gradient-based planners often get stuck.
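To make the CEM idea concrete, here is a minimal sketch of sampling-based planning with a distance-to-goal cost in an embedding space. This is not the paper’s code: `predict` is a toy, hypothetical stand-in for a learned latent predictor (it just adds the action to a 2-D embedding), and every function name and hyperparameter below is an assumption chosen for illustration.

```python
import math
import random


def predict(z, a):
    """Hypothetical stand-in for a learned predictor: next embedding from (embedding, action)."""
    return (z[0] + a[0], z[1] + a[1])


def rollout_cost(z0, actions, z_goal):
    """Roll the toy predictor forward and return the final distance to the goal embedding."""
    z = z0
    for a in actions:
        z = predict(z, a)
    return math.dist(z, z_goal)


def cem_plan(z0, z_goal, horizon=5, samples=200, elites=20, iters=15, seed=0):
    """Cross-Entropy Method: sample action sequences, keep the best, refit the Gaussian, repeat."""
    rng = random.Random(seed)
    dim = 2
    mean = [[0.0] * dim for _ in range(horizon)]
    std = [[1.0] * dim for _ in range(horizon)]
    for _ in range(iters):
        # Sample candidate action sequences from the current per-step Gaussian.
        scored = []
        for _ in range(samples):
            seq = [
                tuple(rng.gauss(mean[t][d], std[t][d]) for d in range(dim))
                for t in range(horizon)
            ]
            scored.append((rollout_cost(z0, seq, z_goal), seq))
        # Keep the lowest-cost sequences (the elites).
        scored.sort(key=lambda pair: pair[0])
        best = [seq for _, seq in scored[:elites]]
        # Refit mean and standard deviation to the elites, per time step and dimension.
        for t in range(horizon):
            for d in range(dim):
                vals = [seq[t][d] for seq in best]
                m = sum(vals) / elites
                var = sum((v - m) ** 2 for v in vals) / elites
                mean[t][d] = m
                std[t][d] = math.sqrt(var) + 1e-6  # small floor keeps sampling valid
    return [tuple(step) for step in mean]


if __name__ == "__main__":
    start, goal = (0.0, 0.0), (3.0, -2.0)
    plan = cem_plan(start, goal)
    print("final distance to goal:", rollout_cost(start, plan, goal))
```

Note that nothing here needs a gradient of the cost: the planner only ranks sampled rollouts, which is why this style of search tolerates the discontinuities mentioned above.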

🗞️ ‘I’m not joking’: Google’s Senior engineer stunned after Claude Code builds in 1 hour what her team spent a year on

Jaana Dogan, a principal engineer at Google working on the Gemini API, shared a pretty wild story about testing Anthropic’s Claude Code, an AI coding assistant. She said she gave it a problem description and, within 1 hour, the output looked very close to what her Google team had been building for nearly 1 year.

She explained the task was about distributed agent orchestrators, meaning systems that manage and coordinate multiple AI agents. Google had tried many different ideas here but still had not landed on a final design.

Dogan said her prompt was only 3 paragraphs long and not very detailed. Since she could not use any internal Google data, she rewrote the problem in a simplified way using public ideas, just to see how Claude Code would handle it.

The result was not perfect and still needs work, but she said it was impressive enough that people who doubt AI coding agents should try them on problems they already understand deeply.

When asked if Google uses Claude Code, she said it is allowed only for open-source work, not internal projects. Someone else asked when Gemini would catch up, and she replied, “We are working hard right now. The models and the harness.”

She also stressed that AI progress is not a zero-sum game, and that good work from competitors deserves recognition. She ended by saying Claude Code is impressive and that it motivates her to push even harder.

In retrospect, I think Dario Amodei was so right about AI taking over coding.

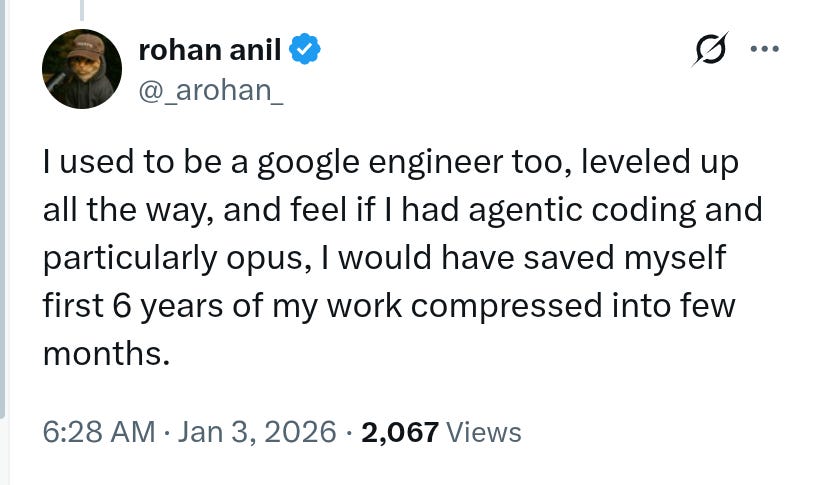

Another post also went viral, this one from an ex-Google, ex-Meta distinguished engineer and Gemini co-author:

In the old way, you learn by getting stuck, searching, trying a fix, breaking something, asking someone, reading docs, trying again, then slowly building instincts. Agentic coding short-circuits a lot of that. It can scan a big codebase, explain what the weird parts do, suggest where a change should live, generate a patch across multiple files, run tests, read failures, and iterate.

The same thing applies to the classic “2 hours of Googling and Stack Overflow.” A lot of that work was never about deep understanding. It was about finding the right incantation, the right library behavior, the right edge case. Now any good model/agent can hand you that in 1 prompt, and it can also tailor it to your exact codebase instead of some generic example.

🧠 A new paper shows how to accelerate scientific discovery with autonomous goal-evolving agents

The paper, “Accelerating Scientific Discovery with Autonomous Goal-evolving Agents”, introduces SAGA, a system where an LLM keeps rewriting the score function during a scientific search, so the search stops chasing fake wins.

The big deal is that most “AI for discovery” work assumes humans can write the right objective up front, but in real science that objective is usually a shaky proxy, so optimizers learn to game it and return junk that looks good on the proxy. This paper’s core proposal is to automate that missing human loop, where people look at bad candidates, notice what is wrong, then add or change constraints and weights until the search produces useful stuff.

SAGA does this with 2 loops: an outer loop where LLM agents analyze results, propose new objectives, and turn them into computable scoring functions, and an inner loop that optimizes candidates under the current score. This matters because it turns “objective design” from manual trial and error into something the system can do repeatedly, which is exactly where reward hacking usually sneaks in.

In practice, SAGA watches the results, has the LLM suggest new rules the design must meet, turns those rules into a score the computer can calculate, and runs the search again. The authors tested it on antibiotic molecules, new inorganic materials checked with Density Functional Theory (a physics simulation for material stability), DNA enhancers that boost gene activity, and chemical process plans, with both human-guided and fully automatic runs. Across tasks, the evolving goals reduced score gaming, and in the DNA enhancer task SAGA gave a nearly 50% improvement over the best earlier method.
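The two-loop structure can be sketched in a few lines. This is a toy illustration only, not SAGA’s implementation: the LLM agents are replaced by a hard-coded rule in the hypothetical `analyze_and_propose`, candidates are plain numbers, and the "expert check" (values above 6 are invalid) is an invented assumption standing in for whatever a scientist would notice in bad candidates.

```python
import random


def inner_loop(objectives, rng, steps=500):
    """Inner loop: random search for the candidate that maximizes the current weighted score."""
    def score(x):
        return sum(weight * fn(x) for weight, fn in objectives)

    return max((rng.uniform(0.0, 10.0) for _ in range(steps)), key=score)


def analyze_and_propose(best, tolerance=0.1):
    """Stand-in for the LLM agents: inspect the best candidate and propose a new objective.

    Toy assumption: anything above 6.0 is a "fake win" that the initial proxy ignored.
    """
    if best > 6.0 + tolerance:
        # New computable objective: penalize the amount by which x exceeds 6.0.
        return [(5.0, lambda x: -max(0.0, x - 6.0))]
    return []


def goal_evolving_search(rounds=3, seed=0):
    """Outer loop: optimize, analyze the result, extend the objective set, and repeat."""
    rng = random.Random(seed)
    objectives = [(1.0, lambda x: x)]  # initial proxy: bigger is better
    history = []
    for _ in range(rounds):
        best = inner_loop(objectives, rng)
        history.append(best)
        proposed = analyze_and_propose(best)
        if not proposed:
            break  # nothing left to fix under the toy check
        objectives.extend(proposed)
    return history


if __name__ == "__main__":
    print(goal_evolving_search())
```

In the first round the search games the proxy and returns a value near the top of the range; once the penalty term is added, the next round settles near the valid boundary instead.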

🧑🎓 Yann LeCun officially left Meta after spending 12 years at the company

He is now executive chairman of Advanced Machine Intelligence Labs, which is reportedly seeking about €500M at a valuation of about €3B.

His main technical disagreement was that Meta bet too hard on LLMs, while his preferred “world model” approach kept losing resources and autonomy.

LeCun’s new startup, Advanced Machine Intelligence Labs, will focus on “world models” that learn from videos and spatial data rather than just text.

Despite the tensions, LeCun maintains he remains on good terms with Zuckerberg personally, and Meta will partner with his new venture.

That’s a wrap for today, see you all tomorrow.