Closed-form merging of parameter-efficient modules for Federated Continual Learning

LoRM (Low-rank Regression Mean) merges LoRA modules with a closed-form solution while preserving privacy in federated learning

• Outperformed 10 competing methods on CIFAR-100 and ImageNet-R

🎯 Original Problem:

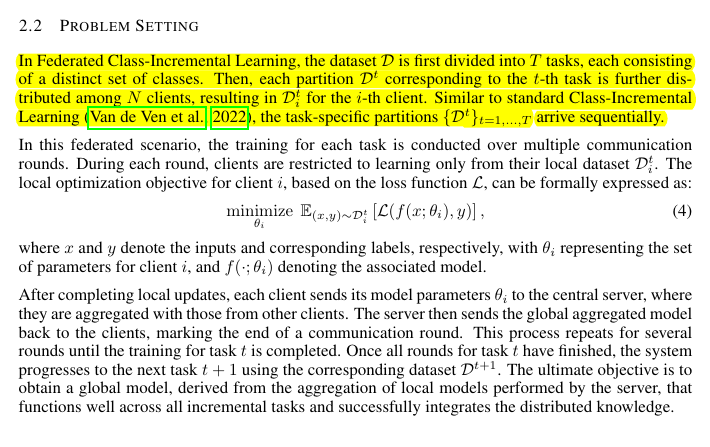

Merging parameter-efficient modules in federated continual learning is challenging due to the need to preserve model responses across clients and tasks while maintaining data privacy and efficiency.

🔧 Solution in this Paper:

• Introduces LoRM (Low-rank Regression Mean) - a closed-form solution for merging LoRA modules

• Uses an alternating optimization strategy:

  • Trains one LoRA matrix (A or B) while keeping the other fixed

  • Applies the RegMean formulation to merge matrices across clients

• Maintains privacy by only sharing diagonal elements of Gram matrices

• Employs task-specific classification heads that concatenate for final merging

• Implements 5 communication rounds per task, each with 5 epochs
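
The merging step in the list above can be sketched in NumPy. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes a column-vector convention (the LoRA update acts as `B @ A @ x`), that the fixed matrix is identical on every client, and that the server works with full Gram matrices rather than only their diagonals. The function names and shapes are hypothetical.

```python
import numpy as np


def _regmean(weights, grams):
    """Closed-form least-squares merge: (sum_i W_i G_i) (sum_i G_i)^-1."""
    num = sum(W @ G for W, G in zip(weights, grams))
    den = sum(grams)  # must be invertible (enough samples across clients)
    # den is symmetric, so W_M @ den = num  <=>  den @ W_M.T = num.T
    return np.linalg.solve(den, num.T).T


def merge_B_with_shared_A(A, Bs, Xs):
    """A: (r, d_in), shared and frozen. Bs: per-client (d_out, r). Xs: per-client (d_in, n_i)."""
    grams = [(A @ X) @ (A @ X).T for X in Xs]  # (r, r) Gram of projected inputs
    return _regmean(Bs, grams)


def merge_A_with_shared_B(As, Xs):
    """As: per-client (r, d_in). B is shared and frozen (full column rank assumed)."""
    grams = [X @ X.T for X in Xs]  # (d_in, d_in) Gram of raw layer inputs
    return _regmean(As, grams)
```

When every client holds the same trainable matrix, both functions return that matrix unchanged, which is a quick sanity check on the formula. Using `np.linalg.solve` instead of an explicit inverse is a numerical-stability choice made for this sketch.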

💡 Key Insights:

• Resolving the indeterminate system that arises in LoRA merging through alternating optimization

• Privacy preservation through sharing only Gram matrix diagonals

• Strong performance on both in-domain and out-of-domain tasks

• Faster convergence compared to existing methods
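
To illustrate the diagonal-sharing idea from the list above, here is a hedged NumPy sketch. The summary only states that Gram-matrix diagonals are shared; how the server consumes them is an assumption here, namely that off-diagonal entries are dropped from a RegMean-style formula, which reduces the merge to a per-column weighted average. The names `gram_diagonal` and `merge_B_diagonal` are hypothetical.

```python
import numpy as np


def gram_diagonal(A, X):
    """Diagonal of (A X)(A X)^T, computed without forming the full matrix."""
    Z = A @ X                    # (r, n) projected inputs
    return (Z ** 2).sum(axis=1)  # (r,)


def merge_B_diagonal(Bs, diags):
    """Merge per-client B_i of shape (d_out, r) using only Gram diagonals of shape (r,)."""
    # B * g scales column k of B by g[k], i.e. B @ diag(g)
    num = sum(B * g for B, g in zip(Bs, diags))
    den = sum(diags)             # (r,), assumed strictly positive
    return num / den             # divides column k by den[k]
```

Under this assumption each client sends `r` numbers per layer instead of an `r x r` matrix, and column `k` of the merged `B` is the average of the clients' columns weighted by their diagonal entries.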

📊 Results:

• Achieved 83.19% accuracy on CIFAR-100 (β=0.5)

• Showed 75.62% accuracy on ImageNet-R (β=0.5)

• Demonstrated 80.44% accuracy on out-of-domain EuroSAT dataset

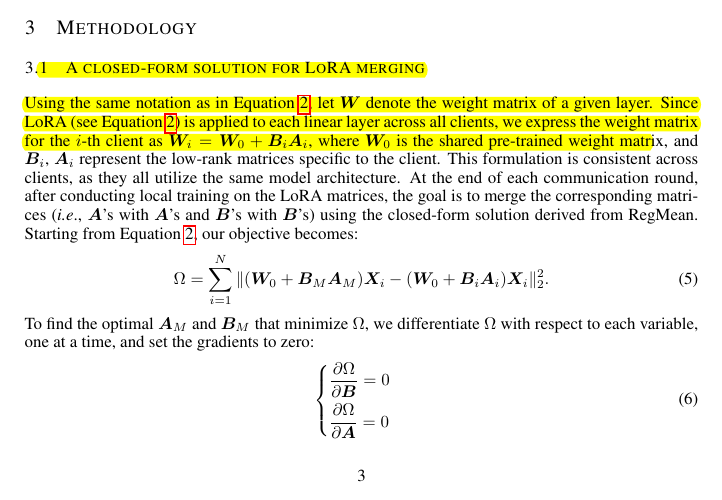

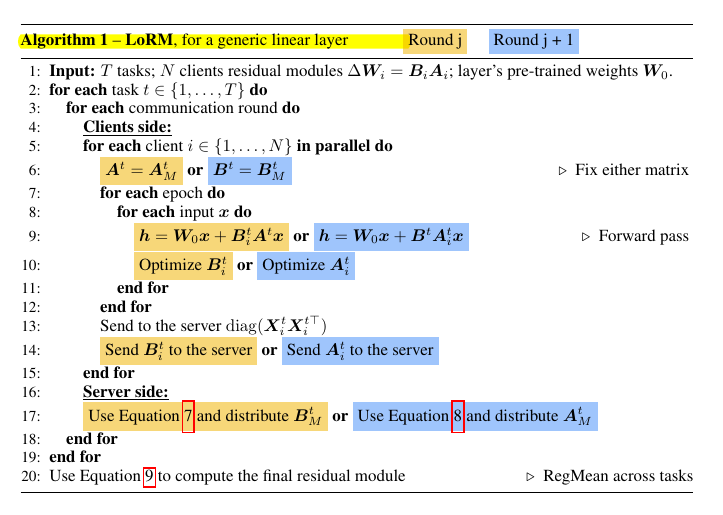

The key technical components and methodology of LoRM

LoRM employs an alternating optimization strategy that trains one LoRA matrix at a time, either A or B, while keeping the other fixed.

Fixing one matrix removes the indeterminacy of merging both at once, so the merged module has a unique solution.

The method uses closed-form equations derived from RegMean to merge matrices across clients and tasks.
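
The closed-form equations can be written out as follows. This is a sketch derived from the RegMean least-squares objective, with notation assumed for illustration: `X_i` holds client `i`'s layer inputs as columns, `N` is the number of clients, and the LoRA update acts as `B A x`. If both factors are unknown, only the product `B_M A_M` enters the objective, so any invertible `R` gives an equally good pair `(B_M R, R^{-1} A_M)`; fixing one factor removes that freedom.

```latex
% Objective: match each client's layer response on its own inputs X_i
\min_{B_M, A_M} \sum_{i=1}^{N} \left\| (B_M A_M - B_i A_i)\, X_i \right\|_F^2

% A shared and frozen across clients (A_i = A_M = A): solve for B_M
B_M = \Big( \sum_{i=1}^{N} B_i\, A X_i X_i^\top A^\top \Big)
      \Big( \sum_{i=1}^{N} A X_i X_i^\top A^\top \Big)^{-1}

% B shared and frozen across clients (B_i = B_M = B, full column rank): solve for A_M
A_M = \Big( \sum_{i=1}^{N} A_i\, X_i X_i^\top \Big)
      \Big( \sum_{i=1}^{N} X_i X_i^\top \Big)^{-1}
```

Both expressions follow from setting the gradient of the objective to zero, and both require the summed Gram matrix on the right to be invertible.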