Composite Learning Units: Generalized Learning Beyond Parameter Updates to Transform LLMs into Adaptive Reasoners

Meet Composite Learning Units (CLUs): The AI that learns on the job, not just during training.

Composite Learning Units blend memory with reasoning to solve complex tasks through continuous learning. They build dynamic knowledge spaces that learn and adapt without parameter updates, and they outperform traditional LLMs on the paper's cryptographic reasoning task.

Composite Learning Units (CLUs) solve a cryptographic reasoning task where traditional LLMs fail:

• Achieve near-perfect accuracy after an initial learning period

• Employ feedback-driven adaptation without parameter updates

• Show how their internal understanding evolves over multiple learning iterations

Original Problem 🔍:

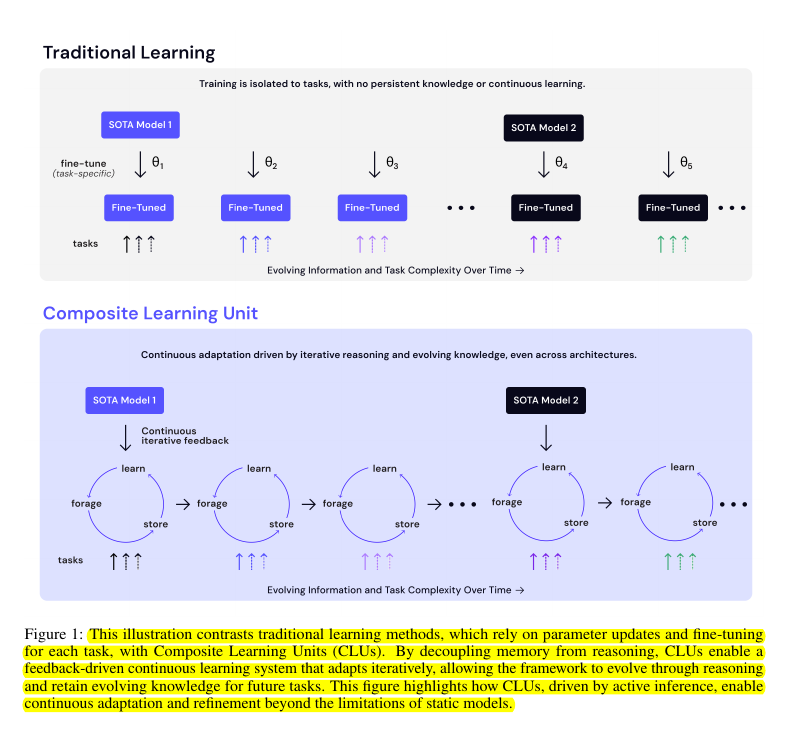

Large Language Models (LLMs) lack adaptability, requiring extensive retraining for new tasks. This limits their ability to learn continuously and reason autonomously in dynamic environments.

Solution in this Paper 🧠:

• Uses dynamic knowledge spaces: General Knowledge Space (GKS) and Prompt-Specific Knowledge Space (PKS)

• Integrates Constructivism, Active Inference, and Information Foraging principles

• Utilizes specialized agents for knowledge management, task execution, and feedback processing

• Implements iterative refinement of knowledge through continuous learning

Key Insights from this Paper 💡:

• CLUs enable continuous learning during inference

• Feedback mechanisms allow real-time adaptation without retraining

• Separation of memory from reasoning enhances flexibility

• CLUs can tackle complex tasks traditional LLMs struggle with

🧠 How does the CLU framework manage and update knowledge?

CLUs use two key components:

• General Knowledge Space (GKS) for broad, reusable insights

• Prompt-Specific Knowledge Space (PKS) for task-specific learning

These spaces are continuously refined through goal-driven interactions and feedback, allowing the system to adapt to complex tasks and build upon past experiences autonomously.
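To make the two-space idea concrete, here is a minimal sketch of a GKS and a PKS held as plain text entries. The `KnowledgeSpace` class, its `add` and `retrieve` methods, and the keyword-overlap ranking are all assumptions for illustration; the summary above does not say how the paper stores or retrieves knowledge.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeSpace:
    """Hypothetical text-based knowledge store (not the paper's implementation)."""

    entries: list = field(default_factory=list)

    def add(self, insight: str) -> None:
        # Store each insight only once.
        if insight not in self.entries:
            self.entries.append(insight)

    def retrieve(self, query: str, limit: int = 3) -> list:
        # Naive keyword-overlap ranking, standing in for whatever
        # retrieval method a real system would use.
        query_words = set(query.lower().split())
        scored = [
            (len(query_words & set(entry.lower().split())), entry)
            for entry in self.entries
        ]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [entry for score, entry in ranked if score > 0][:limit]


gks = KnowledgeSpace()  # broad, reusable insights
pks = KnowledgeSpace()  # insights tied to the current task

gks.add("Compare outputs against examples before answering")
pks.add("The encoding rule picks one letter from each word")

# Knowledge from both spaces is combined into context for the next attempt.
context = gks.retrieve("compare examples") + pks.retrieve("encoding rule for each word")
```

Keeping the two stores separate means task-specific notes can be discarded or swapped without touching the general insights.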

🔄 Feedback plays a crucial role in Composite Learning Units (CLUs) by:

• Guiding the refinement of knowledge spaces

• Allowing the system to learn from mistakes and successes

• Enabling real-time adaptation without requiring parameter updates

This feedback-driven approach allows CLUs to continuously improve their performance across diverse tasks.

🧮 How does the Composite Learning Units (CLU) framework compare to traditional neural network training?

Unlike neural networks that update weights through backpropagation, CLUs refine knowledge representations in their GKS and PKS. This approach allows for:

• Continuous learning during inference

• Adaptation without extensive retraining

• Retention of past knowledge while incorporating new information

🤖 Composite Learning Units (CLUs) address many of the limitations of current LLMs by:

• Enabling continuous learning without frequent retraining

• Adapting to new information in real-time

• Maintaining a balance between general and task-specific knowledge

• Allowing for more efficient use of computational resources

📊 Key components of the CLU architecture:

• Operational Agent: Processes inputs and generates outputs

• Knowledge Management Unit: Handles knowledge storage and retrieval

• Feedback Agents: Analyze task performance and guide knowledge refinement

• Meta-Prompt Agent: Generates task-specific prompts

These components work together in a feedback loop to continuously improve the system's performance.
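The loop can be sketched roughly as follows. This is a toy under stated assumptions, not the paper's implementation: `run_clu_loop`, `toy_operate`, and `toy_feedback` are hypothetical names, the two stub functions stand in for LLM calls, a plain list stands in for the knowledge spaces, and the Meta-Prompt Agent is left out for brevity.

```python
from __future__ import annotations

from typing import Callable, Optional


def run_clu_loop(
    tasks: list[tuple[str, str]],
    operate: Callable[[str, list[str]], str],
    give_feedback: Callable[[str, str, str], Optional[str]],
) -> tuple[list[bool], list[str]]:
    """Run tasks in order, carrying text knowledge forward between them."""
    knowledge: list[str] = []  # stands in for the GKS/PKS
    results: list[bool] = []
    for prompt, expected in tasks:
        answer = operate(prompt, knowledge)  # Operational Agent
        results.append(answer == expected)
        insight = give_feedback(prompt, answer, expected)  # Feedback Agent
        if insight and insight not in knowledge:
            knowledge.append(insight)  # Knowledge Management Unit
    return results, knowledge


def toy_operate(prompt: str, knowledge: list[str]) -> str:
    # Stub for an LLM call: it only uppercases once the rule is in knowledge.
    return prompt.upper() if "answers must be uppercase" in knowledge else prompt


def toy_feedback(prompt: str, answer: str, expected: str) -> Optional[str]:
    # Stub for a feedback agent: returns a corrective insight on failure.
    return None if answer == expected else "answers must be uppercase"


results, knowledge = run_clu_loop(
    [("a", "A"), ("b", "B"), ("c", "C")], toy_operate, toy_feedback
)
print(results)  # [False, True, True]
```

The first task fails, the feedback is stored as text, and later tasks succeed with no change to any model weights, which mirrors the "initial learning period" pattern described above.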

🔬 What was the cryptographic reasoning task used to evaluate CLUs?

The task involved deducing an encoding rule from limited examples: the encoded output is formed by selecting the i-th letter from each word in a sentence. CLUs demonstrated the ability to learn and apply this rule through iterative refinement, achieving near-perfect accuracy after an initial learning period.

And this exact task proved challenging for traditional LLMs.
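For intuition, the encoding rule itself is easy to write down once you know it; the hard part is inferring it from examples. The sketch below is illustrative only: the function name `encode`, the 1-based index, whitespace word splitting, and skipping words shorter than `i` are all assumptions, since the summary does not specify those details.

```python
def encode(sentence: str, i: int) -> str:
    """Take the i-th letter (1-based) of each word; skip words shorter than i."""
    return "".join(word[i - 1] for word in sentence.split() if len(word) >= i)


print(encode("every apple tastes sweet", 1))  # eats
print(encode("stop open spin", 2))  # tpp
```

A learner that only sees input and output pairs has to work out both that letters are picked per word and which position `i` is being used.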