Compute Or Load KV Cache? Why Not Both?

Meet Cake: The LLM speedup tool that attacks the KV cache from both sides 💡 KV cache loading becomes twice as fast by computing from the start while simultaneously loading from the end.

Why wait in line when you can load KV cache from both ends?

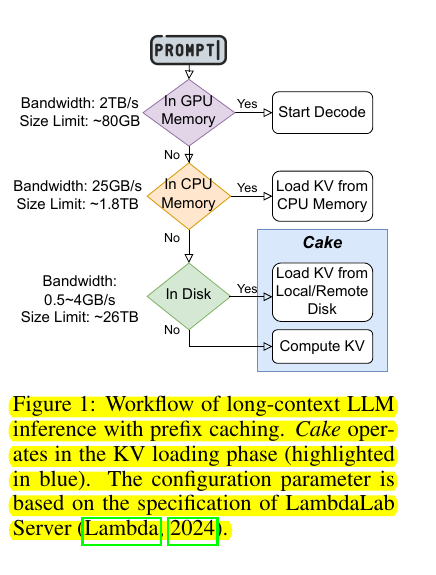

This paper proposes Cake (Computation and Network Aware KV CachE loader), a novel KV cache loader that employs a bidirectional parallelized KV cache generation strategy.

📌 Upon receiving a prefill task, Cake simultaneously and dynamically loads saved KV cache from prefix cache locations and computes KV cache on local GPUs, maximizing the utilization of available computation and I/O bandwidth resources.

📌 This bidirectional KV cache generation strategy reduces latency in long-context LLM inference.

Results 📊:

• Up to 68.1% Time To First Token (TTFT) reduction compared to compute-only methods

• Up to 94.6% TTFT reduction compared to I/O-only methods

• Consistent performance improvements across various datasets, GPUs, and I/O bandwidths
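The percentages above are latency reductions, not speedup factors. This short snippet is plain arithmetic on the reported best-case numbers (not code from the paper): if TTFT drops by a fraction r, the new latency is (1 - r) times the old one, so the speedup is 1 / (1 - r).

```python
def speedup_from_reduction(reduction: float) -> float:
    """Convert a fractional latency reduction (e.g. 0.681) into a speedup factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    # New latency = (1 - reduction) * old latency, so speedup = 1 / (1 - reduction).
    return 1.0 / (1.0 - reduction)


for label, r in [("vs. compute-only", 0.681), ("vs. I/O-only", 0.946)]:
    print(f"{label}: {r:.1%} TTFT reduction = {speedup_from_reduction(r):.1f}x faster")
```

So "up to 68.1%" corresponds to roughly a 3.1x best-case speedup over compute-only prefill, and "up to 94.6%" to roughly 18.5x over I/O-only loading.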

Original Problem 🔍:

LLMs with expanded context windows face substantial computational overhead in the prefill stage, particularly in computing the key-value (KV) cache. Existing prefix caching methods, which load a previously saved KV cache instead of recomputing it, often suffer from high latency due to I/O bottlenecks.

For example, generating the KV cache for a 200-page book like “The Great Gatsby” (approximately 72K tokens) requires about 180GB of memory. For a 70B-parameter model, generating such a KV cache on an A100 GPU takes approximately 30 seconds, significantly degrading the user experience.
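The 180GB figure can be sanity-checked with the usual KV cache size formula: 2 (keys and values) × layers × KV heads × head dimension × bytes per element × tokens. The post does not state the model configuration, so the sketch below assumes a Llama-2-70B-like shape (80 layers, 64 attention heads of dimension 128) with FP16 values; treat these numbers as assumptions.

```python
def kv_cache_bytes(num_tokens: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """KV cache size: one K and one V vector per token, per layer, per KV head."""
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem
    return num_tokens * per_token


# Assumed 70B-class shape with full multi-head attention (64 KV heads), FP16.
full_mha = kv_cache_bytes(72_000, num_layers=80, num_kv_heads=64, head_dim=128)
# Same shape with grouped-query attention using 8 KV heads.
gqa = kv_cache_bytes(72_000, num_layers=80, num_kv_heads=8, head_dim=128)

print(f"full MHA: {full_mha / 1e9:.1f} GB")          # 188.7 GB
print(f"GQA (8 KV heads): {gqa / 1e9:.1f} GB")       # 23.6 GB
```

With full multi-head attention the result (about 189GB) is in line with the quoted figure. A model that uses grouped-query attention with 8 KV heads, as Llama-2-70B does, would need about 8x less, which is one reason the exact number depends heavily on the architecture.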

Solution in this Paper 🛠️:

• Cake: A novel KV cache loader employing a bidirectional parallelized strategy

• Simultaneously computes KV cache on GPUs from the start of the sequence and loads saved KV cache from the end

• Dynamically adapts to available computation and I/O resources

• Integrates with existing LLM serving systems (e.g., vLLM) with minimal overhead

• Compatible with KV cache compression techniques for further optimization

Key Insights from this Paper 💡:

• Per-token KV cache computation cost increases for later tokens (each token attends to all tokens before it), while per-token I/O cost remains constant

• Balancing compute and I/O resources can significantly reduce prefill latency

• Adaptive strategies outperform static compute-only or I/O-only approaches

• Combining Cake with compression techniques yields additional performance gains

Cake's bidirectional strategy involves:

• Computing KV cache from the beginning of the sequence using GPU resources

• Simultaneously loading saved KV cache from the end of the sequence using I/O resources

• Continuing these parallel processes until they meet in the middle
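To make the meet-in-the-middle idea concrete, here is a toy discrete-event simulation. It is not Cake's implementation: the chunk granularity, the linear compute-cost model, and all timing numbers are hypothetical. The GPU worker computes chunks from the front (a later chunk attends over more earlier chunks, so it costs more), the loader fetches chunks from the back at a fixed cost, and whichever worker becomes idle first claims the next chunk from its end until the two pointers cross.

```python
from typing import Callable


def bidirectional_ttft(num_chunks: int,
                       compute_time: Callable[[int], int],
                       load_time: Callable[[int], int]) -> tuple[int, int]:
    """Simulate GPU compute from the front and I/O loading from the back.

    Returns (finish_time, chunks_computed): chunks [0, chunks_computed) are
    computed on the GPU and the remaining chunks are loaded from storage.
    """
    front, back = 0, num_chunks - 1  # next chunk for the GPU / for the loader
    gpu_free, io_free = 0, 0         # time at which each worker becomes idle
    while front <= back:
        # The worker that becomes idle first claims the next chunk at its end.
        if gpu_free <= io_free:
            gpu_free += compute_time(front)
            front += 1
        else:
            io_free += load_time(back)
            back -= 1
    return max(gpu_free, io_free), front


# Hypothetical cost model in arbitrary integer time units.
def compute_chunk_cost(i: int) -> int:
    return 10 + i  # fixed work plus attention over the i earlier chunks


def load_chunk_cost(i: int) -> int:
    return 20      # every chunk's KV entries have the same size


N = 20
compute_only = sum(compute_chunk_cost(i) for i in range(N))
io_only = sum(load_chunk_cost(i) for i in range(N))
ttft, computed = bidirectional_ttft(N, compute_chunk_cost, load_chunk_cost)
print(compute_only, io_only, ttft, computed)  # 390 400 180 11
```

With these made-up costs, compute-only prefill takes 390 units, I/O-only takes 400, and the bidirectional run finishes at 180 with 11 chunks computed and 9 loaded. The split point falls out of the simulation rather than being fixed in advance.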

How Cake's approach differs from traditional prefix caching mechanisms:

Cake employs a bidirectional parallelized KV cache generation strategy, simultaneously computing KV cache on local GPUs and loading saved KV cache from prefix cache locations. This contrasts with traditional methods that rely solely on either computation or loading from storage.

How Cake adapts to varying system conditions:

Cake automatically adjusts its strategy based on available GPU power and I/O bandwidth without requiring manual parameter tuning. It dynamically balances the workload between computation and data streaming to optimize performance across diverse scenarios.
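One way to get this "no manual tuning" behavior is to let each side keep claiming work until the two ends meet, instead of fixing a split point ahead of time. The threading sketch below illustrates that pattern under stated assumptions: `TwoEndedChunkQueue`, `run_worker`, and `cake_like_prefill` are hypothetical names, `time.sleep` stands in for real GPU kernels and network reads (it releases the GIL, so the two threads genuinely overlap), and a real serving system would overlap CUDA streams and transfers rather than Python threads.

```python
import threading
import time
from typing import Callable, Optional


class TwoEndedChunkQueue:
    """Chunk indices 0..n-1, claimed from the front (compute) or the back (load)."""

    def __init__(self, num_chunks: int) -> None:
        self._front = 0
        self._back = num_chunks - 1
        self._lock = threading.Lock()

    def claim_front(self) -> Optional[int]:
        with self._lock:
            if self._front > self._back:
                return None  # the two ends have met
            idx = self._front
            self._front += 1
            return idx

    def claim_back(self) -> Optional[int]:
        with self._lock:
            if self._front > self._back:
                return None
            idx = self._back
            self._back -= 1
            return idx


def run_worker(claim: Callable[[], Optional[int]],
               work: Callable[[int], None], done: list[int]) -> None:
    # A faster worker simply claims more chunks; no split point is chosen up front.
    while (idx := claim()) is not None:
        work(idx)
        done.append(idx)


def cake_like_prefill(num_chunks: int, compute_chunk: Callable[[int], None],
                      load_chunk: Callable[[int], None]) -> tuple[list[int], list[int]]:
    queue = TwoEndedChunkQueue(num_chunks)
    computed: list[int] = []
    loaded: list[int] = []
    workers = [
        threading.Thread(target=run_worker, args=(queue.claim_front, compute_chunk, computed)),
        threading.Thread(target=run_worker, args=(queue.claim_back, load_chunk, loaded)),
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return computed, loaded


def slow_gpu(i: int) -> None:
    time.sleep(0.03)   # hypothetical: computing a chunk is slow


def fast_io(i: int) -> None:
    time.sleep(0.001)  # hypothetical: fast storage, loading a chunk is quick


computed, loaded = cake_like_prefill(20, slow_gpu, fast_io)
print(f"computed {len(computed)} chunks, loaded {len(loaded)} chunks")
```

Swapping the two sleep durations (slow storage, fast GPU) moves the meeting point toward the end of the sequence without changing any code or parameter.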

Cake leverages the observation that computing the KV cache for later tokens is more expensive than for earlier ones, while the per-token I/O cost remains constant.

It exploits this asymmetry in its bidirectional strategy, computing earlier tokens on the GPU and loading later tokens via I/O.
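A toy cost model makes the asymmetry visible. It assumes, as a simplification with hypothetical units, that computing the KV entries for the token at position p costs a fixed amount plus p units of attention work (one unit per earlier token), while loading any token's KV entries costs the same amount regardless of position.

```python
def compute_cost(start: int, end: int, fixed: int = 100) -> int:
    """Hypothetical cost of computing tokens [start, end), earlier KV already present.

    Each token pays a fixed cost (projections, MLP) plus attention over
    every earlier position, i.e. `pos` units.
    """
    return sum(fixed + pos for pos in range(start, end))


def load_cost(start: int, end: int, per_token: int = 400) -> int:
    """Hypothetical I/O cost: every token's KV entries have the same size."""
    return (end - start) * per_token


n, half = 1000, 500
print("compute first half: ", compute_cost(0, half))  # 174750
print("compute second half:", compute_cost(half, n))  # 424750
print("load either half:   ", load_cost(0, half))     # 200000
```

Under these assumptions the first half of the sequence is cheaper to compute than to load, while the second half costs more than twice as much to compute as to load, which is why the GPU starts at the front and the loader at the back.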

Cake is compatible with existing KV cache compression techniques. The paper demonstrates that combining Cake with 8-bit quantization and CacheGen compression can lead to even greater performance improvements.
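Compression helps because it shrinks the I/O side of the race: fewer bytes per chunk means the loader covers more of the sequence before the two ends meet. The sketch below uses a generic symmetric per-tensor int8 scheme built on Python's standard library; it is an illustrative assumption, not the quantizer used in the paper and not CacheGen's encoding.

```python
import struct
from array import array


def quantize_int8(values: list[float]) -> tuple[array, float]:
    """Symmetric per-tensor int8 quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return q, scale


def dequantize_int8(q: array, scale: float) -> list[float]:
    return [x * scale for x in q]


# A tiny stand-in for one slice of a KV cache tensor (hypothetical values).
kv_slice = [0.12, -0.5, 0.33, 0.9, -0.07, 0.0, 0.61, -0.88]

fp16_bytes = struct.pack(f"<{len(kv_slice)}e", *kv_slice)  # IEEE half precision
q, scale = quantize_int8(kv_slice)
restored = dequantize_int8(q, scale)

print(len(fp16_bytes), len(q.tobytes()))  # 16 8
print(max(abs(a - b) for a, b in zip(kv_slice, restored)))  # at most scale / 2
```

The single extra float for `scale` is negligible, so the payload is roughly halved relative to FP16, at the cost of a bounded rounding error per element.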