"ConceptAttention: Diffusion Transformers Learn Highly Interpretable Features"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2502.04320

The problem is that while diffusion models excel at text-to-image synthesis, their internal workings, especially in state-of-the-art diffusion transformers (DiTs), remain unclear. Understanding how DiTs relate text prompts to generated images is not well explored.

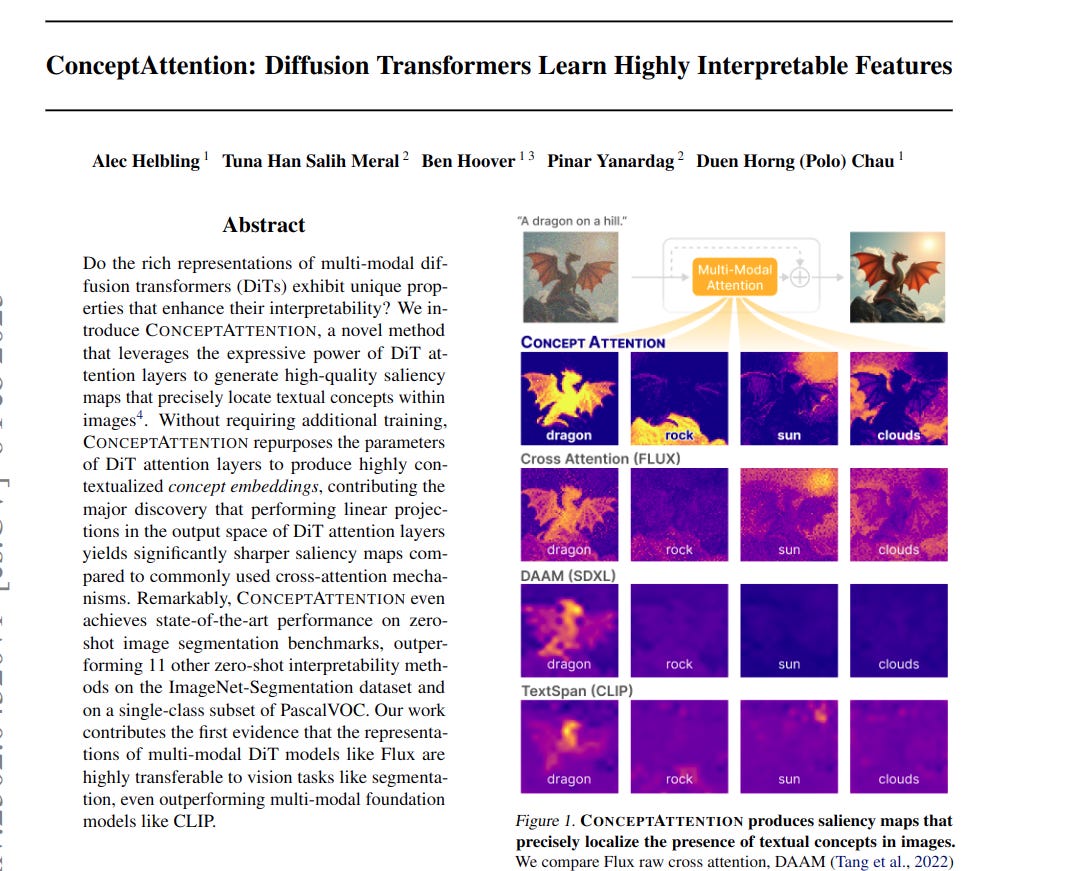

This paper introduces CONCEPTATTENTION. It is a method to create high-quality saliency maps. These maps pinpoint textual concepts within images generated by DiTs.

-----

📌 ConceptAttention cleverly repurposes DiT's existing attention mechanism. It introduces concept embeddings without retraining. This allows for efficient, training-free interpretability of complex DiT models by visualizing concept locations.

📌 The key insight is using DiT's attention output space, not just cross-attention, for saliency maps. This output space contains richer, more transferable representations, leading to superior zero-shot segmentation performance compared to CLIP-based methods.

📌 By combining cross and self-attention for concept embeddings, ConceptAttention achieves better segmentation. Self-attention among concepts likely reduces redundancy, improving the distinctiveness and quality of saliency maps for each concept.

----------

Methods Explored in this Paper 🔧:

→ CONCEPTATTENTION is proposed. It leverages multi-modal DiT models. It generates saliency maps. These maps highlight where textual concepts appear in images.

→ The method works by creating 'concept embeddings'. These embeddings represent textual concepts like "cat" or "sky". These concept embeddings are processed through DiT attention layers.

→ CONCEPTATTENTION repurposes the existing attention layers of DiTs. It does not require extra training. It projects concept embeddings and image patch representations in the attention output space. This produces saliency maps.

→ The method uses a combination of cross-attention and self-attention operations for concept embeddings. Cross-attention is between image patches and concept embeddings. Self-attention is among concept embeddings themselves. This combined approach enhances performance.

-----

Key Insights 💡:

→ A key discovery is that using the output space of DiT attention layers produces sharper saliency maps. This is better than using traditional cross-attention mechanisms.

→ CONCEPTATTENTION demonstrates that multi-modal DiT representations are highly transferable. They are transferable to vision tasks like image segmentation. They can even outperform models like CLIP in segmentation tasks.

→ The method generates contextualized concept embeddings. These embeddings provide rich semantic information. This allows for interpreting DiTs without altering image generation.

-----

Results 📊:

→ CONCEPTATTENTION achieves state-of-the-art zero-shot segmentation. It outperforms 11 other methods on ImageNet-Segmentation.

→ On PascalVOC (Single Class), CONCEPTATTENTION achieves superior performance. It surpasses other zero-shot interpretability methods.

→ Using both cross and self attention in CONCEPTATTENTION yields the best performance. Accuracy is 83.07%, mean Intersection over Union is 71.04%, and mean Average Precision is 90.45% on ImageNet-Segmentation.