DeepSeek created 3FS to handle multi-terabyte per second speed

DeepSeek’s 3FS hits multi-terabyte speeds, Tencent’s Hunyuan Turbo S outpaces DeepSeek, Meta debuts 8-hour AI glasses, and Karpathy breaks down GPT-4.5’s massive compute boost.

Read time: 8 min 56 seconds

📚 Browse past editions here.

( I write this newsletter daily. no-noise, actionable, applied-AI developments only).

⚡In today’s Edition (28-Feb-2025):

😱 DeepSeek created 3FS to handle multi-terabyte per second speed

🏆 Inception Labs Introduces 1000 Tokens/Sec Diffusion Model

📡 Meta Introduces Aria Gen 2: 8-Hour Wearable for AI Research

🚨 Tencent Unveils Hunyuan Turbo S — Faster Than DeepSeek

🗞️ Byte-Size Briefs:

Andrej Karpathy analyzes GPT-4.5’s 10x compute, enhancing creativity and emotional intelligence.

Ideogram releases 2a, generating images 2x faster with 50% lower cost.

Pika Labs launches Pika 2.2, enabling 10s 1080p AI videos with smooth transitions.

🧑🎓 Deep Dive Tutorial - “How I use LLMs” from Andrej Karpathy

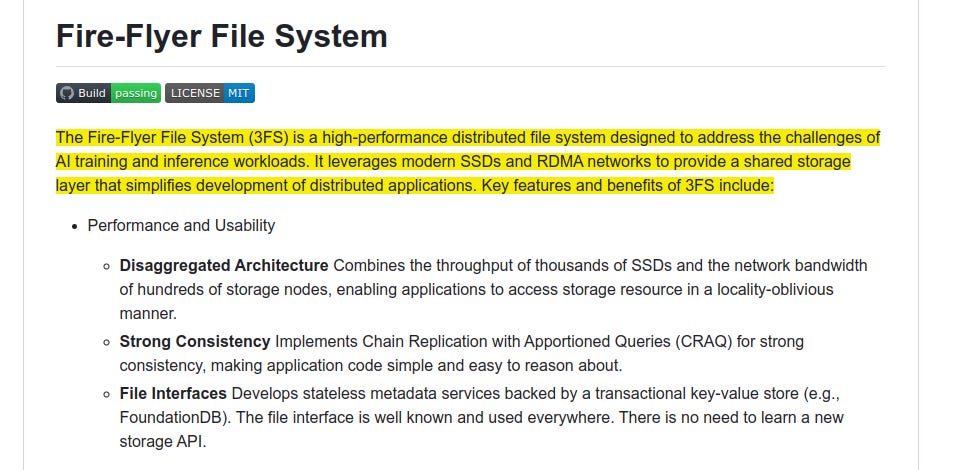

😱 DeepSeek created 3FS to handle massive training data at multi-terabyte speeds, boosting throughput for AI tasks.

DeepSeek unveiled 3FS (Fire-Flyer File System), an open-source file system reaching 6.6 TiB/s read throughput on a 180-node cluster, enabling faster AI data operations.

Its a parallel file system that utilizes the full bandwidth of modern SSDs and RDMA networks.

⚡ 6.6 TiB/s aggregate read throughput in a 180-node cluster

⚡ 3.66 TiB/min throughput on GraySort benchmark in a 25-node cluster

⚡ 40+ GiB/s peak throughput per client node for KVCache lookup

🧬 Disaggregated architecture with strong consistency semantics

✅ Training data preprocessing, dataset loading, checkpoint saving/reloading, embedding vector search & KVCache lookups for inference in V3/R1

Architecture ⚙️

Disaggregated design taps multiple SSDs and high-bandwidth NICs. It uses Chain Replication with Apportioned Queries for strong consistency. Metadata services rely on a transactional key-value store, avoiding complex new APIs.

Performance ⚡

Achieved 6.6 TiB/s read throughput under stress on 180 nodes with background training traffic.

Demonstrated 3.66 TiB/min on GraySort across 25 storage nodes and 50 compute nodes.

KVCache reads reach 40 GiB/s peak throughput during LLM inference.

Data Processing with smallpond ⛲

DuckDB-based framework leverages 3FS for high-speed input/output. Processes petabyte-scale datasets without long-running services. Completed GraySort on 110.5 TiB of data in 30 minutes and 14 seconds.

Large block reads let training pipelines load massive datasets quickly. A built-in KVCache optimizes repeated lookups for inference, offloading the overhead from main memory. Metadata handling relies on a transactional key-value store, making file management straightforward.

Why it Matters ✅

High throughput helps train and serve large models without bottlenecks. Consistency simplifies distributed workflows. Tools like smallpond integrate seamlessly, streamlining data preparation and inference steps.

High throughput and consistent writes reduce training stalls. Researchers skip manual data shuffling or complex caching setups. Model checkpointing, data preprocessing, and inference calls can run smoothly on the same system without special configuration.

Interestingly the team also provided formal specifications of the system in P in here. And checkout the 3FS Setup Guide for a manual deployment instruction for setting up a six-node cluster with the cluster ID stage.

🏆 Inception Labs Introduces 1000 Tokens/Sec Diffusion Model

🎯 The Brief

Inception Labs introduces Mercury, a commercial-scale diffusion large language models (dLLMs) delivering up to 10x faster text generation through a parallel coarse-to-fine approach. It brings near-instant outputs at lower costs.

⚙️ The Details

• Mercury Coder is a 5–10x faster dLLM for code generation, matching GPT-4o Mini and Claude 3.5 Haiku performance while exceeding 1000 tokens/sec on NVIDIA H100. It refines outputs in parallel, boosting overall text quality.

• Benchmarks show it outpacing other speed-optimized models in throughput. Its coarse-to-fine method corrects mistakes and reduces latency.

• In Copilot Arena, Mercury Coder Mini ranks near the top. It surpasses GPT-4o Mini and competes with larger LLMs.

• Enterprise users drop in Mercury dLLMs with minimal overhead. API and on-prem deployments include fine-tuning for code and general tasks.

Availability:

A code generation model, Mercury Coder, is available to test in a playground. And they offer enterprise clients access to code and generalist models via an API and on-premise deployments.

So how exactly Mercury achieves this 10x faster than frontier speed-optimized LLMs

• Traditional methods are autoregressive, generating tokens one at a time in a strict left-to-right pass. That forces many network evaluations across potentially thousands of tokens.

• Mercury’s diffusion-based approach breaks free from the sequential bottleneck by refining the entire text block in parallel. A small number of denoising iterations replaces a long token-by-token chain.

• Each iteration uses a Transformer that proposes global improvements. Multiple positions can be updated simultaneously, cutting down the overall compute time.

• By reducing the per-token overhead, Mercury can surpass 1000 tokens/sec on NVIDIA H100 hardware without requiring specialized custom chips. Speed optimizations compound further with advanced hardware acceleration.

• This parallel refinement maintains or improves generation quality because errors or partial missteps get corrected in subsequent denoising passes, avoiding the cascading errors often seen in purely left-to-right models.

📡 Meta Introduces Aria Gen 2: 8-Hour Wearable for AI Research

🎯 The Brief

Meta introduces Aria Gen 2 glasses for machine perception, contextual AI, and robotics research, featuring an advanced sensor suite, on-device processing, and up to 8-hour usage. This marks a powerful step in open research and accessibility innovations.

⚙️ The Details

The hardware integrates an RGB camera, 6DOF SLAM cameras, eye-tracking sensors, spatial microphones, and nosepad-embedded PPG and contact mic for heart rate and voice distinction. It weighs around 75g and folds for portability.

Meta’s custom silicon processes SLAM, hand tracking, eye tracking, and speech recognition at ultra-low power for continuous operation. Open-ear force-canceling speakers allow user-in-the-loop audio interactions.

Part of Project Aria, it follows Gen 1’s use in creating the Ego-Exo4D dataset and in robotics trials at Georgia Tech and BMW. Carnegie Mellon utilized Gen 1 for NavCog, aiding indoor navigation for blind users.

Envision, a company dedicated to creating solutions for people who are blind or have low vision, now explores on-device SLAM and spatial audio with Aria Gen 2 to enhance accessibility for low-vision communities. Academic and commercial labs can request access under Project Aria for further research.

🚨 Tencent Unveils Hunyuan Turbo S — Faster Than DeepSeek

🎯 The Brief

Tencent unveils Hunyuan Turbo S to outpace DeepSeek-R1, claiming faster responses with 44% reduced first-word delay, along with double the output speed. This release signals heightened rivalry in China’s AI ecosystem.

⚙️ The Details

Hunyuan Turbo S uses a hybrid-mamba-transformer fusion architecture that lowers computational complexity, cuts KV-Cache usage, and reduces training/inference costs. It merges Mamba’s proficiency in handling long sequences with the Transformer’s strength in complex contextual modeling.

Tencent states that Mamba is now deployed losslessly within an ultra-large Mixture of Experts (MoE) setup for the first time. It reports performance comparable to DeepSeek-V3, Claude 3.5 Sonnet, and GPT-4o on math, coding, and reasoning benchmarks.

The Mixture of Experts (MoE) strategy distributes workloads across specialized submodels. By applying Mamba to this setup without performance loss, the system preserves efficiency even as model capacity expands.

Reduced KV-Cache storage also trims cost. Real-time or high-throughput tasks become cheaper to run, making it appealing for large-scale inference.

The model’s API pricing is USD 0.11) per million tokens for input, and USD 0.28 per million tokens for output. It’s accessible on Tencent Cloud via API.

Competitors like Alibaba are also ramping up AI infrastructure, with DeepSeek planning an R2 model release to extend beyond English and boost coding capabilities.

🗞️ Byte-Size Briefs

Andrej Karpathy breaks down GPT-4.5’s tweaks, pointing out sharper creativity, deeper knowledge, and a better grasp of human emotions. This is what he basically says - First, recognize GPT4.5’s 10x greater compute yields nuanced gains in creativity and emotional intelligence. Next, focus on prompts that test humor, analogies, or broad knowledge, since it’s not tuned for advanced reasoning. Finally, leverage its improved EQ for more human-like and contextually rich responses.

Ideogram releases 2a, an upgraded text-to-image model with dramatically reduced generation times and improved text accuracy. Delivers rapid creation of professional-looking designs and realistic outputs. 2a generates image outputs in just 10 seconds, with an even faster ‘2a Turbo’ option delivering results at twice the speed. It refines text and graphic design tasks, creating homepages, posters, and ads with sharper text. It also handles photorealism with high consistency. Its cost is 50% less than the previous 2.0 version across both API and web. Users can tap it via Freepik, Poe, Gamma, or Ideogram’s platform.

Pika Labs releases Pika 2.2, enabling up to 10s AI video with 1080p resolution and smooth keyframe transitions. Creators gain extended storytelling ability and more polished results. “Pikaframes” introduces keyframe-based transitions, merging scenes more fluidly. Early testers report impressive prompt fidelity and cinematic aspect ratio support.

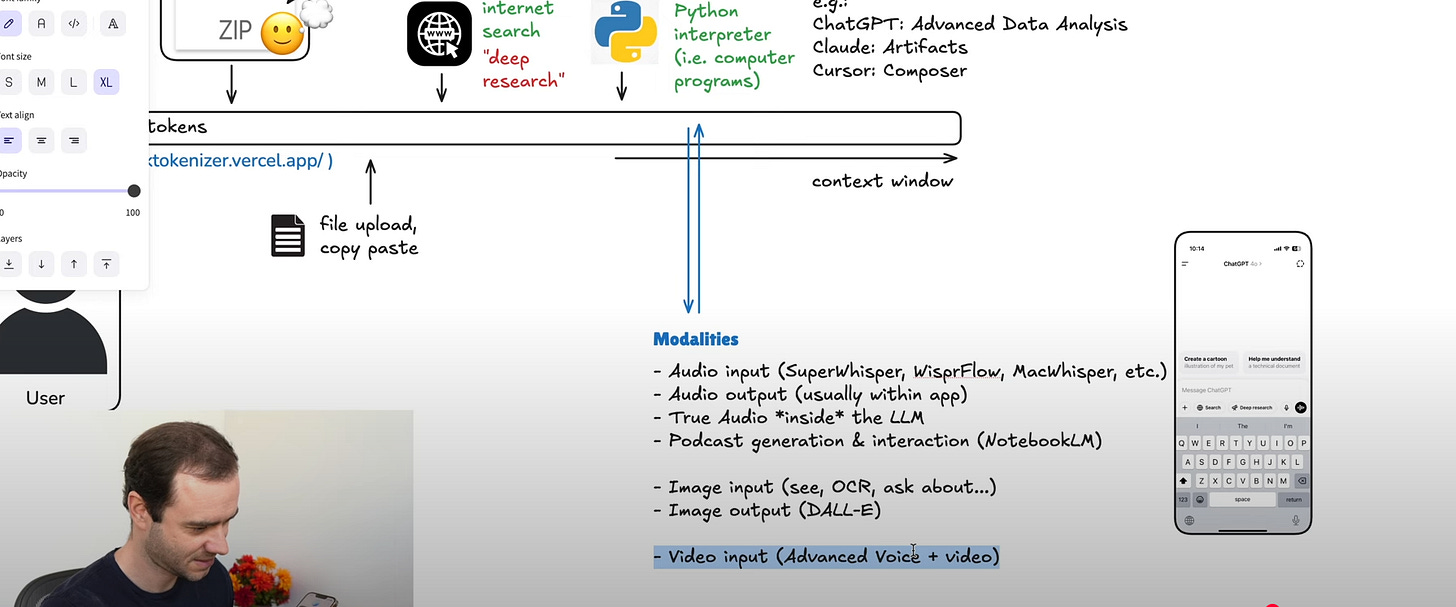

🧑🎓 Deep Dive Tutorial - “How I use LLMs” from Andrej Karpathy

Andrej Karpathy just release a 2H+ video example-driven, practical walkthrough of Large Language Models usage for our daily life.

Here he demonstrates more hands-on ways of using LLMs for coding, data exploration, tool integration, and multi-modal inputs, showing how they can streamline everyday tasks for faster, richer output.

Overall with LLMs you get, 3x faster coding turnaround, up to 80% fewer manual steps, and direct use of 1000+ token file uploads.

Karpathy demonstrates that leveraging an LLM for mundane setup (creating UI scaffolding, refactoring repetitive code) shortens prototyping by a factor of three. Instead of writing boilerplate or hunting documentation, he prompts the LLM with high-level instructions, which rapidly produces working code. This similarly applies to data tasks: about half the usual effort disappears when the LLM handles parsing, cleaning, and summarizing CSVs or unstructured text.

The automatic reading of large documents (e.g. PDFs or 1,000-token transcripts) integrates content into the LLM’s context window, enabling deeper analysis without manual copying. Direct Python interpreters handle advanced calculations and plotting, and real-time search taps fresh web data—both cutting extra steps and letting him iterate faster.

He highlights that an LLM is effectively a large “zip file” of internet knowledge plus a tuned assistant persona. When queries exceed the model’s cutoff or memory, it can do real-time web searches or run Python code to solve complicated parts. This flexibility enables everything from debugging gradient errors in neural networks to reading entire research papers or personal lab results.

He shows how additional reinforcement learning steps allow certain “thinking models” to tackle math or code with higher accuracy, albeit at slower speeds. He also shows how advanced voice modes can understand and generate speech natively, while memory features let the model retain personal context across different chats. Karpathy’s examples range from travel tips and health queries to code refactoring and building AI-based research workflows, underscoring how LLMs can transform daily routines.

That’s a wrap for today, see you all tomorrow.