🎖️DeepSeek launches new AI models aimed at challenging Google and OpenAI

DeepSeek’s new models, OpenAI’s legal pile-up, ChatGPT ads leak, and a deep dive on why TPUs beat Nvidia GPUs 4x on inference cost-performance.

Read time: 10 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (1-Dec-2025):

🎖️DeepSeek launches new AI models aimed at challenging Google and OpenAI

📚 OpenAI is getting hit with more legal trouble, and things keep stacking up.

💰 Leak confirms OpenAI is preparing ads on ChatGPT for public roll out.

🧮 Deep Dive Article: How TPUs deliver 4x better cost-performance than Nvidia GPUs for AI inference workloads

🎖️DeepSeek launches new AI models aimed at challenging Google and OpenAI

DeepSeek reduced attention complexity from quadratic to roughly linear with a sparse attention mechanism trained through warm-starting, while preserving model performance. The design is specifically optimized for long-context scenarios.

V3.2 delivers near GPT-5-level performance on complex reasoning.

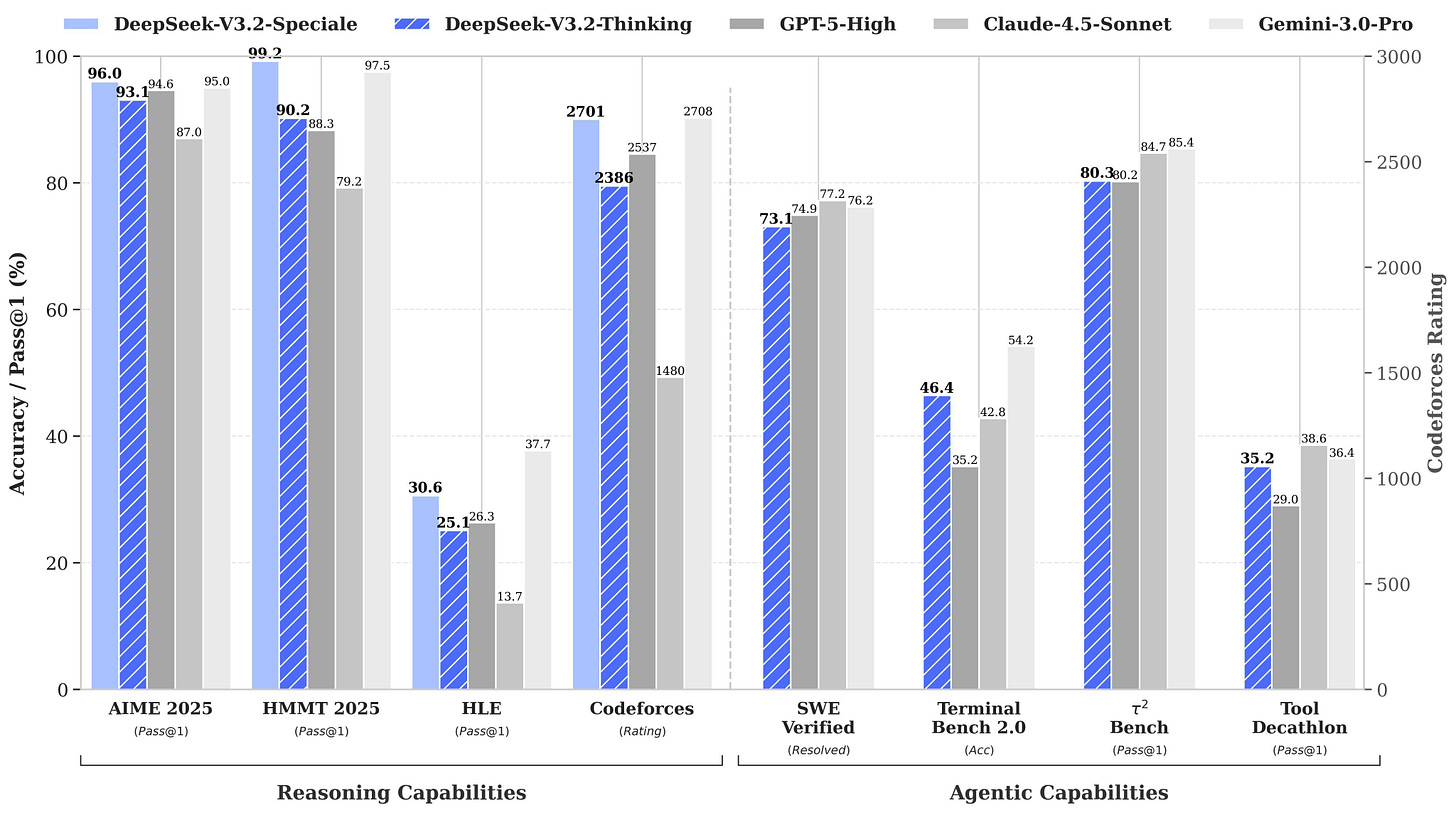

Today they released 2 models for different use cases: V3.2 and V3.2-Speciale. The V3.2-Speciale model, positioned for high-end reasoning tasks, “rivals Gemini-3.0-Pro,” the company said. According to DeepSeek, Speciale delivers gold-medal-level (expert human proficiency) results on competitions such as the IMO, CMO, and ICPC World Finals.

The models introduce an expansion of DeepSeek’s agent-training approach, supported by a new synthetic dataset spanning more than 1,800 environments and 85,000 complex instructions. The company stated that V3.2 is its first model to integrate thinking directly into tool use, allowing structured reasoning to operate both within and alongside external tools.

Model architecture and training:

same core architecture as DeepSeek-V3.2-Exp, with DeepSeek Sparse Attention (DSA) for long context at lower cost

continued pretraining from DeepSeek-V3.1-Terminus, then a reinforcement learning budget for reasoning that exceeds 10% of the pretraining compute

open weights, supports both LoRA fine-tuning and full-parameter training

Reinforcement learning setup (GRPO-style):

Unbiased KL estimate for a cleaner reward signal

off-policy sequence masking so older rollouts still train safely

Mixture-of-Experts routing kept consistent between training and sampling

truncation masks matched between training and sampling to avoid mismatch bugs
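The RL bullets above are terse, so here is a minimal NumPy sketch of three standard GRPO-style ingredients: group-relative advantages, the common "k3" per-token KL estimator, and a sequence mask that drops stale negative rollouts. This is not DeepSeek's code. The k3 estimator is unbiased only when tokens are sampled from the current policy, and DeepSeek's exact correction for off-policy samples is not reproduced here; the masking rule's form, the threshold `delta`, and all function names are assumptions for illustration.

```python
import numpy as np

def group_relative_advantages(rewards):
    """GRPO-style advantage: score each rollout relative to the other rollouts
    sampled for the same prompt (no learned value network)."""
    r = np.asarray(rewards, dtype=float)
    return (r - r.mean()) / (r.std() + 1e-8)

def k3_kl_estimate(logp_policy, logp_ref):
    """Per-token 'k3' estimate of KL(policy || ref) for tokens sampled from the policy:
    ratio - log(ratio) - 1 with ratio = p_ref / p_policy. Non-negative per token."""
    log_ratio = np.asarray(logp_ref, dtype=float) - np.asarray(logp_policy, dtype=float)
    return np.exp(log_ratio) - log_ratio - 1.0

def off_policy_sequence_mask(advantages, logp_old_seqs, logp_new_seqs, delta=0.5):
    """Hypothetical rule: drop a rollout if its advantage is negative AND the current
    policy has drifted far from the policy that sampled it (mean per-token
    log-ratio old/new above delta). Rule form and delta are assumptions."""
    mask = []
    for adv, old, new in zip(advantages, logp_old_seqs, logp_new_seqs):
        drift = float(np.mean(np.asarray(old, dtype=float) - np.asarray(new, dtype=float)))
        mask.append(0.0 if (adv < 0 and drift > delta) else 1.0)
    return np.array(mask)
```

Masked sequences simply contribute nothing to the policy-gradient sum, so old rollouts that the policy has already moved away from cannot push it further in a bad direction.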

V3.2 is now available across DeepSeek’s app, web interface, and API. The Speciale variant is offered only through a temporary API endpoint until December 15, 2025; it is priced identically to V3.2 but does not currently support tool calls. DeepSeek is serving it through this dedicated endpoint to gather feedback from researchers and developers before deciding on its long-term availability.

DeepSeek brought attention complexity down from quadratic to roughly linear with a sparse attention module trained by warm-starting: the new module gets its own initialization and optimization dynamics, and the setup is adjusted gradually over about 1T tokens.

Concretely, they added a sparse attention module that lets each token attend only to a fixed number of important past tokens, and they train it in 2 warm-start stages: it first imitates dense attention and then gradually replaces it without losing quality.

⚙️ What DeepSeek Sparse Attention (DSA) actually changes

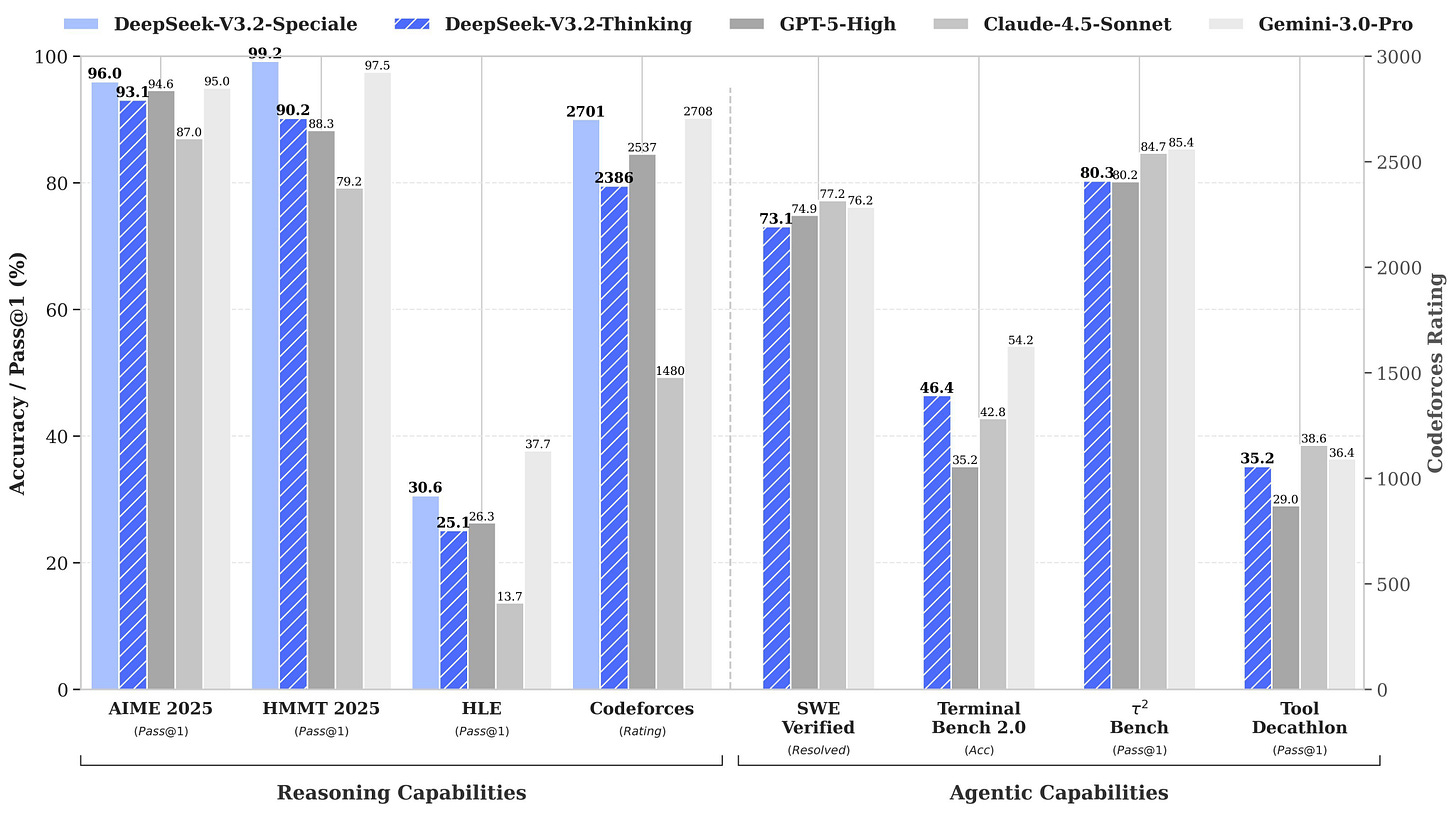

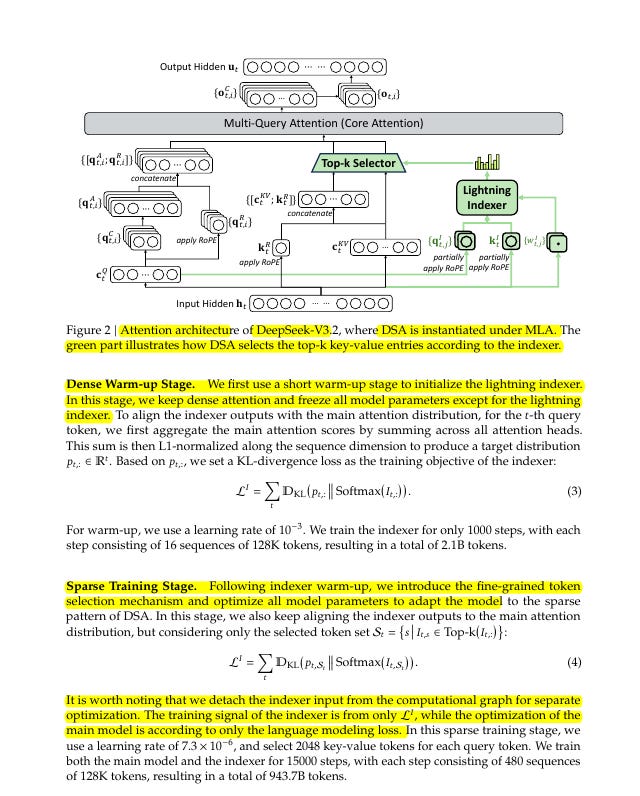

DeepSeek-V3.2 adds DeepSeek Sparse Attention, where a tiny “lightning indexer” scores all past tokens for each query token and then keeps only the top k ones, so the expensive core attention only runs over those selected entries and its work now grows like length times k instead of length times length.
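A minimal single-query NumPy sketch of the select-then-attend idea. The indexer follows the broad shape described for DSA's lightning indexer (a few small heads, ReLU'd dot products combined with per-head weights, one key per token shared across indexer heads), but all shapes, the fixed weight vector `w`, and the function names are illustrative assumptions; FP8, batching, and causal masking across many queries are omitted.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - np.max(x))
    return e / e.sum()

def lightning_indexer(h_t, h_past, Wq, Wk, w):
    """Toy indexer score I[s] = sum_j w[j] * ReLU(q_j . k_s) for every visible position s.

    h_t: (d,) query-token hidden state; h_past: (L, d) hidden states of visible tokens.
    Wq: (H_I, r, d) small per-head query projections; Wk: (r, d) key projection shared
    by all indexer heads; w: (H_I,) per-head weights (fixed here, an assumption).
    """
    q = Wq @ h_t                          # (H_I, r): one small query per indexer head
    k = h_past @ Wk.T                     # (L, r): one small key per visible token
    per_head = np.maximum(q @ k.T, 0.0)   # (H_I, L): ReLU'd dot products
    return w @ per_head                   # (L,): weighted sum over indexer heads

def sparse_attention(q, K, V, index_scores, k_top):
    """Core attention for one query over only the k_top highest-scoring positions."""
    k_top = min(k_top, len(index_scores))
    sel = np.argsort(index_scores)[-k_top:]     # indices of the top-k indexer scores
    logits = K[sel] @ q / np.sqrt(q.shape[-1])  # k_top scores instead of L
    probs = softmax(logits)
    return probs @ V[sel], sel

# Usage: one query at the end of a 4096-token context, keeping 2048 positions.
# h doubles as keys and values only to keep the demo short.
rng = np.random.default_rng(0)
L, d = 4096, 64
h = rng.standard_normal((L, d))
scores = lightning_indexer(h[-1], h, rng.standard_normal((4, 16, d)),
                           rng.standard_normal((16, d)), rng.random(4))
out, sel = sparse_attention(h[-1], h, h, scores, k_top=2048)
```

When `k_top` is at least the context length, this reduces exactly to dense attention, which is a handy sanity check; below that, the core softmax only ever sees `k_top` entries.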

The indexer itself still touches all token pairs, but it uses very few heads, simple linear layers, and FP8 precision, so in practice its cost is a small fraction of the original dense attention, and the main model’s cost becomes the dominant, almost linear term.

They also implement DSA on top of their Multi-head Latent Attention (MLA) in multi-query mode, where all heads share one compact key-value entry per token; this keeps the sparse access pattern kernel-friendly and makes the near-linear behavior show up in real throughput.
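The kernel-friendliness comes from sharing: in multi-query mode every head reads the same per-token key-value entry, so the top-k gather happens once per query token rather than once per head. A hedged NumPy sketch, with plain shared `K`/`V` arrays standing in for MLA's compressed latent (an assumption; real MLA adds projections and a RoPE component):

```python
import numpy as np

def softmax_rows(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def mqa_sparse_attention(Q, K, V, sel):
    """Multi-query sparse attention for one token position.

    Q: (H, d) one query per head; K: (L, d) and V: (L, d_v) hold a single entry
    per token shared by all heads (a stand-in for MLA's compact latent);
    sel: (k,) positions chosen by the indexer.
    """
    K_sel, V_sel = K[sel], V[sel]                 # one gather of k rows, reused by every head
    logits = Q @ K_sel.T / np.sqrt(Q.shape[-1])   # (H, k)
    return softmax_rows(logits) @ V_sel           # (H, d_v)
```

Without sharing, each head would gather its own k rows, multiplying memory reads by the head count; with sharing, the gathered block is small and contiguous enough for an efficient kernel.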

🔥 Stage 1: dense warm-up so the sparse module copies dense attention

DeepSeek-V3.2 does not switch to sparsity from scratch. It first runs a dense warm-up stage on top of a frozen DeepSeek-V3.1-Terminus checkpoint whose context is already 128K.

In this stage, the existing dense attention stays in charge and all model weights are frozen except the lightning indexer. For each query token, they compute the dense attention distribution, turn it into a target probability distribution over positions, and train the indexer so its scores, after softmax, match that distribution as closely as possible (a KL-divergence objective).
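The warm-up objective can be written as a KL divergence between a dense-attention target and the indexer's softmax. A sketch assuming the target is formed by summing dense attention over heads and L1-normalizing (our reading of how the DeepSeek-V3.2-Exp report builds it); function names are hypothetical, and in real training only the indexer's parameters would receive gradients from this loss.

```python
import numpy as np

def log_softmax(x):
    m = np.max(x)
    return x - m - np.log(np.sum(np.exp(x - m)))

def dense_target(attn_per_head):
    """Collapse dense attention (H, L) into one distribution over positions:
    sum across heads, then L1-normalize."""
    p = attn_per_head.sum(axis=0)
    return p / p.sum()

def warmup_indexer_loss(attn_per_head, indexer_scores):
    """KL(target || softmax(indexer_scores)) over all L positions.
    Only the indexer would be updated from this; the main model stays frozen."""
    p = dense_target(attn_per_head)
    log_q = log_softmax(indexer_scores)
    nz = p > 0                                   # 0 * log 0 terms contribute nothing
    return float(np.sum(p[nz] * (np.log(p[nz]) - log_q[nz])))
```

The loss reaches zero exactly when the indexer's softmax reproduces the head-summed dense distribution, which is what makes its top-k list a good proxy for "where dense attention would look".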

They do this for about 2.1B tokens, which is enough for the indexer to learn “if dense attention likes these positions, I should rank them high in my top-k list,” so by the end of warm-up, sparse selection is already a good approximation of what dense attention would look at.

🔁 Stage 2: switch to sparse attention and slowly adapt

After warm up, they enter the sparse training stage, where the main attention actually uses only the tokens selected by DSA instead of the full sequence.

Now all model weights are trainable again: the language-modeling loss updates the usual transformer parameters, and the indexer is still trained, but its loss only compares the main attention and indexer distributions inside the selected top-k set, which keeps it aligned while letting it specialize.
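In the sparse stage the indexer loss is restricted to the selected positions. A minimal sketch, assuming both distributions are renormalized over the top-k set so that scores of unselected positions never enter the loss; names and shapes are illustrative, not DeepSeek's implementation.

```python
import numpy as np

def log_softmax(x):
    m = np.max(x)
    return x - m - np.log(np.sum(np.exp(x - m)))

def top_k_positions(indexer_scores, k_top):
    """Positions with the k_top highest indexer scores."""
    return np.argsort(indexer_scores)[-k_top:]

def sparse_stage_indexer_loss(main_attn_on_sel, indexer_scores, sel):
    """main_attn_on_sel: (H, k) main-model attention over the selected positions,
    columns ordered like sel; indexer_scores: (L,) scores for all visible positions;
    sel: (k,) selected positions. Both distributions live on the selected set only."""
    p = main_attn_on_sel.sum(axis=0)
    p = p / p.sum()
    log_q = log_softmax(indexer_scores[sel])     # indexer renormalized over the top-k set
    nz = p > 0
    return float(np.sum(p[nz] * (np.log(p[nz]) - log_q[nz])))
```

Because unselected scores drop out, the indexer is free to rank the rest of the context however it likes, as long as its ordering inside the kept set matches what the main attention actually uses.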

They also detach the indexer’s input from the main graph, so gradients from the language modeling loss do not flow into the indexer, which prevents the selector from drifting in strange ways just to make prediction easier in the short term.

In this stage, each query token attends to 2048 selected key-value tokens, and they train for about 943.7B tokens with a small learning rate, so the model has a long time to adjust to seeing only sparse context while retaining its capabilities.
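The token counts are easy to sanity-check. Assuming 128K = 131,072-token sequences and the step and batch figures given in the DeepSeek-V3.2-Exp technical report as we read it (1,000 steps of 16 sequences for warm-up, 15,000 steps of 480 sequences for sparse training), the arithmetic reproduces both stage totals and the "about 1T tokens" overall budget:

```python
SEQ_LEN = 128 * 1024  # 131,072 tokens per sequence (assumed 128K context)

def tokens(steps, seqs_per_step, seq_len=SEQ_LEN):
    """Total training tokens for a stage: steps x sequences per step x tokens per sequence."""
    return steps * seqs_per_step * seq_len

warmup = tokens(1_000, 16)    # dense warm-up stage (step/batch figures assumed)
sparse = tokens(15_000, 480)  # sparse training stage (step/batch figures assumed)
print(f"warm-up: {warmup / 1e9:.1f}B, sparse: {sparse / 1e9:.1f}B, "
      f"total: {(warmup + sparse) / 1e12:.2f}T")
```

The warm-up is roughly 0.2% of the total, which underlines how little data the indexer needs to imitate dense attention compared with the long adaptation phase.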

Because the indexer already learned to mimic dense attention during warm up, this second stage behaves like a gentle transition where the model learns to live with a fixed, small k rather than a sudden pruning that would wreck performance.

📉 Why this gives “almost linear” cost in practice

From the main model’s point of view, attention work now grows like sequence length times k, and k is fixed at 2048, so once sequences are long enough the curve is effectively linear in length.

The indexer’s pass over all token pairs is still quadratic, but FP8, a small head count, and simple operations keep its per-pair cost low, so it adds a comparatively small overhead on top of the near-linear core attention cost.
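A back-of-the-envelope model makes the scaling concrete. It counts query-key pairs for causal prefill of a length-L sequence: dense attention touches about L²/2 pairs, the sparse core touches at most k per position, and the indexer is charged as a quadratic term with a hypothetical per-pair cost `indexer_rel_cost` (the 0.05 default is an illustrative assumption, not a measured figure).

```python
def dense_pairs(L):
    """Causal dense attention: position t (1-based) scores t tokens, summed over the sequence."""
    return L * (L + 1) // 2

def sparse_pairs(L, k=2048):
    """Sparse core attention: position t scores min(t, k) tokens."""
    if L <= k:
        return dense_pairs(L)
    return dense_pairs(k) + (L - k) * k

def dsa_relative_cost(L, k=2048, indexer_rel_cost=0.05):
    """Sparse core pairs plus the indexer's all-pairs pass, charged at a hypothetical
    per-pair cost relative to one dense-attention pair."""
    return sparse_pairs(L, k) + indexer_rel_cost * dense_pairs(L)

# Fraction of dense cost for the sparse core alone, and with the indexer included.
for L in (8_192, 32_768, 131_072):
    print(L, round(sparse_pairs(L) / dense_pairs(L), 3),
          round(dsa_relative_cost(L) / dense_pairs(L), 3))
```

At L = 131,072 the sparse core touches about 3% of the dense pair count (roughly 266M vs 8.59B pairs). The indexer term is the only part that still grows quadratically, which is why its per-pair cost has to stay small for the overall curve to look nearly linear.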

Figure 3 in the paper plots dollar cost per million tokens on H800 GPUs as a function of token position and shows that for both prefilling and decoding, DeepSeek-V3.2’s cost curve grows much more slowly with position than the old dense model, especially toward 128K tokens, which is exactly what this “quadratic to almost linear” story predicts.

So the reduction in complexity comes from the DSA design that lets the main attention see only 2048 tokens per position, and the warm start procedure is what makes it possible to replace dense attention with this sparse version without breaking quality while enjoying the near linear compute scaling.

📚 OpenAI is getting hit with more legal trouble, and things keep stacking up.

OpenAI now has to hand over internal chats about deleting 2 huge book datasets, which makes it easier for authors to argue willful copyright infringement and push for very high statutory damages per book. For context, OpenAI is being sued by authors who say its models were trained on huge datasets of pirated books.

Authors and publishers have gained access to Slack messages between OpenAI employees discussing the erasure of the datasets, named “books 1” and “books 2.” The court held off, however, on whether plaintiffs should get other communications that the company argued were protected by attorney-client privilege. In a controversial decision that OpenAI appealed on Wednesday, U.S. Magistrate Judge Ona Wang found that OpenAI must hand over documents revealing the company’s motivations for deleting the datasets. OpenAI’s in-house legal team will also be deposed.

If those messages suggest OpenAI knew the data was illegal and tried to quietly erase it, authors can argue willful copyright infringement, which raises the statutory damages ceiling from $30,000 to $150,000 per infringed work and leaves OpenAI in a much weaker legal position.

OpenAI originally told the court the datasets were removed due to non-use, then later argued that everything about the deletion was privileged. The judge treated this change in story as a waiver, opening Slack channels such as “project clear” and “excise libgen” to review.

This ruling shifts power toward authors in AI copyright cases and sends a clear signal: how labs talk about scraped data, shadow libraries, and cleanup projects inside Slack or other tools can later decide whether they face normal damages or massive liability.

💰 Leak confirms OpenAI is preparing ads on ChatGPT for public roll out.

ChatGPT’s Android beta now contains an internal “ads feature” for search, suggesting OpenAI is about to turn the chat interface into a full ad platform similar to search ads, but sitting directly on top of user prompts and intent. The leak comes from Android app version 1.2025.329, which contains strings such as “bazaar content”, “search ad”, and “search ads carousel” that look like templates for sponsored product cards and swipeable ad carousels tied to search-style answers.

Right now these hooks appear scoped to search results, so the first step is likely sponsored answers or product rows blended into responses that already pull from the web, rather than banners dropped randomly into every conversation. If OpenAI keeps ads inside the search layer, it can target them using extremely rich signals such as the current query, chat history, preferences, and even long-running projects, a different level of intent than classic keyword search. The scale is huge: ~800M weekly users, 2.5B prompts per day, and traffic estimates of around 5B to 6B visits per month, so even a very small ad load can turn into a serious revenue stream.
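To see why even a small ad load matters at this scale, here is a quick arithmetic sketch. Only the 2.5B prompts/day figure comes from the text; `search_share` and `ad_load` are hypothetical fractions chosen purely for illustration, not reported numbers, and no revenue is estimated.

```python
PROMPTS_PER_DAY = 2.5e9  # figure quoted in the text

def sponsored_slots_per_day(search_share, ad_load, prompts_per_day=PROMPTS_PER_DAY):
    """search_share: fraction of prompts that trigger a search-style answer (hypothetical).
    ad_load: fraction of those answers that carry a sponsored unit (hypothetical)."""
    return prompts_per_day * search_share * ad_load

daily = sponsored_slots_per_day(search_share=0.10, ad_load=0.05)
print(f"{daily / 1e6:.1f}M sponsored slots/day, {daily * 365 / 1e9:.2f}B/year")
```

With these made-up fractions, only 1 in 200 prompts carries an ad, yet that still means millions of highly targeted placements per day.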

🧮 Deep Dive Article: How TPUs deliver 4x better cost-performance than Nvidia GPUs for AI inference workloads

TPUs deliver 4x better cost-performance than Nvidia GPUs for AI inference workloads

Inference costs 15x more than training over a model’s lifetime and is growing exponentially

By 2030, inference is projected to consume 75% of all AI compute resources (a $255 billion market)

The central point is that inference dominates lifetime cost: GPT-4-class models cost around $150M to train but on the order of $2.3B per year to keep answering user queries. Nvidia GPUs such as the H100 are excellent for flexible training workloads, yet their general-purpose design adds extra control logic and memory movement that burns energy during simple forward passes, making long-running inference relatively expensive.
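Using only the two dollar figures quoted above, the ratio is easy to work out. Note that $2.3B per year against $150M is already about 15x after a single year of serving, so over a multi-year lifetime the gap would be larger still. The function names are illustrative.

```python
TRAIN_COST_USD = 150e6          # one-off training cost quoted above
INFERENCE_USD_PER_YEAR = 2.3e9  # annual serving cost quoted above

def inference_to_training_ratio(years):
    """Cumulative serving cost divided by the one-off training cost."""
    return INFERENCE_USD_PER_YEAR * years / TRAIN_COST_USD

def inference_share_of_spend(years):
    """Fraction of total (training + serving) spend that goes to inference."""
    serving = INFERENCE_USD_PER_YEAR * years
    return serving / (serving + TRAIN_COST_USD)

for years in (1, 3):
    print(years, round(inference_to_training_ratio(years), 1),
          round(inference_share_of_spend(years), 3))
```

Even after one year, inference accounts for roughly 94% of combined spend, which is why per-query hardware efficiency dominates the economics.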

Google TPUs are application-specific chips for tensor math that use systolic arrays and aggressive power engineering, so they consume roughly 60% to 65% less energy and reach about 4x better performance per dollar on transformer inference than the H100 in benchmarks. Real-world usage backs this up: Midjourney reported about 65% lower inference spend after moving to TPUs, Anthropic has committed to as many as 1M TPUs, and other large operators such as Meta, Salesforce, and Cohere, along with Google’s own Gemini workloads, are shifting more traffic to TPU pods as inference is projected to reach roughly 75% of total AI compute by 2030.
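Performance per dollar converts to spend reciprocally: a 4x gain means the same workload costs a quarter as much (75% less), while a reported 65% cut in spend corresponds to an effective gain of about 2.9x. A small sketch, reusing the $2.3B/year serving figure quoted above purely as an illustrative input; the function names are hypothetical.

```python
def cost_after_switch(current_cost, perf_per_dollar_gain):
    """Cost of the same workload on hardware doing `perf_per_dollar_gain` times more work per dollar."""
    return current_cost / perf_per_dollar_gain

def implied_gain(savings_fraction):
    """Perf-per-dollar gain implied by a reported fractional cut in spend."""
    return 1.0 / (1.0 - savings_fraction)

annual_gpu_bill = 2.3e9  # illustrative input: the annual serving figure quoted above
print(cost_after_switch(annual_gpu_bill, 4.0))  # a quarter of the bill, i.e. a 75% saving
print(round(implied_gain(0.65), 2))             # gain implied by a 65% spend reduction
```

The two framings are worth keeping apart: "4x better cost-performance" and "65% lower spend" describe different effective gains, and the second is the more conservative real-world number.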

That’s a wrap for today, see you all tomorrow.