🐋 DeepSeek-R1 Buzz Intensifies: Decoding the Hype and Real Impact - Analysis.

DeepSeek-R1 hype decoded, GroqCloud distills Llama 70B, new RL strategy, AI chip wars, Perplexity’s Sonar upgrade, $6K home inference, Hailuo’s T2V-01, and AI safety report.

Read time: 9 min 58 seconds

📚 Browse past editions here.

( I write daily for my 112K+ AI-pro audience, with 4.5M+ weekly views. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (29-Jan-2025):

🐋 DeepSeek-R1 Buzz Intensifies: Decoding the Hype and Real Impact - Analysis.

💰 “AI isn’t just about smart models—it’s about who can afford millions of chips” - says Anthropic CEO

🛠️ GroqCloud Makes DeepSeek R1 Distill Llama 70B, A version of Llama-3.3 fine tuned (distilled) with outputs from DeepSeek R1, Available

🐋 A new DeepSeek RL Replication Strategy: Small Data, Big Gains

🗞️ Byte-Size Briefs:

DeepSeek-R1 now available on-prem via DellTech and Hugging Face.

Perplexity AI integrates DeepSeek-R1 into Sonar API with grounded search.

Running DeepSeek-R1 at home costs $6K with high-end hardware.

Hailuo AI unveils T2V-01-Director for natural language camera control.

30 countries publish first International AI Safety Report on risks.

🐋 DeepSeek-R1 Buzz Intensifies: Decoding the Hype and Real Impact

First off, congrats to the DeepSeek team!👏 Its truly a Sputnik Moment for AI. And Thank you to them for open-sourcing the model and the detailed Technical Report.

They independently arrived at core ideas as some of the biggest names in the field like OpenAI or Anthropic. They proved out that having separate paradigms for pre-training and reasoning gives them huge advantage.

In super simple terms these were their training pipeline.

Step 1: DeepSeek-R1-Zero, which leverages RL directly on the DeepSeek V3 base model without any SFT data

Step 2: DeepSeek-R1, which initiates cold start and the same RL from a checkpoint fine-tuned with thousands of long Chain-of-Thought (CoT) examples

Step 3: DeepSeek Distillation, a distillation process to transfer reasoning capabilities from DeepSeek-R1 to smaller dense models.

In case you want more on DeepSeek-R’s training and architecture, I have a detailed writeup on that here.

Now, about the buzz and some misconceptions floating around.

The rumour mill says DeepSeek training cost was just ~$6M, but I think that’s just not the case. The training could not have been completed within ~$6M dollars. The compute for just the base model, without any Reinforcement Learning, already hit $5.5M in GPU hours. And that's not even counting all the extra runs, data generation, and the full DeepSeek R1 training.

DeepSeek isn't some small side project either. They are backed by High-Flyer, a serious Chinese hedge fund managing billions. We are talking about a team stacked with talent, including math and physics Olympians. And GPUs? Forget just a few, they are working with around 50,000 of them.

The DeepSeek R1 is a massive 671 Billion parameter Mixture of Experts (MoE) model. To run it, you're looking at needing more than 16 x 80GB of memory, meaning serious hardware like 16 H100s. And yes, the 671B model is super impressive. They have been doing solid open source work for a while now.

There are also "distilled" versions out there, smaller models fine-tuned from Qwen and Llama. These are trained on 800k samples, without Reinforcement Learning, and aren't the actual "R1". The smallest one, at 1.5B parameters, can run locally, but it’s nowhere near the full R1's capability.

If you are using the hosted DeepSeek chat platform, be aware of their Terms of Service. They might be using your data to train future models. But on the bright side, DeepSeek's open-source approach is a win for everyone in the long run. Hugging Face is even working on tools to fully reproduce their pipeline, aiming for complete open access.

DeepSeek R1 is the first top-tier model from outside the US, trained with less compute, and they've open-sourced the weights. It shows serious efficiency gains are possible in training, and that reasoning models can be replicated. While it might not be quite at the level of the absolute top models like o1-pro, it's surprisingly powerful.

Don't underestimate the compute implications though. These advanced models, especially for inference, will drive up global compute demand. Running AI assistants for billions, especially with added features like video understanding and reasoning, needs massive infrastructure. As Yann Lecun pointed out, much of the AI investment is now shifting to inference infrastructure, not just training.

Also some interesting side-developments around DeepSeek. On the security front, the U.S. Navy has actually banned its personnel from using DeepSeek AI, citing security and ethical worries about this Chinese platform, which shows the increasing sensitivity around foreign tech in critical sectors. Adding fuel to the fire, OpenAI is reportedly probing DeepSeek for allegedly using 'distillation' techniques on OpenAI's own models to train their open-source competitor (DeepSeek-R1).

So looks like, from now onwards the restriction on Distillation training will be more stringent from all the top labs. " Distillation is a common practice in the industry but the concern was that DeepSeek-R1 may be doing it to build its own rival model, which is a breach of OpenAI’s terms of service." - Reports Financial Times.

💰 “AI isn’t just about smart models—it’s about who can afford millions of chips” - says Anthropic CEO

🎯 The Brief

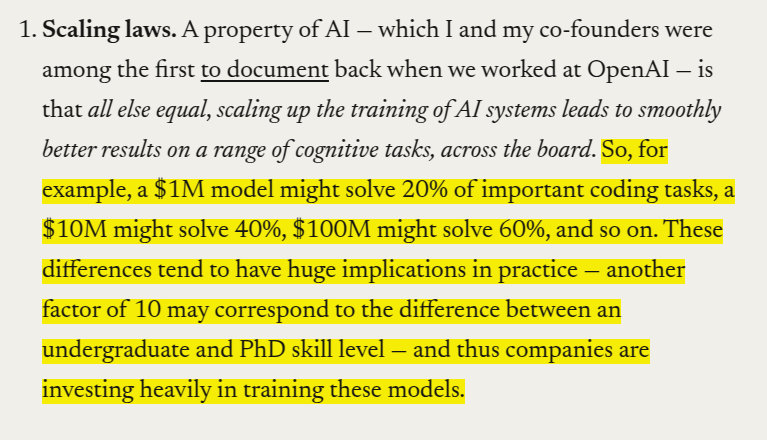

Dario Amodei, CEO of Anthropic publishes a lengthy blog saying that AI race follows predictable scaling laws, and DeepSeek's success aligns with expected cost reduction trends. The real concern is whether China will obtain millions of chips by 2026-2027, potentially shifting global AI leadership. Export controls remain the key mechanism to prevent China from achieving AI parity.

⚙️ The Takeaways

→ Scaling laws dictate AI progress: More compute leads to better model performance, with increasing investment in training. Cost efficiency gains don’t reduce spending; they fuel training of even larger models.

→ DeepSeek’s success isn’t a breakthrough: Their "DeepSeek-V3" model matched US models from 7-10 months ago but at lower cost due to engineering efficiencies. Their "R1" model, which adds RL-based reasoning, is comparable to OpenAI's o1-preview, not an outlier in AI progress.

→ Export controls are working: DeepSeek's 50,000-chip cluster includes a mix of H100, H800, and H20 chips. Some were legally acquired before bans, while others may have been smuggled—demonstrating that controls are limiting access to top-tier chips.

→ China lacks the chip supply for AI dominance: While DeepSeek had enough compute for innovation, scaling to human-level AI requires millions of chips and tens of billions of dollars. Export controls are essential to prevent China from reaching that scale.

→ AI leadership determines geopolitical power: A bipolar world (US and China both having cutting-edge AI) risks Chinese military and strategic advantage. A unipolar world (US dominance) could lead to a lasting technological edge.

→ No reason to lift export controls: DeepSeek’s models follow the expected cost trends; they don’t indicate a fundamental shift in AI economics. Restricting China's access to high-end AI chips remains crucial for US national security.

🛠️ GroqCloud Makes DeepSeek R1 Distill Llama 70B, A version of Llama-3.3 fine tuned (distilled) with outputs from DeepSeek R1, Available

🎯 The Brief

GroqCloud™ has launched DeepSeek-R1-Distill-Llama-70B, a fine-tuned version of Llama 3.3 70B, now supporting a 128k context window. This model excels in mathematical and factual reasoning, surpassing OpenAI’s GPT-4o in certain benchmarks. It scores 94.5% on MATH-500 and 86.7% on AIME 2024, making it one of the top models for advanced math. Groq ensures ultra-fast inference and data privacy, as queries are processed entirely on US-based infrastructure. Currently in preview mode, it is available for evaluation before its production release.

⚙️ The Details

→ DeepSeek-R1-Distill-Llama-70B is a distilled version of DeepSeek-R1, optimized within Llama 70B’s architecture. It delivers better accuracy than the base Llama 70B, particularly in math and coding tasks.

→ The model outperforms others in benchmarks: 94.5% on MATH-500, 86.7% on AIME 2024, 65.2% on GPQA Diamond, and 57.5% on LiveCode Bench.

→ Reasoning models like DeepSeek-R1 use chain-of-thought (CoT) inference, meaning each step builds on previous outputs, making low-latency inference crucial. Which is what GroqCloud optimizes for instant responses.

→ Data security is a priority. All inputs/outputs are stored temporarily in memory and are not retained after the session. Queries remain within US-based servers.

→ Best practices for usage: Temperature settings between 0.5-0.7 balance mathematical precision and creativity. Zero-shot prompting is recommended over few-shot.

→ Developers get 2X rate limits on GroqCloud’s Dev Tier, enhancing accessibility for testing and building applications. You can try it at console.groq.com.

🐋 A new DeepSeek RL Replication Strategy: Small Data, Big Gains

🎯 The Brief

Researchers replicated DeepSeek-R1-Zero and DeepSeek-R1 using only 8K math examples and a 7B model (Qwen2.5-Math-7B), achieving remarkable performance in math reasoning tasks. Their rule-based reward approach, without supervised fine-tuning (SFT) or reward models, boosted accuracy by 10-30% on AIME, MATH, and AMC benchmarks. The "Zero" model saw an 18.6% improvement, while SFT-warmed RL gained 20.7%. Surprisingly, this method rivals models like rStar-Math and Eurus-2-PRIME that use 50x more data and complex reward mechanisms.

⚙️ The Details

→ Model Setup: The team used Qwen2.5-Math-7B as the base model and trained it using PPO instead of GRPO, skipping complex reward models, MCTS, or extensive SFT.

→ Key Results: The Zero RL model (without SFT) reached 33.3% (AIME), 62.5% (AMC), and 77.2% (MATH). This outperforms Qwen2.5-math-7B-instruct and matches rStar-MATH and PRIME, despite using far less data.

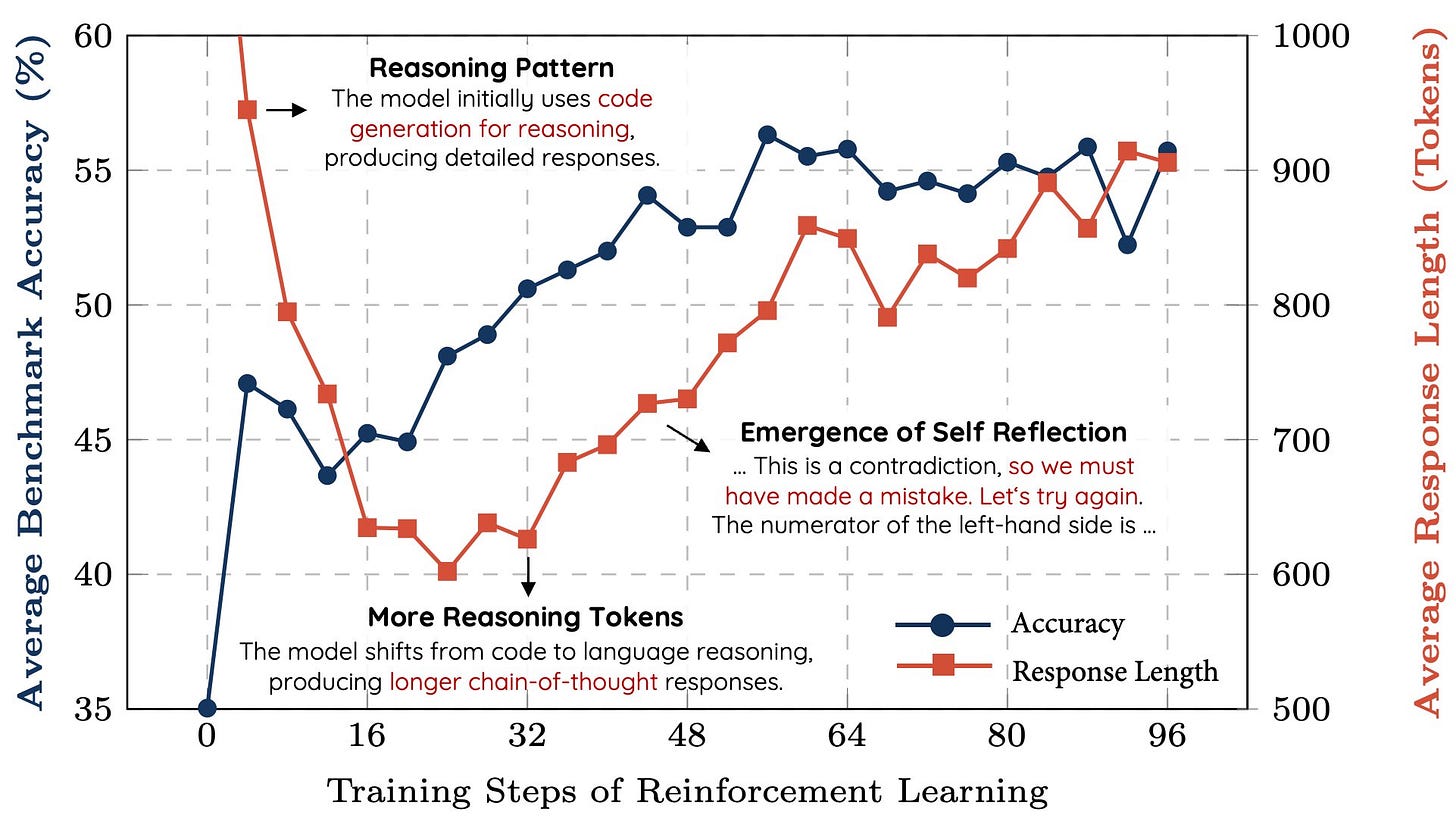

→ Emerging Self-Reflection: The model developed "regret-like" behavior, learning to refine and correct its reasoning over training.

→ Minimal Data, Big Gains: Compared to models using millions of samples, this approach uses just 8K examples, proving data efficiency in reinforcement learning.

→ Open-Source Availability: Full training code and methodology are available at their GitHub repository for further exploration.

They found Self-reflection behaviors emerge through RL training, with the model learning to balance shorter, focused outputs early in training and later adopting longer, reflective reasoning for accuracy gains.

The model starts to exhibit a "regret-like" mechanism, where it generates solutions, recognizes potential flaws in those solutions, and corrects itself. This is observed as an increasing ratio of "regret behavior" (e.g., revisiting or improving prior reasoning) relative to the total number of samples during RL training.

Early in training, the model generates shorter, simpler outputs, possibly aiming to optimize immediate performance metrics. As training progresses, it learns to produce longer, more detailed reasoning chains.

The self-reflection isn’t explicitly programmed. Instead, it "emerges" as a side effect of reinforcement optimization. The RL training incentivizes better final outputs, and the model learns that self-correction and detailed reasoning improve its reward, so it adopts those behaviors.

🗞️ Byte-Size Briefs

Deepseek- R1 is now available on-premise through DellTech and Huggingface collaboration.

Perplexity AI integrated DeepSeek R1 into Sonar API with search grounding.

A tweet went viral showing that you need $6,000 to run DeepSeek-R1 at Q8 quantization at home, with the following hardware stack.

- Motherboard: Gigabyte MZ73-LM0 or MZ73-LM1- CPU: 2x AMD EPYC 9004 or 9005 (9115 or 9015 for cost saving)

- RAM: 768GB DDR5-RDIMM (24 x 32GB modules)

- PSU: Corsair HX1000i (or cheaper option, >=400W)

- Heatsink: AMD EPYC SP5 compatible (Ebay/Aliexpress) + optional replacement fans

- SSD: 1TB or larger NVMe SSD

- Case: Enthoo Pro 2 Server (or standard tower case with server motherboard support)

Hailuo AI, a Chinese AI lab released a new video generation model: T2V-01-Director. It lets creators control camera movements using natural language, making AI filmmaking more intuitive. The model improves shot accuracy, reduces randomness, and enables seamless transitions—bringing automated, Hollywood-style cinematography closer to reality for storytellers and digital creators.

📡 The first-ever International AI Safety Report is published, backed by 30 countries and the OECD, UN, and EU. It focuses on general-purpose AI and categorizes risks into malicious use, malfunctions, and systemic risks.

That’s a wrap for today, see you all tomorrow.