🐋 DeepSeek-R1 fails every safety test thrown at it and failed to block any harmful request

DeepSeek-R1 fails every safety test, ByteDance's OmniHuman-1 stuns with lifelike videos, DeepMind proves RL beats fine-tuning, OpenAI eyes smartphone replacement, and AI outsmarts PhDs.

The above podcast on this post today was generated with Google’s Illuminate.

Read time: 7 min 27 seconds

📚 Browse past editions here.

( I write daily for my 112K+ AI-pro audience, with 4.5M+ weekly views. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (4-Feb-2025):

🐋 DeepSeek-R1 fails every safety test thrown at it and failed to block any harmful request

🎨 ByteDance released OmniHuman-1, it’s insanely good model for creating lifelike human videos based on a single human image

🥉 Google DeepMind’s new paper explains why reinforcement learning outperforms supervised fine-tuning for model generalization.

🗞️ Byte-Size Briefs:

OpenAI is reportedly developing an AI device to replace smartphones, according to CEO Sam Altman.

xAI’s Grok Android app enters beta testing in Australia, Canada, India, the Philippines, and Saudi Arabia. Users can pre-register on Google Play.

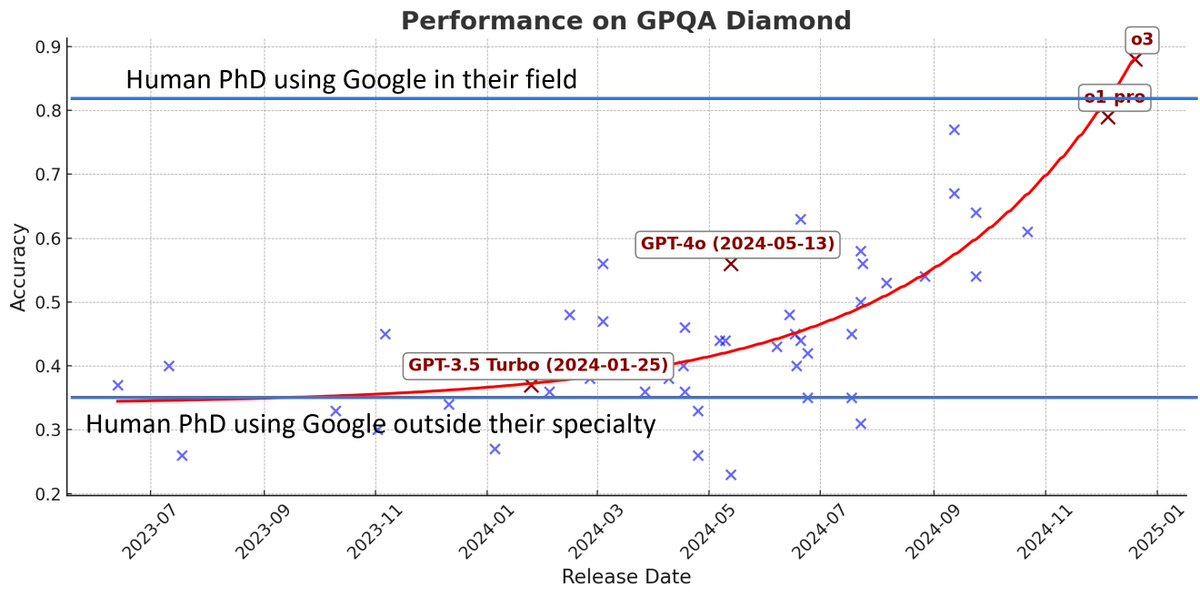

AI now surpasses PhD experts in their own fields, according to viral posts citing data from epoch.ai.

Developers deploy malware-based tarpits like Nepenthes and Iocaine to trap AI web crawlers, waste resources, and mislead models, increasing AI firms’ data collection costs.

🧑🎓 Top Github Repo: Oumi:build state-of-the-art foundation models, end-to-end

📚 Educational Resource: A Little Bit of Reinforcement Learning from Human Feedback

🐋 DeepSeek-R1 is failing every safety test thrown at it, 100% attack success rate

🎯 The Brief

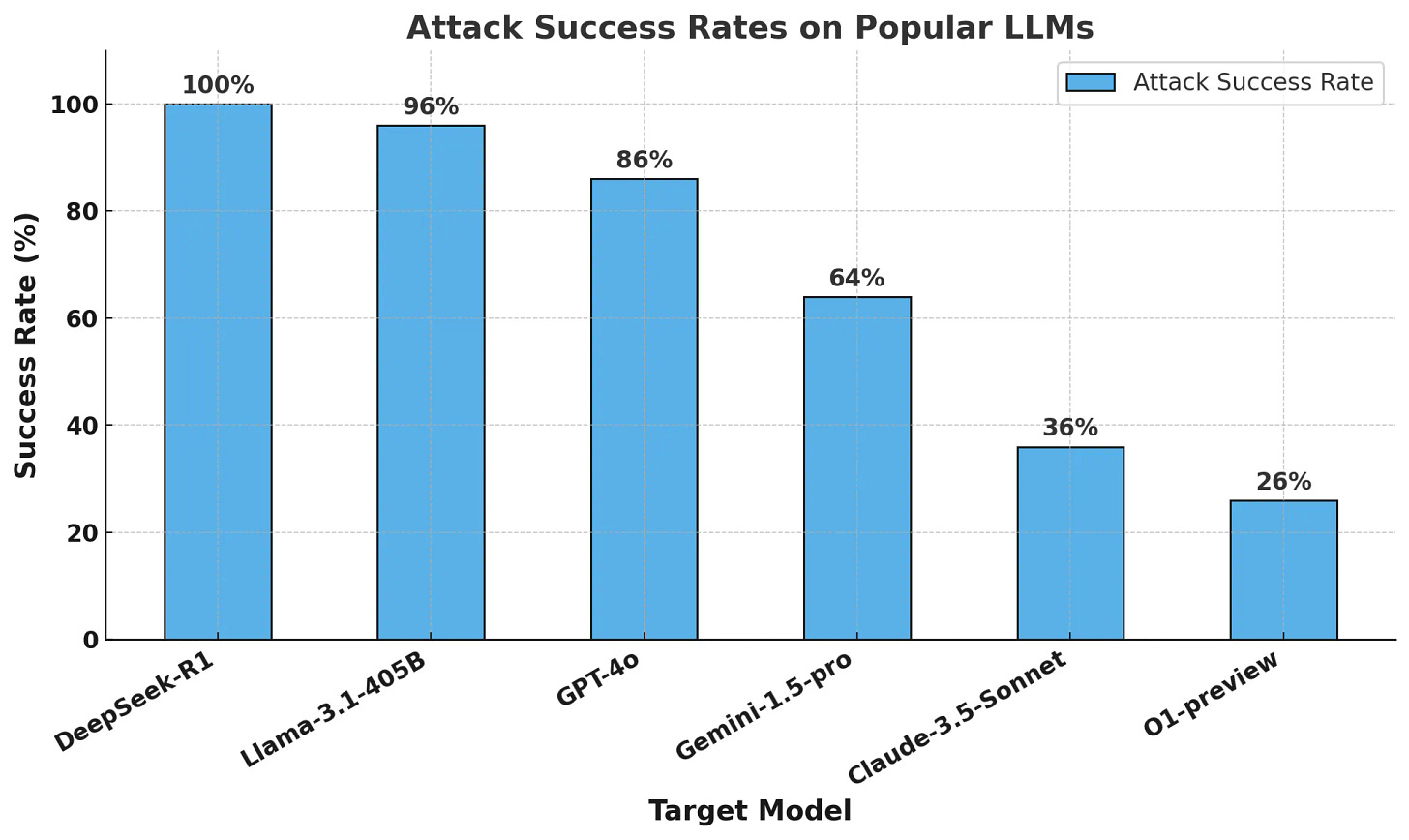

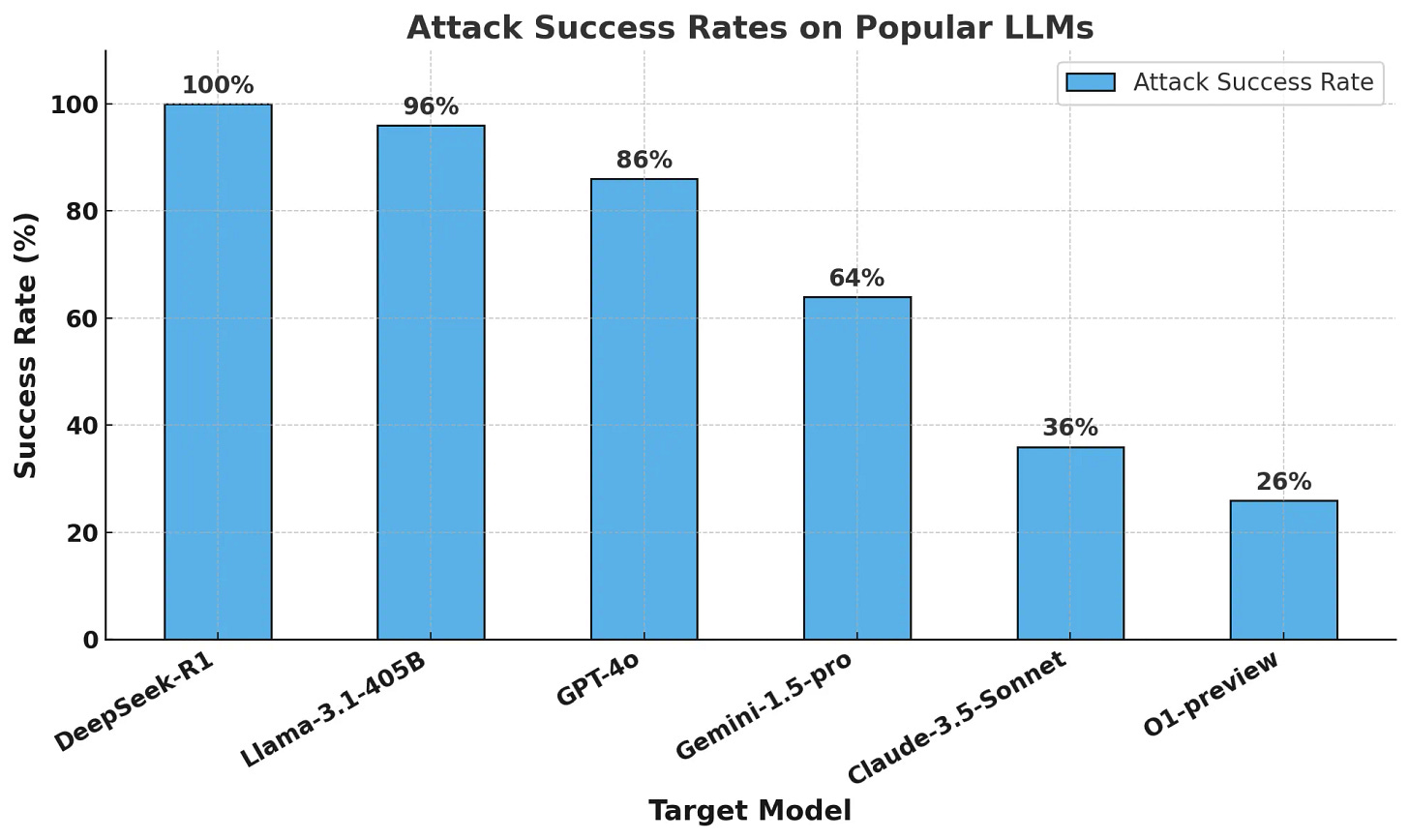

DeepSeek R1, has been found extremely vulnerable to security attacks. A study by Cisco’s Robust Intelligence and the University of Pennsylvania tested the model with 50 harmful prompts from the HarmBench dataset, covering areas like cybercrime and misinformation. Result: 100% attack success rate—it failed to block a single harmful request.

⚙️ The Details

→ Researchers from Cisco applied algorithmic jailbreaking using an automated attack methodology. The 100% attack success rate means DeepSeek R1 failed to block any of the 50 adversarial prompts.

→ Security firm Adversa AI confirmed the results, finding that DeepSeek R1 is susceptible to both basic linguistic tricks and advanced AI-generated exploits.

🎨 ByteDance released OmniHuman-1, it’s insanely good model for creating lifelike human videos based on a single human image

🎯 The Brief

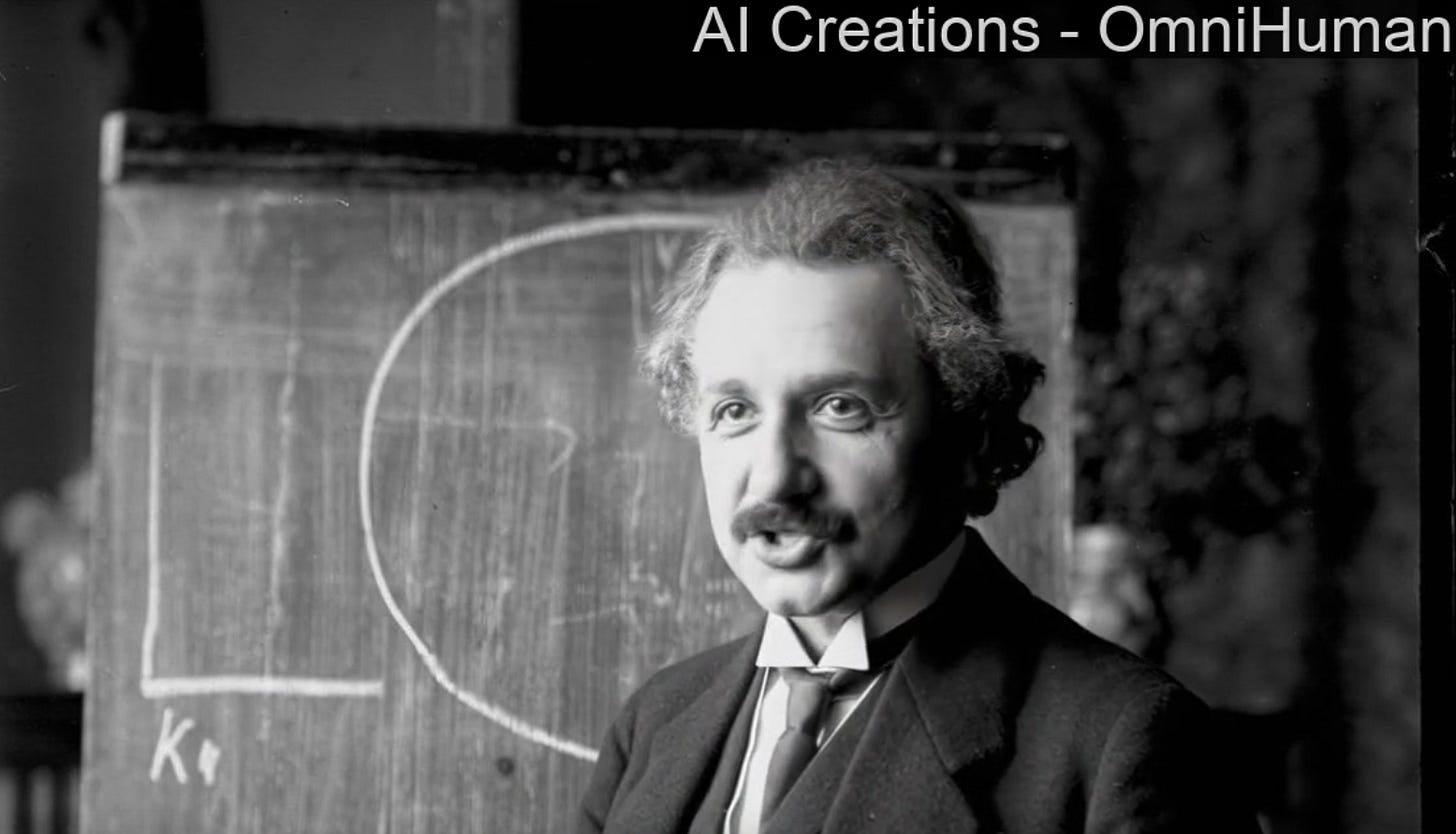

ByteDance's OmniHuman-1 is a Diffusion Transformer-based AI model that generates highly realistic human videos from a single image and audio/video input. Unlike previous models limited to facial or static full-body animation, OmniHuman-1 handles diverse aspect ratios, complex human-object interactions, and pose-driven motion. It outperforms existing methods in realism, achieving state-of-the-art lip-sync accuracy, gesture precision, and expressiveness.

⚙️ The Details

→ OmniHuman-1 extends traditional human animation by using audio, pose, and reference images in a unified training framework, improving realism across portraits, half-body, and full-body animations.

→ The model is built on DiT (Diffusion Transformer) architecture, leveraging multimodal motion conditioning to enhance training efficiency and video quality.

→ Supports video-driven animation, allowing motion replication from existing videos, or a combination of audio and video to control body parts independently.

→ Outperforms competitors (e.g., Loopy, CyberHost, DiffTED) in lip-sync accuracy (5.255 vs. 4.814), FVD (15.906 vs. 16.134), and gesture expressiveness, while supporting varied body proportions in a single model.

→ Handles diverse styles, including cartoons, stylized characters, and anthropomorphic objects, preserving unique motion characteristics.

🥉 Google DeepMind’s new paper explains why reinforcement learning outperforms supervised fine-tuning for model generalization

🎯 The Brief

Google DeepMind’s latest study demonstrates that reinforcement learning (RL) significantly outperforms supervised fine-tuning (SFT) for model generalization in both textual and visual domains. Using two benchmark tasks, GeneralPoints (arithmetic reasoning) and V-IRL (real-world navigation), the researchers show that SFT tends to memorize training data, while RL enables models to adapt to novel, unseen scenarios. The findings reinforce RL’s effectiveness in learning generalizable knowledge for complex multimodal tasks.

⚙️ The Details

→ The study compares SFT and RL in foundation model post-training and their ability to generalize beyond training data. RL is shown to be significantly better at rule-based and visual generalization, whereas SFT exhibits strong memorization tendencies.

→ In GeneralPoints (text-based arithmetic reasoning), RL enables models to compute unseen rule variations, whereas SFT-trained models struggle with novel rule applications.

→ In V-IRL (real-world navigation), RL improves out-of-distribution generalization by +33.8% (44.0% → 77.8%), demonstrating better adaptation to new navigation conditions.

→ RL enhances visual recognition capabilities, a crucial factor in vision-language models (VLMs), while SFT degrades visual recognition performance as it overfits to reasoning tokens.

→ Despite RL’s advantages, SFT remains useful for stabilizing the model’s output format, which helps RL achieve better learning efficiency.

→ The study suggests that scaling up inference-time computation (increasing verification steps) further boosts RL generalization.

→ Without SFT pre-training, RL training fails due to poor instruction-following ability, emphasizing the necessity of a structured initialization before RL fine-tuning.

🗞️ Byte-Size Briefs

OpenAI is reportedly developing a new AI device intended to replace smartphones, as stated by CEO Sam Altman.

The Grok Android app, developed by xAI, has been released for beta testing in Australia, Canada, India, the Philippines, and Saudi Arabia.

A viral post in Reddit and Twitter shows AI’s Exponential progress, which now surpasses human PhD experts in their own field. The data for this comes from epoch.ai

Developers are deploying malware-based tarpits like Nepenthes and Iocaine to trap and poison AI web crawlers that ignore robots.txt. These tools waste resources, mislead AI models with gibberish, and inflate AI companies' data collection costs. While AI firms like OpenAI have countermeasures, the rise of AI poisoning tactics signals growing resistance against unchecked AI scraping.

🧑🎓 Top Github Repo

Oumi:build state-of-the-art foundation models, end-to-end

Oumi is a fully open-source platform designed to train, evaluate, and deploy foundation models end-to-end. It supports models from 10M to 405B parameters, enabling fine-tuning using LoRA, QLoRA, DPO, and other techniques. It integrates with popular inference engines (vLLM, SGLang) and works across laptops, clusters, and cloud platforms (AWS, Azure, GCP, Lambda, etc.). Supports multimodal models like Llama, DeepSeek, and Phi.

⚙️ Key Benefits

→ Oumi simplifies model training with a unified API, allowing seamless model fine-tuning, data synthesis, and evaluation. Supports both open-source and commercial APIs like OpenAI, Anthropic, and Vertex AI, making it highly flexible.

→ Enables fast inference with optimized engines such as vLLM and SGLang, ensuring efficient deployment. Installation is straightforward with pip install oumi, supporting both CPU and GPU setups.

→ Supports cloud-based training with direct job execution on AWS, Azure, GCP, and Lambda. Includes prebuilt ready-to-use training recipes for LLM fine-tuning, distillation, evaluation, and inference.

→ 100% open-source under Apache 2.0 license, with an active community on Discord and GitHub.

📚 Educational Resource: A Little Bit of Reinforcement Learning from Human Feedback

This work systematically breaks down RLHF’s fundamental algorithms and optimizations for training more stable and efficient LLMs.

A concise overview of Reinforcement Learning from Human Feedback (RLHF) with a focus on policy gradient algorithms like PPO, REINFORCE, and GRPO. These algorithms optimize LLMs by directly updating policies based on rewards instead of storing them in replay buffers. The work explores new RLHF variants such as REINFORCE Leave One Out (RLOO) and Group Relative Policy Optimization (GRPO), which improve stability and efficiency for LLMs.

⚙️ Key Learning Takeaways

→ Policy gradient methods are core to RLHF, optimizing models by computing gradients based on expected returns from current policies. These include REINFORCE, PPO, and GRPO, which control policy updates differently.

→ Vanilla Policy Gradient methods suffer from high variance, mitigated using advantage functions that normalize rewards. REINFORCE is a Monte Carlo-based gradient estimator, operating without a value function.

→ REINFORCE Leave One Out (RLOO) improves stability by using batch-level reward baselines instead of full averages. This works well in domains where multiple completions per prompt are used.

→ Proximal Policy Optimization (PPO), a widely used RL method, controls updates via clipping to prevent excessive policy shifts. PPO requires a value network to estimate advantages.

→ Group Relative Policy Optimization (GRPO), introduced in DeepSeekMath, simplifies PPO by removing the need for a value function. This reduces memory consumption and avoids challenges in learning value functions for LLMs.

→ Implementation details cover practical aspects of log-probability ratios, KL penalties, and clipping constraints, which refine policy optimization.

→ Double regularization in RLHF ensures stable learning by balancing updates with KL divergence constraints, preventing drastic policy shifts.

That’s a wrap for today, see you all tomorrow.