🇨🇳 DeepSeek-R1’s original paper was re-published in Nature yesterday as the cover article

DeepSeek-R1 hits Nature’s cover, Google Chrome gets major AI boost, and IBM drops a compact doc-focused VLM.

Read time: 10 min

📚 Browse past editions here.

( I publish this newsletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (19-Sept-2025):

🇨🇳 DeepSeek-R1’s original paper was re-published in Nature yesterday as the cover article - this is Nature Magazine's tribute to one THE most important AI paper of recent times.

👨🔧 The DeepSeek R1 Nature paper’s supplementary notes are a goldmine across 83 solid pages.

🧭 Google Chrome is getting its biggest AI upgrade.

🖇️ IBM just released a tiny document VLM, Granite-Docling-258M.

🇨🇳 DeepSeek-R1’s original paper was re-published in Nature yesterday as the cover article - this is Nature Magazine's tribute to one THE most important AI paper of recent times.

On January 27, 2025, in reaction to the DeepSeek R1 release and its implications for the AI market:

Nvidia shares plunged nearly 17%, equating to a loss of approximately $589 billion in market value.

Broadcom shares fell by 17.4%.

Microsoft and Alphabet saw declines of 2.1% and 4.2%, respectively.

The Nasdaq Composite index dropped by 2.8%.

The S&P 500 fell 1.7% by midday.

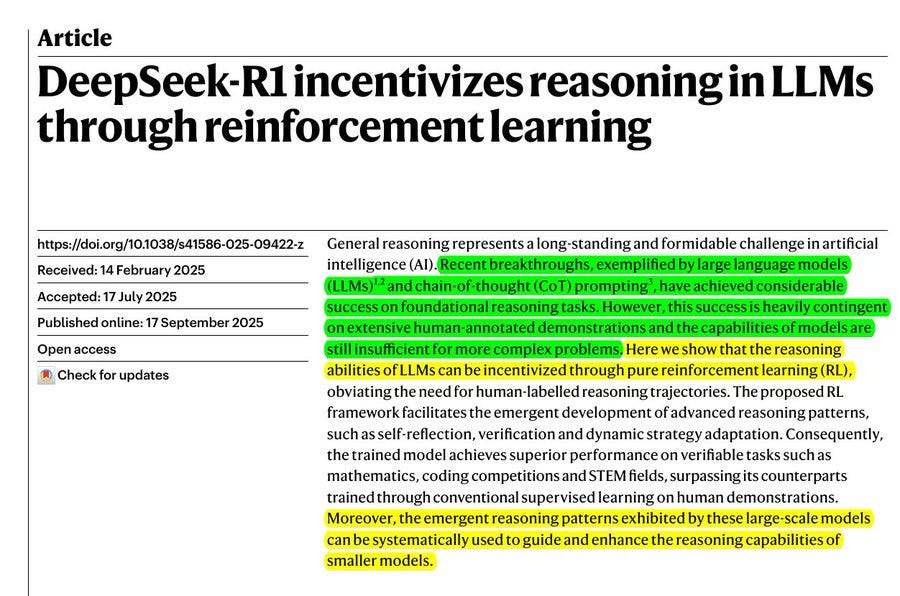

The paper shows that pure Reinforcement Learning with answer-only rewards can grow real reasoning skills, no human step-by-step traces required.

So completely skip human reasoning traces and still get SOTA reasoning via pure RL. It’s so powerful revelation, because instead of forcing the model to copy human reasoning steps, it only rewards getting the final answer right, which gives the model freedom to invent its own reasoning strategies that can actually go beyond human examples.

Earlier methods capped models at what humans could demonstrate, but this breaks that ceiling and lets reasoning emerge naturally. Those skills include self-checking, verification, and changing strategy mid-solution, and they beat supervised baselines on tasks where answers can be checked.

Models trained this way also pass those patterns down to smaller models through distillation. AIME 2024 pass@1 jumps from 15.6% to 77.9%, and hits 86.7% with self-consistency.

⚙️ The Core Concepts

The paper replaces human-labelled reasoning traces with answer-graded RL, so the model only gets a reward when its final answer matches ground truth, which frees it to search its own reasoning style. The result is longer thoughts with built-in reflection, verification, and trying backups when stuck, which are exactly the skills needed for math, coding, and STEM problems where correctness is checkable. This matters because supervised traces cap the model at human patterns, while answer-graded RL lets it discover non-human routes that still land on correct answers.

🧪 How R1-Zero is trained

R1-Zero starts from DeepSeek-V3 Base and uses GRPO, a group-based variant of PPO that compares several sampled answers per question, then pushes the policy toward the higher-reward ones while staying close to a reference model. Training enforces a simple output structure with separate thinking and final answer sections, and uses rule-based rewards for accuracy and formatting, avoiding neural reward models that are easy to game at scale. This minimal setup is intentional, because fewer constraints make it easier to observe what reasoning behaviours show up on their own.

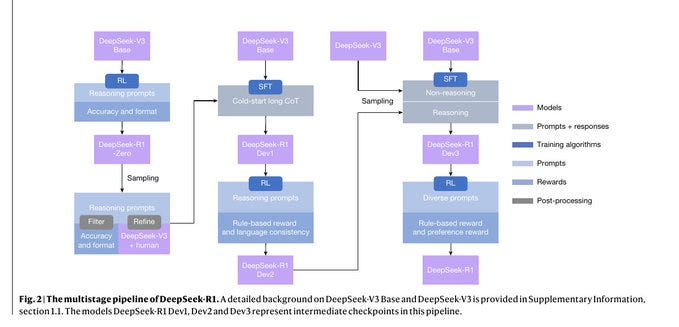

The multistage pipeline of DeepSeek-R1. First, R1-Zero is trained with pure RL using only accuracy and format rewards.

Then, Dev1 adds supervised fine-tuning with a small “cold-start” dataset to clean up readability and language. Next, Dev2 reintroduces RL with rule-based rewards to recover reasoning performance.

Finally, Dev3 combines reasoning and non-reasoning data with supervised tuning, and then applies RL again using both rule-based and preference rewards. The result is the full R1 model, which keeps strong reasoning but also improves language quality and instruction following.

So what exactly is NEW in DeepSeek-R1's self-improvement ?

The usual way of RL (before DeepSeek-R1)

Normally, to make a model good at reasoning, humans had to write out step-by-step reasoning traces for the model to copy. Think of it like teaching a kid math by showing every single line of the solution. The problem is, this caps the model at whatever humans show it. If humans make mistakes, or if the human way is not the smartest, the model never goes beyond that.

What DeepSeek-R1 did differently

Instead of spoon-feeding reasoning steps, they only gave the model the question and the final correct answer. The model then tries different reasoning paths on its own. If its final answer matches the correct one, it gets a reward. If not, it doesn’t.

Over thousands of problems, the model figures out which kinds of “thinking styles” actually lead to correct answers more often. This pushes it to invent new strategies, like checking its work, pausing with “wait,” or backtracking when it feels stuck.

Why no humans are needed

Because the only thing required is a verifier that checks whether the final answer is right or wrong. For math, the answer is either correct or incorrect.

For code, you can just run the program and see if it passes tests. So, unlike before, no human has to mark “this reasoning step is good” or “this one is bad.” The system automatically gives feedback, and the model evolves reasoning patterns on its own.

But how does this method know if the answer is correct or not. Correctness is known because they train only on tasks with automatic checkers, math answers are graded by a rule and code is judged by compilers and test cases, so a simple pass or fail produces the reward.

First note, DeepSeek-R1 isn’t trained on all types of questions. It’s trained mainly on tasks where a computer can automatically check the answer without needing a human.

In math problems, there’s a known correct answer. The model’s output is compared directly to that number or expression. If it matches, reward = 1. If not, reward = 0.

In coding tasks, the model’s solution is run against a test suite. If the program compiles and passes the tests, it’s correct. If it fails, it’s wrong.

In other STEM problems, similar verifiers are used, like symbolic solvers, unit checkers, or predefined datasets.

So the “judge” is not a human, it’s a simple automated checker. The model never needs humans to approve steps, it only sees whether the final answer matches the ground truth or passes tests, then it shifts toward the answer styles that win.

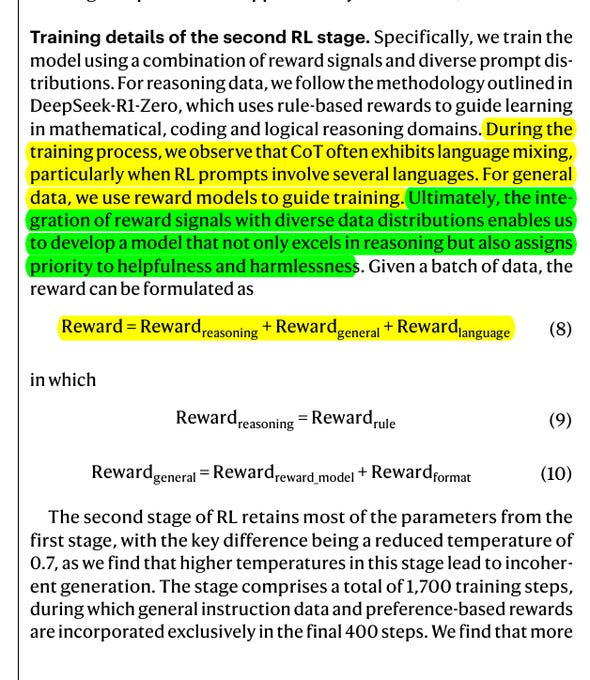

For problems without a reliable verifier, like open-ended writing or unknown frontier facts, they state this approach does not scale, they either add a small supervised set or limit RL, so answers beyond verification remain out of scope for now. This section from the DeepSeek R1's paper shows the second RL stage where they blend different rewards and varied prompts to make the model both smart and helpful.

That equation just says the model’s training score is the sum of 3 simple scores. Reasoning score is a strict rule check that says “correct” for a right final answer or passing tests, and “incorrect” otherwise. which creates a clean reasoning reward.

For general language tasks they score helpfulness and safety with a reward model, add a simple format check, and include a language-consistency reward to avoid mixing languages. All 3 signals are added together so the model is pushed to solve correctly, speak clearly, and stay aligned.

They lower sampling temperature to 0.7 for stability, run 1,700 steps, and include preference rewards only in the last 400 steps to reduce reward hacking. Net effect, it keeps the strong solver behavior while polishing readability, safety, and instruction following.

This chart says the DeepSeek-R1's accuracy on AIME 2024 keeps rising during training with pure reinforcement learning. Pass@1 climbs from 15.6% to 77.9%, and with self consistency over 16 samples it reaches 86.7%.

It beats the average human level partway through training, which shows answer-only rewards are enough to grow real reasoning. The extra boost from self consistency means sampling more candidate solutions and picking the most consistent one helps a lot.

👨🔧 The DeepSeek R1 Nature paper’s supplementary notes are a goldmine across 83 solid pages.

Everything from training data and hyperparameters to why the base model matters are covered here.

Reinforcement learning, not just supervised fine-tuning, is what pushes DeepSeek‑R1 to generate long, reflective reasoning that actually fixes its own mistakes. They train with Group Relative Policy Optimization, drop the value model, manage divergence to a moving reference, and let the model scale test‑time thinking to crack harder problems.

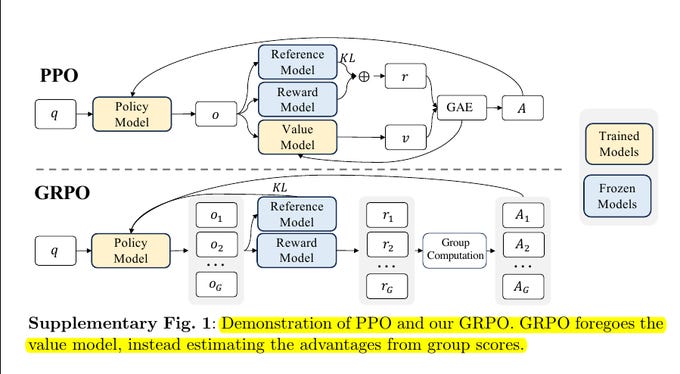

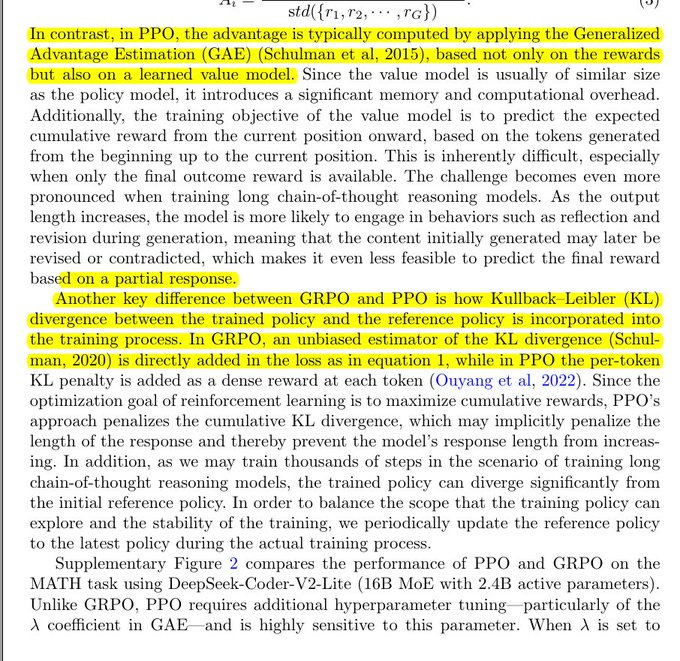

🔁 GRPO, not PPO

Group Relative Policy Optimization samples a small group of answers for each prompt, scores them, normalizes those scores within the group, and updates the policy toward the better ones while skipping a separate value model. They control drift with an unbiased Kullback–Leibler estimate against a reference policy and periodically refresh that reference, which avoids over‑penalizing long responses and cuts memory and compute. On the same backbone, Proximal Policy Optimization needed careful lambda tuning to approach GRPO on math, which made GRPO the lower‑friction choice in practice.

The difference between standard PPO and the GRPO method that DeepSeek-R1 used. In PPO, the policy model generates outputs, then each output is scored by a reward model, and a value model is used to estimate how good the output is compared to the baseline.

That value model is what produces the advantage signal, which tells the optimizer whether the chosen action was better or worse than expected. PPO then updates the policy model using that signal, while keeping it close to a frozen reference model through a KL penalty.

In GRPO, the value model is completely removed. Instead, the policy generates a group of outputs for the same prompt. Each of those outputs is scored by the reward model.

Then, instead of estimating advantage with a value function, they normalize the rewards within the group. This means each output’s advantage is computed directly relative to its peers in that batch.

The optimizer then uses those group-normalized scores to push the policy toward the better answers, still with a KL penalty to avoid drifting too far from the reference. So the main change is that GRPO replaces the value model with a simpler group-based comparison, which reduces complexity, saves compute, and avoids the bias problems that come with training a separate value network.

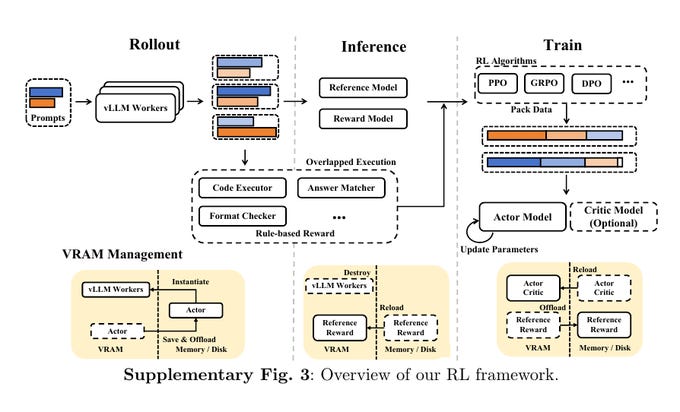

🧱 The RL pipeline of DeepSeek-R1

Training runs as 4 modules, rollout with vLLM workers, reward inference, rule‑based scoring, and optimization, with actors, reference, and reward models aggressively offloaded between stages to free VRAM. They use expert parallelism and hot‑expert replication for the MoE actor, multi‑token prediction for fast decoding, length‑aware best‑fit packing, and DeepSeek’s DualPipe for efficient pipeline parallelism. Slow checks like code executors and format validators run asynchronously so their latency overlaps with rollout and inference.

📦 Description of RL Data and Tasks for DeepSeek-R1

The reinforcement set mixes math 26K, code 17K plus 8K bug‑fix tasks, STEM 22K, logic 15K, and general 66K for helpfulness and harmlessness. Math, STEM, and many logic items use verifiable answers for binary rewards, code uses unit tests and executors, and the general split relies on reward models trained on ranked pairs. Later they add about 800K supervised samples, roughly 600K reasoning and 200K general, to stabilize style and non‑reasoning behaviors.

🧊 Cold start, style, and language consistency for DeepSeek R1 training.

They prime the actor with a small set of long chain‑of‑thought examples written in first‑person, which is a readability choice for users and not a claim of agency. Raw traces are filtered for correctness and format, then DeepSeek‑V3 rewrites them into concise solutions that keep the original reasoning steps, followed by human verification. To stop language mixing, they explicitly ask to translate the thinking process to the query language and add a language consistency reward that stabilizes language across steps with only a small coding tradeoff.

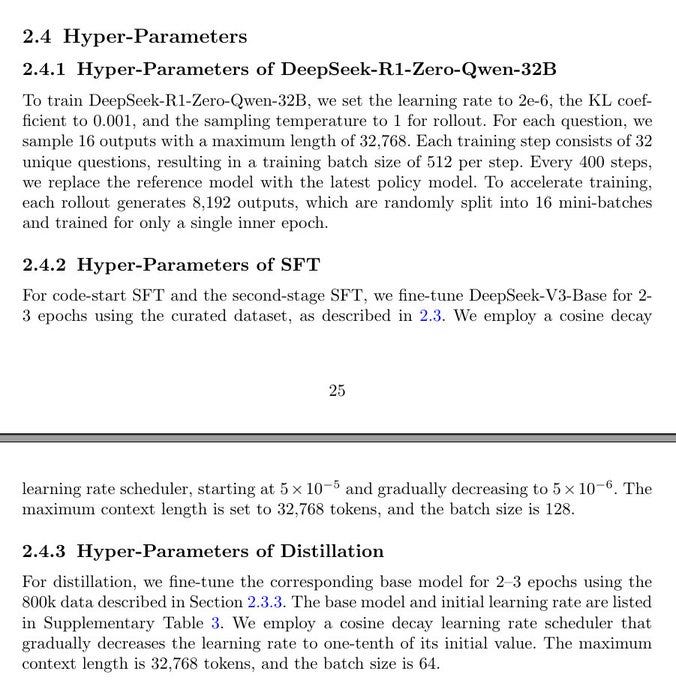

⚙️ Key hyperparameters and compute for DeepSeek R1 training

For the 32B R1‑Zero run, KL coefficient 0.001, rollout temperature 1, 16 samples per question, 32,768 max tokens, 32 unique prompts per step for an effective batch 512, with the reference policy refreshed every 400 steps and 8,192 rollouts split into 16 mini‑batches per outer step. They train on 64×8 H800 GPUs for about 198 hours for R1‑Zero and about 80 hours for R1, totaling 147K H800 GPU hours which they price at $294K at $2 per GPU hour.

🪞 What emerges during RL

The frequency of reflective tokens like “wait”, “verify”, and “check” rises by roughly 5–7x during training, and specific behaviors such as “wait” spike later, hinting that reflection strategies mature at distinct stages.

Emergence of reflective behaviours in training of DeepSeek-R1. The left chart shows the total count of reflection words rising across training steps, with bigger spikes over time.

That means the model starts using self-monitoring phrases like wait, check, and verify more often while solving. The right chart zooms in on the single word wait, which stays near zero for many steps then jumps sharply late in training.

That late jump signals a behavior that appears during learning, not something baked in from the start. The noisy ups and downs are exploration, but the baseline keeps trending up, which matches a reward that favors careful reasoning.

Why naive PPO baselines may not lead to inference-time scaling as per the DeepSeek-R1 paper and why GRPO fixes it. PPO needs a separate value model to guess future reward from partial text, which is expensive and shaky when the only reward arrives at the very end, GRPO removes that value model entirely.

This makes credit assignment for long chain-of-thought brittle under PPO, because the early tokens often get revised later, so partial predictions of “how good this answer will end up” are unreliable, this is exactly the case GRPO is designed to avoid by scoring whole sampled answers. Typical PPO also applies a per-token KL penalty to a reference policy, so the total KL cost grows with length, which implicitly pushes the policy toward shorter outputs, this length tax is absent when GRPO uses a single KL term on the group. (inference based on the standard PPO-RLHF formulation cited by the paper)

With GRPO the model learns that “more thinking” can pay off, and the paper shows response length rising during training along with accuracy, which is the desired inference-time scaling behavior. So the fine point is, PPO’s value-model plus token-wise KL setup couples reward to length and destabilizes long-horizon credit, while GRPO scores complete answers and uses a global KL, letting longer reasoning improve outcomes.

🧭 Google Chrome is getting its biggest AI upgrade.

Google is pushing out the "biggest upgrade to Chrome in its history" and stuffing it full of AI.

Agentic actions are coming next, so the browser can book tasks on websites for you while staying interruptible and under your control. Gemini shows up on desktop for Mac and Windows in the U.S. in English and it is coming to mobile, with Workspace rollout for businesses.

Multi-tab context will let Gemini compare and summarize across sites. e.g. turn travel tabs into a single itinerary. A memory feature will let you ask for pages you visited before, like “the walnut desk site last week,” instead of digging through history.

Deeper hooks into Calendar, YouTube, and Maps mean you can schedule, jump to a video moment, or pull location details without changing tabs. Search’s AI Mode sits right in the omnibox so you can ask longer, layered questions and carry the chat forward.

You can also ask questions about the page you are on and get an AI Overview beside it, with suggested follow-ups. For security, Chrome is now using Gemini Nano, inside its Enhanced Protection system.

It warns you about dangerous websites or downloads, spots more sophisticated scams that try to trick people. So now Chrome can detect things like fake tech-support popups, fake virus warnings, and fake prize giveaways and stop them before you get tricked.

Basically, it acts as an on-device scam filter inside Enhanced Protection. Chrome now mutes spammy site notifications and learns your permission preferences, which has already cut ~3B unwanted notifications per day on Android.

A new password agent lets you change compromised passwords in 1 click on supported sites like Coursera, Spotify, Duolingo, and H&M. Google plans to bring AI Mode, its chatbot-like search option, into Chrome’s Omnibox before September is over.

With it, users get a dedicated button and keyboard shortcut that call on Gemini to propose prompts from the content of a page. It’s an optional feature, so typing in a normal search query remains unchanged. Even so, generative AI will still be hard to miss, since AI Overviews usually appear first in search results.

Chrome plans to add agentic features to the browser over the next several months.

This basically means that a user could ask Gemini to complete a web-based task, like adding items to an Instacart order. Then, the generative AI tool will run in the background, attempt to choose groceries by clicking around, and then show you the results before you make the final purchasing decision. Aspects of this are similar to what Google has previously demoed with its Project Mariner experiment.

The AI browser battle is heating up fast.

The Browser Company transitioned from its Arc browser to Dia this June, adding a suite of new AI features.

Perplexity entered the space with Comet, which bakes its generative AI search into the browsing experience.

Reports hint that OpenAI may release its own competitor as well.

And Google’s Gemini app has already taken the top spot in iOS free downloads, surpassing ChatGPT, thanks to the buzz around its Nano Banana image generator.

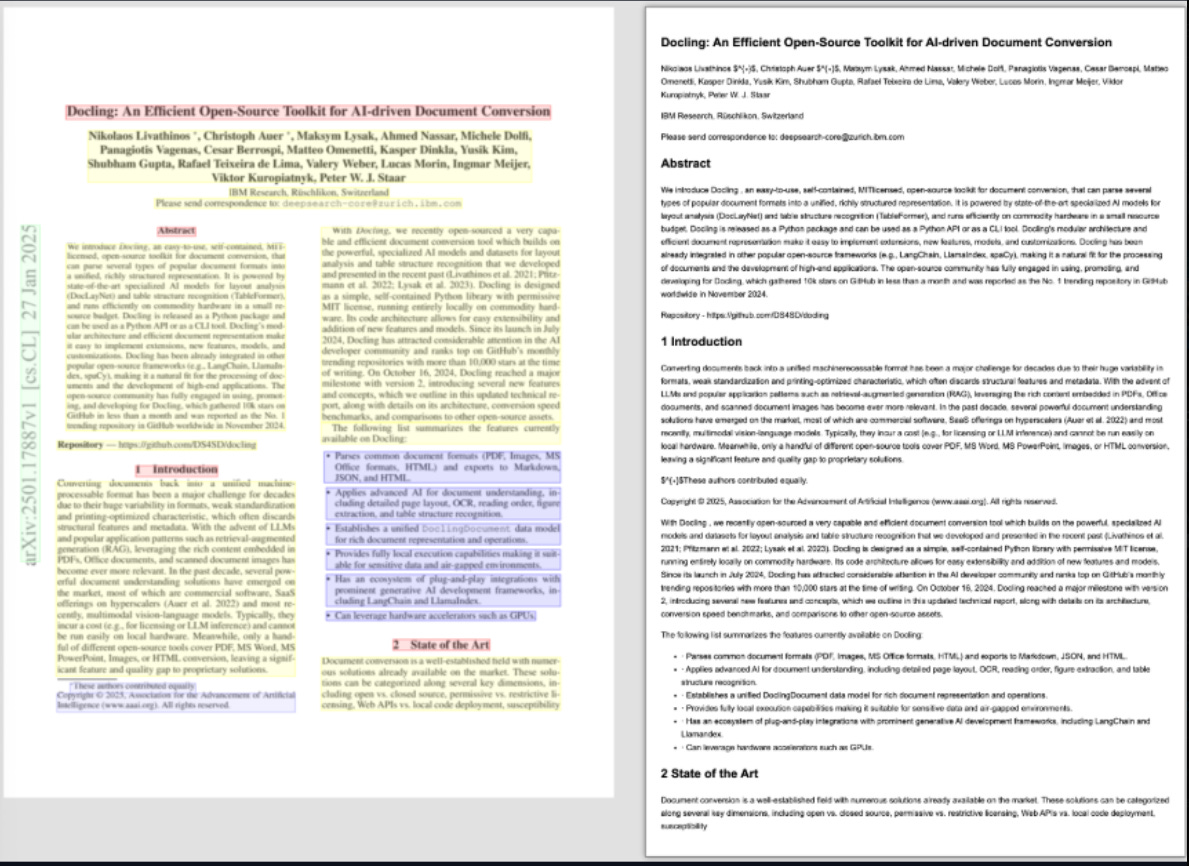

🖇️ IBM just released a tiny document VLM, Granite-Docling-258M.

converts PDFs into structured text formats like HTML or Markdown while preserving layout

accurately recognizes and format equations including inline math, handle tables, code blocks, and charts, support full-page or region-specific inference

answer questions about a document’s structure such as section order or element presence, and even process content in English plus experimental Chinese, Japanese, and Arabic.

Ships under Apache 2.0, and plugs straight into the Docling toolchain. It is a 258M parameter image+text to text model that outputs Docling’s structured markup so downstream tools can keep tables, code blocks, reading order, captions, and equations intact, rather than dumping lossy plain text, and IBM positions this as end-to-end document understanding, not a general vision system.

Under the hood it takes the IDEFICS3 recipe and swaps in siglip2-base-patch16-512 as the vision encoder plus a Granite 165M language model, connected by the same pixel-shuffle projector used in IDEFICS3, which explains how it stays small but layout aware. The main key capability is layout-faithful conversion with enhanced equation and inline math recognition. For usage it is already wired into Docling CLI and SDK, so you can run full-page or bbox-guided inference and export HTML, Markdown, or split-page HTML with layout overlays right from the terminal.

That’s a wrap for today, see you all tomorrow.