🐋 DeepSeek shares best paper award at top global AI research conference

DeepSeek’s ACL-winning long-context paper, Manus’s 100-agent Wide Research, DeepMind’s planet-scale mapper, Amodei’s no-wall scaling pledge, and a warning that the AI arms race burns hot.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (31-July-2025):

🐋 DeepSeek just won the best paper award at ACL 2025 with a breakthrough innovation in long context

🤖 Manus just fired up Wide Research, a feature that spins more than 100 cloud-hosted agents in parallel to finish big research or design jobs within minutes.

🚩 Google DeepMind’s new AI is claiming full planet-mapping powers—with a level of accuracy that sets a new bar.

🗞️ Byte-Size Briefs:

Anthropic CEO Dario Amodei just gave THE MOST accelerated talk on how scaling will continue to make models exponentially more powerful for a long time to come — and that there is "NO WALL."

🧑🎓 OPINION: Chasing AI dominance worldwide is beginning to feel like playing with fire.

🐋 DeepSeek just won the best paper award at ACL 2025 with a breakthrough innovation in long context

A research paper from DeepSeek was honoured with the best paper award at the Association for Computational Linguistics (ACL) conference in Vienna, Austria, widely recognised as the premier global conference for natural language processing research.

The paper is titled “Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention”. It shows an 11.6X decode speedup on 64K-token inputs while scoring higher than dense attention on most benchmarks.

To give a sense of how MASSIVE DeepSeek R1 was: the model shot to the top of the App Store on 27 Jan 2025, and by the closing bell the next day Wall Street looked like it had been punched. Nvidia surrendered $593B in market cap, the biggest one-day hit in US history, while the Nasdaq slumped 3.1%, the Philadelphia Semiconductor Index slid 9.2%, and chip giants from Broadcom to TSMC tumbled. By the time traders went home, the Magnificent 7 and their suppliers had seen roughly $1T in paper value vaporize.

That overnight rout proved how a single algorithm can yank trillions from indexes and shake strategic confidence all at once. The slide knocked almost $100B off the personal fortunes of tech billionaires, spooked analysts who had banked on hundreds of billions in future AI capex, and even pushed Donald Trump to label DeepSeek a “wakeup call”. All this upheaval came from code that DeepSeek says it trained for under $6M on repurposed H800 GPUs, a reminder that one clever architecture tweak can jolt both the geopolitical chessboard and every 401(k) in America in less than 24 hours.

🔍 Why full attention hurts

Traditional full attention mechanisms, the backbone of transformer models, operate with quadratic complexity (O(n²)) in sequence length, making them costly for sequences beyond a few thousand tokens. The cost grows quadratically, not linearly: doubling the context roughly quadruples the attention work, which creates challenges in real-world deployment.
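To put that growth in numbers, here is a minimal sketch that counts the query-key pairs a dense causal attention layer has to score. The function name is mine, and it counts only score computations for a single head, ignoring constants and everything else in the model.

```python
def full_attention_pairs(n_tokens: int) -> int:
    """Query-key pairs scored by dense causal attention over n_tokens."""
    # Token i attends to itself and every earlier token: 1 + 2 + ... + n.
    return n_tokens * (n_tokens + 1) // 2


for n in (4_096, 65_536):
    print(f"{n:>6} tokens -> {full_attention_pairs(n):,} pairs")

# 16x more tokens costs roughly 16 squared times more attention work.
ratio = full_attention_pairs(65_536) / full_attention_pairs(4_096)
print(f"cost ratio: {ratio:.0f}x")
```

Going from a 4K to a 64K context multiplies the token count by 16 but the attention work by roughly 16 squared.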

Sparse attention mechanisms offer a promising alternative, selectively attending to only the most relevant tokens. The newly introduced Native Sparse Attention (NSA) by DeepSeek AI represents a groundbreaking advancement in this domain.

🧩 NSA’s (Native Sparse Attention) three-lane shortcut

NSA keeps one query talking to far fewer keys by mixing three views of the past:

Compressed chunks catch the global gist: every 32 tokens collapse into a learned summary.

A top-n picker keeps the 16 most relevant 64-token blocks alive, giving sharp detail only where it matters.

A rolling window of the last 512 tokens guards local fluency.

A small MLP gate blends these paths so the model can decide, token by token, how much weight each view deserves.
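The three lanes and the gate can be sketched for a single query in NumPy. This is a toy illustration under loud assumptions, not DeepSeek's implementation: mean pooling stands in for the learned compression, block scores come from pooled keys rather than the paper's scoring, and `gate_logits` is a hypothetical stand-in for the output of the small gating MLP. It also assumes the context is at least one block long.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()


def attend(q, K, V):
    """Plain scaled dot-product attention for one query vector."""
    return softmax(K @ q / np.sqrt(q.size)) @ V


def nsa_decode_step(q, K, V, gate_logits,
                    cmp_block=32, sel_block=64, top_n=16, window=512):
    """Toy single-query, single-head version of the three branches."""
    t, d = K.shape

    # 1) Compression: one summary per 32-token block (mean pooling is an
    #    assumption here; the real summaries are learned).
    n_cmp = t // cmp_block
    K_cmp = K[: n_cmp * cmp_block].reshape(n_cmp, cmp_block, d).mean(axis=1)
    V_cmp = V[: n_cmp * cmp_block].reshape(n_cmp, cmp_block, d).mean(axis=1)
    out_cmp = attend(q, K_cmp, V_cmp)

    # 2) Selection: score 64-token blocks, keep the top_n, read their raw tokens.
    n_sel = t // sel_block
    K_blk = K[: n_sel * sel_block].reshape(n_sel, sel_block, d)
    block_scores = K_blk.mean(axis=1) @ q
    keep = np.sort(np.argsort(block_scores)[-top_n:])
    idx = (keep[:, None] * sel_block + np.arange(sel_block)).ravel()
    out_sel = attend(q, K[idx], V[idx])

    # 3) Sliding window: the most recent tokens, for local fluency.
    out_win = attend(q, K[-window:], V[-window:])

    # Gate: one sigmoid weight per branch blends the three outputs.
    g = 1.0 / (1.0 + np.exp(-np.asarray(gate_logits, dtype=float)))
    out = g[0] * out_cmp + g[1] * out_sel + g[2] * out_win
    keys_read = n_cmp + idx.size + min(window, t)
    return out, keys_read


rng = np.random.default_rng(0)
t, d = 4096, 64
K, V = rng.normal(size=(t, d)), rng.normal(size=(t, d))
q = rng.normal(size=d)
out, keys_read = nsa_decode_step(q, K, V, gate_logits=np.zeros(3))
print(out.shape, keys_read, "keys read out of", t)
```

Even in this toy setup the query touches well under half of the 4,096 cached keys, and the gap widens as the context grows because the selected blocks and the window stay fixed in size.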

⚙️ Hardware-friendly from day one

The team wrote Triton kernels that feed whole GQA groups into shared SRAM blocks, trimming redundant loads. Continuous blocks match tensor-core shapes, so the GPU stays busy instead of chasing random indices. That choice alone lifts arithmetic intensity enough to eclipse FlashAttention-2 for long contexts.

📈 What the numbers say

General suite: NSA beats dense on 7 / 9 tasks and posts a higher blended score even though it reads fewer keys.

LongBench: average rises from 0.437 to 0.469, with big gains on multi-hop QA (+0.087 HPQ) and code comprehension (+0.069 LCC).

Reasoning fine-tune: on the AIME-24 set, accuracy climbs from 0.092 to 0.146 when the generation budget grows to 16K tokens.

Speed: at 64K tokens the forward pass is 9X faster and back-prop is 6X faster, while each decode step loads data equal to roughly 1 / 11 of dense attention’s demand.
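The "roughly 1 / 11" figure can be reproduced with back-of-the-envelope arithmetic. One assumption to flag: the sketch below slides the 32-token compression block with a stride of 16, which is how I read the paper's setup but is not stated in this newsletter. With non-overlapping 32-token blocks the ratio would come out higher, near 18x.

```python
def keys_loaded_per_decode_step(context_len, cmp_stride=16,
                                sel_block=64, top_n=16, window=512):
    """Approximate key/value tokens one decode step touches under NSA."""
    compressed = context_len // cmp_stride  # one summary per stride position (assumed)
    selected = top_n * sel_block            # 16 blocks of 64 raw tokens
    return compressed + selected + window   # plus the 512-token sliding window


ctx = 64 * 1024
sparse = keys_loaded_per_decode_step(ctx)
print(f"dense loads {ctx} tokens, sparse loads {sparse}")
print(f"reduction: {ctx / sparse:.1f}x")
```

Decoding is memory-bound, so a cut in tokens loaded translates almost directly into the decode speedup reported above.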

Overall, what's so special about this paper?

Hardware-first thinking

DeepSeek’s team chased arithmetic intensity, the compute-to-memory ratio that decides whether the GPU waits on data or keeps its tensor cores humming. They grouped queries that already share key blocks, then fetched those blocks once into on-chip memory. This group-centric load pattern slashes redundant transfers and balances work across streaming multiprocessors, giving near-optimal throughput even at 64K tokens.
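A rough calculation shows why fetching a key block once per group matters. The head dimension of 128, 2-byte values, and the group sizes below are illustrative assumptions of mine, not figures from the paper.

```python
def decode_flops_per_byte(heads_sharing_kv: int, head_dim: int = 128,
                          bytes_per_value: int = 2) -> float:
    """FLOPs performed per byte of K/V fetched for one cached token."""
    # One key vector and one value vector are fetched once for the whole group.
    bytes_loaded = 2 * head_dim * bytes_per_value
    # Each query head does a dot product with K and a weighted add of V,
    # about 2 * head_dim FLOPs each (multiply plus add).
    flops = heads_sharing_kv * 2 * (2 * head_dim)
    return flops / bytes_loaded


for g in (1, 4, 16):
    print(f"{g:>2} heads per KV fetch -> {decode_flops_per_byte(g):.1f} FLOPs/byte")
```

Under these assumptions arithmetic intensity scales linearly with the number of query heads that reuse each fetched block, which is the effect the group-centric load pattern is after.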

🔁 By turning sparsity into a learnable, hardware-aware routine, NSA manages to cut memory traffic

Traditional sparse attention starts by hard-coding a mask. Picture a big table where every query token checks some keys and skips the rest. Each cell in that table is frozen to 1 (keep) or 0 (drop) before training even begins. Because those choices are locked, gradients never reach them, so the model has no way to rethink which distant words matter. Engineers usually prune or patch the pattern after pre-training, but that late surgery often hurts accuracy and forces custom kernels that GPUs handle poorly.

Native Sparse Attention (NSA) replaces that frozen mask with trainable filters built from ordinary neural layers. It squeezes every 32-token block into a short summary, scores those summaries, keeps the top 16 blocks, and mixes them with a rolling 512-token window for local detail. All of that scoring, picking, and blending is continuous, so gradients flow through the entire path while the network learns language. The model therefore decides on the fly what to read closely and what to skim.

Key differences between Traditional sparse attention and NSA

Dynamic vs. static selection

Traditional: a query either always sees a key or never does.

NSA: the keep-or-skip score is a real number that shifts during training, then snaps to a sparse pattern only during the actual matrix multiply.

End-to-end gradients

Traditional: masks block gradients, so long-range paths stay fixed.

NSA: every choice is differentiable, letting the network refine its own reading strategy.

Hierarchy, not one pattern

Traditional: one sparse layout tries to serve both local grammar and far-away context.

NSA: three branches share the load: global summaries for gist, top-n blocks for specific links, and a short window for fluency.

Hardware harmony

Traditional: irregular masks scatter memory reads, starving tensor cores.

NSA: equal-sized blocks line up with GPU tile shapes, so key blocks load once per group and stay in fast on-chip memory.

No pruning stage

Traditional: dense pre-train, prune, then fine-tune, risking forgotten knowledge.

NSA: sparse from the first batch, saving compute and sidestepping quality loss.
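The static-versus-dynamic contrast is easy to see in a few lines. This sketch only illustrates fixed versus content-dependent block selection; it does not model training or gradients, and the strided pattern and function names are hypothetical examples.

```python
import numpy as np


def static_block_mask(n_blocks, stride=4):
    """Fixed pattern chosen before training: keep every `stride`-th block."""
    return np.arange(n_blocks) % stride == 0


def dynamic_block_mask(q, block_keys, top_n):
    """Content-dependent pattern: keep the top_n blocks that score highest for q."""
    scores = block_keys @ q
    mask = np.zeros(len(block_keys), dtype=bool)
    mask[np.argsort(scores)[-top_n:]] = True
    return mask


rng = np.random.default_rng(0)
block_keys = rng.normal(size=(16, 8))  # one summary vector per block
q1, q2 = rng.normal(size=8), rng.normal(size=8)

print(static_block_mask(16).astype(int))                  # same for every query
print(dynamic_block_mask(q1, block_keys, 4).astype(int))  # depends on q1
print(dynamic_block_mask(q2, block_keys, 4).astype(int))  # depends on q2
```

The static mask never changes, while the dynamic one is recomputed from scores for each query. Because those scores come from trainable layers, the pattern can keep shifting as the model learns.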

🪢 Three branches, no cross-talk

A dedicated sliding window handles the last 512 tokens, freeing the compression and top-n branches to chase long-range cues without being hijacked by local syntax. Separate key-value sets and a tiny gate keep gradients from colliding, which stabilizes training on sequences the length of a novella.

⚙️ Plays nicely with modern backbones

The kernels assume Grouped-Query Attention and a Mixture-of-Experts stack, the same recipe used by many recent 27B models. Because all heads in a group re-use the same sparse key blocks, the Triton code remains short and portable while still filling the L2 cache.

📈 Speed that shows up in every phase

On an A100, the full forward pass runs 9× faster, back-prop jumps 6×, and step-wise decoding peaks at 11.6× for 64K tokens. Unlike most sparse systems, the gain is visible during training, inference and generation, so total compute dollars drop instead of just wall-clock latency.

🤖 Manus just fired up Wide Research, a feature that spins more than 100 cloud-hosted agents in parallel to finish big research or design jobs within minutes.

Manus just launched the Wide Research feature, ushering in a new era of parallel processing with multiple agents.

The swarm lives inside separate virtual machines, so users only write one prompt and receive ready-to-use spreadsheets, webpages, or ZIP files. It lands first in the $199/month Pro plan and will trickle down to cheaper tiers soon.

Manus built its platform around personal virtual machines that start up on demand.

Each machine runs a full Manus agent, meaning it can search, reason, and produce output without sharing memory with siblings. Wide Research simply clones that agent as many times as needed, so the system can hammer away at sub-tasks in parallel instead of queueing them.

Because every clone is identical, Manus skips the old manager-worker templates that force agents into fixed roles. That choice keeps things flexible and lets the swarm tackle any mix of research, data crunching, or creative work.
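The fan-out pattern described here can be sketched with Python's standard library. This is an illustration only: Manus runs each agent in its own virtual machine, while this toy uses threads, and `run_agent` is a hypothetical stand-in, not a Manus API.

```python
from concurrent.futures import ThreadPoolExecutor


def run_agent(subtask: str) -> dict:
    """Hypothetical stand-in for one general-purpose agent working a sub-task."""
    # A real agent would search, reason, and write output here.
    return {"item": subtask, "summary": f"findings for {subtask}"}


def wide_research(subtasks, max_agents=100):
    """Run the same agent function on every sub-task in parallel."""
    workers = max(1, min(max_agents, len(subtasks)))
    # Every worker runs identical code: clones, not manager and worker roles.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_agent, subtasks))  # results keep input order


rows = wide_research([f"sneaker-{i}" for i in range(100)])
print(len(rows), rows[0])
```

Since every worker is the same function, changing the job means changing the sub-task list rather than redesigning roles.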

A launch demo compared 100 sneaker models. The swarm scraped price, design notes, and stock status, then handed back a sortable matrix and a clean webpage in under 5 minutes.

In another run the system generated 50 poster styles at once and zipped the files for download. Speed comes from spreading the load, yet Manus gives no hard numbers against single-agent methods, so the true efficiency gains remain unmeasured.

Heavy parallelism costs extra compute and can fail if clones trip over the same site protections or exhaust rate limits.

Developers on other multi-agent stacks report token bloat and slowdowns when subagents fight for resources, problems Manus still has to prove it solved.

Pricing follows the existing ladder. Free users keep 300 daily credits and one running task, while Pro buyers get 19,900 credits, 10 concurrent tasks, plus early betas like Wide Research. Annual payment cuts the bill by 17%, and Manus even throws in a T-shirt for the Pro crowd.

Under the hood, the company frames Wide Research as a first test of an agent cloud that could someday scale to hundreds or thousands of threads without manual setup.

If the approach holds up, Manus might show that general-purpose clones working side by side beat tightly scripted role systems on speed and variety.

🚩 Google DeepMind’s new AI is claiming full planet-mapping powers—with a level of accuracy that sets a new bar.

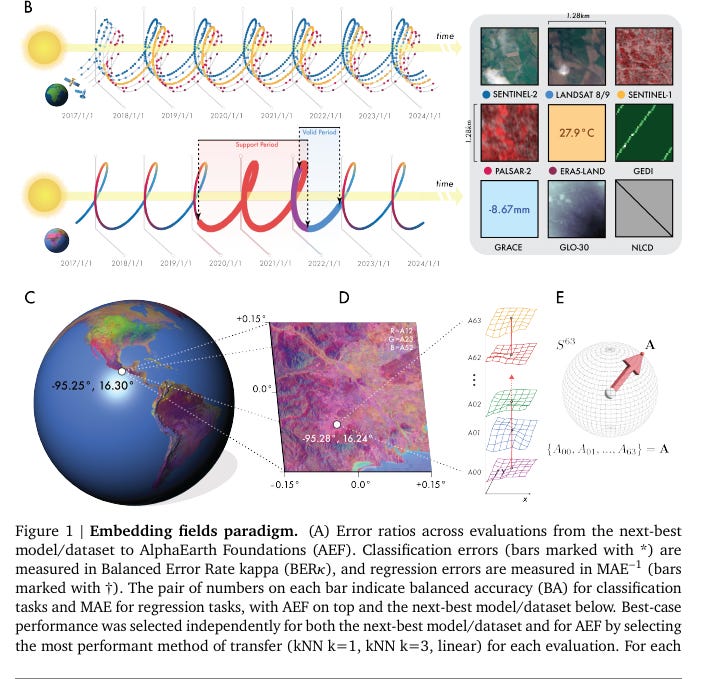

Google DeepMind has announced AlphaEarth Foundations, a new AI model that analyzes vast amounts of Earth observation data and turns it into high-quality maps.

AlphaEarth Foundations squeezes petabytes of mixed satellite data into 64-byte summaries for every 10×10 m patch, trimming mapping errors by 24%.

💾 Tiny vectors, huge reach

The picture sums up how AlphaEarth Foundations turns a messy stream of satellite data into a tidy “embedding field”.

The zig-zag orbits at the top mark the raw observation timeline while the bold curves underneath highlight AlphaEarth’s trick: it calls earlier measurements the support period and learns from them, then tests itself in the later valid period to be sure it can generalise rather than memorize.

The little grid of thumbnails on the right shows the mix of sensors that feed the model. Optical imagers, radar, temperature tiles and even gravity readings each supply a slice of information that normally sits in separate silos.

Panels C and D zoom from the whole globe down to a single 10 × 10 m square. Every tile gets compressed into a 64-value vector, so the planet becomes a sea of compact numbers instead of petabytes of pixels.

Panel E sketches that vector living on a high-dimensional sphere. Treating the code this way lets simple nearest-neighbour look-ups beat heavyweight models, which is why the caption reports lower error scores for both classification and regression tasks.
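Here is a minimal sketch of what a nearest-neighbour look-up on unit-length 64-value vectors looks like. The data is synthetic and the land-cover labels are made up for illustration; nothing here reads the actual Satellite Embedding dataset.

```python
import numpy as np


def unit(x):
    """Scale vectors to length 1 so they sit on the unit sphere."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def knn_classify(query, embeddings, labels, k=5):
    """Majority vote among the k labelled embeddings most similar to the query."""
    sims = embeddings @ query  # dot product equals cosine similarity on unit vectors
    nearest = np.argsort(sims)[-k:]
    values, counts = np.unique(labels[nearest], return_counts=True)
    return values[np.argmax(counts)]


rng = np.random.default_rng(0)
centers = unit(rng.normal(size=(2, 64)))  # two synthetic land-cover types
labels = np.repeat(np.array(["forest", "cropland"]), 50)
embeddings = unit(np.repeat(centers, 50, axis=0) + 0.05 * rng.normal(size=(100, 64)))

query = unit(centers[1] + 0.05 * rng.normal(size=64))  # a new "cropland" tile
print(knn_classify(query, embeddings, labels))
```

With a handful of labelled tiles and a dot product, there is no model to train, which is why compact embeddings help most when labels are scarce.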

📊 Benchmarks across land-use and surface-property tasks show an average 24% lower error than traditional or other AI approaches, especially when labels are scarce. Google Earth Engine now hosts an annual Satellite Embedding dataset with 1.4 trillion footprints per year, and more than 50 groups have tested it. Projects range from classifying unmapped shrublands to tracking Amazonian farming shifts.

🌍 Why the innovation really matters

Remote-sensing science often stalls at data wrangling, not modeling. By delivering an off-the-shelf embedding that is small, accurate and sensor-agnostic, AlphaEarth turns Earth observation into a plug-and-play exercise.

Analysts can now focus on the question—like how fast a river delta is shifting—rather than on downloading terabytes or aligning different satellite calendars. The result is faster insight for conservation, climate monitoring and land-use planning.

🗞️ Byte-Size Briefs

Anthropic CEO Dario Amodei just gave THE MOST accelerated talk on how scaling will continue to make models exponentially more powerful for a long time to come — and that there is "NO WALL." 🔥

Dario Amodei emphasizes that AI progress is continuing at an exponential pace, driven by increased compute, more data, and advances in training techniques. Initially, models improved through large-scale pre-training, but now a second stage involving reinforcement learning and reasoning is also scaling. Both stages are progressing in tandem, and he sees no clear barrier to continued scaling.

He notes especially strong recent improvements in math and code tasks, and expects current gaps in more subjective areas to be temporary. He stresses that people often underestimate exponential growth because its early stages appear modest. But once the curve steepens, progress and impact become extremely rapid.

He illustrates this with Anthropic’s revenue: from $0 to $100 million in 2023, then $1 billion in 2024, and already over $4.5 billion by mid-2025. While he doesn't assume this growth will keep up forever, he highlights how easy it is to be misled about the pace and impact of exponential trends. He compares this to the internet’s growth in the 1990s, when a few years of infrastructure improvements suddenly enabled global digital communication. He suggests a similar dynamic is unfolding now with AI.

🕵️♀️ A post went viral on how lots of people are accidentally making their ChatGPT chats public, and some of those chats are getting listed on Google. I did a test myself (screenshot below), and confirmed that shared chats can be found through search.

Basically, if you hit the "Share" button—say, to show a friend or use it in a presentation—the conversation becomes a public link. That link can end up searchable.

In the test, no usernames showed up (just “anonymous”), but most users probably don’t realize these shared chats could be indexed. So they might share very personal stuff, not knowing it could appear in search results.

If you're using ChatGPT or any AI chatbot, here are some recommendations to follow:

Avoid the “Share” button when possible—shared chats might get indexed

Don’t include personal or private information in your conversations

Turn off memory, so less of your data gets saved or used across chats

Disable training and anonymize the chat to reduce data exposure

Review your privacy settings and adjust anything that could limit data collection.

🧑🎓 OPINION: Chasing AI dominance worldwide is beginning to feel like playing with fire.

The U.S. keeps pouring money into bigger models and bigger data‑center clusters, while China publicly talks almost only about day‑to‑day AI applications. That gap looks harmless until you remember how both countries react when a rival suddenly rolls out a strategic capability. Add Sam Altman telling Donald Trump that human‑level AGI could show up on his watch, China’s opaque decision‑making, and fresh export‑chip fights, and you get an AI race where either side could misread the other and push the world toward open conflict.

🚦 Scene‑setter: Same Race, Different Tracks

Washington is betting on raw model IQ, so Sam Altman pitched Trump on reaching human‑level AGI during the next 4 years, arguing that winning that milestone decides who writes the global rulebook. Beijing, at least in public, keeps saying, “Show me the factory robot schedule or the city traffic dashboard” rather than talking about consciousness in silicon.

🗽 America’s “Build It Bigger” Play

OpenAI, Oracle, and SoftBank have lined up the $500B Stargate program to drop multi‑gigawatt data centers across the U.S. and Europe. Trump’s new AI Action Plan promises faster permits, looser rules, and direct funding so these plants come online before 2029. Meanwhile, export controls keep tightening: the October 2024 rules block top‑shelf GPUs and even cloud rentals for any Chinese user the Commerce Department cannot vet.

🐲 China Says “Applications First,” but Is That the Whole Story?

Xi keeps telling ministries to aim at “real‑economy scenarios,” which is why you see AI rolled out in logistics, municipal services, and factory automation instead of demos of 2T‑parameter chatbots. Still, Chinese firms such as DeepSeek, Zhipu, and Stepfun openly talk about building AGI, and some have already shipped models that undercut U.S. offerings on price.

🔬 Inside the Private Labs

DeepSeek’s R1 stunned local users by beating OpenAI o1 on multiple reasoning tests while costing 20‑50x less per call. Its next‑gen R2 stalled, though, because Nvidia’s H20 shipments froze under U.S. rules, exposing just how fragile China’s compute supply really is.

⚔️ Why Tech Surprise Keeps Generals Up at Night

Given how tight-lipped the CCP usually is, it might already be knee-deep in AGI and just not talking about it. Keeping things quiet is kind of its thing.

The Pentagon’s annual report says China likely passed 600 operational nuclear warheads in mid‑2024 but still downplays that build‑up in public briefings. If Beijing is hiding an AGI effort the same way, Washington could wake up to a system that can write better cyber exploits or war‑game campaigns faster than humans. Flip the scenario and imagine American labs cracking AGI first while Chinese planners assume it is still years away, and you see why both sides might overreact the moment a breakthrough leaks.

📝 Key takeaways

Neither country trusts open disclosure when a technology shifts the balance of power, and AGI would shift it faster than anything since nuclear weapons. Export controls slow China’s climb but also feed paranoia by obscuring real capabilities. China’s application‑heavy talk may be sincere or may be cover for an internal AGI sprint.

That’s a wrap for today, see you all tomorrow.