"DeltaLLM: Compress LLMs with Low-Rank Deltas between Shared Weights"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2501.18596

The paper addresses the challenge of large memory footprint in Large Language Models (LLMs), hindering their deployment on devices with limited resources. The paper introduces a novel compression technique to reduce LLM size while maintaining performance.

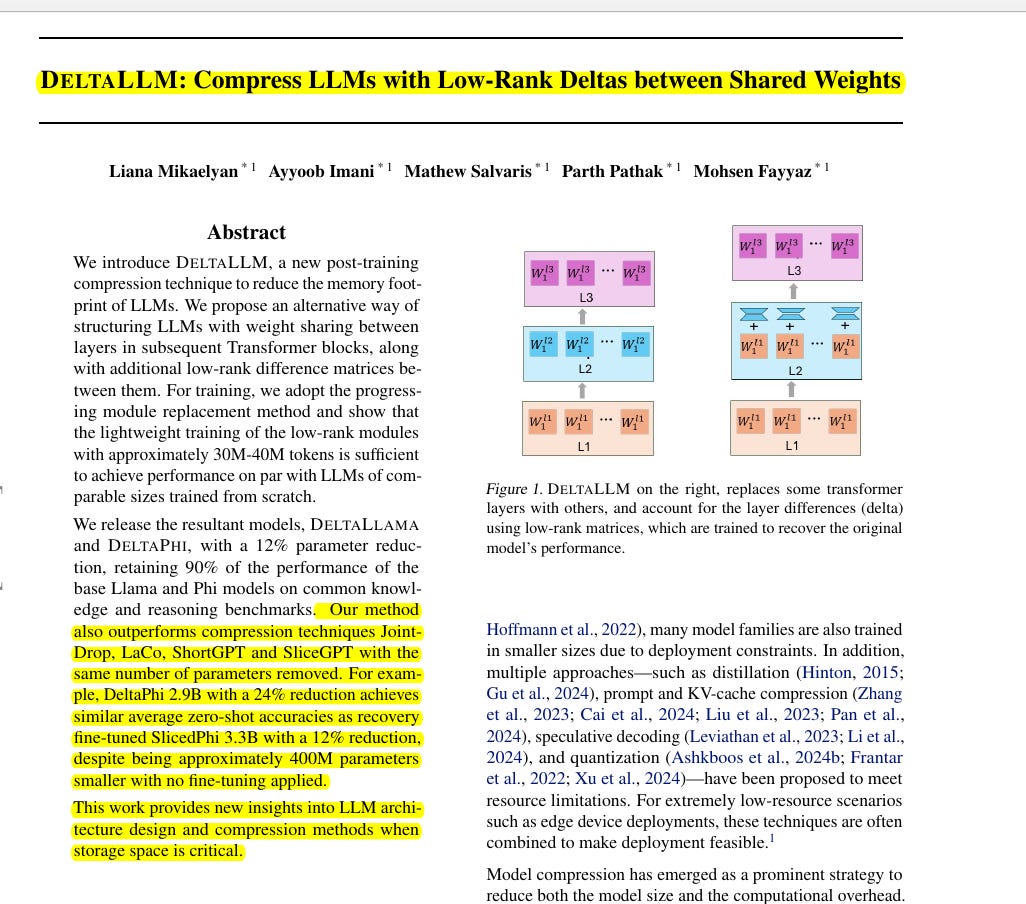

This paper proposes \genericmethodname, a post-training compression method. It shares weights between Transformer layers and uses low-rank matrices to capture the differences, reducing model size.

-----

📌 DeltaLLM achieves efficient LLM compression via weight sharing and low-rank deltas. This method reduces parameters significantly while retaining strong benchmark performance with minimal retraining.

📌 Progressive Module Replacement is crucial in DeltaLLM. It ensures stable and rapid training of delta layers, outperforming standard knowledge distillation for decoder LLM compression.

📌 DeltaLLM reveals that MLP layers are more amenable to compression than attention. Compressing MLP weights provides better parameter reduction with less performance degradation.

----------

Methods Explored in this Paper 🔧:

→ The paper introduces \genericmethodname, a compression technique for LLMs.

→ \genericmethodname restructures LLMs by sharing weights across Transformer blocks.

→ Low-rank "delta" matrices are added to account for differences between shared layers.

→ These delta matrices are trained using knowledge distillation, with the original LLM as the teacher.

→ Progressive Module Replacement (PMR) is used during training. PMR progressively replaces original layers with compressed layers based on a schedule to improve convergence.

→ Two training approaches are explored: delta-tuning only and delta-layer tuning with LoRA fine-tuning. The paper focuses on delta-tuning only where only the delta layers are trained, while base model weights are fixed.

→ The method is applied to compress Phi-3.5 and Llama-3.2 models, creating \phicompressedname and \llamacompressedname models.

-----

Key Insights 💡:

→ Weight sharing between Transformer layers, combined with low-rank deltas, effectively compresses LLMs.

→ Training only the low-rank delta matrices is sufficient to recover most of the original model's performance.

→ Progressive Module Replacement accelerates training convergence compared to standard distillation.

→ Compressing MLP layers within Transformer blocks is more effective than compressing attention layers for this method.

→ \genericmethodname compressed models outperform other compression techniques like JointDrop, SliceGPT, ShortGPT, and LaCo with the same parameter reduction.

-----

Results 📊:

→ \phicompressedname 2.9B achieves similar zero-shot accuracy as SlicedPhi 3.3B, despite being 400M parameters smaller.

→ \phicompressedname 3.35B outperforms Llama 3.2B and Qwen 3.2B on MMLU, WinoGrande, and ARC-Challenge benchmarks.

→ \phicompressedname 3.35B achieves a perplexity of 3.34 on the Alpaca dataset, outperforming Phi 3.5's 2.96, suggesting improved performance post-compression in some metrics.

→ \phicompressedname 2.9B model's delta-layers occupy only 90MB of storage.