Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

🧑🎓 Deep Dive Tutorial: Deploying Grok-3 on Microsoft Azure

Grok-3 landed in the Azure AI Foundry catalog in May 2025, so you can call the model without renting GPUs (devblogs.microsoft.com). Grok-3 handles 131,072 tokens in one request, which makes it great for long chats or document analysis. The model is also turning up on other clouds like Oracle, so cross-provider parity is becoming normal (oracle.com). And next week Grok-4 will most probably be released.

Azure adds built-in content safety and monitoring on top of the raw model (learn.microsoft.com).

🛠️ Prerequisites

Active Azure subscription and Azure CLI installed

Rights to create cognitive resources

Pick a region, for example eastus2

🚀 Step by Step

Set environment variables

🧩 2. Pick the subscription

az account set --subscription "${AZURE_SUBSCRIPTION_ID}"

This tells every later az command which subscription to bill.

🧩 3. Create a tidy resource group

az group create \\

\--name "${AZURE_RESOURCE_GROUP}" \\

\--location "${AZURE_REGION}" \\

\--subscription "${AZURE_SUBSCRIPTION_ID}"

Keeping Grok-3 in its own group helps when you need to track spend or tear it down.

A resource group is a container inside your Azure subscription. Every service you create, from a virtual network to the Grok-3 cognitive-services account, must live in one resource group, so Azure always asks for it when you run az group create or any later deployment command learn.microsoft.com.

Putting related pieces in the same group keeps life simple. You can roll out, update, lock, tag, or delete everything in that container with one action, instead of touching each item one by one. It also gives you a natural scope for role-based access control and budget checks, because cost and policy reports can be filtered by group.

If your Grok-3 project includes the AI account, a storage account for logs, and an insights workspace, dropping them all in one resource group lets you track spend, apply the same tags, and tear the stack down with a single delete when the demo ends. Azure will not let a resource sit outside a group, so even a tiny test still needs one, though you can keep several deployments in different groups under the same subscription for tidy billing and cleaner permissions.

🧩 4. Spin up the multi-service AI account

az cognitiveservices account create \\

\--name "${AZURE_AI_NAME}" \\

\--resource-group "${AZURE_RESOURCE_GROUP}" \\

\--location "${AZURE_REGION}" \\

\--kind AIServices \\

\--sku S0 \\

\--custom-domain "${AZURE_AI_NAME}" \\

\--subscription "${AZURE_SUBSCRIPTION_ID}"

S0 is the standard paid tier for an Azure AI Services resource. Azure labels it “Standard S0” and it is always pay-as-you-go, so you only get charged for the work the resource actually does instead of a fixed monthly fee learn.microsoft.com.

When you deploy a model into that resource, the CLI flag --sku-name "GlobalStandard" sticks the deployment on Azure’s worldwide pool for that model. This GlobalStandard pool runs on the same pay-per-call pricing that the S0 resource uses, and Microsoft meters each prompt and completion token rather than billing for idle time or hardware hours.

Because the platform owns the GPUs, it can spin capacity up and down behind the scenes. As your traffic grows, Azure routes requests to the next available cluster and you just see a bigger token count on the bill; if you need steadier concurrency you can bump --sku-capacity, but the underlying cost is still tied to tokens processed, not to reserved hardware.

So, “S0 maps to GlobalStandard” means the resource and the deployment both sit in Azure’s standard pay-as-you-go bucket, where billing is token based and scaling is automatic.

🧩 5. Deploy Grok-3

Azure calls the amount of compute you reserve for an AI deployment a capacity unit. Think of it as a small slice of the shared GPU pool that runs your model. When you deploy Grok-3 with --sku-capacity 1, you are asking Azure to keep just enough hardware on standby for light traffic, maybe a handful of requests each second. This keeps early bills low while you are still testing prompts or wiring the endpoint into your app.

If users show up and responses start to lag, you do not rebuild the deployment. You send a single update command that raises the number, for example --sku-capacity 3 or --sku-capacity 10. Behind the scenes Azure adds more replicas of Grok-3, routes traffic to them, and retires nothing until the new copies are healthy. Your endpoint stays online the whole time, so clients never see an error or timeout caused by the scaling event.

Because billing is token based, the extra capacity only matters when you have the traffic to use it. During quiet periods those extra replicas scale back automatically. In short, starting with 1 capacity unit keeps costs down while you experiment, and you can grow to any level later without taking the service offline.

🧩 6. Collect key and endpoint

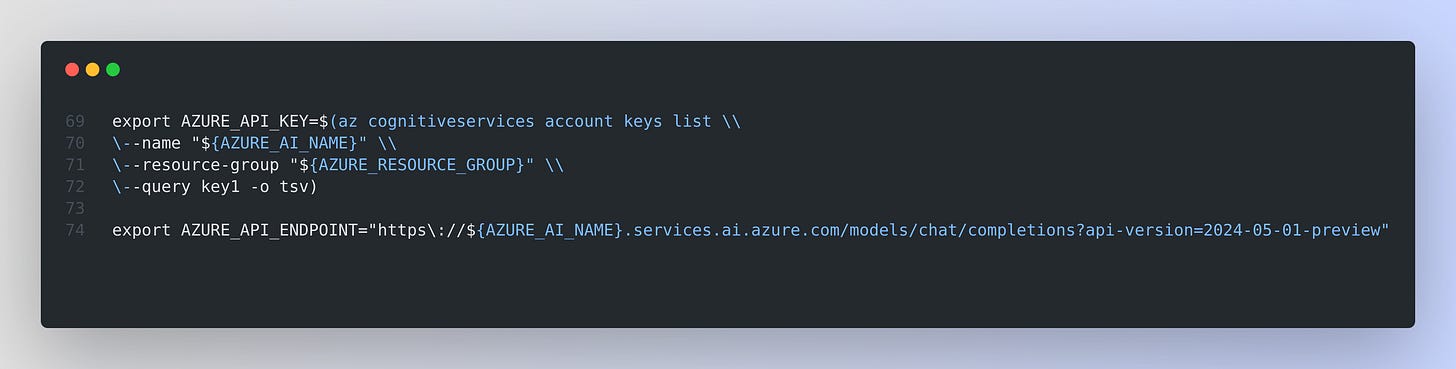

export AZURE_API_KEY=$(az cognitiveservices account keys list \\

\--name "${AZURE_AI_NAME}" \\

\--resource-group "${AZURE_RESOURCE_GROUP}" \\

\--query key1 -o tsv)

export AZURE_API_ENDPOINT="https\://${AZURE_AI_NAME}.services.ai.azure.com/models/chat/completions?api-version=2024-05-01-preview"

AZURE_API_KEY holds the secret string that proves you own the service. Azure checks every incoming request for this key. If the header is missing or wrong, the call fails with 401. Pulling the key once with az cognitiveservices account keys list and storing it in an environment variable lets every later curl, SDK, or script read it without you pasting the value by hand.

🧩 7. Smoke test with curl

curl -X POST "${AZURE_API_ENDPOINT}" \\

-H "Content-Type: application/json" \\

-H "Authorization: Bearer $AZURE_API_KEY" \\

-d '{

"messages":\[{"role":"user","content":"hello"}],

"model":"'"${AZURE_AI_NAME}-${MODEL}"'"

}'

The response JSON echoes usage counts that match OpenAI’s schema.

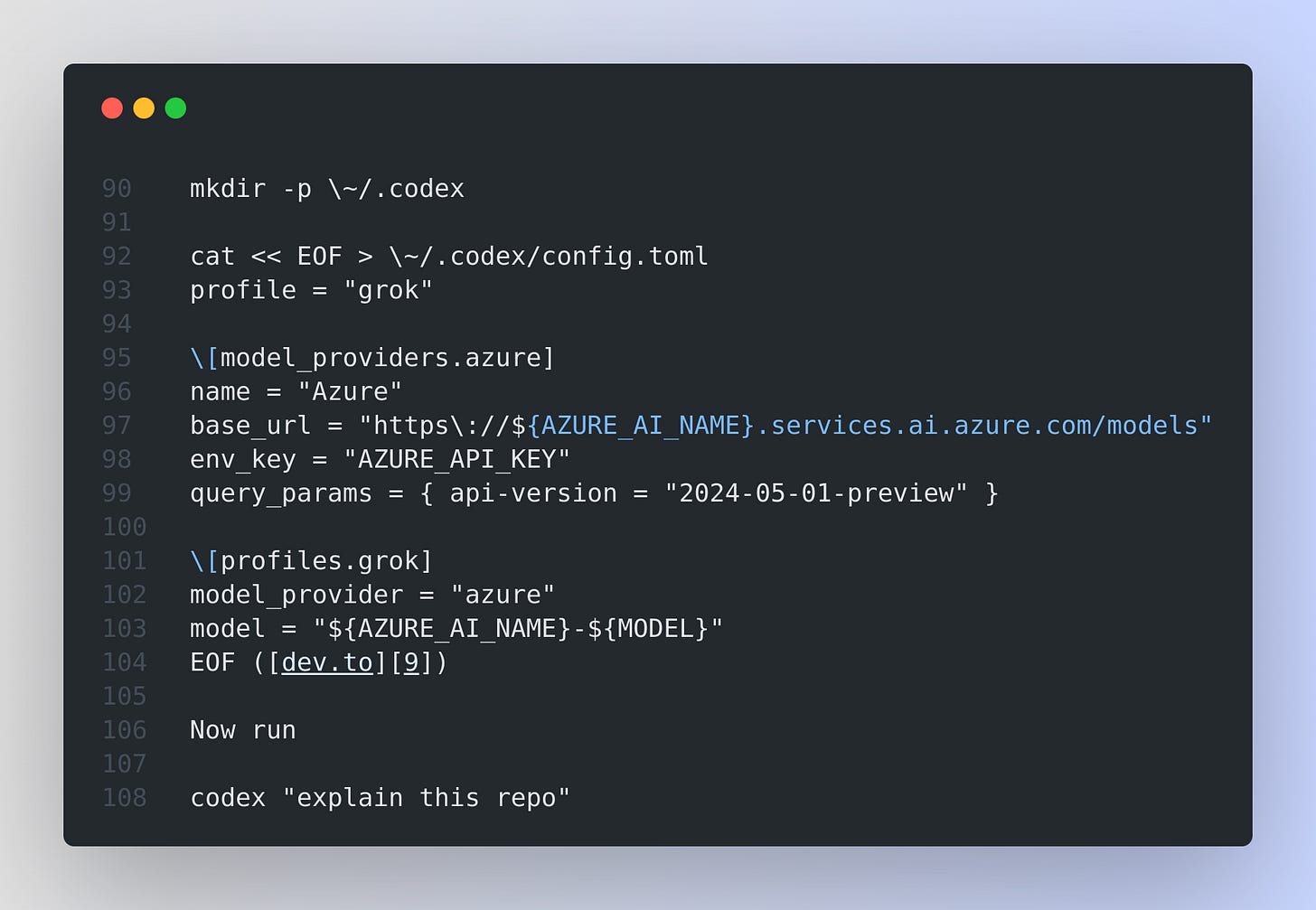

🧩 8. OPTIONAL STEP: Hook up OpenAI’s Codex CLI for quick chats

This step is completely optional and separate from the Azure CLI work. Azure CLI handled resource creation and model deployment. Codex CLI comes into play after the endpoint exists and you want a faster way to chat or automate code tasks without writing curl calls or full SDK scripts each time.

Codex CLI is an open-source command-line tool that OpenAI released so developers can talk to large language models from the terminal and also let the tool read files, write patches, and run shell commands inside their local repo. Unlike the older Codex model, this program is just a client that sends your prompts to whatever model endpoint you point it at, so the code above is not about OpenAI’s hosted Codex model, it is only about wiring the CLI to Grok-3 that you already deployed on Azure.

Codex forwards the prompt to Grok-3 using your key.

The small config file you dropped into ~/.codex sets three essentials. First, base_url tells the CLI to hit your Grok-3 endpoint instead of the default OpenAI cloud. Second, env_key lists the environment variable, AZURE_API_KEY, where the CLI should look for the secret key so you never hard-code credentials. Third, model names the exact deployment id you created earlier, for example groksvc-grok-3, so Codex knows which model to call

When you type codex "explain this repo", the CLI reads AZURE_API_KEY, builds a chat request that follows the same JSON schema you used with curl, and posts it to your Azure endpoint. It then prints the model’s reply and, if you ask, can run commands like tests or git diff, using that answer to guide the next step in the loop.

So the reason for this extra step is convenience: Codex CLI turns Grok-3 into an interactive coding assistant that lives in your terminal, while the Azure CLI work stayed focused on provisioning the service.

🧩 9. Watch metrics and logs

Azure Monitor surfaces request count, latency, and every token you spend under Microsoft.CognitiveServices/accounts.

Azure Monitor attaches a time-series feed to every Cognitive Services account you create. Each time a request hits your Grok-3 endpoint the service pushes a metric sample into the Microsoft.CognitiveServices/accounts namespace. This happens automatically, so you get usage data as soon as the deployment is live.

The feed includes both operational and consumption numbers. Operational series show whether the endpoint is healthy and fast, while consumption series track how many tokens you burn.

• Calls and SuccessfulCalls count total requests and those that finished without error.

• Latency captures end-to-end time in milliseconds for each request window .

• PromptTokens, CompletionTokens, and TotalTokens record the tokens the model read, wrote, and the sum of both, so you can tie spend directly to traffic.

• ThrottledCalls and ServerErrors flag rate-limit hits or service faults, which helps you spot scaling gaps before users complain.

In the Azure portal open your cognitive-services resource, choose Monitoring then Metrics, pick the Microsoft.CognitiveServices/accounts namespace, and select a metric like TotalTokens. The chart updates in real time and you can split the curve by deployment name to see which model is busiest.

Diagnostic settings let you stream every metric and the richer request logs into a Log Analytics workspace or external tools like Datadog or New Relic. That makes it easy to join token spend with app traces and set custom queries over weeks or months.

Alerts ride on top of the same data. For example, set a rule that fires if TotalTokens jumps above 1,000,000 in 1h or if Latency exceeds 2,000 ms for 5 minutes. Azure Monitor then sends email, posts to Teams, or calls a webhook, so you can scale up capacity or investigate long-running prompts before users see issues.

In short, the metrics stream is your real-time dashboard and alarm system. It tells you how busy Grok-3 is, how much it costs, and whether the endpoint stays within the response time you promise to your users.

🧩 10. Scale when traffic grows

az cognitiveservices account deployment update \\

\--name "${AZURE_AI_NAME}" \\

\--resource-group "${AZURE_RESOURCE_GROUP}" \\

\--deployment-name "${AZURE_AI_NAME}-${MODEL}" \\

\--sku-capacity 3

The last flag, --sku-capacity 3, tells Azure to keep 3 capacity units online for your Grok-3 deployment. A capacity unit is a reserved slice of the shared GPU pool that hosts the model. When you first deployed Grok-3 you used 1 unit, which is fine for low traffic while you test prompts. If more users arrive, that single unit can become a bottleneck, so some requests would have to wait and the chat feels slow. Raising the number asks Azure to spin up extra replicas of the model on more GPUs.

🛠️ Why you need to scale

1 capacity unit can handle only a small number of concurrent requests before queueing starts. Adding units lets the service process more calls in parallel, which keeps latency low and prevents time-outs when many users hit the endpoint at once. Because the command is an update on the existing deployment, Azure brings the extra replicas online first, shifts traffic to them, and only then releases any surplus hardware. The endpoint address and your API key do not change, so clients keep sending requests without noticing the transition. Scaling is therefore a live, zero-downtime operation.

💰 How this affects cost

Azure still bills mainly by the tokens you generate, but each extra capacity unit holds hardware reserved for you. While traffic is high the larger pool keeps response times stable. When load drops, Azure can shrink back automatically, or you can set the capacity value lower with the same command. This on-demand sizing lets you balance performance and cost instead of over-provisioning from day one.

That’s a wrap for today, see you all tomorrow.