Distance between Relevant Information Pieces Causes Bias in Long-Context LLMs

LLMs excel at dense information but stumble when key details are spread apart.

LLMs excel at dense information but stumble when key details are spread apart.

Distance between key information pieces degrades LLM performance more than their absolute positions

Original Problem 🔍:

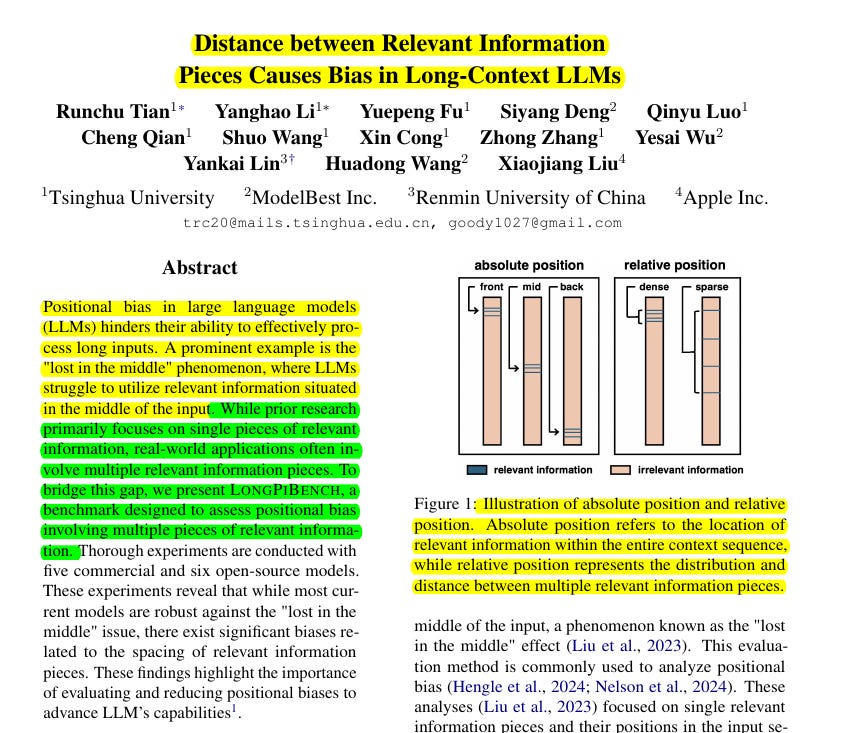

Current research on positional bias in LLMs mainly focuses on single-piece information effects like "lost in the middle". However, real applications often require processing multiple relevant pieces across long contexts.

Solution in this Paper 🛠️:

• Introduced LONGPIBENCH - a benchmark evaluating two types of positional biases:

Absolute positions (location within entire context)

Relative positions (spacing between multiple relevant pieces)

• Spans input lengths from 32K to 256K tokens

• Tests 3 tasks: Table SQL, Timeline Reordering, Equation Solving

• Evaluates 11 models (5 commercial, 6 open-source)

Key Insights 💡:

• Modern LLMs show improved robustness against "lost in the middle" phenomenon

• Performance declines sharply as distance between relevant pieces increases

• Increasing model parameters helps with absolute position bias but not relative position bias

• Query placement significantly impacts decoder-only models' performance

• Timeline Reordering and Equation Solving tasks proved too challenging for current models

Results 📊:

• Commercial models show 20-30% reduction in recall rates due to relative position bias

• Qwen 2.5 (7B) drops from 85.5% to 45% accuracy across absolute positions

• Query placement at beginning vs end shows up to 40% performance difference

• Models maintain ~95% accuracy for dense information, dropping to ~65% for sparse

→ Significant biases exist related to spacing between relevant information pieces - performance declines sharply as distance increases before stabilizing

→ Increasing model parameters helps with absolute position bias but not relative position bias

→ Query placement (beginning vs end) significantly impacts performance for decoder-only models.