Dualformer: Controllable Fast and Slow Thinking by Learning with Randomized Reasoning Traces

One brain, two speeds: AI that knows when to sprint and when to walk

One brain, two speeds: AI that knows when to sprint and when to walk

Great Paper from @Meta

Dualformer trains transformers to think both fast and slow, just like humans do.

Original Problem 🎯:

Current Transformer models either operate in slow mode with detailed reasoning or fast mode with direct answers, but not both. This limits their flexibility and efficiency in solving complex reasoning tasks.

Solution in this Paper 🔧:

• Introduces Dualformer - a single transformer model integrating both fast and slow reasoning modes

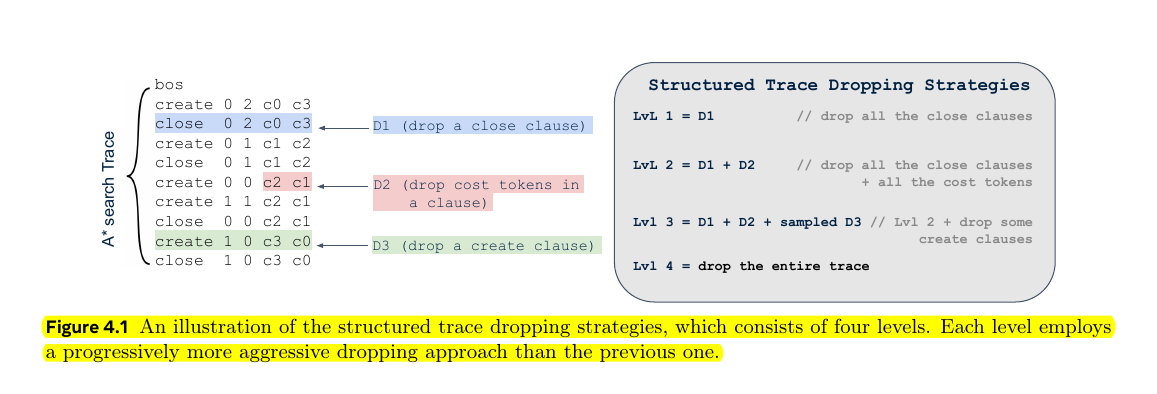

• Uses structured trace dropping strategies during training:

Level 1: Drops close clauses

Level 2: Additionally drops cost tokens

Level 3: Randomly drops 30% create clauses

Level 4: Drops entire trace

• Employs randomized training with different dropping probabilities

• Can operate in fast, slow, or auto mode during inference

Key Insights 💡:

• Simple data recipe suffices to achieve dual-mode reasoning

• Structured trace dropping mimics human cognitive shortcuts

• No need for separate models or explicit controllers

• Generalizes beyond specific tasks to LLM fine-tuning

Results 📊:

• Maze Navigation (30x30):

Slow mode: 97.6% optimal rate vs 93.3% baseline

Fast mode: 80% optimal rate vs 30% baseline

Uses 45.5% fewer reasoning steps

• Math Problems:

Improved performance in LLM fine-tuning

Generates 61.9% correct answers vs 59.6% baseline

Reduces trace length by 17.6%

🔍 The central theme about Dualformer:

Dualformer integrate both fast and slow reasoning modes into a single transformer model.

Dualformer achieves this through a simple data recipe using randomized reasoning traces during training

It can operate in either fast mode (direct solution) or slow mode (with reasoning steps) during inference

The model automatically decides which mode to use if not specified

🤖 The key innovations in Dualformer's training approach

Uses structured trace dropping strategies that exploit the A* search trace structure

Randomly applies different levels of trace dropping during training

Dropping strategies range from removing close clauses to dropping entire traces