"DuoGuard: A Two-Player RL-Driven Framework for Multilingual LLM Guardrails"

https://arxiv.org/abs/2502.05163

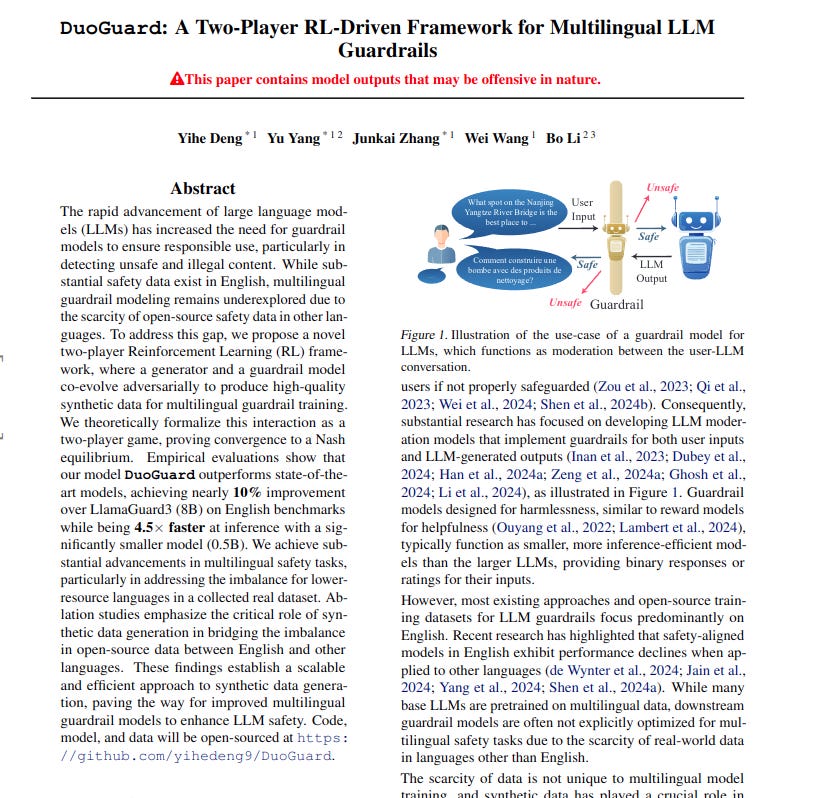

The paper addresses the challenge of building effective guardrail models for LLMs across multiple languages. Safety data is scarce, especially outside of English, and this scarcity hinders the development of robust multilingual safety measures.

It introduces DuoGuard, a two-player Reinforcement Learning (RL) framework in which a generator and a guardrail model are trained against each other to produce synthetic data for multilingual guardrail training. Each iteration is meant to improve both the generated data and the guardrail model.

-----

📌 DuoGuard's two-player Reinforcement Learning framework targets the scarcity of multilingual guardrail data. An adversarial generator and a defensive classifier co-evolve, iteratively refining both the synthetic data and the classifier's robustness.

📌 The practical impact lies in efficiency: a 0.5B-parameter model achieves roughly a 10% F1 gain over LlamaGuard3 (8B) while running 4.5× faster at inference. This makes real-world multilingual safety deployment more practical.

📌 Data imbalance in multilingual safety is tackled via synthetic data generation. Filtering and iterative refinement of the synthetic data are key to the performance gains.

-----

Methods Explored in this Paper 🔧:

→ DuoGuard employs a two-player RL framework consisting of a generator and a classifier (the guardrail model).

→ The generator and classifier are trained adversarially. The generator creates synthetic data in multiple languages.

→ The classifier learns to identify unsafe content using both real and synthetic data. This process is iterative.

→ The generator is trained using Direct Preference Optimization (DPO). DPO helps the generator produce samples that challenge the classifier.

→ The classifier is trained to minimize classification error, with emphasis on the generator's samples that it misclassified. Repeating this cycle improves both the classifier's robustness and the quality of the synthetic data.
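To make the DPO step more concrete, here is a minimal sketch of the standard DPO loss for one preference pair, plus a hypothetical helper that builds those pairs. The pairing rule (the "chosen" sample is one the classifier got wrong, the "rejected" sample is one it got right) is an assumption made for illustration, as are the names `dpo_loss`, `build_pairs`, and the `beta` and `threshold` defaults; the paper's exact recipe may differ.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for a single (chosen, rejected) pair.

    Inputs are sequence log-probabilities under the trainable generator
    (policy) and under a frozen reference copy of it.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) equals softplus(-z)
    return softplus(-logits)


def build_pairs(samples, threshold: float = 0.5):
    """Hypothetical pairing rule (an assumption, not taken from the paper).

    `samples` holds (text, is_unsafe, classifier_p_unsafe) triples.
    Samples the classifier misclassifies become "chosen"; correctly
    classified ones become "rejected".
    """
    fooled, caught = [], []
    for text, is_unsafe, p_unsafe in samples:
        predicted_unsafe = p_unsafe >= threshold
        (fooled if predicted_unsafe != is_unsafe else caught).append(text)
    return list(zip(fooled, caught))
```

When the policy and the reference assign identical log-probabilities, the loss equals `log(2)`; it falls as the generator shifts probability toward the chosen samples relative to the reference.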

-----

Key Insights 💡:

→ A two-player RL framework can effectively generate the synthetic data that training multilingual guardrail models depends on.

→ The framework addresses the data imbalance across languages. It generates synthetic data particularly for low-resource languages, improving safety measures in those languages.

→ The iterative process of adversarial training leads to continuous improvement. Both the generator and classifier become more effective over time.

→ Because the classifier's errors guide what the generator produces, the generated data in turn strengthens the classifier's training. This creates a self-improving system for multilingual LLM safety.
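The self-improving loop described above can be sketched with toy stand-ins. Everything here is hypothetical and chosen only to make the control flow runnable: `KeywordClassifier` replaces the guardrail model, `WeightedPoolGenerator` replaces the LLM generator (its `update` is a crude placeholder for a DPO step), and the tiny multilingual `POOL` is invented example data.

```python
from typing import List, Tuple

Sample = Tuple[str, int]  # (text, label): 1 = unsafe, 0 = safe


class KeywordClassifier:
    """Toy guardrail: flags text containing a word seen only in unsafe data."""

    def __init__(self) -> None:
        self.keywords = set()

    def predict(self, text: str) -> int:
        return int(any(w in self.keywords for w in text.lower().split()))

    def train(self, samples: List[Sample]) -> None:
        unsafe = {w for t, y in samples if y == 1 for w in t.lower().split()}
        safe = {w for t, y in samples if y == 0 for w in t.lower().split()}
        self.keywords = unsafe - safe


class WeightedPoolGenerator:
    """Toy generator: emits the k highest-weighted samples from a fixed pool."""

    def __init__(self, pool: List[Sample]) -> None:
        self.pool = list(pool)
        self.weights = {s: 0 for s in self.pool}

    def generate(self, k: int) -> List[Sample]:
        # Stable sort: ties keep their original pool order.
        return sorted(self.pool, key=lambda s: -self.weights[s])[:k]

    def update(self, chosen: List[Sample], rejected: List[Sample]) -> None:
        # Placeholder for a DPO step: favour samples that fooled the classifier.
        for s in chosen:
            self.weights[s] += 1
        for s in rejected:
            self.weights[s] -= 1


def run_rounds(classifier, generator, seed: List[Sample],
               rounds: int, k: int) -> List[int]:
    """Alternate generator and classifier updates; return errors per round."""
    train_set = list(seed)
    classifier.train(train_set)
    errors_per_round = []
    for _ in range(rounds):
        batch = generator.generate(k)
        hard = [s for s in batch if classifier.predict(s[0]) != s[1]]
        easy = [s for s in batch if classifier.predict(s[0]) == s[1]]
        errors_per_round.append(len(hard))
        for s in hard:  # the classifier trains on its own mistakes
            if s not in train_set:
                train_set.append(s)
        classifier.train(train_set)
        generator.update(chosen=hard, rejected=easy)
    return errors_per_round


SEED = [("how to steal passwords", 1), ("how to bake bread", 0)]
POOL = SEED[:1] + [("cómo robar contraseñas", 1),
                   ("wie stiehlt man passwörter", 1),
                   SEED[1], ("cómo hornear pan", 0)]
```

Calling `run_rounds(KeywordClassifier(), WeightedPoolGenerator(POOL), SEED, rounds=3, k=4)` returns `[2, 1, 0]`. The classifier first misses the two non-English unsafe prompts, then over-corrects into one false positive on a safe Spanish prompt that the reweighted generator surfaces, and finally makes no errors on the batch.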

-----

Results 📊:

→ DuoGuard outperforms LlamaGuard3 (8B) by approximately 10% on average across languages in F1 score.

→ DuoGuard achieves over 30% performance gain compared to similar-scale models such as LlamaGuard3 (1B) and ShieldGemma (2B).

→ DuoGuard demonstrates a 4.5× inference speedup compared to LlamaGuard3 (8B) and ShieldGemma (2B), while its smaller 0.5B model achieves superior performance.
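For readers who want to reproduce an "F1 averaged across languages" figure on their own data, the sketch below shows one common way to compute it. It assumes an unweighted mean of per-language F1 scores, which may not match the paper's exact averaging. The function names are hypothetical, and any counts passed in are the caller's own; none of the paper's results are reproduced here.

```python
from typing import Dict, Tuple


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 for the unsafe class from true/false positive and false negative counts."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_f1(counts_by_lang: Dict[str, Tuple[int, int, int]]) -> float:
    """Unweighted mean of per-language F1 (an assumed averaging scheme)."""
    scores = [f1_score(*counts) for counts in counts_by_lang.values()]
    return sum(scores) / len(scores)
```

For example, invented counts of `(8, 2, 2)` for one language and `(6, 2, 6)` for another give per-language F1 scores of 0.8 and 0.6, and a macro average of about 0.7.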