"DynVFX: Augmenting Real Videos with Dynamic Content"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2502.03621

The challenge of adding dynamic visual effects to real videos is complexity. Existing methods often require manual effort and expert tools.

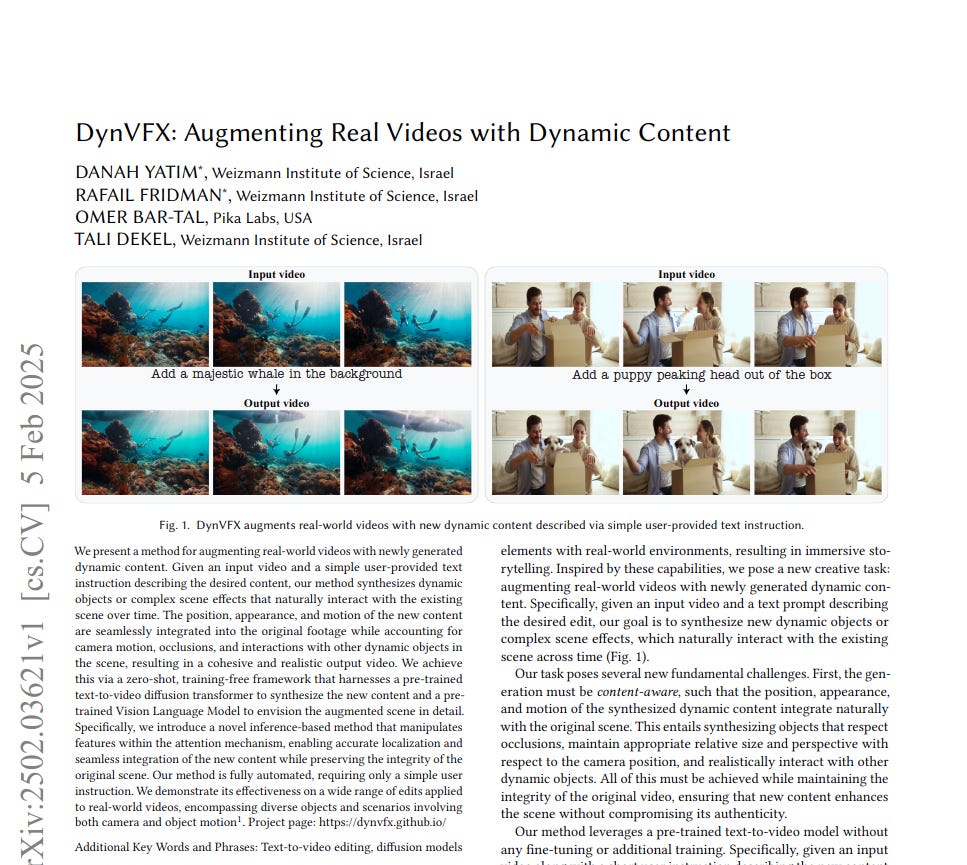

This paper proposes DynVFX. It is a zero-shot method to automatically augment videos with dynamic content using simple text prompts. DynVFX leverages pre-trained models and attention mechanisms for seamless integration.

-----

📌 DynVFX expertly uses attention manipulation within Diffusion Transformers. Anchor Extended Attention cleverly guides content generation by injecting original video's features. This method achieves zero-shot video editing without fine-tuning, demonstrating efficient knowledge transfer from pre-trained models.

📌 Iterative refinement in DynVFX is a key innovation for harmonization. By updating residual latents, the method progressively aligns generated content with the original video. This iterative approach effectively addresses the challenge of seamless visual integration in video editing.

📌 The Vision Language Model as a "VFX assistant" is a powerful concept. It bridges the gap between user intent and model execution. By generating detailed prompts, the VLM ensures context-aware video augmentation, enhancing the practicality and user-friendliness of DynVFX.

----------

Methods Explored in this Paper 🔧:

→ DynVFX utilizes a pre-trained text-to-video Diffusion Transformer model. This model is capable of generating video content from text descriptions.

→ A Vision Language Model (VLM) acts as a "VFX assistant". It interprets user instructions and scene context from input video keyframes. The VLM generates detailed prompts for the text-to-video model, guiding it to create contextually relevant dynamic effects.

→ Anchor Extended Attention is a novel technique for accurate content localization. It extracts key and value pairs from the original video's attention layers via DDIM inversion. These are incorporated as additional context during the diffusion process. This steers the generation to align with the original scene’s spatial and temporal cues, particularly focusing on foreground objects identified by the VLM and segmentation model.

→ Iterative refinement ensures seamless content harmonization. The method iteratively refines the generated content by repeating the sampling process. In each iteration, it updates a residual latent based on the difference between the generated and original video, focusing on regions where new content is added. This progressively blends the new dynamic elements with the existing video, improving visual consistency.

-----

Key Insights 💡:

→ DynVFX introduces a novel task: integrating dynamic content into real-world videos without complex user inputs like masks or 3D assets.

→ Anchor Extended Attention is crucial. It enables content-aware localization of edits by leveraging spatial and temporal information from the original video's attention features, ensuring new elements interact naturally with the scene.

→ Iterative refinement is essential for visual harmony. It addresses pixel-level alignment and blending challenges, leading to better integrated and more realistic dynamic effects.

→ Introduces a VLM-based automatic evaluation metric. This metric assesses edit quality considering original content preservation, new content harmonization, visual quality, and prompt alignment.

-----

Results 📊:

→ Achieves higher Directional CLIP Similarity and SSIM scores than baselines. This indicates superior edit fidelity and better preservation of the original video's structure.

→ User studies show DynVFX is preferred over baselines. Users rated DynVFX higher in preserving original footage (97.65 score) and realistically integrating new content (93.33 score).