EAGLE-2: Faster Inference of Language Models with Dynamic Draft Trees

LLM inference becomes up to ~4x faster by drafting future tokens in dynamic, confidence-guided trees

Dynamic draft trees take their shape from the draft model's context-specific confidence scores

Original Problem 🎯:

LLM inference is slow and expensive because tokens are generated one at a time. Existing speculative sampling methods such as EAGLE use static draft trees, which implicitly assume that a draft token's acceptance rate depends only on its position in the tree. This assumption limits how many drafted tokens end up being accepted.

Solution in this Paper ⚡:

• EAGLE-2 introduces dynamic draft trees that adapt based on context

• Uses draft model's confidence scores to approximate token acceptance rates

• Two-phase operation:

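Expansion Phase: At each step, expands only the top-k nodes of the newest layer, ranked by value (the product of confidence scores along the path from the root), which approximates each token's global acceptance probability

Reranking Phase: After expansion, reranks all draft tokens by value and sends only the top-m to the original LLM for verification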

• Requires no additional training and preserves the original LLM's output distribution exactly (lossless acceleration)
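The two phases can be sketched in Python as below. This is a simplified illustration under stated assumptions, not EAGLE-2's implementation: the draft model is a hypothetical function `draft(prefix)` returning `{token: confidence}`, each expanded node is assumed to propose its `top_k` most confident tokens, and `depth`, `top_k`, and `top_m` are illustrative names for the number of expansion steps, the nodes expanded per layer, and the tokens sent to verification. The toy draft model and its numbers are made up.

```python
import heapq
from typing import Callable, Dict, List, Tuple

# Hypothetical draft-model interface (an assumption, not EAGLE-2's API):
# given the tokens so far, return {next_token: confidence}.
DraftModel = Callable[[Tuple[str, ...]], Dict[str, float]]
Node = Tuple[Tuple[str, ...], float]  # (drafted path, value)


def build_draft_tree(prefix: Tuple[str, ...], draft: DraftModel,
                     depth: int, top_k: int, top_m: int) -> List[Node]:
    """Grow a dynamic draft tree, then keep the top_m nodes by value."""
    all_nodes: List[Node] = []
    frontier: List[Node] = [((), 1.0)]  # the root: empty path, value 1
    for _ in range(depth):
        children: List[Node] = []
        for path, value in frontier:
            dist = draft(prefix + path)
            # Assumption: each expanded node proposes its top_k tokens.
            best = heapq.nlargest(top_k, dist.items(), key=lambda kv: kv[1])
            for tok, conf in best:
                # Value = product of confidences along the path from the root.
                children.append((path + (tok,), value * conf))
        all_nodes.extend(children)
        # Expansion phase: only the top_k highest-value nodes of this
        # layer are expanded in the next step.
        frontier = heapq.nlargest(top_k, children, key=lambda n: n[1])
    # Reranking phase: rank every drafted node by value and keep top_m.
    # Ties go to shallower nodes; since confidences are <= 1, a parent
    # always ranks ahead of its children, so the kept set stays a tree.
    ranked = sorted(all_nodes, key=lambda n: (-n[1], len(n[0])))
    return ranked[:top_m]


def toy_draft(ctx: Tuple[str, ...]) -> Dict[str, float]:
    """Hypothetical confidences: sure after "=" and "1", unsure elsewhere."""
    last = ctx[-1] if ctx else ""
    if last == "=":
        return {"1": 0.95, "2": 0.03, "3": 0.02}
    if last == "1":
        return {"2": 0.90, "0": 0.10}
    return {".": 0.40, "!": 0.35, "?": 0.25}


tree = build_draft_tree(("10", "+", "2", "="), toy_draft,
                        depth=3, top_k=2, top_m=4)
for path, value in tree:
    print(path, round(value, 4))
```

With these toy numbers, the four kept nodes follow the confident "1" then "2" path and branch only at the uncertain third position. The verification step that makes the method lossless is not shown here.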

Key Insights from this Paper 💡:

• Token acceptance rates are context-dependent, not just position-dependent

• Draft model's confidence scores closely approximate actual acceptance rates

• Dynamic tree structure outperforms static approaches

• A token's value (the product of confidence scores along its path from the root) estimates its global acceptance rate and guides which tokens to expand and which to keep for verification
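In symbols, with notation chosen here for illustration: let c_j be the draft model's confidence in token t_j and p_j its acceptance rate given that its ancestors were accepted. A draft token can only be accepted if every token above it on its path is accepted, so the value of node t_i multiplies along that path. For example, confidences of 0.9 and 0.8 on a two-token path give a value of 0.72.

```latex
V_i \;=\; \prod_{t_j \in \mathrm{Path}(\mathrm{root},\, t_i)} c_j
\;\approx\; \prod_{t_j \in \mathrm{Path}(\mathrm{root},\, t_i)} p_j,
\qquad \text{e.g. } c = (0.9,\ 0.8) \;\Rightarrow\; V_i = 0.9 \times 0.8 = 0.72
```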

Results 📊:

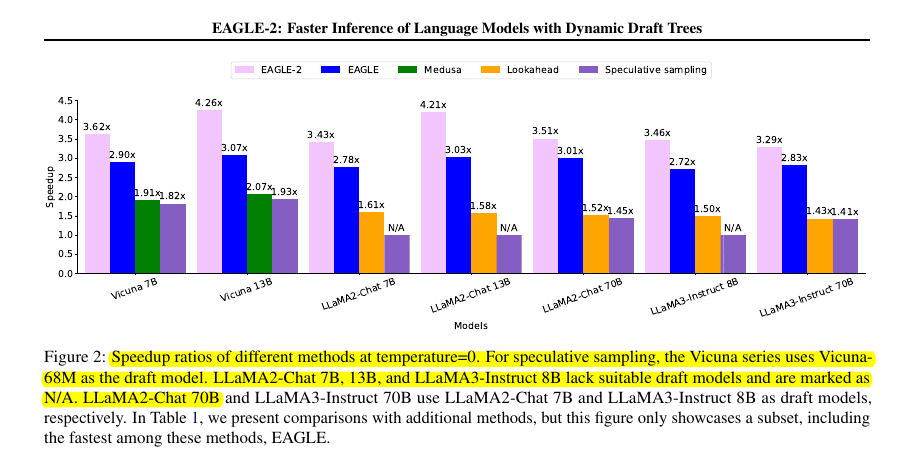

• Achieves speedup ratios of 3.05x-4.26x across different models and tasks

• 20%-40% faster than EAGLE-1

• ~2x faster than Medusa and ~2.3x faster than Lookahead on MT-bench

• Maintains consistent performance across conversations, coding, math reasoning, and summarization

• Works out-of-the-box with no extra model training required

🤔 The core concept behind EAGLE-2

EAGLE-2 replaces the static draft tree of EAGLE-1 with a dynamic draft tree that adapts to the context.

It uses the draft model's confidence scores to approximate token acceptance rates, so it can reshape the tree on the fly without any additional training. When the next token is easy to predict (like "1" after "10+2="), EAGLE-2 spends fewer candidates on that position; when the prediction is uncertain, it adds more alternatives.
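To see how a single value-based ranking produces different tree shapes, here is a small sketch with hand-picked, hypothetical confidences. The trees, tokens, and numbers are illustrative assumptions, not data from the paper. Each tree maps a drafted path to the confidence of its last token, and the hypothetical `rerank` helper keeps the top-m nodes by path value.

```python
from typing import Dict, List, Tuple

# A draft tree written as {path: confidence of the last token on that path}.
# All numbers are hypothetical, chosen only to contrast two contexts.
Tree = Dict[Tuple[str, ...], float]


def path_value(tree: Tree, path: Tuple[str, ...]) -> float:
    """Product of confidences from the root down to `path`."""
    value = 1.0
    for i in range(1, len(path) + 1):
        value *= tree[path[:i]]
    return value


def rerank(tree: Tree, top_m: int) -> List[Tuple[str, ...]]:
    """Keep the top_m nodes by value; shallower nodes win ties."""
    return sorted(tree, key=lambda p: (-path_value(tree, p), len(p)))[:top_m]


# Confident context (e.g. right after "10+2="): one branch dominates.
confident: Tree = {
    ("1",): 0.95, ("2",): 0.03,
    ("1", "2"): 0.90, ("1", "0"): 0.08,
    ("2", "4"): 0.50, ("2", "0"): 0.40,
}
# Uncertain context (e.g. the first word of an open-ended answer).
uncertain: Tree = {
    ("The",): 0.40, ("A",): 0.35, ("In",): 0.25,
    ("The", "best"): 0.30, ("A", "good"): 0.30, ("In", "short"): 0.40,
}

print(rerank(confident, 3))
print(rerank(uncertain, 3))
```

With a budget of three tokens, the confident context keeps "1", "1 2", and "1 0", all on one branch. The uncertain context keeps three alternative first tokens instead.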