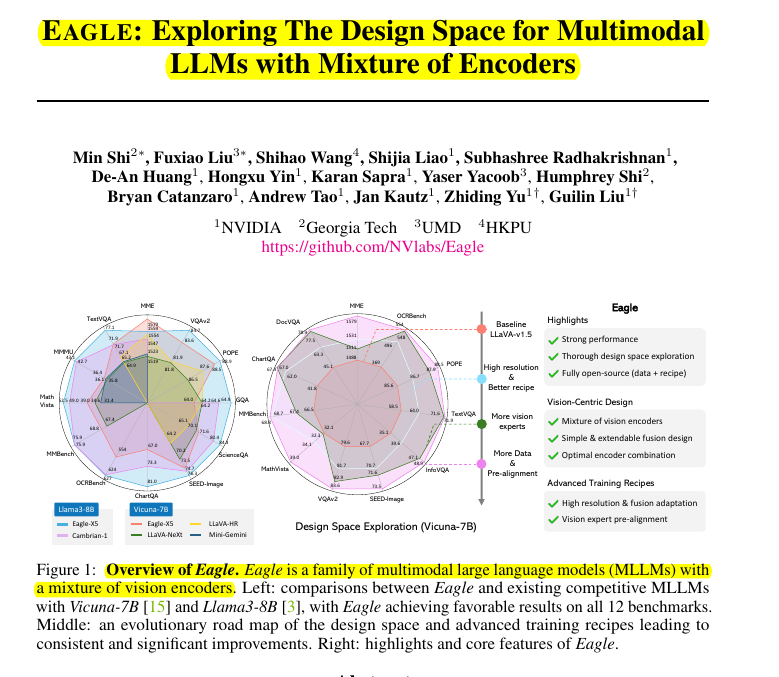

Eagle: Exploring The Design Space for Multimodal LLMs with Mixture of Encoders

Mixture of vision encoders enhances MLLM performance across diverse visual understanding tasks.

Mixture of vision encoders enhances MLLM performance across diverse visual understanding tasks.

Problem 🔍:

Multimodal large language models (MLLMs) struggle with accurately interpreting complex visual information, especially for resolution-sensitive tasks like OCR and document analysis. Existing approaches lack systematic comparisons and detailed studies on critical aspects like expert selection and integration of multiple vision encoders.

Key Insights from this Paper 💡:

• Unlocking vision encoders during MLLM training significantly improves performance

• Simple channel concatenation outperforms complex fusion strategies

• Adding vision experts leads to consistent gains, particularly with unlocked encoders

• Pre-alignment of non-text-aligned vision experts enhances overall performance

• High-resolution adaptation improves fine-grained visual understanding

Solution in this Paper 🛠️:

• Introduces Eagle: a family of MLLMs using mixture of vision encoders

• Systematically explores MLLM design space with multiple vision encoders:

Optimizes high-resolution adaptation for vision encoders

Compares various fusion strategies (e.g., channel concatenation, sequence append)

Employs progressive expert addition using a round-robin approach

• Proposes pre-alignment stage to enhance synergy between visual and linguistic capabilities

• Integrates multiple vision experts (CLIP, ConvNeXt, SAM, Pix2Struct, EVA-02)

• Uses channel concatenation for efficient and effective fusion of visual features

Results 📊:

• Eagle achieves state-of-the-art performance across various MLLM benchmarks

• Eagle-X5 (Vicuna-13B) results:

MME: 1604

MMBench: 70.5

OCRBench: 573

TextVQA: 73.9

GQA: 60.9

• Outperforms other leading open-source models, especially in OCR and document understanding tasks

• Demonstrates consistent improvements with additional vision encoders