EchoPrompt: Instructing the Model to Rephrase Queries for Improved In-context Learning

Just like humans, LLMs understand better when they restate problems in their own words

Simple query rephrasing in prompts boosts in-context learning across various reasoning tasks, in both zero-shot and few-shot settings.

Problem 🔍:

EchoPrompt targets in-context learning for large language models by adding query rephrasing as a subtask that the model completes before answering. The aim is to improve model performance across various reasoning tasks.

Solution in this Paper 🛠️:

• Introduces EchoPrompt, a technique that instructs models to rephrase queries before answering

• Implements EchoPrompt in zero-shot and few-shot settings with various rephrasing structures

• Adapts EchoPrompt for both standard and chain-of-thought prompting

• Evaluates performance across multiple reasoning tasks and model families
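As a rough illustration of the zero-shot setting, the sketch below appends a trigger phrase after the query so that the model's completion begins by restating the question, optionally followed by step-by-step reasoning. The trigger wordings, the `Q:`/`A:` layout, and the function name `build_zero_shot_prompt` are assumptions made for illustration; they are not necessarily the exact prompts used in the paper.

```python
# Assumed trigger phrases, keyed by (echo, cot). These are illustrative and
# may not match the exact wording used in the paper.
TRIGGERS = {
    (False, False): "",  # standard zero-shot: answer directly
    (True, False): "Let's repeat the question.",
    (False, True): "Let's think step by step.",  # Zero-shot-CoT
    (True, True): "Let's repeat the question and also think step by step.",
}


def build_zero_shot_prompt(query: str, echo: bool, cot: bool) -> str:
    """Return a zero-shot prompt; with echo=True the trigger asks the model to restate the query first."""
    trigger = TRIGGERS[(echo, cot)]
    # The trigger follows "A:", so the model's completion begins with the
    # restated query (echo) and/or a reasoning chain (cot).
    # rstrip() drops the trailing space when there is no trigger.
    return f"Q: {query}\nA: {trigger}".rstrip()


if __name__ == "__main__":
    print(build_zero_shot_prompt(
        "A farmer has 12 eggs and sells 5. How many are left?", echo=True, cot=True))
```

Keeping everything except the trigger fixed makes it easy to compare plain Zero-shot-CoT against its EchoPrompt variant on the same queries.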

Key Insights from this Paper 💡:

• Both original and rephrased queries contribute to performance gains

• EchoPrompt is effective across different model sizes and prompting strategies

• The technique is robust to irrelevant information in queries

Results 📊:

• Improves the Zero-shot-CoT performance of code-davinci-002 by an average of 5% on numerical tasks and 13% on reading comprehension tasks

• Enhances few-shot-CoT accuracy on GSM8K from 75.1% to 82.6% with GPT-3.5-turbo

• Outperforms least-to-most prompting on most benchmarks

• Maintains improvements even with irrelevant text in queries

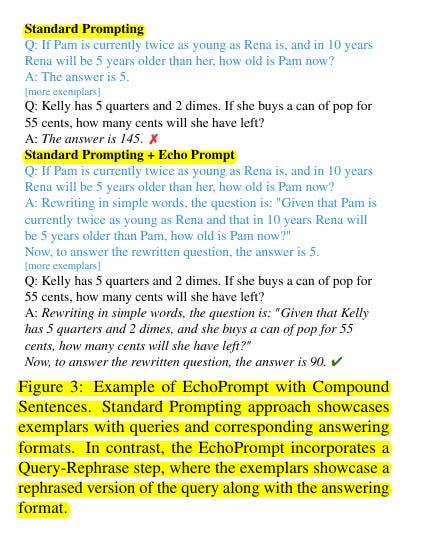

Example of EchoPrompt with Compound Sentences.

The standard prompting approach shows exemplars consisting of a query and its corresponding answer format. In contrast, EchoPrompt adds a query-rephrase step: each exemplar shows a rephrased version of the query, followed by the answer format.
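The contrast between the two exemplar formats can be sketched as a small prompt builder. This is a minimal illustration, assuming a `Q:`/`A:` layout and a "Let's repeat the question." lead-in before the rephrase; `Exemplar`, `format_exemplar`, `build_few_shot_prompt`, and `ECHO_TRIGGER` are hypothetical names, and the rephrasings (for example, in compound sentences) must be supplied by the caller rather than generated by this code.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    query: str      # original question shown in the exemplar
    rephrase: str   # caller-supplied rephrasing (e.g. in compound sentences)
    answer: str     # reasoning chain for CoT, or a bare answer for standard prompting


ECHO_TRIGGER = "Let's repeat the question."  # assumed wording


def format_exemplar(ex: Exemplar, echo: bool) -> str:
    if echo:
        # EchoPrompt: the answer section restates the query before answering it.
        return f'Q: {ex.query}\nA: {ECHO_TRIGGER} "{ex.rephrase}" {ex.answer}'
    # Standard prompting: the query is followed directly by the answer.
    return f"Q: {ex.query}\nA: {ex.answer}"


def build_few_shot_prompt(exemplars: list[Exemplar], query: str, echo: bool) -> str:
    blocks = [format_exemplar(ex, echo) for ex in exemplars]
    # End with an open answer slot in the same format, so the model's
    # completion mirrors the exemplars (rephrase first when echo=True).
    tail = f"A: {ECHO_TRIGGER}" if echo else "A:"
    blocks.append(f"Q: {query}\n{tail}")
    return "\n\n".join(blocks)
```

Because only `format_exemplar` changes between the two modes, the same exemplar set can serve both the standard and the EchoPrompt condition; for chain-of-thought prompting, `answer` would hold the reasoning chain.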